The plan cache holds a lot of secrets about what’s going on inside SQL Server. In the First Responder Kit we shared one of our plan cache scripts to find the top resource-consuming queries. That query works well, but over time we’ve added more functionality to it. I figured it was time to share the new query that we’re using to analyze SQL Server performance.

Our existing query looks at individual query stats, but it doesn’t take into account stored procedure or trigger execution stats. During our SQL Critical Care® checks, we’ve found it helpful to look at both procedures and triggers to figure out if they were causing problems for overall server health.

What Are We Looking At?

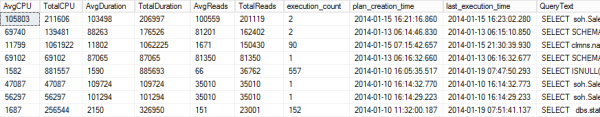

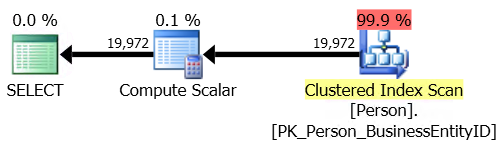

The original query looked only at sys.dm_exec_query_stats, reporting average and total metrics for CPU, duration, and logical reads.
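As a rough illustration of that statement-level approach (a minimal sketch, not the original script), a query along these lines pulls totals and averages from sys.dm_exec_query_stats. The column aliases and the TOP (20) cutoff are my own assumptions; the time columns in this DMV are reported in microseconds.

```sql
-- Sketch only: total and average CPU, duration, and logical reads
-- per cached statement. Aliases and TOP (20) are illustrative choices.
SELECT TOP (20)
    qs.execution_count,
    qs.total_worker_time                         AS total_cpu_us,
    qs.total_worker_time / qs.execution_count    AS avg_cpu_us,
    qs.total_elapsed_time                        AS total_duration_us,
    qs.total_elapsed_time / qs.execution_count   AS avg_duration_us,
    qs.total_logical_reads,
    qs.total_logical_reads / qs.execution_count  AS avg_logical_reads,
    -- Offsets are byte positions into the Unicode batch text, hence the / 2.
    SUBSTRING(st.text,
              (qs.statement_start_offset / 2) + 1,
              ((CASE qs.statement_end_offset
                    WHEN -1 THEN DATALENGTH(st.text)
                    ELSE qs.statement_end_offset
                END - qs.statement_start_offset) / 2) + 1) AS statement_text
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
ORDER BY qs.total_worker_time DESC;
```

Swap the ORDER BY column to sort by duration or reads instead of CPU.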

This was a helpful approach, but over time it became apparent that we needed to look at more than just individual statements – what if a single procedure was causing problems that only show up in aggregate?

The Bigger Picture

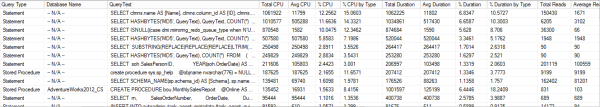

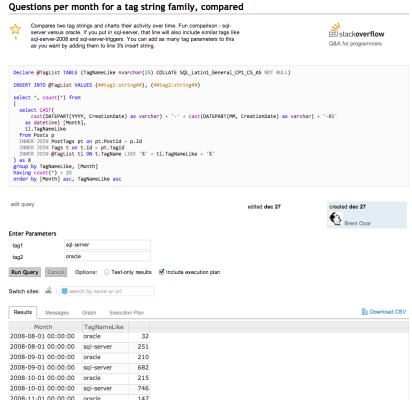

To get a view of the bigger picture, we added two more DMVs – sys.dm_exec_trigger_stats and sys.dm_exec_procedure_stats. Alongside the statement-level stats, these show what whole procedures and triggers are costing SQL Server – the only thing that would make this better would be a DMV for function execution stats.
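To give a feel for what those two DMVs expose, here is a hedged sketch that stacks procedure and trigger stats into one result set. The query_type labels and the module_name alias are made up for this example, and the real script does considerably more.

```sql
-- Sketch only: procedure and trigger execution stats side by side.
-- 'Procedure' / 'Trigger' labels and module_name are illustrative names.
SELECT 'Procedure' AS query_type,
       OBJECT_NAME(ps.object_id, ps.database_id) AS module_name,
       ps.execution_count,
       ps.total_worker_time,
       ps.total_elapsed_time,
       ps.total_logical_reads
FROM sys.dm_exec_procedure_stats AS ps
UNION ALL
SELECT 'Trigger',
       OBJECT_NAME(ts.object_id, ts.database_id),
       ts.execution_count,
       ts.total_worker_time,
       ts.total_elapsed_time,
       ts.total_logical_reads
FROM sys.dm_exec_trigger_stats AS ts
ORDER BY total_worker_time DESC;
```

Because both DMVs report on the whole module, a procedure that runs many cheap statements can still float to the top here even when none of its statements stand out individually.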

To avoid skewing results, data is aggregated by the query_hash – unfortunately, query_hash only arrived in SQL Server 2008, so the queries won’t work against SQL Server 2005. Not only do we rank queries by CPU, IO, duration, and execution count, but a second level of ranking is provided that ranks queries within their logical grouping – by statement, procedure, and trigger. If you want to see what your most expensive trigger is doing, it’s easy enough to make a quick change to the query.
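The two levels of ranking can be sketched roughly like this. It is a simplified illustration under my own assumptions, not the code inside the script: the CTE name, the identifier column, and the rank column names are all hypothetical, and only CPU is ranked to keep it short.

```sql
-- Sketch only: aggregate statements by query_hash, combine with procedure
-- and trigger stats, then rank overall and within each grouping.
WITH combined AS (
    SELECT 'Statement' AS query_type,
           CONVERT(varchar(200), qs.query_hash, 1) AS identifier,
           SUM(qs.execution_count)   AS execution_count,
           SUM(qs.total_worker_time) AS total_cpu
    FROM sys.dm_exec_query_stats AS qs
    GROUP BY qs.query_hash
    UNION ALL
    SELECT 'Procedure',
           OBJECT_NAME(ps.object_id, ps.database_id),
           ps.execution_count,
           ps.total_worker_time
    FROM sys.dm_exec_procedure_stats AS ps
    UNION ALL
    SELECT 'Trigger',
           OBJECT_NAME(ts.object_id, ts.database_id),
           ts.execution_count,
           ts.total_worker_time
    FROM sys.dm_exec_trigger_stats AS ts
)
SELECT query_type,
       identifier,
       execution_count,
       total_cpu,
       -- First level: rank across everything in the result set.
       RANK() OVER (ORDER BY total_cpu DESC) AS overall_cpu_rank,
       -- Second level: rank within statement / procedure / trigger.
       RANK() OVER (PARTITION BY query_type
                    ORDER BY total_cpu DESC) AS cpu_rank_within_type
FROM combined
ORDER BY overall_cpu_rank;
```

Filtering on query_type = 'Trigger' and cpu_rank_within_type = 1 would surface the most expensive trigger. Keep in mind that statements running inside a procedure are counted both on their own and in the procedure's totals, so the overall rank is a guide rather than an exact accounting.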

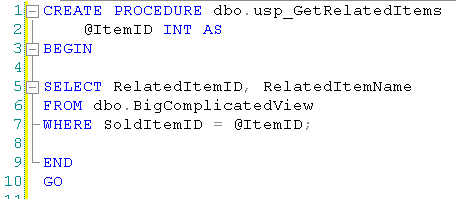

Check it out:

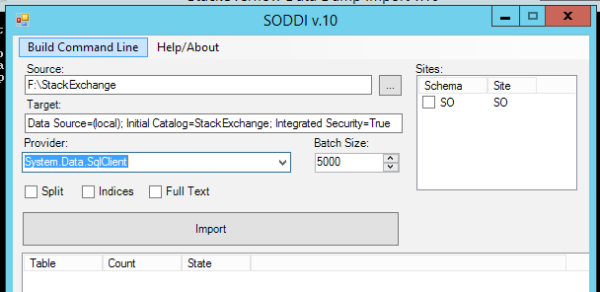

Try it out – get sp_BlitzCache® in the usual way – by agreeing to our crazy terms of service, selling your email address to a foreign government, and clicking “download”.

Using the Query

This query is easy enough to start using right away – just download the file and run it. Once you’re familiar with it, scroll to the bottom and you’ll see two separate queries you can run. The first is suitable for pasting into Excel – it has no query plan and the SQL text is shortened to easily paste into a single cell. The second query has everything that you want.

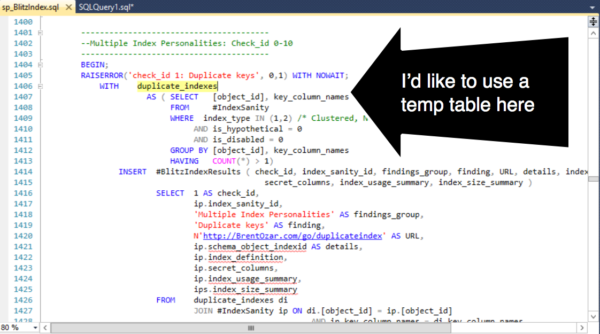

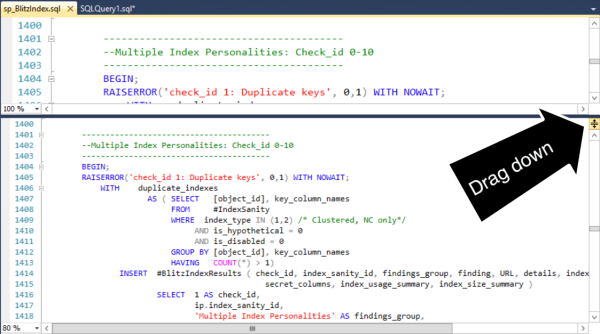

Since data is dumped into a temporary table during analysis, it’s easy to keep re-querying the temporary table as you re-sort data or refine what you’re looking for.
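The pattern looks something like the sketch below. The temporary table name and its columns are hypothetical, chosen for this example; the table the script actually creates has its own name and a richer set of columns.

```sql
-- Sketch only: snapshot the stats once, then re-query the snapshot.
-- #plan_cache_sketch is a made-up name, not the script's own temp table.
IF OBJECT_ID('tempdb..#plan_cache_sketch') IS NOT NULL
    DROP TABLE #plan_cache_sketch;

SELECT qs.query_hash,
       SUM(qs.execution_count)     AS execution_count,
       SUM(qs.total_worker_time)   AS total_cpu,
       SUM(qs.total_logical_reads) AS total_reads,
       SUM(qs.total_elapsed_time)  AS total_duration
INTO #plan_cache_sketch
FROM sys.dm_exec_query_stats AS qs
GROUP BY qs.query_hash;

-- Re-sort as often as you like without touching the DMVs again.
SELECT TOP (10) * FROM #plan_cache_sketch ORDER BY total_cpu DESC;
SELECT TOP (10) * FROM #plan_cache_sketch ORDER BY total_reads DESC;
```

Since the snapshot is taken once, every re-sort works from the same numbers, which makes it easier to compare the CPU view against the reads view.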

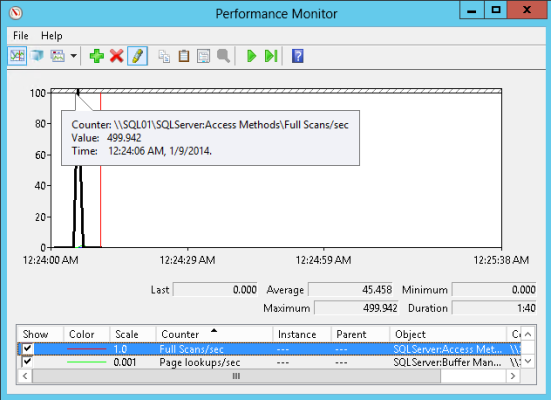

SQL Server has amazing instrumentation. Dynamic management views and functions give you great insight into what’s happening in your SQL Server and what’s slowing it down. Interpreting these views and functions takes a lot of time, but with practice and skill you can use them to become great at performance tuning.
