Most of us get caught up in fragmentation, finding the slowest queries, and looking at new features. We forget the little things that make managing a SQL Server easier – like cycling the SQL Server error logs.

What’s the Error Log?

The SQL Server error log is a file that is full of messages generated by SQL Server. By default, it tells you when log backups occurred, records other informational events, and even contains pieces and parts of stack dumps. In short, it’s a treasure trove of information. When SQL Server is in trouble, it’s nice to have this available as a source of information during troubleshooting.

Unfortunately, if the SQL Server error log gets huge, it can take a long time to read the error log – it’s just a file, after all, and the GUI has to read that file into memory.
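One workaround when the file is already big is to skip the viewer and read a filtered slice of the log from a query window. This is a sketch that relies on the undocumented xp_readerrorlog and the parameter order commonly used with it (log number, log type, two search strings, start time, end time, sort order), so verify it on your version before you depend on it.

```sql
-- Sketch: read only the last day's backup messages from the current error log
-- instead of loading the whole file into the SSMS log viewer.
-- xp_readerrorlog is undocumented; the parameter order is an assumption based on common usage.
DECLARE @since datetime = DATEADD(DAY, -1, GETDATE());

EXEC master.dbo.xp_readerrorlog
    0,            -- log number: 0 = current ERRORLOG, 1 = most recent archive, ...
    1,            -- log type: 1 = SQL Server error log, 2 = SQL Agent log
    N'backed up', -- first search string (matches "Database backed up" and "Log was backed up")
    NULL,         -- second search string (none)
    @since,       -- only entries after this time
    NULL,         -- no end time
    N'desc';      -- newest entries first
```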

Keep the SQL Server Error Log Under Control

It’s possible to cycle the SQL Server error log. Cycling the error log starts a new file, and there are only two times when this happens.

- When SQL Server is restarted.

- When you execute sp_cycle_errorlog.

When SQL Server cycles the error log, the current log file is closed and a new one is opened. By default, these files live in the MSSQL\Log folder under the instance’s installation directory. Admittedly, you don’t really need to know where these are unless you want to see how much room they take up.
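If you do want to see where the files are and how big they’ve grown, here’s one way to check from T-SQL. It assumes SQL Server 2012 or later for SERVERPROPERTY('ErrorLogFileName'), and it uses the undocumented xp_enumerrorlogs, which returns one row per log file (archive number, date, and size in bytes).

```sql
-- Full path of the current ERRORLOG file (SQL Server 2012 and later, as far as we know).
SELECT SERVERPROPERTY('ErrorLogFileName') AS CurrentErrorLog;

-- Undocumented: one row per error log file. Archive # 0 is the current log;
-- the others are the archived ERRORLOG.1, ERRORLOG.2, ... files.
EXEC master.dbo.xp_enumerrorlogs;
```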

By default, SQL Server keeps six archived error log files (ERRORLOG.1 through ERRORLOG.6) in addition to the current ERRORLOG. You can easily change this. Open up your copy of SSMS and:

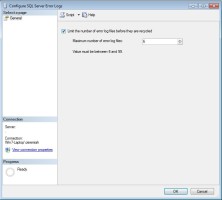

- Expand the “Management” folder.

- Right-click “SQL Server Logs”.

- Select “Configure”.

- Check the box “Limit the number of error log files before they are recycled”.

- Enter a value in the “Maximum number of error log files” box.

- Click “OK”.

It’s just that easy! Admittedly, you have to do this on every SQL Server that you have, so you might just want to click the “Script” button so you can push the script to multiple SQL Servers.
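For reference, the script SSMS generates for this setting writes the NumErrorLogs registry value through xp_instance_regwrite. Here’s a minimal version you could push to several servers; 30 is only an example value (the dialog accepts 6 to 99).

```sql
USE [master];
GO
-- Keep 30 archived error logs (example value). xp_instance_regwrite maps this
-- generic key onto the current instance's registry hive, so the same script
-- works for default and named instances.
EXEC xp_instance_regwrite
    N'HKEY_LOCAL_MACHINE',
    N'Software\Microsoft\MSSQLServer\MSSQLServer',
    N'NumErrorLogs',
    REG_DWORD,
    30;
GO
-- Read the value back to confirm it took effect.
DECLARE @NumErrorLogs int;
EXEC xp_instance_regread
    N'HKEY_LOCAL_MACHINE',
    N'Software\Microsoft\MSSQLServer\MSSQLServer',
    N'NumErrorLogs',
    @NumErrorLogs OUTPUT;
SELECT @NumErrorLogs AS NumErrorLogs;
```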

Automatically Rotating the SQL Server Error Log

You can set up SQL Server to automatically rotate your error logs. This is the easiest part of this blog post, apart from closing the window.

To cycle error logs on a regular basis, restart your SQL Server nightly.

Only joking.

You can set up a SQL Agent job with a T-SQL step. All it has to do is EXEC sp_cycle_errorlog. Schedule the SQL Agent job to run as frequently as you’d like and you’re good to go. The upside of this approach is that it’s automatic and the SQL Server error logs will be more granular, making it easier to find the error messages you’re looking for.
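If you’d rather script the job than click through the Agent GUI, something like this should do it with the standard msdb job procedures. The job name, schedule name, and the 23:59:30 start time are only examples; pick whatever suits you.

```sql
USE msdb;
GO
-- Example names; change them to match your conventions.
EXEC dbo.sp_add_job
    @job_name = N'DBA - Cycle error log';

-- Optionally add EXEC msdb.dbo.sp_cycle_agent_errorlog; to the command to cycle the Agent log too.
EXEC dbo.sp_add_jobstep
    @job_name      = N'DBA - Cycle error log',
    @step_name     = N'Cycle SQL Server error log',
    @subsystem     = N'TSQL',
    @database_name = N'master',
    @command       = N'EXEC sp_cycle_errorlog;';

EXEC dbo.sp_add_schedule
    @schedule_name     = N'Nightly at 23:59:30',
    @freq_type         = 4,       -- daily
    @freq_interval     = 1,       -- every 1 day
    @freq_subday_type  = 1,       -- once, at the start time below
    @active_start_time = 235930;  -- HHMMSS

EXEC dbo.sp_attach_schedule
    @job_name      = N'DBA - Cycle error log',
    @schedule_name = N'Nightly at 23:59:30';

-- Target the local server so SQL Agent actually runs the job.
EXEC dbo.sp_add_jobserver
    @job_name = N'DBA - Cycle error log';
GO
```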

It’s Just That Easy!

Cycling the SQL Server error log is easy – you just need a regularly scheduled agent job. Rotating the logs makes it easier to find error messages. Let’s face it – you’re only looking for error messages when there’s a problem. That’s all there is to rotating the error logs.

53 Comments

Haha, you got me with:

To cycle error logs on a regular basis, restart your SQL Server nightly.

I was like…WHAT??!!??

😀

Glad I could help you wake up this morning.

That one got me too. I was like “WHA . . . ” oh he’s kidding.

So, to be clear, each time I cycle the logs with that sproc, it starts a new log, cycles the existing ones, and deletes the tail. In other words, if I have the SQL Server default of 6 logs and I “EXEC sp_cycle_errorlog” on a daily basis, I will have a max of 6 days’ worth of logs. Correct?

I’m going to have to determine how this works in connection with my use of “sysmail_delete_log_sp”, “sp_purge_jobhistory”, and “sp_delete_backuphistory”. Namely, if everything ultimately ends up in the same log, this is going to mess me up. I keep 365 days of backup history, but only 60 days of email-logging and 14 days of job history (lots of noise from successful tlog backups). If I start cycling the logs on a daily basis, it seems I’d need to change my server limit to 365 logs at bare minimum. Am I understanding things correctly?

Backup history is kept in MSDB. Job history is also kept in MSDB. As is your email history. So… nope. You’re right to be concerned, but cycling the error log won’t ruin your history retention.

The error logs can contain some of the information you’re interested in but it’s stored as unstructured data in a text file on disk. Those files can grow quite large if you don’t maintain them.

On the bright side, there’s a wealth of information about system health in the SQL Server error log, and it’s helpful to have those files around to search through.
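If you want to see for yourself that those retention windows live in msdb and not in the error log, a quick query like this shows the oldest entry each history store still holds. The tables and view are the standard msdb ones; the query itself is just a sketch.

```sql
-- Oldest surviving entry in each msdb history store. These are trimmed by
-- sp_delete_backuphistory, sp_purge_jobhistory and sysmail_delete_log_sp,
-- not by sp_cycle_errorlog.
SELECT N'Backup history' AS History, MIN(backup_start_date) AS OldestEntry
FROM msdb.dbo.backupset
UNION ALL
-- run_date is an int in yyyymmdd form, so convert it through char(8).
SELECT N'Job history', CONVERT(datetime, CONVERT(char(8), MIN(run_date)))
FROM msdb.dbo.sysjobhistory
UNION ALL
SELECT N'Database Mail log', MIN(log_date)
FROM msdb.dbo.sysmail_event_log;
```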

BTW: Same goes for sp_cycle_agent_errorlog

Cheers,

Johan

sp_cycle_agent_errorlog – we tried that for a while, but there isn’t anywhere (that I can find) to set the number of files to keep (as you can with the SQL Server error log). It always kept the last 10 files. Is this still the case, or am I missing something?

That is correct, but nothing prevents you from copying the most recently archived SQL Agent error log file to a safe zone (and cleaning up after a desired number of files or amount of time).

    [string]$TargetSQLInstance = '.\SQL2014DE' # or $args[0]
    # Target instance
    if ( [string]::IsNullOrEmpty($TargetSQLInstance) ) {
        Write-Error "No SQLInstance given !!!"
        exit 1
    }
    if ( [string]::IsNullOrEmpty($TargetComputerName) -or $TargetComputerName -eq '.' ) {
        $TargetComputerName = $Env:COMPUTERNAME
    }
    Write-Verbose "--> $TargetComputerName \\ $Env:COMPUTERNAME <--"
    if ( $TargetComputerName -ne $Env:COMPUTERNAME ) {
        # Write-Host (not Write-Output) supports the color parameters
        Write-Host "Remoting is not supported yet !!!" -BackgroundColor Red -ForegroundColor Yellow
        exit 1
    }
    $TargetSQLAgentErrorlogs = "$env:TEMP\SQLAgentErrorlogs"
    # Load the SMO assembly; the server object is created and connected below.
    [System.Reflection.Assembly]::LoadWithPartialName('Microsoft.SqlServer.SMO') | Out-Null
    # You cannot set the number of SQL Agent error log files to keep before rollover, so copy them to a safe zone.
    # Keep in mind these files may be big, so they can consume quite some disk space.
    # Create the target folder if it doesn't exist
    if ( !( Test-Path $TargetSQLAgentErrorlogs -PathType Container ) ) {
        New-Item -ItemType Directory -Path $TargetSQLAgentErrorlogs | Out-Null
    }
    $CycleTs = Get-Date -Format 'yyyyMMdd_HHmmss'
    $SQLInstance = $TargetSQLInstance
    try {
        $server = New-Object 'Microsoft.SqlServer.Management.SMO.Server' ($SQLInstance)
        # Take control over the actual connect
        $server.ConnectionContext.Connect()
        $JobServerErrorlogFile = $server.JobServer.ErrorLogFile
        # Cycle the SQL Agent error log: SQLAGENT.OUT becomes SQLAGENT.1
        $server.JobServer.CycleErrorLog()
        # Escape the dot and anchor at the end so only the '.out' extension is replaced
        $TargetFileName = Split-Path -Path ($JobServerErrorlogFile -replace '\.out$', ".$CycleTs") -Leaf
        $TargetPath = Join-Path -Path $TargetSQLAgentErrorlogs -ChildPath $TargetFileName
        # Now copy the latest SQL Agent error log history file
        Copy-Item -LiteralPath ($JobServerErrorlogFile -replace '\.out$', '.1') -Destination $TargetPath
        Write-Output ('[{0}] - SQL Agent error log file copied to [{1}].' -f $server.Name, $TargetPath)
        $server.ConnectionContext.Disconnect()
    }
    catch {
        Write-Output ('Exception encountered for [{0}] : {1}' -f $SQLInstance, $_.Exception.Message)
    }

We have a job exactly like Jeremiah describes, with one additional step. After we run sp_cycle_errorlog, we import the previous version into an archive table in our admin database. This facilitates historical searches, which can be especially helpful if any of your apps write customized status information to the error log via xp_logevent.

    -- Archive table
    CREATE TABLE [dbo].[ErrorLogArchive](
        [ErrorLogArchiveID] [int] IDENTITY(1,1) NOT NULL,
        [LogDate] [datetime] NULL,
        [ProcessInfo] [varchar](50) NULL,
        [Text] [varchar](max) NULL
    ) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]
    GO
    -- The procedure our SQL Agent job runs
    CREATE PROCEDURE ArchiveErrorLog
    AS
    EXECUTE sp_cycle_errorlog
    IF @@Error = 0
    BEGIN
        -- After the cycle, archive #1 is the log that was just closed
        INSERT ErrorLogArchive(
            [LogDate],
            [ProcessInfo],
            [Text])
        EXECUTE sp_readerrorlog 1
    END
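To show what that historical search might look like, here’s a sketch that writes a custom status message with xp_logevent and, once the log has been cycled and archived by the procedure above, finds it in ErrorLogArchive. The message number 60000 and the text are made-up examples; xp_logevent wants a user-defined number greater than 50,000.

```sql
-- Write a custom status message to the SQL Server error log (and the Windows event log).
-- 60000 and the message text are hypothetical examples.
EXEC master.dbo.xp_logevent 60000, 'Nightly ETL finished', informational;

-- Once ArchiveErrorLog has run, search the archive table instead of the raw files.
SELECT LogDate, ProcessInfo, [Text]
FROM dbo.ErrorLogArchive
WHERE [Text] LIKE '%Nightly ETL finished%'
ORDER BY LogDate DESC;
```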

I must be missing something here – what version of SQL Server does this apply to?

Are you using SQL Server? It applies. Books Online goes back to SQL Server 2005 on this, so that’s as far as I’m willing to say it works.

You can always check the fantastic documentation by doing a search for

site:msdn.microsoft.com SQL Server sp_cycle_errorlog

when you need to know where a certain piece of functionality applies.

I use a SQL Agent job that automatically cycles the error log when it reaches a given size and also sends an email. If the log files get very large, or if the log cycles too frequently, then there is probably something that needs attention.

https://ronthepolymath.wordpress.com/2015/09/30/cycle-sql-error-log-when-it-reaches-a-certain-size/
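For anyone who wants to try the size-based approach, here’s a rough sketch. It is not the script from the linked post, just one way to do it: it relies on the undocumented xp_enumerrorlogs (one row per log: archive number, date, size in bytes), and the 50 MB threshold is an arbitrary example.

```sql
-- Hypothetical threshold: cycle once the current log passes 50 MB.
DECLARE @maxBytes bigint = 50 * 1024 * 1024;

-- Undocumented: columns are archive number, date, and size in bytes.
-- The date is captured as text so the insert works whatever type it comes back as.
DECLARE @logs TABLE (ArchiveNo int, LogDate nvarchar(50), LogSizeBytes bigint);
INSERT INTO @logs
EXEC master.dbo.xp_enumerrorlogs;

-- Archive number 0 is the current ERRORLOG.
IF EXISTS (SELECT 1 FROM @logs WHERE ArchiveNo = 0 AND LogSizeBytes > @maxBytes)
BEGIN
    EXEC sp_cycle_errorlog;
    -- An email alert (for example via msdb.dbo.sp_send_dbmail) could go here.
END;
```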

Yeah, daily cycling is very helpful. I set it up on all my instances, with the max possible retention of 99 files.

BTW, while the GUI limits you to 99 files, if you script it out you can set a larger limit. Though I’m not sure you’d really want to.

Do you have a script you’d be willing to share?

I also recycle the log daily (at midnight) and keep 30 logs. Before doing the recycle, my job first scans the current log for failed logins and sends an HTML-format email to the DBAs if the number of failures for any login is greater than a defined threshold. It’s proved useful for highlighting persistent login failures.
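A rough sketch of that login-failure check, for anyone who wants to build something similar. It searches the current log with the undocumented xp_readerrorlog and takes the login name from between the first pair of single quotes in the “Login failed for user '...'” messages. The threshold of 10 is a made-up example, and the email step is left out.

```sql
-- Hypothetical threshold; tune it for your environment.
DECLARE @threshold int = 10;

DECLARE @failures TABLE (LogDate datetime, ProcessInfo nvarchar(50), [Text] nvarchar(max));

-- Current log (0), SQL Server error log (1), only lines containing the search string.
INSERT INTO @failures
EXEC master.dbo.xp_readerrorlog 0, 1, N'Login failed for user';

-- The login name sits between the first two single quotes in the message.
SELECT n.LoginName, COUNT(*) AS Failures
FROM @failures AS f
CROSS APPLY (SELECT CHARINDEX(N'''', f.[Text]) AS q1) AS a
CROSS APPLY (SELECT CHARINDEX(N'''', f.[Text], a.q1 + 1) AS q2) AS b
CROSS APPLY (SELECT CASE WHEN a.q1 > 0 AND b.q2 > a.q1
                         THEN SUBSTRING(f.[Text], a.q1 + 1, b.q2 - a.q1 - 1)
                    END AS LoginName) AS n
WHERE n.LoginName IS NOT NULL
GROUP BY n.LoginName
HAVING COUNT(*) > @threshold
ORDER BY Failures DESC;
```

The results could then be formatted and handed to msdb.dbo.sp_send_dbmail before the job step that cycles the log.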

Just be aware of the 99-file limit. If your goal is to keep logs for 90 days, some “unexpected” SQL Server restarts (for example, restarts during SQL Server or Windows patching) may prevent you from keeping as many days of logs as you want.

In these cases, you either need to copy the old files to a safe place (as mentioned earlier here) or otherwise preserve their content.

Great post about a basic task that I think is still important.

We have increased the number of error log files to 42 (what else) and recycle on a daily basis at 23:59:30. That way, each archived file’s LastWriteTime falls on the day it covers.

This way we have about one month SQL Server Errorlog history on the server including restarts.

The parsing of the files before or after recycle is a great idea, but I still struggle to catch files generated by multiple restarts.

I realize this is an old thread, but I too was concerned about dropping off logs due to unexpected restarts or patching. So I created this script, which, as long as you don’t have multiple restarts within a 24-hour period, will prevent unnecessary recycling of the error log.

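    -- Only cycle if the current log is more than a day old. The @@VERSION banner is
    -- written at the top of every new log, so its timestamp is when this log started.
    DECLARE @ver AS nvarchar(256) = REPLACE(@@Version,CHAR(10),CHAR(13)+CHAR(10))
    DECLARE @tbl AS TABLE (LogDate DATETIME, ProcessInfo varchar(50), Text varchar(MAX))
    INSERT INTO @tbl
    EXEC xp_readerrorlog 0, 1, @ver
    IF ((SELECT MIN(LogDate) FROM @tbl) < GETDATE()-1)
        EXEC sp_cycle_errorlog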

I realize this is somewhat old, but what have you folks done to address a common error:

[412] Errorlog has been reinitialized. See previous log for older entries.

I have used the syntax:

    USE msdb
    GO
    EXEC dbo.sp_cycle_agent_errorlog
    GO

I’ve run this both in a query window and in a SQL Agent job. Each fails with the above error. Additionally, if I right-click the error log folder in SSMS, it again fails with this error. The only successful “recycle” is restarting the service in Configuration Manager.

This is SQL Server 2014 at patch level 12.0.4449.0. It has two instances (one named, the other default) and uses replication to push my Ozar and Ola scripts to a DBADB on the TSX instances from an MSX Multi-server Administration “management” server. They all fail, on both the MSX and the targets (TSX).

This appears to be a problem many others have had, but I’ve yet to find a solid resolution to the issue. Thanks for helping!

Patrick – your best bet is to post the question at http://dba.stackexchange.com.

This is not an error, it just lets you know that the log has been cycled.

Probably worth cycling the Agent logs as well: sp_cycle_agent_errorlog.


Sorry to poke at an old posting.

I’m wondering why cycling the log is a best practice. Other than a smaller file for the server or client to load when accessing the log, I don’t see any real advantage. Actually, I prefer to be able to look at the list of logs and quickly see when the instance and/or server was last restarted, which is much harder to do if you recycle the log daily or weekly, because you need to read the dates and times closely to see which ones happened during the scheduled recycles.

Kenney – sure, read the post, specifically paragraph #3.

If I’m counting correctly then paragraph #3 is “Unfortunately, if the SQL Server error log gets huge, it can take a long time to read the error log – it’s just a file, after all, and the GUI has to read that file into memory.”

So I still have the same question, other than a smaller file size what is the advantage? Because if it’s only a smaller file then I’ll prefer to not cycle the file so that I can easily see instance/server restarts at a glance.

I just want to be sure of the Pros and Cons before I dismiss this practice or adopt it. So far, the only Pro I see for cycling the log file is a smaller file (which means faster load time when viewing and less memory consumed while open). The only Pro I see for not cycling is being able to see instance/server restarts at a glance (looking at the list of log files in SSMS). The only Cons that I know of for cycling are that you can’t see restarts at a glance, and you have to increase the number of logs kept if you want to see more than 6 days/weeks/? of logs, depending on your cycle interval. The only Cons that I know of for not cycling are that the file can be very large (which can take longer to load and use more resources), and that the amount of time covered by your logs is more variable (whether or not you change the number of logs kept).

Because with a large error log, it can take several MINUTES for the contents to render. That can be pretty awkward during an emergency.

+1. SSMS would crash on us while trying to search our SQL Server Logs, cycling regularly sorted this out.
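On the restarts-at-a-glance point: even with frequent cycling, you can list restarts by searching every log file for a message SQL Server writes only at startup. This sketch assumes “SQL Server is starting at normal priority” is such a message on your version (a cycled log repeats the version banner and process ID, so those won’t do), and it uses the undocumented xp_enumerrorlogs and xp_readerrorlog.

```sql
-- List SQL Server startups recorded in the current and archived error logs.
-- xp_enumerrorlogs and xp_readerrorlog are undocumented; the search string is an
-- assumption about a message that appears at startup but not after a cycle.
DECLARE @logs TABLE (ArchiveNo int, LogDate nvarchar(50), LogSizeBytes bigint);
INSERT INTO @logs
EXEC master.dbo.xp_enumerrorlogs;

DECLARE @starts TABLE (LogDate datetime, ProcessInfo nvarchar(50), [Text] nvarchar(max));
DECLARE @archive int = (SELECT MAX(ArchiveNo) FROM @logs);

-- Walk from the oldest archive down to the current log (archive 0).
WHILE @archive >= 0
BEGIN
    INSERT INTO @starts
    EXEC master.dbo.xp_readerrorlog @archive, 1, N'SQL Server is starting at normal priority';
    SET @archive -= 1;
END;

SELECT LogDate AS StartupTime
FROM @starts
ORDER BY LogDate DESC;
```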

+1 to cycling the error logs on a regular basis (and also moving them away from the default location). I just finished up on an issue where a single log file blew out to 90 GB (42 days of logging), filling the disk and causing failures in services such as SSRS. The file was useless to me, as I had no practical way to open it.

I had this set to cycle 99 files … just found that it has reset to 6. No idea when that happened, but I suspect the upgrade to SQL2017 might have overridden the setting. My “Archive #7” is a few days before we upgraded to SQL2017.

Yup, same here. Upgrading seems to reset this setting.

Very helpful to have that confirmed, thanks.

I store the value locally in a table on each server (AG) so I can customize it per environment. This script will also resolve your issue above.

    DECLARE @CycleSqlErrorLogFiles int
    DECLARE @CycleSqlErrorLogFilesLive int
    SELECT @CycleSqlErrorLogFiles = param_value
    FROM config.cycle_error_log WITH (NOLOCK)
    WHERE parameter = 'CycleSqlErrorLogFiles'
    AND CAST(param_value as INT) > 0
    SET @CycleSqlErrorLogFiles = ISNULL(@CycleSqlErrorLogFiles, 30) --add default if not supplied
    exec master.dbo.xp_instance_regread N'HKEY_LOCAL_MACHINE', N'Software\Microsoft\MSSQLServer\MSSQLServer', N'NumErrorLogs', @CycleSqlErrorLogFilesLive OUTPUT
    -- NumErrorLogs may be absent (NULL) if it was never set, so treat NULL as "different"
    IF ISNULL(@CycleSqlErrorLogFilesLive, -1) <> @CycleSqlErrorLogFiles AND @CycleSqlErrorLogFiles > 0
    BEGIN
        PRINT 'Updating Maximum Number of Error Log Files'
        exec master.dbo.xp_instance_regwrite N'HKEY_LOCAL_MACHINE', N'Software\Microsoft\MSSQLServer\MSSQLServer', N'NumErrorLogs', REG_DWORD, @CycleSqlErrorLogFiles
    END

The not-equal operator (less than, greater than) got dropped when I posted. Here it is in the other format:

    IF @CycleSqlErrorLogFilesLive != @CycleSqlErrorLogFiles AND @CycleSqlErrorLogFiles > 0

I believe the trouble is that Microsoft keeps changing the registry key path between versions. Why on earth would they store this in the registry… smh

Sorry to drag this one up again, but I’ve just migrated from 2008 R2 to 2017, and now when my recycle job runs nightly, the log doesn’t get a timestamp when viewed in SSMS. So I get Current, Archive #1, Archive #2, Archive #3, etc., when what I want is Current, Archive #1 – 19-08-2019 01.00.00, and so on. Any ideas what I might not be doing?

David – for questions, head to a Q&A site like https://dba.stackexchange.com.

My SQL server went through some weird issue.

It was disconnected until I changed the database from standalone to online.

When I look at the error log, the time period when the issue happened is completely missing.

Also, the error log had been cycled automatically, creating a new errorlog file.

Would anyone know how this could happen?

Paul – I wish I could do free personal consulting here, but your best bet is https://dba.stackexchange.com.

Look in the Application Event log for any messages that could have been in the errorlog, and see if there are any corresponding entries in the System Event log.

Just a quick one. If I wanted to do this with a limited user, what permission would this user require? I’d only want to cycle the error logs. I’ve tried granting execute on sp_cycle_errorlog to this user, but it doesn’t seem to work.

Kenji – for questions, head to https://dba.stackexchange.com.

Now how did I know you would say that 🙂

Hi, I need information from previous SQL Server error logs, but the server keeps only 6 files. Is there a way to recover deleted SQL Server error log files?

I mean, is it possible to recover recycled error log files?

Grace – for general questions, head to a Q&A site like https://DBA.StackExchange.com.

I had a problem with my setup – this may help others

https://dbatasks.blogspot.com/2022/07/cycling-error-logs.html

Apologies for asking on an older post, but my server is automatically cycling the log nightly at 10:30 PM, and it is killing my log backup. I can’t find a setting for automatic recycling or a job that does this. Any thoughts?

For unrelated questions, head to a Q&A site like https://dba.stackexchange.com.

For info, the first thing sp_cycle_errorlog does is delete the oldest errorlog file. If it fails for whatever reason before completing the whole cycle process (most of the time with “file in use”, because the file is held open by log collection tools like NxLog), you will lose one errorlog. If you configure retries on the job, you can lose most of your daily logs quite quickly. It was a bad surprise.
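If that’s a risk in your environment, one option is to make the job step fail loudly and not retry, so a locked file costs at most one log per run instead of several. sp_cycle_errorlog returns 0 on success and 1 on failure; the job name below is a hypothetical example, and THROW needs SQL Server 2012 or later.

```sql
-- 1) Job step command: check sp_cycle_errorlog's return code (0 = success, 1 = failure)
--    so a failed cycle shows up as a failed step.
DECLARE @rc int;
EXEC @rc = sp_cycle_errorlog;
IF @rc <> 0
    THROW 50001, N'sp_cycle_errorlog failed; check for processes locking the ERRORLOG files.', 1;
GO

-- 2) Run once: turn off Agent retries for that step, so a locked file is not
--    retried (and another archive deleted) on every attempt. Hypothetical job name.
EXEC msdb.dbo.sp_update_jobstep
    @job_name       = N'DBA - Cycle error log',
    @step_id        = 1,
    @retry_attempts = 0;
```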

Another thing you can do to automatically cycle the error logs is to set the max size of the file to something small like 30KB. Then the error log will cycle every few minutes! The downside is that you’ll only have a few minutes worth of data in each log file and SQL will truncate the old log files after about 10-30 minutes.

…Yeah. Don’t do that.

I found it set up that way at a client site by an inexperienced (client) DBA who was attempting to change the number of files to keep to 30 but changed the max size of each file to 30 KB instead. (Funny now, but not funny when we needed the logs.)
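If you inherit a server and suspect this, you can check the limit from T-SQL. The sketch assumes the limit is kept in the instance’s ErrorLogSizeInKb registry value, which, as far as I know, is what SSMS writes from the size option in the same Configure dialog on SQL Server 2012 and later; 0 means no size limit.

```sql
-- Read the current per-file size limit for the SQL Server error log.
DECLARE @sizeKb int;
EXEC master.dbo.xp_instance_regread
    N'HKEY_LOCAL_MACHINE',
    N'Software\Microsoft\MSSQLServer\MSSQLServer',
    N'ErrorLogSizeInKb',
    @sizeKb OUTPUT;

-- NULL means the value was never set; 0 means no limit.
SELECT @sizeKb AS ErrorLogSizeInKb;

-- To remove a too-small limit (such as a 30 KB typo), set it back to 0:
-- EXEC master.dbo.xp_instance_regwrite N'HKEY_LOCAL_MACHINE',
--     N'Software\Microsoft\MSSQLServer\MSSQLServer', N'ErrorLogSizeInKb', REG_DWORD, 0;
```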

We’ve used this technique for years, but in the last 6 months we’ve started seeing Severity 16 errors sporadically, on some instances and not on others, and we are trying to figure out what is holding the lock. So far, all occurrences are on SQL Server 2016 instances running on Windows Server 2016, some clustered, others non-clustered:

“Unable to cycle error log file from 'U:\xx\MSSQL13.xx\MSSQL\Log\ERRORLOG' to 'U:\xx\MSSQL13.xx\MSSQL\Log\ERRORLOG.1' due to OS error '32(The process cannot access the file because it is being used by another process.)'. A process outside of SQL Server may be preventing SQL Server from reading the files. As a result, errorlog entries may be lost and it may not be possible to view some SQL Server errorlogs. Make sure no other processes have locked the file with write-only access.”

Our schedule was midnight each night, but we’ve switched to 1 AM and 12:55 AM, set up some runs during the day and others in the early evening, and even have one instance running the job every 15 minutes. We have a batch file that runs Handle 2 minutes before and after the job’s scheduled time, but the output file finds no other processes. Process Explorer/Process Monitor traces are difficult to capture, as we cannot predict when it will happen, and it usually resolves itself (i.e. the next time the scheduled job runs, it works). Running the job manually after seeing a failure works. We have excluded the Log directory from virus scans and ATP (Advanced Threat Protection).

Brent and/or anyone any suggestions?

So how do you safely reduce the maximum from 99 files? When I attempt to reduce the count to, say, 10 in the configuration, the server locks up.