As my time in Hong Kong came to an end, I sat inside on a foggy morning and hit your top-voted questions from https://pollgab.com/room/brento.

Here’s what we covered:

- 00:00 Start

- 01:32 MyTeaGotCold: Are there any signs of brain drain from SQL Server to Postgres? It seems that every SQL Server guru agrees that Postgres is better, even if SQL Server pays them more.

- 02:33 Frozt: Do you have a checklist on decommissioning an SQL Server?

- 03:06 Mike: Hi Brent, you mentioned that you are going to update Recorded Classes in 2024. Is this still true ? If yes, which ones you want to update, and when to expect that ?

- 03:38 Mike: We know Read Committed is default isolation level, and locks are taken on statement level. What happens when you issue Begin Transaction, and then run many SQL statements before Commit ? Isolation level changes during the explicit transaction ? If yes, to which one ?

- 04:49 RoJo: if a nightly CheckDB fails, how do I approach recovery or validating data? Would it be certain pages / tables? Must I simply roll back to last known good CheckDB ?

- 05:33 Eugene Meidinger: If I wanted to run the Stack Overflow database as a homelab on a laptop, what specs would you recommend?

- 06:22 Justin M: Are you a Biphasic sleeper? What is your optimal nap time and length?

- 07:10 DataBarbieAdministrator: Could you create a blog series on well-known SQL Server Best Practices that are now outdated, explaining why and any alternatives? That would be useful and absolutely interesting! Thanks for your great job for the community!

- 08:06 Fermin: Hi Brent, just want to know what do you think about the career path future for DBAs. Are we moving to a modern Full Stack or Data Engineer? Thanks.

- 08:48 corelevel: Hey Brent! In your opinion, what is the minimum table size (in pages) to start adding indexes to it?

- 09:48 ChompingBits: How many of your customers are using Kerberos for authentication vs SPNs on NTLMv2 or lower? The latter is a pain to configure, and often leads to users/devs running scripts from the SQL server when one more servers are connected to in the query.

- 10:04 Jonathan: What do you recommend for the number of databases/size on a single disk? I’ve always just ballparked around keeping 2TB per drive and spinning up a new drive when that one fills, but lately we’ve had smaller dbs that have a lot of I/O and larger dbs with less users, etc. Thanks!

- 11:27 Miles: How important archiving data is? We see history tables growing from GBs to TBs.What problems we might run into if we don’t archive the old data. App team hesitant due to potential data requests from other teams. Any advise on balancing data retention needs with perf optimization?

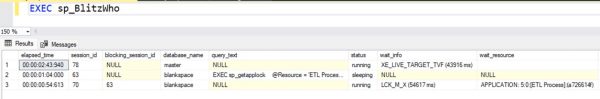

- 12:47 Miles: When there’s blocking, app team asks what lock caused blocking. Is it okay to collect lock info or is it overwhelming. sp_whoisactive runs very slow with get_locks =1. Are we doing right thing collecting lock info or should be focusing on something else like tuning head blocker?

- 13:37 SteveE: Hi Brent, Is there ever a use case to have a Non Clustered index created on the clustering key, perhaps when the table is a wide table?

- 14:34 Gustav: What’s your opinion of copilot for Azure SQL DB?

- 15:35 Steve E: Hi Brent We have a query which runs daily that inserts a recursive CTE into a table. Our monitoring tool shows the plan changes each time as it has a different plan handle but all the plans have the same plan hash. What would cause the difference in the plan handle?

- 16:39 ganesh: Hello Sir, Using excel source in ssis , error : there are XXX bytes of physical memory with XXXX bytes free. system reports 98% memory load.tried autobuffer in data flow ,rows per batch at destination, reduce columns size from navchar255-nvarchar 25 -30 .how to avoid error

- 16:58 Jim Johnston: I’m thru half of the Brent Ozar Season pass bundle, but looking for guidance with excessive context switching in SQL server. If its in the bundle, can I get a link to where can find it to review this?

- 17:53 Nintenbob: Do you have any experience/thoughts on using SQL Server 2019’s UTF-8 collation support for varchar as an alternative to nvarchar? At the surface level it seems a clear improvement for most uses that need unicode, but not sure if there are hidden downsides.

- 18:46 Moctezuma DBA: I was recently told index rebuild removes the 14-byte version control pointer. When index rebuild makes sense, should we set FILLFACTOR less than 100% to make room for those values and then mitigate page splits for that 14-byte pointer addition at each row?

- 19:23 ProdDBA: Storage for big data, what options are there for maintaining large databases over time without archiving? Splitting the large databases onto their own drives? Just buying more storage? Ideas on how to ask others in big data how they manage their database storage?

If your company is hiring, leave a comment. The rules:

If your company is hiring, leave a comment. The rules: I’d like to teach you How to Think Like the SQL Server Engine for free.

I’d like to teach you How to Think Like the SQL Server Engine for free.