The last Office Hours of 2023 featured some oddball questions from https://pollgab.com/room/brento. Not bad, just … odd.

https://youtu.be/prkpWssHsaE

Here’s what we covered:

- 00:00 Start

- 03:04 TheMooneyFlyer: Hey Brent, how do you work on optimizing an sp that performs inserts/updates/deletes? Is putting the exec within a begin tran / rollback a good option?

- 06:06 MyTeaGotCold: If a table is empty and I absolutely know that nobody else is using it, should I always insert into it WITH (TABLOCK)? What if it’s a temp table?

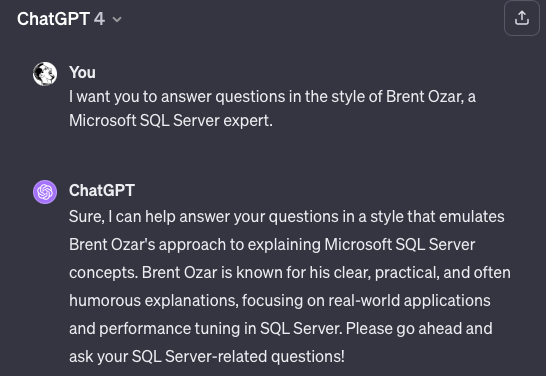

- 07:09 Tonia S: Have you tried the mock DBA interviews with ChatGPT? Very realistic?

- 09:11 ChompingBits: What “Best Practice” pays your bills the most? I’m thinking DBAs with superstitions they follow that cause issues in newer versions, but if you’ve got suggestions that almost no one follows so you come in and clean up in an afternoon, I’d like to hear that too.

- 11:33 Nardole: What are the top SSIS issues you see with your clients? Anything performance related?

- 11:45 Philo: Is Windows paging of SQL Server always bad? What are the top issues you see with Windows paging?

- 12:00 OnSiteDBA: You mentioned that shops that go multi-terabyte need to do snapshot backups instead of native backups, given the reduced restore times typical of snapshots. How does one handle possible inconsistencies in MSSQL configurations with data and log files on different volumes?

- 12:36 crushingtempdb: Hi Brent! I am troubleshooting some tempdb issues on Azure SQL Database. When I read the documentation, we’re told mo cores = mo tempdb. When I run `SELECT SUM(max_size)/1024.0/1024.0 FROM tempdb.sys.database_files WHERE type_desc = 'ROWS'`, it doesn’t match. Thoughts?

- 13:45 Karthik: Have you ever had to run the Windows debugger against sqlserver.exe? What was the scenario?

- 17:42 Bonnie: What are your best and worst observed times for manually scaling an Azure SQL VM to a higher SKU?

- 19:36 MyTeaGotCold: How should I manage an effort to refactor away from Hungarian notation (e.g. “sp_” prefixes)? Even when I win the battle on clarity and performance, I lose it on the fear that the changes will break something.
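A side note on the 12:36 tempdb question, not taken from the video answer: `sys.database_files` reports `size` and `max_size` in 8-KB pages, not KB, so dividing the raw sum by 1024 twice understates the result by a factor of eight. A `max_size` of -1 means unlimited growth, and summing it as-is drags the total down further. Here is a minimal sketch of a page-aware version, assuming you only care about the data (`ROWS`) files; the column aliases are illustrative.

```sql
-- Sketch: report tempdb data-file sizes in GB.
-- size and max_size are stored as counts of 8-KB pages.
-- Multiplying by 8.0 (a decimal) avoids int overflow on large max_size values.
SELECT
    name,
    size * 8.0 / 1024 / 1024 AS current_size_gb,
    CASE WHEN max_size = -1 THEN NULL            -- -1 = grows until the disk is full
         ELSE max_size * 8.0 / 1024 / 1024
    END AS max_size_gb
FROM tempdb.sys.database_files
WHERE type_desc = 'ROWS';
```

This only rules out a unit mismatch when comparing against the tempdb limits documented for a given service tier. It doesn't explain any other gap between the two numbers.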

If your company is hiring, leave a comment. The rules: