Turns out y’all actually work over the summer: there are cool new features this month! I think I’m going to have to record updated sp_BlitzIndex, sp_BlitzLock, and sp_BlitzQueryStore modules for my “How I Use the First Responder Kit” class because these features are pretty awesome.

To get the new version:

- Download the updated FirstResponderKit.zip

- Azure Data Studio users with the First Responder Kit extension: ctrl/command+shift+p, First Responder Kit: Import.

- PowerShell users: run Install-DbaFirstResponderKit from dbatools

- Get The Consultant Toolkit to quickly export the First Responder Kit results into an easy-to-share spreadsheet

Consultant Toolkit Changes

I updated it to this month’s First Responder Kit, but no changes to querymanifest.json or the spreadsheet. If you’ve customized those, no changes are necessary this month: just copy your spreadsheet and querymanifest.json into the new release’s folder.

sp_Blitz Changes

- Improvement: better chance of running to completion when you’re not SA. sp_Blitz has always required sysadmin permissions in the past, and this is our first pass at supporting limited permissions, so if you run into errors without sysadmin, feel free to file ’em as issues and we’ll see if we can work around them. (#3292, thanks Erik Darling.)

- Fix: reduce possibility of missing replication in use. (#3316, thanks DavidSchanzer.)

sp_BlitzCache Changes

- Fix: more accurate estimation of memory grant used overall across multiple executions of a query. (#3313, thanks mdpenguin.)

- Fix: more accurate percentage of duplicate query plans. We’ve been battling this issue for yeeeears, and I still can’t reproduce the issue in the lab, so I don’t think this is the last chapter on this issue. (#3314, thanks WaldenL.)

- Fix: skip warnings of ResourceDB queries. (#3315, thanks WaldenL.)

- Fix: better compatibility with AG secondary replicas. (#3291, thanks mbambion.)

sp_BlitzIndex Changes

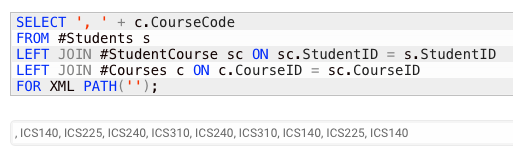

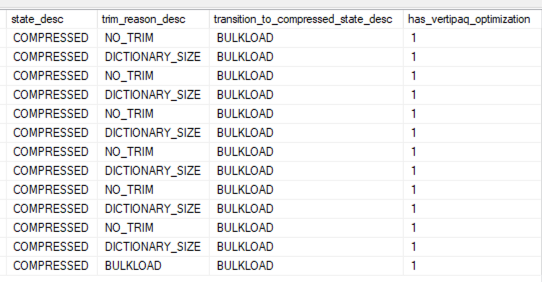

- Enhancements in #3294: in the columnstore visualization section, each rowgroup’s state, trim reason, etc. are now shown:

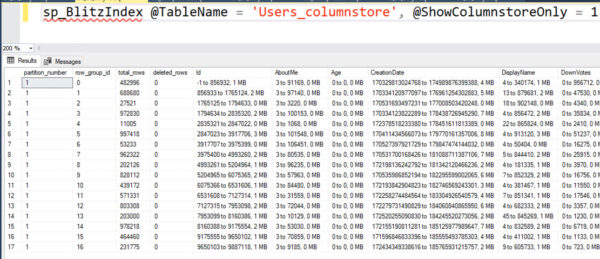

And in that result set, the size of the secondary dictionary (if required) for a column is now included in its size. Here’s the Users.DisplayName column before – note the sizes in the far right:

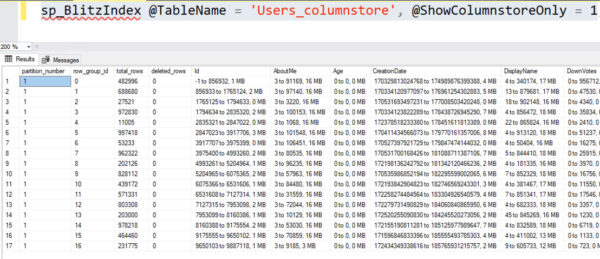

Now, with the secondary dictionary size included, the DisplayName column’s true size shows up as being dramatically larger because the strings are relatively unique and don’t compress well.

sp_BlitzLock Changes

- Improvement: if the queries involved in deadlocks are still in the plan cache, sp_BlitzLock now shows an additional result set with their plans. (#3293 and #3311, thanks Erik Darling.)

- Improvement: when targeting one session, make sure it exists first. (#3306, thanks Erik Darling.)

- Improvement: faster XML queries. (#3305, thanks Erik Darling.)

- Fix: time searches are now UTC compatible. (#3307, thanks Erik Darling and Mike Hodgson.)
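If you haven’t tried a time-bounded search before, a call looks roughly like the sketch below. @StartDate and @EndDate are existing sp_BlitzLock parameters, but the dates here are made up for illustration, and this sketch makes no claim about how the proc converts them relative to UTC. Run it with @Help = 1 to see the current parameter notes.

```sql
-- Minimal sketch: search for deadlocks inside a time window.
-- The dates below are hypothetical placeholders; substitute your own window.
EXEC sp_BlitzLock
    @StartDate = '2023-08-01 00:00',
    @EndDate   = '2023-08-02 00:00';
```

The extra result set with query plans only shows up if the queries involved in the deadlocks are still in the plan cache, so an older window may come back without it.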

sp_BlitzQueryStore Changes

- Improvement: Parameter Sensitive Plan Optimization (PSPO) compatibility! If you search for a single @StoredProcName, we now show all of the plan variants for it. (#3312, thanks sqljared.)
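A single-procedure search might look like this. The database and procedure names are hypothetical stand-ins, so swap in your own. This assumes Query Store is enabled on that database.

```sql
-- Minimal sketch: look up one stored procedure in Query Store.
-- Both names below are hypothetical examples, not objects from this release.
EXEC sp_BlitzQueryStore
    @DatabaseName   = 'StackOverflow',
    @StoredProcName = 'usp_SearchUsers';
```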

sp_DatabaseRestore Changes

- Improvement: new @KeepCdc parameter restores that attribute too. (#3289, thanks SQLSlinger.)

- Fix: compatibility with SQL Server 2022’s new undocumented columns in RESTORE HEADERONLY. (#3190, thanks Razvan Socol.)

- Fix: incorrect error message in debugging output. (#3320, thanks Greg Dodds.)
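Here’s a rough idea of how the new parameter fits into a restore call. The database name and backup path are hypothetical, and I’m assuming you want the database recovered at the end, since SQL Server’s KEEP_CDC option can’t be combined with NORECOVERY.

```sql
-- Minimal sketch: restore a database and keep its Change Data Capture config.
-- Database name and path are hypothetical placeholders.
EXEC sp_DatabaseRestore
    @Database       = 'MyCdcDatabase',
    @BackupPathFull = 'D:\Backup\MyServer\MyCdcDatabase\FULL\',
    @RunRecovery    = 1,  -- assumption: bring the database online after the restore
    @KeepCdc        = 1;  -- new this release: keep CDC through the restore
```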

For Support

When you have questions about how the tools work, talk with the community in the #FirstResponderKit Slack channel. Be patient: it’s staffed by volunteers with day jobs. If it’s your first time in the community Slack, get started here.

When you find a bug or want something changed, read the contributing.md file.

When you have a question about what the scripts found, first make sure you read the “More Details” URL for any warning you find. We put a lot of work into documentation, and we wouldn’t want someone to yell at you to go read the fine manual. After that, when you’ve still got questions about how something works in SQL Server, post a question at DBA.StackExchange.com and the community (that includes me!) will help. Include exact errors and any applicable screenshots, your SQL Server version number (including the build #), and the version of the tool you’re working with.

If your company is hiring, leave a comment. The rules:
