At https://pollgab.com/room/brento, post the questions you’d like to get my opinion on, and I’ll take a break every now and then at the office and go through ’em.

Here’s what we covered in this episode:

- 00:00 Start

- 01:08 #ARRRRRGH: Hi Brent. Have you seen anybody put good visualisations on top of sp_Blitz? I'm trying to build something in Power BI to look at our entire estate and I'm looking for inspiration.

- 02:33 Pradeep: Hi Brent, I am a Dev DBA. I use SET STATISTICS IO ON to identify heavy logical reads and tune indexes to minimize them, and I got better results. The production DBA says only reducing physical reads adds value, and reducing logical reads does not. Your thoughts, please?

- 04:01 MancDBA: Hi Brent, how dedicated were (and are) you when it came to learning more about SQL Server? Did you ever study on weekends or evenings, or did you pick up enough knowledge during the working week? Cheers.

- 06:03 The Net Demoter: When should you not enable Query Store for SQL Server?

- 06:44 Venkat: What is your opinion of the new query_antipattern extended event in SQL 2022?

- 10:57 Lakshmi: Do you have any good use cases for temporary SQL stored procs?

- 12:23 Deepak: Do you think ChatGPT will result in the re-birth of Microsoft English Query for SQL Server?

- 14:17 CKI: What tool do you recommend for checking a database for SQL injection vulnerabilities?

- 14:54 Bruce Un-Mighty: Does SSMS execution time in the lower right include or exclude the time required to render the results grid?

- 16:20 Dipesh: How do you know when SSMS plan operator times are individual or cumulative?

- 17:28 Maksim Bondarenko: How do you deal with a "transaction log larger than data file" situation when the database is in an Always On cluster with a synchronous replica?

- 18:42 Parminder: Is there value in learning Power BI for the SQL DBA?

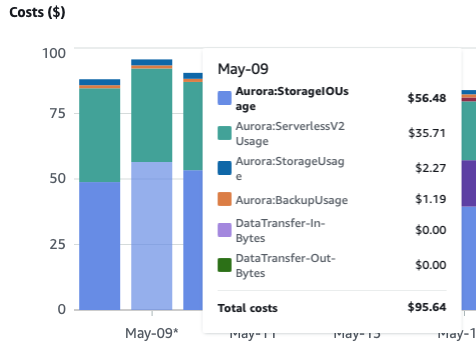

- 20:24 Deepak: What Azure SQL DB features do you think we can expect to flow down next to traditional SQL Server?

- 23:48 Dr. Zaius: Hi Brent, have you ever used Adam Machanic’s TOP (max bigint) technique as an alternative to the X-acto method of using temp tables to phase query executions? Any rules of thumb on which technique to use in a given situation?

- 24:35 Yousef: What is your opinion of OPTIMIZE_FOR_SEQUENTIAL_KEY in SQL 2019?

- 26:11 Eli: Hi Brent, do you have a rule of thumb or training that teaches about the smallest table size you’ll index? I’ve got some ~8k tables that are slowing down a query with millions of scans, but my hunch is that I should be focusing on reducing the number of scans instead of indexes.

- 27:32 Mars: Hi Brent, would you recommend using the First Responder Kit for benchmarking?

- 29:04 neil: Is there a way to set MAXDOP on someone else's troublesome query? (A third-party application dashboard hogs all the CPU cores.) For example, can you set MAXDOP for a specific sproc?

- 31:09 GuaroSQL: Hey Brent! How are you? Does the First Responder Kit work fine in SQL Server 2022? We are thinking of migrating, but we use the First Responder Kit a lot and want to keep using it.

- 32:22 Eduardo: When running sp_blitzfirst, should it be run on the DAC connection?
