Your challenge for this week is to tune a query. Say Stack Overflow has a dashboard that shows the top-ranking users in their most popular location. It’s even got an index to support it:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

CREATE INDEX Location ON dbo.Users(Location); GO CREATE OR ALTER PROC dbo.GetTopUsersInTopLocation AS SELECT TOP 200 u.Reputation, u.Id, u.DisplayName, u.WebsiteUrl, u.CreationDate FROM dbo.Users u WHERE u.Location = (SELECT TOP 1 Location FROM dbo.Users WHERE Location <> N'' GROUP BY Location ORDER BY COUNT(*) DESC) ORDER BY u.Reputation DESC; GO |

You can test it with any version of the Stack Overflow database. To test it, we’ll turn on a couple of tuning options:

|

1 2 3 4 |

/* Turn on actual query plans, and: */ SET STATISTICS IO ON; EXEC GetTopUsersInTopLocation; |

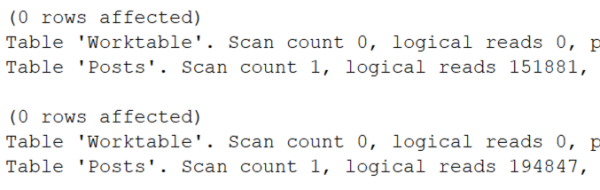

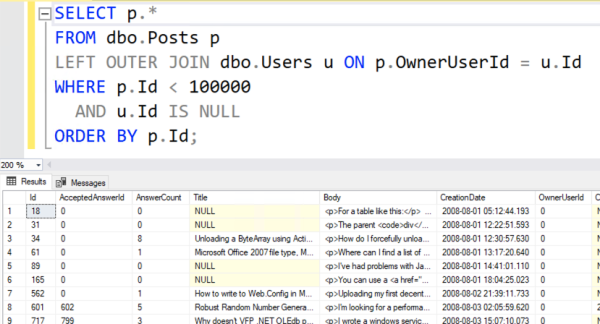

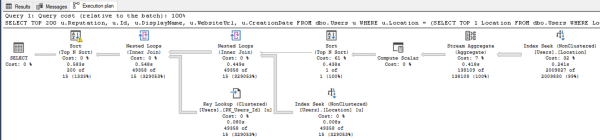

The actual execution plan does use our index – not just once, but twice:

But if you zoom in, there are a couple of problems:

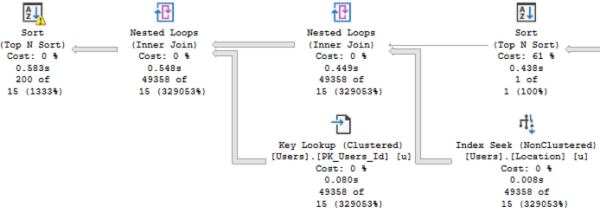

Problem #1: SQL Server under-estimated the number of rows that’d come out of that Location index seek. It thought only 15 rows would come out, when in reality 49,358 rows came out.

That lowball estimate caused a couple other problems:

- The sort has a yellow bang because it spilled to disk. SQL Server didn’t allocate enough memory to do the sort because it thought it’d only be sorting 15 rows.

- We did 49,358 key lookups, so it might have been more efficient to just scan the whole table. You can test that by adding an INDEX = 1 hint like this in the stored procedure, and compare the logical reads before & after:

|

1 2 3 4 5 6 7 8 9 10 11 |

CREATE OR ALTER PROC dbo.GetTopUsersInTopLocation AS SELECT TOP 200 u.Reputation, u.Id, u.DisplayName, u.WebsiteUrl, u.CreationDate FROM dbo.Users u WITH (INDEX = 1) WHERE u.Location = (SELECT TOP 1 Location FROM dbo.Users WHERE Location <> N'' GROUP BY Location ORDER BY COUNT(*) DESC) ORDER BY u.Reputation DESC; GO |

We’re not concerned with query duration, spills, memory grants, etc in this particular challenge because those will vary a lot from one run to another, especially with 2019 & 2022 compatibility modes. Here, we’re only focused on reducing the number of logical reads. Your answer won’t have a dramatically reduced number – we’re just looking for more accurate estimates that drive lower logical reads, period. Any improvement will be a good one.

Post your solutions in the comments, and feel free to ask other folks questions about their solutions. We revisit the answers & discuss ’em in this post. Have fun!

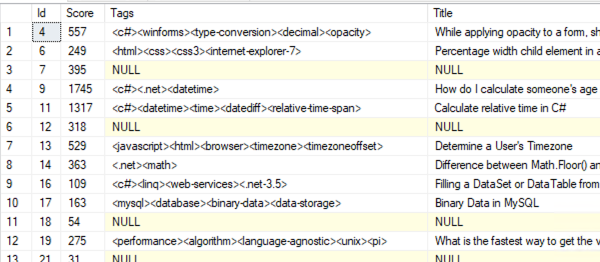

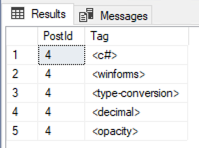

Any given Post.Id could have up to 5 rows in the PostTags table.

Any given Post.Id could have up to 5 rows in the PostTags table.

If your company is hiring, leave a comment. The rules:

If your company is hiring, leave a comment. The rules: