Your query exercise was to take this Stack Overflow query to find the top-voted questions for any given tag:

|

1 2 3 4 |

SELECT TOP 100 * FROM dbo.Posts WHERE Tags LIKE '%<sql-server>%' ORDER BY Score DESC; |

That’s currently using this index in its execution plan:

|

1 |

CREATE INDEX Score_Tags ON dbo.Posts(Score, Tags); |

And answer 3 questions:

- What kinds of tags will perform worse than others for this query?

- Could you change the query to perform better?

- Could you change the indexes to perform better, without changing the table structure?

Q1: What tags will have problems?

The current execution plan is scanning the Score_Tags index backwards, from highest-scoring posts down. As soon as it finds 100 posts that match the tag we’re looking for, boom, the query can stop scanning.

That works really well for common tags like sql-server, but the rarer of a tag we’re looking for, the slower the query is going to get. It takes longer to scan through the entire table, hoping to find 100 rows that match, checking the contents of each Tags, because searching strings in SQL Server is expensive.

For example, let’s look for a relatively recent tag, sql-server-2022:

|

1 2 3 4 |

SELECT TOP 100 * FROM dbo.Posts WHERE Tags LIKE '%<sql-server-2022>%' ORDER BY Score DESC; |

The execution plan is the same as searching for sql-server, but because there aren’t even 100 2022-specific questions yet, the query takes forever to run.

The less data there is for a tag, the longer the query will take to run. So the answer here is that rare tags will have problems. (Isn’t that wild? Usually we think of ‘big data’ as being more problematic for query performance, but here it’s the opposite problem.)

Q2: Could we improve the query?

Database admins often have a knee-jerk reaction to seeing “SELECT *” and they scream that it’s bad for performance. We could modify the query so that it returns less columns, and let’s try an extreme example of that. Let’s only return in columns included in our Score_Tags index:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

SET STATISTICS IO ON; /* "Bad" */ SELECT TOP 100 * FROM dbo.Posts WHERE Tags LIKE '%<sql-server>%' ORDER BY Score DESC; /* "Good" */ SELECT TOP 100 Score, Tags, Id FROM dbo.Posts WHERE Tags LIKE '%<sql-server>%' ORDER BY Score DESC; |

The “good” query’s actual execution plan has one less operator – no key lookup – but in the case of the sql-server tag, the duration difference is like 1 millisecond. Nobody cares. Yes, it’s technically less logical reads:

But the difference isn’t really groundbreaking. Besides, if we even just add one column in that isn’t in the index, the key lookup is back, and we’re back to the exact same number of logical reads. Like Erik recently blogged, you can’t partially fix key lookups.

Besides, if we switch to the sql-server-2022 tag that runs slowly, both the “bad” and “good” versions of the query do the exact same number of logical reads anyway. They scan the whole index. The problem in their query plans isn’t the key lookup – it’s the index scan.

So I would argue that:

- For common tags, people aren’t concerned with performance because the query’s already fast enough

- For uncommon tags, where performance is terrible, query changes don’t help

There is one way you can cheat, and I’m all about cheating if it results in faster performance. In the challenge post’s comments, JennyFromDBLock suggested adding an additional filter to the query – like, only look for posts with a Score higher than a certain number. The more selective that filter is, the less rows we have to examine. For example, if we only look for high scores:

|

1 2 3 4 5 |

SELECT TOP 100 * FROM dbo.Posts WHERE Tags LIKE '%<sql-server>%' AND Score > 1000 ORDER BY Score DESC; |

The actual execution plan now has a seek rather than a scan. The index is on Score, Tags, which means we can seek to 1001, and read less rows. This technique is even effective for rare tags – but again, only as long as we use a selective filter on Score.

I can’t always tell users, “Hey, just look for different rows, and your query will go faster,” but if you can do that, then this technique works.

Q3: Could we tune indexes without changing the table structure?

I have to put in the second half of that sentence because I wanted to keep the challenge realistic to short-term real-world work. In the real world, we’re not usually allowed to point, shriek, and demand application code changes.

First, for regular nonclustered indexes, some readers suggested adding different indexes, like putting just Tags in the key:

|

1 |

CREATE INDEX Tags ON dbo.Posts(Tags) INCLUDE (Score); |

But since our query isn’t sargable, that makes no difference on logical reads. We have no idea whether the <sql-server> tag is at the beginning of the Tags string, or the end, so the rows we’re looking for could be anywhere. And now, since the index isn’t organized by Score, we have to find all of the sql-server tagged questions, and sort all of them by score. That query plan really blows and takes forever.

Another suggestion was to only index on Score, and just put Tags in the includes:

|

1 |

CREATE INDEX Score ON dbo.Posts(Score) INCLUDE (Tags); |

The idea there is that the index will require less maintenance since Tags doesn’t need to be sorted. Sure – but that doesn’t make our query any faster, and we’re trying to get a faster query here.

Going even farther out of the box, a couple of readers suggested adding a nonclustered columnstore index like this:

|

1 2 |

CREATE NONCLUSTERED COLUMNSTORE INDEX NCCI ON dbo.Posts(Score, Tags); |

But that actually makes performance dramatically worse. I explain why in my Fundamentals of Columnstore class.

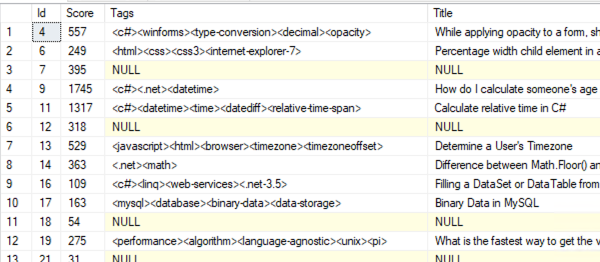

So regular nonclustered indexes aren’t gonna cut it. How about a full text index? Let’s look at the table screenshot from the challenge blog post:

Oof. Things like .net-3.5 are going to be a problem since SQL Server’s full text indexes ignore dashes. Alright, that’s out the door – let’s keep looking.

If you look at the screenshot with your special eyes, you’ll see your brand some rows don’t have Tags at all. However, our index on Score_Tags includes all rows of the table, whether they have null or populated Tags. For proof, check out sp_BlitzIndex and compare the sizes of the indexes, and you’ll see that the clustered index and the Score_Tags index both have the same number of rows.

Let’s see how much of the table has null Tags:

|

1 2 3 |

SELECT SUM(1) AS rows, SUM(CASE WHEN Tags IS NULL THEN 1 ELSE 0 END) AS tags_null FROM dbo.Posts; |

Results:

More than half of the table’s rows can’t possibly match our search. Wouldn’t it be cool if we could remove those from the index? Indeed, we can, with a filtered index.

|

1 2 3 |

CREATE INDEX Score_Tags_Filtered ON dbo.Posts(Score, Tags) WHERE Tags IS NOT NULL; |

Now, if we look for a rare tag, like sql-server-2022, we can avoid scanning those null rows:

|

1 2 3 4 5 6 7 8 9 |

SELECT TOP 100 * FROM dbo.Posts WHERE Tags LIKE '%<sql-server-2022>%' ORDER BY Score DESC; SELECT TOP 100 * FROM dbo.Posts WITH (INDEX = Score_Tags) WHERE Tags LIKE '%<sql-server-2022>%' ORDER BY Score DESC; |

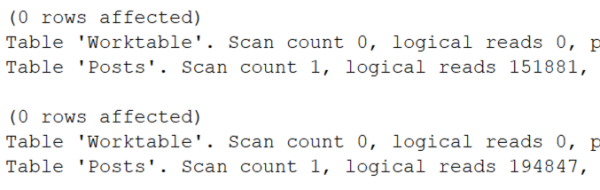

In those two queries, the top query lets SQL Server choose the index. The bottom one has an index hint saying, “SQL Server, I want you to use the old non-filtered index.” Here are their actual execution plans, and here’s the difference in logical reads:

The filtered index is good for about 25% less reads, and query runtime drops from 35.1 seconds to… 33.5 seconds.

That’s, uh, not good enough. So technically I’d say the answer to Q3 is that yes, we can improve performance with an index, but we’re only talking about percentage improvements, not order-of-magnitude improvements. We’re gonna need a bigger boat.

Finally, Thomas Franz had a really ambitious idea: build an indexed view that normalizes the contents of the Tags column, splitting it into Tag_1, Tag_2, Tag_3, Tag_4, and Tag_5 automatically. It was hard work to build that solution out, but It absolutely does work. I’m mentioning this last because it requires changes to the query and changes to the indexing, but I still totally consider it valid and I love it.

Sometimes, we gotta change the database.

This is a great example of the kind of performance problem you hit as data size grows over time. In the beginning, when tables are small, table design is less critical. As your application grows over time:

- There are more users, running more queries, simultaneously

- The size of the data they’re querying grows, so each query becomes more resource-intensive

- The distribution of data changes, and you’re more likely to have outliers with unique performance challenges

- The shortcuts and mistakes from the application’s beginning come back to haunt you

And that’s your next Query Exercise:

how would you change this design?

Keep in mind that:

- We have a lot of existing code that uses the Posts.Tags column

- That code does selects, inserts, and updates

- We want our changes to be backwards-compatible: old code still has to work

- We need to give developers an option for slow queries, letting them change those slow queries to get faster performance if they want it

That’s your challenge for this week! Post your answer in the comments (if you’re using code, try pasting the code in a Github Gist), discuss the ideas of others, and we’ll check back next week with another post with the thoughts and my own solution. Have fun!

11 Comments. Leave new

Since this weeks question is in the answers post of the previous question I’ll post my answer here.

I had a lot of fun writing all of this and testing these different solutions, I have to thanks Thomas Franz for the indexed view solution and Brent and Vedran Galic for the Trigram solution.

Let’s first review the requirements:

* We have a lot of existing code that uses the Posts.Tags column

* That code does selects, inserts, and updates

* We want our changes to be backwards-compatible: old code still has to work

* We need to give developers an option for slow queries, letting them change those slow queries to get faster performance if they want it

These requirements push us towards an initially database driven solution, as we want backwards-compatible and a opt-in policy for the application developers.

Normally I’m more a fan of constructive collaboration between the database, and application developers team, but alas, management is “forcing” us down a database driven path, oh well they are paying the licensing costs and we might “finally” get a beefier SQL Server *wink wink* (I know, who am I kidding).

Also we can’t modify the Posts table, as some process might be doing a SELECT * and they might not be able to handle extra columns in the result set.

There are a couple of solutions:

First we can create a PostTags table and populate that with the tags per post. While this solution is easy to set up initially:

https://gist.github.com/Montro1981/1414b72ee080d6ba685529c685316844

We also are going to need TRIGGERs to keep the PostTags table in sync. These TRIGGER need to handle at least UPDATEs and INSERTs. DELETEs are less of an issue due to the INNER JOIN in the query and tags from DELETE-ed posts can be cleaned up async.

So the TRIGGER would be something like (this handles INSERT, UPDATE, DELETE):

https://gist.github.com/Montro1981/ff63784d356c24519149c559306c838a

However there is another very nasty issue with this solution and it smells … parameter sniffing, say that some developer adds a Store Procedure like sp_Find_Top_Scored_Posts_With_Tag:

https://gist.github.com/Montro1981/b002b4bf7085375a203f645959a55e46

Hitting a often used tag first caches that plan, however searching a rare tag that reuses the cached plan and …. …. …. many tasty beverages later …. …. a result set was produced. The reason being the great difference in execution plans for both query: https://www.brentozar.com/pastetheplan/?id=rJJlfRwqp

Now we *could* add a WITH RECOMPILE band-aid to the Stored Procedure which would solve the parameter sniffing issue, but maybe there are other even better solutions.

Secondly, Thomas Franz made a solution with a materialized view (I created my own adaptation of it):

https://gist.github.com/Montro1981/95a27c9cdbf8205b5dfcfea5ea56cb00

The materialized view is “heavier” on the SQL, in more then one way:

1. The SELECT queries for often used tags do more logical reads and even goes parallel if the threshold is too low.

2. INSERT, UPDATE, DELETE queries forces the indexes to be updated which is more overhead per query. If we could knock the Score out from the view UPDATEs would only hit the view if the tags change, but right now the indexes might need to reshuffle pages on score updates.

Let’s see if parameter sniffing is a possible issue with the indexed views, we add a Store Procedure again sp_Find_Top_Scored_Posts_With_Tag_using_view:

https://gist.github.com/Montro1981/660188d4e636158d81f372ed98080c09

Parameter sniffing doesn’t seem to be an issue.

My third solution is to implement Trigrams searching (https://sqlperformance.com/2017/09/sql-performance/sql-server-trigram-wildcard-search). Setting this up is a bit of work and includes building a few table-valued functions:

https://gist.github.com/Montro1981/ab10d261ac5cee1096583539d1605f4c

Again we add complexity to our SELECT queries so they to “slower” due to more overhead. And we need to have to maintain our trigram table, for instance synchronously with a TRIGGER.

https://gist.github.com/Montro1981/47c3303ff7c5a1406836a90ab9c67ee6

Let’s see if parameter sniffing is a possible issue with the trigrams, we add a Store Procedure again sp_Find_Top_Scored_Posts_With_Tag_using_trigrams:

https://gist.github.com/Montro1981/b39dc663bee262c0360d98d820e1d5db

Parameter sniffing doesn’t seem to be an issue.

Problem with your first approach:

when you write direct into the dbo.PostTags table, it will not be reflected in dbo.Posts.Tags (you are still using this column).

And one part of the goal was, that the devs should be able to use faster queries (on the new structure) and in my opinion this does include INSERT/UPDATE/DELETE too (e.g. deleting the tag from all Posts (if there would be any).

And of course you are saving the tags twice (disk space / memory) and on the long term it is almost guaranted, that it will differ (maybe because someone disabled the trigger for a short maintenance or there are some edge cases …).

Ah yes, that is a nasty flaw in the design, thanks for pointing it out. It works one way but forgot to include a way to update the Tags column in the original table if the PostTags get updated. Classic oversight.

However even if I were to add something to keep the Tags in sync in that solution I wouldn’t implement that solution due to the susceptibility to parameter sniffing.

@Brent: you should really let us add attachments or at least insert formated code that didn’t get rid of all spaces and double dashes replaced by a wide dash.

Steps:

* rename original table to Posts_old (I skipped this step because I don’t want to mess up my database and used dbo.Post instead of dbo.Posts later)

* create a sequence object and init it with the highes PostId

* create a PostData table with all columns except the Tags, the id here will not be an identity but has a default for the sequence

* create a PostTags table with PostId and Tag (plus Id)

* create a cascading foreign key between both tables

* fill both tables from the original Posts table, use STRING_SPLIT to split the tags

* create a View that joins both tables (LEFT JOIN !) and uses STRING_AGG to concate the tags

* create an INSTEAD OF trigger for Insert / Update and Delete, otherwise we can’t write to the view (would break old code)

————————————————————-

CREATE SEQUENCE dbo.s_PostId AS INTEGER;

DECLARE @PostId INTEGER = (SELECT TOP 1 p.Id FROM dbo.Posts AS p ORDER BY p.Id DESC) /* TOP 1 is faster than a MAX(id), since it reads just 3 pages (index backward scan) instead of the whole table */

, @cmd VARCHAR(500)

SET @cmd = CONCAT(‘ALTER SEQUENCE dbo.s_PostId RESTART WITH ‘, @PostId)

EXEC (@cmd)

;

GO

/* =====================================================================================================================*/

GO

CREATE TABLE dbo.PostsData

(Id INT NOT NULL CONSTRAINT DF_PostsData_Id DEFAULT NEXT VALUE FOR dbo.s_PostId

, AcceptedAnswerId INT

, AnswerCount INT

, Body NVARCHAR(MAX) NOT NULL

, ClosedDate DATETIME

, CommentCount INT

, CommunityOwnedDate DATETIME

, CreationDate DATETIME NOT NULL

, FavoriteCount INT

, LastActivityDate DATETIME NOT NULL

, LastEditDate DATETIME

, LastEditorDisplayName NVARCHAR(40)

, LastEditorUserId INT

, OwnerUserId INT

, ParentId INT

, PostTypeId INT NOT NULL

, Score INT NOT NULL

, Title NVARCHAR(250)

, ViewCount INT NOT NULL)

;

GO

ALTER TABLE dbo.PostsData ADD CONSTRAINT PK_PostsData_Id PRIMARY KEY CLUSTERED (Id) WITH (DATA_COMPRESSION = PAGE); /* no need to waste space on disk and in RAM – except you are so CPU bound that can’t spare the 1-2% extra CPU */

GO

/* all columns except Tags*/

INSERT INTO dbo.PostsData (Id, AcceptedAnswerId, AnswerCount, Body, ClosedDate, CommentCount, CommunityOwnedDate, CreationDate, FavoriteCount, LastActivityDate, LastEditDate, LastEditorDisplayName, LastEditorUserId, OwnerUserId, ParentId, PostTypeId, Score, Title, ViewCount)

SELECT p.Id, p.AcceptedAnswerId, p.AnswerCount, p.Body, p.ClosedDate, p.CommentCount, p.CommunityOwnedDate, p.CreationDate, p.FavoriteCount, p.LastActivityDate, p.LastEditDate, p.LastEditorDisplayName, p.LastEditorUserId, p.OwnerUserId, p.ParentId, p.PostTypeId, p.Score, p.Title, p.ViewCount

FROM dbo.Posts AS p;

GO

/* if you have other indexes on dbo.Posts that not includes [Tags] add them here too*/

GO

GO

CREATE TABLE dbo.PostsTags ( Id BIGINT IDENTITY NOT NULL /* not INT, since there may be up to 5 times as many Tags as Posts and PostId is already an INT*/

CONSTRAINT pk_PostTags PRIMARY KEY NONCLUSTERED /* not really needed, but we will follow the usual scheme in the StackOverflow database */

, PostId INT NOT NULL

, Tag NVARCHAR(150) NOT NULL /*theoretical someone could have created a single Tag with 148 chars on a post*/

)

go

CREATE UNIQUE CLUSTERED INDEX iuc_PostsTags__PostId__Tag ON dbo.PostsTags (PostId, Tag) WITH (DATA_COMPRESSION = PAGE)

go

ALTER TABLE dbo.PostsTags ADD CONSTRAINT FK_PostsTags__PostsData

FOREIGN KEY (PostID)

REFERENCES dbo.PostsData (Id)

ON UPDATE CASCADE ON DELETE CASCADE; /* delete the Tags automatical, if the Post was deleted (and update the PostId if an Update happen, which is very unlikely, but you’ll never know */

GO

INSERT INTO dbo.PostsTags (PostId, Tag)

SELECT p.Id AS Post_id

, SUBSTRING(ss.value, 2, 150) AS Tag — remove the leading ”) AS ss

WHERE p.Tags IS NOT NULL /* don’t bother to try to split empty Tags*/

AND ss.value ” /* STRING_SPLIT() does not return NULL but empty strings after the last Tag */

GO

/* creating the index AFTER we filled the table will be faster then before the INSERT */

CREATE NONCLUSTERED INDEX iuc_PostTags__Tag ON dbo.PostsTags (Tag) INCLUDE (PostId) WITH (DATA_COMPRESSION = PAGE)

;

GO

/* =====================================================================================================================*/

/* =====================================================================================================================*/

CREATE OR ALTER VIEW dbo.Post /* used dbo.Post instead of dbo.Posts because I don’t want to mess up my StackOverflow database */

AS /* NEVER use SELECT * in a view – it can cause the content to be listed in the wrong columns when you drop a column from a source table */

SELECT pd.Id

, pd.AcceptedAnswerId

, pd.AnswerCount

, pd.Body

, pd.ClosedDate

, pd.CommentCount

, pd.CommunityOwnedDate

, pd.CreationDate

, pd.FavoriteCount

, pd.LastActivityDate

, pd.LastEditDate

, pd.LastEditorDisplayName

, pd.LastEditorUserId

, pd.OwnerUserId

, pd.ParentId

, pd.PostTypeId

, pd.Score

, pd.Title

, pd.ViewCount

, pt.Tags

FROM dbo.PostsData AS pd

LEFT JOIN (SELECT pt.PostId

, ” AS Tags /* add WITHIN GROUP (ORDER BY pt.Id) if you really need the original order (will reduce the performance) OR change the Clustered Index to (PostId + Id)*/

FROM dbo.PostsTags AS pt

GROUP BY pt.PostId

) AS pt

ON pt.PostId = pd.Id

GO

/* =====================================================================================================================*/

GO

CREATE OR ALTER TRIGGER tr_Post_before_insert ON dbo.Post

INSTEAD OF INSERT

AS

BEGIN

IF @@ROWCOUNT = 0 RETURN;

/* has to use a #temp table, since I found no way to let OUTPUT return Inserted.Id and i.Tags when inserting into the dbo.PostsData*/

CREATE TABLE #tags

(Id INT NOT NULL PRIMARY KEY /* using DEFAULT NEXT VALUE FOR dbo.s_PostId will not work for #temp tables (Object not found), since it is in the [tempdb], but it is no good idea to refer hardcoded to the database + schema + sequence*/

, AcceptedAnswerId INT

, AnswerCount INT

, Body NVARCHAR(MAX) NOT NULL

, ClosedDate DATETIME

, CommentCount INT

, CommunityOwnedDate DATETIME

, CreationDate DATETIME NOT NULL

, FavoriteCount INT

, LastActivityDate DATETIME NOT NULL

, LastEditDate DATETIME

, LastEditorDisplayName NVARCHAR(40)

, LastEditorUserId INT

, OwnerUserId INT

, ParentId INT

, PostTypeId INT NOT NULL

, Score INT NOT NULL

, Title NVARCHAR(250)

, ViewCount INT NOT NULL

, Tags NVARCHAR(150));

INSERT INTO #tags (Id, AcceptedAnswerId, AnswerCount, Body, ClosedDate, CommentCount, CommunityOwnedDate, CreationDate, FavoriteCount, LastActivityDate, LastEditDate, LastEditorDisplayName, LastEditorUserId, OwnerUserId, ParentId, PostTypeId, Score, Title, ViewCount, Tags)

SELECT NEXT VALUE FOR dbo.s_PostId AS Id

, AcceptedAnswerId, AnswerCount, Body, ClosedDate, CommentCount, CommunityOwnedDate, CreationDate, FavoriteCount, LastActivityDate, LastEditDate, LastEditorDisplayName, LastEditorUserId, OwnerUserId, ParentId, PostTypeId, Score, Title, ViewCount, Tags

FROM Inserted AS i

;

/* all columns except Tags */

INSERT INTO dbo.PostsData

( Id, AcceptedAnswerId, AnswerCount, Body, ClosedDate, CommentCount, CommunityOwnedDate, CreationDate, FavoriteCount, LastActivityDate, LastEditDate, LastEditorDisplayName, LastEditorUserId, OwnerUserId, ParentId, PostTypeId, Score, Title, ViewCount)

SELECT t.Id, AcceptedAnswerId, AnswerCount, Body, ClosedDate, CommentCount, CommunityOwnedDate, CreationDate, FavoriteCount, LastActivityDate, LastEditDate, LastEditorDisplayName, LastEditorUserId, OwnerUserId, ParentId, PostTypeId, Score, Title, ViewCount

FROM #tags AS t

;

INSERT INTO dbo.PostsTags (PostId, Tag)

SELECT t.Id, SUBSTRING(ss.value, 2, 150) AS Tag

FROM #tags AS t

CROSS APPLY STRING_SPLIT(t.Tags, ‘>’, 1) AS ss

WHERE t.Tags IS NOT NULL

AND ss.value ”

ORDER BY t.Id, ss.ordinal

;

END

GO

/* =====================================================================================================================*/

GO

CREATE OR ALTER TRIGGER tr_Post_before_update ON dbo.Post

INSTEAD OF UPDATE

AS

BEGIN

IF @@ROWCOUNT = 0 RETURN;

UPDATE pd

SET pd.AcceptedAnswerId = i.AcceptedAnswerId

, pd.AnswerCount = i.AnswerCount

, pd.Body = i.Body

, pd.ClosedDate = i.ClosedDate

, pd.CommentCount = i.CommentCount

, pd.CommunityOwnedDate = i.CommunityOwnedDate

, pd.CreationDate = i.CreationDate

, pd.FavoriteCount = i.FavoriteCount

, pd.LastActivityDate = i.LastActivityDate

, pd.LastEditDate = i.LastEditDate

, pd.LastEditorDisplayName = i.LastEditorDisplayName

, pd.LastEditorUserId = i.LastEditorUserId

, pd.OwnerUserId = i.OwnerUserId

, pd.ParentId = i.ParentId

, pd.PostTypeId = i.PostTypeId

, pd.Score = i.Score

, pd.Title = i.Title

, pd.ViewCount = i.ViewCount

FROM dbo.PostsData AS pd

INNER JOIN Inserted AS i

ON i.Id = pd.Id

/* before SQL 2022 use UPDATE() instead of pd. IS DISTINCT FROM i.*/

WHERE pd.AcceptedAnswerId IS DISTINCT FROM i.AcceptedAnswerId

OR pd.AnswerCount IS DISTINCT FROM i.AnswerCount

OR pd.Body IS DISTINCT FROM i.Body

OR pd.ClosedDate IS DISTINCT FROM i.ClosedDate

OR pd.CommentCount IS DISTINCT FROM i.CommentCount

OR pd.CommunityOwnedDate IS DISTINCT FROM i.CommunityOwnedDate

OR pd.CreationDate IS DISTINCT FROM i.CreationDate

OR pd.FavoriteCount IS DISTINCT FROM i.FavoriteCount

OR pd.LastActivityDate IS DISTINCT FROM i.LastActivityDate

OR pd.LastEditDate IS DISTINCT FROM i.LastEditDate

OR pd.LastEditorDisplayName IS DISTINCT FROM i.LastEditorDisplayName

OR pd.LastEditorUserId IS DISTINCT FROM i.LastEditorUserId

OR pd.OwnerUserId IS DISTINCT FROM i.OwnerUserId

OR pd.ParentId IS DISTINCT FROM i.ParentId

OR pd.PostTypeId IS DISTINCT FROM i.PostTypeId

OR pd.Score IS DISTINCT FROM i.Score

OR pd.Title IS DISTINCT FROM i.Title

OR pd.ViewCount IS DISTINCT FROM i.ViewCount

;

IF UPDATE(Tags) /* only when Tags was in the SET list of the UPDATE statement)*/

BEGIN

SELECT i.Id AS PostId, SUBSTRING(ss.value, 2, 150) AS Tag

INTO #tags

FROM Inserted AS i

CROSS APPLY STRING_SPLIT(i.Tags, ‘>’) AS ss

WHERE i.Tags IS NOT NULL

AND ss.value ”

;

INSERT INTO dbo.PostsTags (PostId, Tag)

SELECT *

FROM #tags AS t

WHERE NOT EXISTS (SELECT * FROM dbo.PostsTags AS pt WHERE pt.PostId = t.PostId AND pt.Tag = t.Tag)

;

DELETE pt

FROM dbo.PostsTags AS pt

INNER JOIN Inserted AS i /* without this it would delete all Tags from Posts that were not just updated */

ON i.Id = pt.PostId

WHERE NOT EXISTS (SELECT * FROM #tags AS t WHERE pt.PostId = t.PostId AND pt.Tag = t.Tag)

;

END;

END

GO

/* =====================================================================================================================*/

GO

CREATE OR ALTER TRIGGER tr_Post_before_delete ON dbo.Post

INSTEAD OF DELETE

AS

BEGIN

IF @@ROWCOUNT IS NULL RETURN;

DELETE pd

FROM dbo.PostsData AS pd

INNER JOIN Deleted d

ON d.Id = pd.Id

;

/* no need to delete from PostTags since there is a cascading Foreign Key which handles the DELETE */

END

;

GO

@Thomas – I wish I could quickly/easily turn WordPress Comments into a code-friendly system, but that’s way beyond my pay grade, heh. I’ll add a note to the posts going forward suggesting that folks put code in Github Gists, and just put the link in their comment.

okay, put it to Gists.Github for better reading

https://gist.github.com/samot1/5269da8ce06b47fe7f57f77a48f70247

You solution is quite similar to my first solution.

So what if you introduce a stored procedure that searches for a tag, and you search for a common tag first and then a rare tag?

Is the normalized table susceptible to parameter sniffing if used queried with a “proper” parametrized query?

my approach should not care about parameter sniffing (or at least not more than e.g. a customer vs. orders or orders vs. order_positions table).

I made two tables and both are indexed, so you can easily find any posts with any tags (regardless if it is often or seldom used) in the PostTags table.

Of course if you query the view with Post.Tags LIKE ‘%whatever%’ it will be still slow (as it is currently), but the goal was to change the database design to enable the developers to use a fast query (in this case they should simply look into the PostTags table) while the existing code still works the slow old way.

Thomas – fantastic work! It never would have occurred to me to switch Posts into a view, and then use triggers on the view to handle pushing the modifications to the right places. Excellent!

I’m stretching to think of problems with this approach. Foreign keys aren’t a problem – if someone had foreign keys in place, these changes are still totally doable.

I can imagin just one point where my approach will break the existing code – if the current code uses SET IDENTITY_INSERT ON/OFF for the dbo.Posts, since I had to replace the identity column by a sequence.

But playing with IDENTIDY_INSERT is nothing you should do on your productive system.

[…] the last Query Exercise discussion, we hit a performance wall when we were trying to quickly find questions with a specific tag. We threw up our hands and said it’s time to make database changes, but we had a few […]