Your challenge for this week is to tune a query. Say Stack Overflow has a dashboard that shows the top-ranking users in their most popular location. It’s even got an index to support it:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

CREATE INDEX Location ON dbo.Users(Location); GO CREATE OR ALTER PROC dbo.GetTopUsersInTopLocation AS SELECT TOP 200 u.Reputation, u.Id, u.DisplayName, u.WebsiteUrl, u.CreationDate FROM dbo.Users u WHERE u.Location = (SELECT TOP 1 Location FROM dbo.Users WHERE Location <> N'' GROUP BY Location ORDER BY COUNT(*) DESC) ORDER BY u.Reputation DESC; GO |

You can test it with any version of the Stack Overflow database. To test it, we’ll turn on a couple of tuning options:

|

1 2 3 4 |

/* Turn on actual query plans, and: */ SET STATISTICS IO ON; EXEC GetTopUsersInTopLocation; |

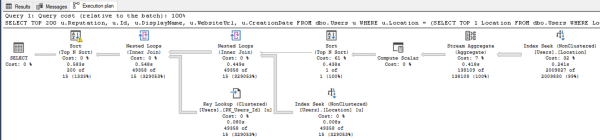

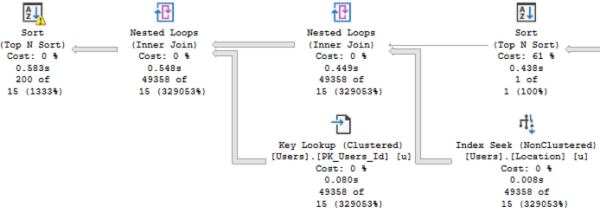

The actual execution plan does use our index – not just once, but twice:

But if you zoom in, there are a couple of problems:

Problem #1: SQL Server under-estimated the number of rows that’d come out of that Location index seek. It thought only 15 rows would come out, when in reality 49,358 rows came out.

That lowball estimate caused a couple other problems:

- The sort has a yellow bang because it spilled to disk. SQL Server didn’t allocate enough memory to do the sort because it thought it’d only be sorting 15 rows.

- We did 49,358 key lookups, so it might have been more efficient to just scan the whole table. You can test that by adding an INDEX = 1 hint like this in the stored procedure, and compare the logical reads before & after:

|

1 2 3 4 5 6 7 8 9 10 11 |

CREATE OR ALTER PROC dbo.GetTopUsersInTopLocation AS SELECT TOP 200 u.Reputation, u.Id, u.DisplayName, u.WebsiteUrl, u.CreationDate FROM dbo.Users u WITH (INDEX = 1) WHERE u.Location = (SELECT TOP 1 Location FROM dbo.Users WHERE Location <> N'' GROUP BY Location ORDER BY COUNT(*) DESC) ORDER BY u.Reputation DESC; GO |

We’re not concerned with query duration, spills, memory grants, etc in this particular challenge because those will vary a lot from one run to another, especially with 2019 & 2022 compatibility modes. Here, we’re only focused on reducing the number of logical reads. Your answer won’t have a dramatically reduced number – we’re just looking for more accurate estimates that drive lower logical reads, period. Any improvement will be a good one.

Post your solutions in the comments, and feel free to ask other folks questions about their solutions. We revisit the answers & discuss ’em in this post. Have fun!

33 Comments. Leave new

Well for accuracy we can use a local variable for the location and slap an option recompile on the query but that’s not the best thing. IO is slightly lower which you mentioned we won’t get a huge improvement, and estimates are much more accurate.

But hang tight while we all find a much better solution! 😀

CREATE OR ALTER PROC dbo.GetTopUsersInTopLocation

AS

declare @toplocation nvarchar(100) = (SELECT TOP 1 Location

FROM dbo.Users

WHERE Location N”

GROUP BY Location

ORDER BY COUNT(*) DESC)

SELECT TOP 200 u.Reputation, u.Id, u.DisplayName, u.WebsiteUrl, u.CreationDate

FROM dbo.Users u

WHERE u.Location = @toplocation

ORDER BY u.Reputation DESC

option(recompile)

GO

And I meant for plan/estimates accuracy

I went with the same first iteration and got this:

Table ‘Users’. Scan count 2, logical reads 630, physical reads 0, page server reads 0, read-ahead reads 0, page server read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob page server reads 0, lob read-ahead reads 0, lob page server read-ahead reads 0.

(1 row affected)

(200 rows affected)

Table ‘Worktable’. Scan count 0, logical reads 0, physical reads 0, page server reads 0, read-ahead reads 0, page server read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob page server reads 0, lob read-ahead reads 0, lob page server read-ahead reads 0.

Table ‘Users’. Scan count 1, logical reads 10990, physical reads 0, page server reads 0, read-ahead reads 0, page server read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob page server reads 0, lob read-ahead reads 0, lob page server read-ahead reads 0.

It split the scans and reads, but the total was still the same, 3 scans and 11620 logical reads.

Onward!

My results were ~1100 less reads – I’m using the 2013 version of stackoverflow, 150 compat level.

But there’s definitely a better way to go about this. This was just a quick, down and dirty attempt 😀

Assuming we care about plan reuse, and we don’t want to use a temp table for some reason:

CREATE OR ALTER PROCEDUREdbo.GetTopUsersInTopLocation

AS

DECLARE

@Location nvarchar(100) = N'';

SELECT TOP (1)

@Location = u.Location

FROM dbo.Users AS u

WHERE u.Location N''

GROUP BY

u.Location

ORDER BY

COUNT_BIG(*) DESC;

EXEC sys.sp_executesql

N'

SELECT TOP (200)

/*dbo.GetTopUsersInTopLocation*/

u.Reputation,

u.Id,

u.DisplayName,

u.WebsiteUrl,

u.CreationDate

FROM dbo.Users u

WHERE u.Location = @Location

ORDER BY

u.Reputation DESC;',

N'@Location nvarchar(100)',

@Location;

If using a temp table is reasonable:

CREATE OR ALTER PROCEDUREdbo.GetTopUsersInTopLocation

AS

SELECT TOP (1)

u.Location

INTO #Location

FROM dbo.Users AS u

WHERE u.Location N''

GROUP BY

u.Location

ORDER BY

COUNT_BIG(*) DESC;

SELECT TOP (200)

u.Reputation,

u.Id,

u.DisplayName,

u.WebsiteUrl,

u.CreationDate

FROM dbo.Users u

WHERE EXISTS

(

SELECT

1/0

FROM #Location AS l

WHERE l.Location = u.Location

)

ORDER BY

u.Reputation DESC;

At least in the SO2013 database, India has ~16k rows, and the next-highest location has ~9k, so temp table statistics caching probably isn’t much of a concern.

Love it! Nice work, sir.

Hi Brent!

I have personally learned a lot from you, and I deeply appreciate your guidance.

On my laptop, the original query results in 160,283 logical reads.

I rewrote it as follows:

The use of a temporary table results in a total of 12,059 + 1,372 logical reads.

Select Top 1 Location Into #Location From

dbo.Users

Where Location ”

Group By Location

Order By Count(*) Desc

Select Top 200 u.Reputation, u.Id,

u.DisplayName

From dbo.Users u

Inner Join #Location l

On l.Location = u.Location

Utilizing a Common Table Expression (CTE) leads to 12,663 logical reads.

;With Location AS

(

Select Top 1 Location From dbo.Users

Where Location ”

Group By Location

Order By Count(*) Desc

)

Select Top 200 u.Reputation, u.Id,

u.DisplayName

From dbo.Users u Inner Join Location l

On u.Location = l.Location

The utilization of variable and the OPTION (RECOMPILE) hint results in 12,059 + 1,372 logical reads.

Declare @Location Nvarchar(100);

Select Top 1 @Location = Location From

dbo.Users

Where Location ”

Group By Location

Order By Count(*) Desc

Select Top 200 u.Reputation, u.Id,

u.DisplayName

From dbo.Users u

Where u.Location = @Location

Option (Recompile)

Using variables without the OPTION (RECOMPILE) hint leads to 12,059 + 604 logical reads.

Declare @Location Nvarchar(100);

Select Top 1 @Location = Location From

dbo.Users

Where Location ”

Group By Location

Order By Count(*) Desc

Select Top 200 u.Reputation, u.Id,

u.DisplayName

From dbo.Users u

Where u.Location = @Location

Thanks!

@RollbackIsSingleThreaded good job! Nice work.

OK, here is my take. This has exactly accurate statistics, at the expense of speed on my system.

CREATE OR ALTER PROC dbo.GetTopUsersInTopLocation

AS

SELECT u.Reputation,

u.Id,

u.DisplayName,

u.WebsiteUrl,

u.CreationDate,

u.Location

INTO #users

FROM dbo.Users u;

CREATE NONCLUSTERED INDEX IDX_temp_users

ON #users (Location)

INCLUDE ([Reputation],[Id],[DisplayName],[WebsiteUrl],[CreationDate])

WITH (SORT_IN_TEMPDB=ON,FILLFACTOR=100);

SELECT TOP 1 Location INTO #location

FROM dbo.Users

WHERE Location N”

GROUP BY Location

ORDER BY COUNT(*) DESC;

SELECT TOP 200

u.Reputation,

u.Id,

u.DisplayName,

u.WebsiteUrl,

u.CreationDate

FROM #users u

JOIN #location AS l ON l.Location = u.Location

ORDER BY u.Reputation DESC;

GO

Original Proc: 3 scans, 11620 logical reads, 240 milliseconds

New Proc: 1204 milliseconds

Table ‘Users’. Scan count 9, logical reads 7778

Table ‘#users______________________________________________________________________________________________________________0000000000BA’. Scan count 9, logical reads 2743

Table ‘Users’. Scan count 2, logical reads 630

Table ‘#users______________________________________________________________________________________________________________0000000000BA’. Scan count 1, logical reads 54

Table ‘#location___________________________________________________________________________________________________________0000000000BB’. Scan count 1, logical reads 1

Total logical reads dropped to 11,204 and plan estimation went from wildly upside down (3,656 of 9) to exactly correct. The final SELECT had an index seek against the #users temp table nonclustered index that estimated 3,656 rows and retrieved 3,656 rows.

As an exercise in better estimation I’d call it a success, but as for raw performance I’d stick to some version of the original.

you mustn’t make any changes to the indexes etc. Just the procedure itself.

Yep, all changes are made inside the stored procedure. The index I created is on the temp table. It is a common “trick” to get around the limitation of not being able to modify indexes on the main tables.

@Bill Finch just to make sure I understand – you’re loading the entire contents of the Users table into a temp table, and you’re getting lower logical reads? I might want to see the execution plans on those, or know which version of the Stack database you’re using, because I couldn’t reproduce your results as having less reads.

Brent,

I am using the StackOverflow2010 database, running on SQL Server 2022 Developer. I’d be happy to share the execution plans, I have PNGs of them.

Ah! Gotcha, yeah, that one’s pretty tiny. I haven’t tested with that one in years. I should tweak the homework posts to say we should use at least the 2013 database. Sorry about that!

Instead of using not equal (Location ne N””) we use (Location gt N”) you can save not much but some IO.

True. If you change the subquery

SELECT TOP 1 Location FROM dbo.Users WHERE Location N” GROUP BY Location ORDER BY COUNT(*) DESC

to

SELECT TOP 1 Location FROM dbo.Users WHERE Location > N” GROUP BY Location ORDER BY COUNT(*) DESC

it will save 4 reads. So technical you solved the goal to archive fewer reads (but the problem with spills-to-tempdb and wrong estimations still exists)

on Erik’s solution

Et voila, without a recompile or dynamic sql:

CREATE OR ALTER PROC dbo.GetTopLocation

@TopLocation AS nvarchar(100) OUTPUT

AS

SELECT TOP(1) @TopLocation = [Location]

FROM dbo.Users

WHERE [Location] N''

GROUP BY [Location]

ORDER BY COUNT(*) DESC;

GO

CREATE OR ALTER PROC dbo.GetTopUsers

@TopLocation AS nvarchar(100)

AS

SELECT TOP(200) u.[Reputation], u.[Id], u.[DisplayName], u.[WebsiteUrl], u.[CreationDate]

FROM dbo.Users u

WHERE u.[Location] = @TopLocation

ORDER BY u.[Reputation] DESC;

GO

CREATE OR ALTER PROC dbo.GetTopUsersInTopLocation

AS

DECLARE @TopLocation AS NVARCHAR(100);

EXEC dbo.GetTopLocation @TopLocation OUTPUT;

EXEC dbo.GetTopUsers @TopLocation;

GO

For StackOverflow2013, reads drop from 49,496 to 47,560 pages.

For StackOverflow2010, reads drop from 11,619 to 8,032 pages.

And the plan shows actuals vs estimates at 100% all the way down the line.

@Don’t Bother Asking nicely done! Very good.

Looking forward to Brent’s opinion on what is the best solution for this problem!

Without testing it on the DB, I would also try this initially:

1. Store the TOP 1 location in a variable and use that in the WHERE of the outer query

2. Try using a temp table to store the location in and INNER JOIN that temp table

However looking at the indexes (non clustered for Location and clustered for UserId), I think we could try this:

–Get top 1 Location from the Location Non clustered index

DECLARE @TopLocation NVARCHAR(100) = (

SELECT TOP 1 Location

FROM dbo.Users

WHERE Location N”

GROUP BY Location

ORDER BY COUNT(*) DESC)

–Get top 200 Users from the Location Non clustered index

DROP TABLE IF EXISTS #TopLocUsers

SELECT TOP 200 UserId FROM INTO #TopLocUsers dbo.Users WHERE Location = @TopLocation ORDER BY u.Reputation DESC

–Inner join on Temp table without needing a key lookup

SELECT u.Reputation, u.Id, u.DisplayName, u.WebsiteUrl, u.CreationDate

FROM dbo.Users u

INNER JOIN #TopLocUsers TopU ON U.UserId = TopU.UserId;

would not help in any way, since the Reputation is not part of the Location-Index (and we mustn’t change it), so your TOP 200-query would still need to do a keylookup which will again cause the spill to tempdb / has wrong estimates.

You need to put all users at the top location into the temp (even if it are 15k).

Original Query: 49496 Reads + Spill to TempDB

New Query : 48289 Reads and no Spill

Solution: since the index does neither contain the Reputation nor the Id / DisplayName etc., it always needs to lookup in the clustered index. So we could simply split the query into two queries.

The first query will put all user_ids of the most common location into a #tmp table (no @table_variable – it would make the problem worse, since SQL Server assumes that there is just one row in it every time). The table is clustered by its ID column, so it is included in every nonclustered index as the one on the location column -> the query does not need any key lookup.

The second query joins the #tmp and the user table using the clustered primary key. Since SQL Server knows how many rows are in the #tmp it estimates the correct number, reserves enough memory and do no longer spill to TempDB.

You could lay out the SELECT TOP 1 into another step (DECLARE @location NVARCHAR(100) = (SELECT…)), but this would not provide more benefits / lesser reads.

The most perfomance gain you could get would be to add the Reputation to the Location index (in this case you could limit the first query to 200 or even use a subquery with the top 200 in the original procedure), but it is forbitten 🙂 – and would of course cause tons of updates on the index, since the reputation changes often.

—–

CREATE OR ALTER PROC dbo.GetTopUsersInTopLocation

AS

SELECT u.Id

INTO #tmp

FROM dbo.Users AS u

WHERE u.Location = (SELECT TOP 1 Location FROM dbo.Users WHERE Location N” GROUP BY Location ORDER BY COUNT(*) DESC)

SELECT TOP 200

u.Reputation

, u.Id

, u.DisplayName

, u.WebsiteUrl

, u.CreationDate

FROM dbo.Users AS u

INNER JOIN #tmp AS t

ON t.Id = u.Id

ORDER BY u.Reputation DESC;

GO

Hi Thomas, the “problem” with this solution is that the total number of reads for the whole thing is higher then the reads from the original query (on my machine with SO2013), plus the engine is still doing a big overestimation on the first query.

I know Brent mentioned that eliminating the overestimation isn’t the goal of this week exercise. Reducing the number of reads, however, is. And you just increased them 🙂

Sorry, I misread my STATISTICS IO output 😉

@Thomas Franz very good! I’d challenge this assumption though: “no @table_variable – it would make the problem worse, since SQL Server assumes that there is just one row in it every time” – test that assumption on current SQL Server versions & compat levels. But good overall!

[…] Query Exercise: Improving Cardinality Estimation (Brent Ozar) […]

StackOverflow2013, compat level SQL server2022 (160).

Holding SQL servers hand all the way (first get the top location, then get the users in this top location, then get the rest of the information) gives both 1855 less read (3% profit) and 100% correct estimations.

—-

CREATE OR ALTER PROC dbo.GetTopUsersInTopLocation

AS

CREATE TABLE #TopLocation

(

[Location] nvarchar(100) PRIMARY KEY CLUSTERED

);

INSERT INTO #TopLocation (Location)

SELECT TOP 1 Location

FROM dbo.Users

WHERE Location != N”

GROUP BY Location

ORDER BY COUNT(*) DESC

CREATE TABLE #UsersInTopLocation

(

Id int PRIMARY KEY CLUSTERED

);

INSERT INTO #UsersInTopLocation (Id)

SELECT Id

FROM dbo.Users AS u

WHERE EXISTS

(

SELECT NULL

FROM #TopLocation AS tl

WHERE u.Location = tl.Location

);

SELECT TOP 200 u.Reputation, u.Id, u.DisplayName, u.WebsiteUrl, u.CreationDate

FROM dbo.Users AS u

WHERE EXISTS

(

SELECT NULL

FROM #UsersInTopLocation AS uitl

WHERE u.Id = uitl.Id

)

ORDER BY u.Reputation DESC;

GO

SET STATISTICS IO ON;

EXEC GetTopUsersInTopLocation;

–#TopLocation = 3

–Users = 3030

–#TopLocation = 2

–Users = 50

–#UsersInTopLocation = 27

–Users 44529

–Total reads: 3 + 3030 + 2 + 50 + 27 + 44529: 47641 (1855 reads less, 3% profit)

–100% correct estimations.

SET STATISTICS IO OFF;

GO

Gits with all my attempts:

https://gist.github.com/Montro1981/2b130f99567d3c9c4155d403e1baf0a6

And the exec plan:

https://www.brentozar.com/pastetheplan/?id=rkQqf3mip

Using a Clustered Index on the #UsersInTopLocation is a good idea, it allows a MERGE Join instead of a HASH MATCH. On the other hand it seems to depending on the MAXDOP-Settings, on my PC it goes always parallel and uses a HASH JOIN, except I force MAXDOP=1. On the other hand this does not effect the read-counts (just the total execution / CPU time a little bit)

And I wondered, why are you using a table with just a single row for the top location, instead of a simple variable. Then I tested it out and found, that SQL server behaves VERY strange.

If I create the table with the UserIDs with an clustered index the insert into this #tmp table creates ~ 30k additional reads onto the #tmp when I select the User table for u.Location = @location. First I thought, this is because it has to check the uniquiness (PK), but it even happens, when I create just a nonunique clustered index on the #tmp. And I have absolute no idea, what it does those reads (or what it is reading).

@Brent: maybe you (or someone else) have an idea…

On the other hand with your WHERE EXISTS (SELECT NULL FROM #topLocation …) it skips those strange, unnecessary reads.

PS: Microsoft SQL Server 2022 (RTM-CU10-GDR)

My Threshold for parallelism is at 50.

> And I wondered, why are you using a table with just a single row for the top location, instead of a simple

variable. Then I tested it out and found, that SQL server behaves VERY strange.

Yeah, while using the variable the SQL engine seriously overestimates on the #UsersInTopLocation table, with the table (even with only 1 row) it get the estimate right.

With the variable SQL generates a TRIVIAL plan, so it doesn’t go looking for a better plan.

With the table SQL is forced to do a FULL optimization plan and the temp table (even with only 1 row) get a nice Nested Loops join.

@Montro1981 hahaha, I love the fact that the variable gets a trivial plan, that’s awesome!

Interesting fact, but I doubt, that there is a much better plan (MERGE vs. HASH seems not to make a difference regarding the reads, MERGE should be just a bit better CPU wise since it hasn’t to create this hash WORKTABLE).

My problem is not the final SELECT but the INSERT INTO the #UserId table, where it (on my PC) seems to do a lookup in the #UserId for every row (otherwise I have no idea why it should read this table > 30k times while inserting the 15k ids from Indian users)