Known Issues So Far in SQL Server 2025

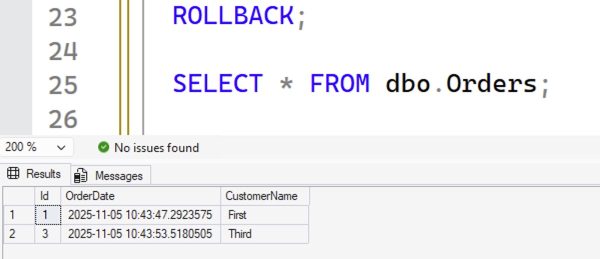

Whenever a brand spankin’ new version of any software comes out, there are bugs, and SQL Server is no exception. This has led to a mentality where folks don’t wanna install a new version of SQL Server until the first couple of Cumulative Updates come out, hopefully fixing the first big round of bugs.

So… are there bugs this time around?

Microsoft maintains a list of SQL Server 2025 known issues, and honestly, they’re not bad! There’s stuff in here that would have sucked to be the person to learn for the first time, but no showstoppers as far as I’m concerned. Some of the highlights:

On readable secondaries, you can get access violations if you enable Query Store without disabling Parameter Sensitive Plan Optimization (PSPO). The workaround is to disable PSPO.

Auditing events don’t write to the security log. The workaround is to write to a file instead, or, like I’ve always told clients, if you need your auditing to be legally defensible, you need to use a third-party appliance that sits in between SQL Server and the rest of the network, capturing all network packets.

Full-text search won’t fully index big plaintext documents larger than 25MB. The workaround is to edit the registry to remove the 25MB limit.

It won’t install without TLS 1.2. I’ve had a couple of clients whose sysadmins had a little too much time on their hands, and insisted on turning off TLS 1.2 everywhere because “it’s deprecated.” For now, the fix is… re-enable TLS 1.2, do the install, and then turn it back off again.

It won’t install if you have >64 cores per CPU. This has been a problem with 2022 as well, and I’m simplifying that for the sake of the headline: the technical details are a little more complicated. The most common fix I’ve seen is to use virtualization, and configure the VM’s socket/core setup so that you have more sockets, but fewer cores per socket.
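If you want to see which VM layouts would dodge that limit, here’s a rough Python sketch. It assumes the simplified headline rule (no more than 64 cores per socket), which isn’t the full story, and the function name `socket_layouts` is just something I made up for illustration. It lists every way to split a given core count evenly across sockets while staying at or under the limit.

```python
def socket_layouts(total_cores: int, max_cores_per_socket: int = 64) -> list[tuple[int, int]]:
    """Return (sockets, cores_per_socket) pairs that split total_cores evenly
    while keeping cores_per_socket at or below the limit.

    Assumption: the limit is the simplified "64 cores per socket" rule from
    the headline. The real rules are more complicated.
    """
    if total_cores < 1 or max_cores_per_socket < 1:
        raise ValueError("total_cores and max_cores_per_socket must be positive")

    layouts = []
    for sockets in range(1, total_cores + 1):
        # Only consider even splits, so every socket gets the same core count.
        if total_cores % sockets == 0:
            cores_per_socket = total_cores // sockets
            if cores_per_socket <= max_cores_per_socket:
                layouts.append((sockets, cores_per_socket))
    return layouts


if __name__ == "__main__":
    # A hypothetical 128-core VM: 1 socket x 128 cores is out, 2 x 64 is the
    # layout with the fewest sockets that stays under the limit.
    for sockets, cores in socket_layouts(128):
        print(f"{sockets} sockets x {cores} cores per socket")
```

The layout with the fewest sockets comes first in the list. Whether that’s the one you want depends on your licensing and NUMA setup, which this sketch doesn’t model.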

PowerShell doesn’t work if you enforce strict encryption. The fix: turn off strict encryption. I find this amusing because the kinds of proactive people who use PowerShell are also the kinds of proactive people who would enforce strict encryption.

SQL auth logins are slower, although you probably won’t notice this unless you aren’t using connection pooling and you’re tracking login times at scale, as Aaron Bertrand notes.
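To get a feel for why pooling hides this, here’s a back-of-the-napkin Python sketch. Every number in it is a placeholder I picked for illustration, not a measurement of the actual slowdown, and the function `daily_login_overhead_seconds` is hypothetical. It also assumes pooled connections log in once and get reused all day, which ignores reconnects and pool recycling.

```python
from typing import Optional


def daily_login_overhead_seconds(
    requests_per_day: int,
    extra_ms_per_login: float,
    pooled_connections: Optional[int] = None,
) -> float:
    """Estimate the extra seconds per day spent on slower logins.

    Without pooling (pooled_connections is None), every request pays the
    login cost. With pooling, only the pooled connections log in, under the
    simplifying assumption that each one logs in once and is then reused.
    """
    if pooled_connections is None:
        logins = requests_per_day
    else:
        logins = min(pooled_connections, requests_per_day)
    return logins * extra_ms_per_login / 1000.0


if __name__ == "__main__":
    # Placeholder inputs: 1,000,000 requests a day, 10 ms of extra login time.
    print(daily_login_overhead_seconds(1_000_000, 10))      # no pooling
    print(daily_login_overhead_seconds(1_000_000, 10, 50))  # pool of 50
```

With those made-up inputs, the unpooled app spends thousands of extra seconds a day logging in, while the pooled app spends a fraction of a second. That’s why you’d only see this if you skip pooling and measure logins at scale.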

There are others in the full list, and surely there are more that are currently being investigated and haven’t been fully solved/documented yet, but overall – you know what, this isn’t bad! Knock on wood, this is shaping up to be one of the better, more reliable releases so far. Have you hit any bugs that aren’t in the list above? Let your fellow readers know in the comments.

Readers like you are what make it possible for me to spend so much time giving back to the community, building tools like this and giving ’em away for free.

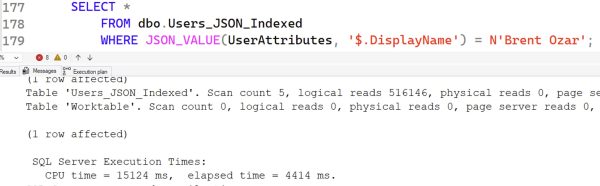

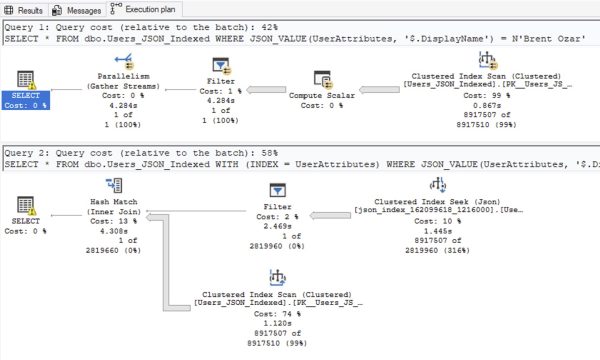

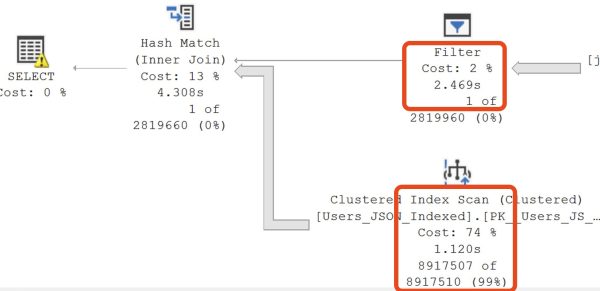

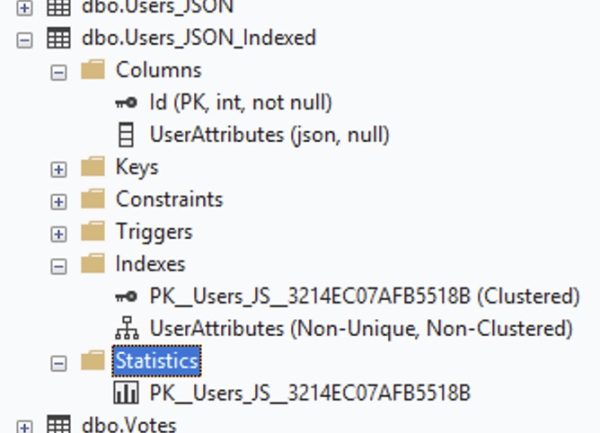

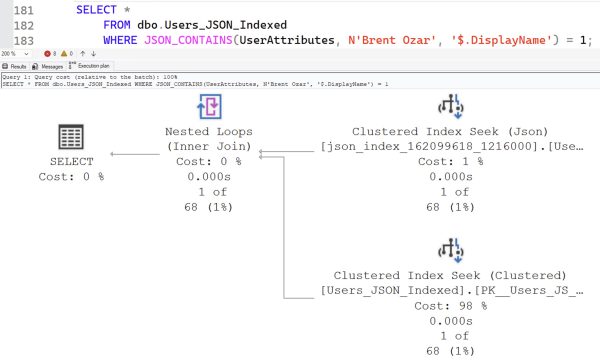

SQL Server 2025 and .NET 10 bring several new improvements to storing JSON natively in the database and querying it quickly.

SQL Server 2025 and .NET 10 bring several new improvements to storing JSON natively in the database and querying it quickly.