This one’s a pretty quiet release: just bug fixes in sp_Blitz, sp_BlitzLock, and sp_DatabaseRestore.

To get the new version:

- Download the updated FirstResponderKit.zip

- Azure Data Studio users with the First Responder Kit extension: ctrl/command+shift+p, First Responder Kit: Import.

- PowerShell users: run Install-DbaFirstResponderKit from dbatools

- Get The Consultant Toolkit to quickly export the First Responder Kit results into an easy-to-share spreadsheet

Consultant Toolkit Changes

I updated it to this month’s First Responder Kit, but made no changes to querymanifest.json or the spreadsheet. If you’ve customized those, you don’t need to change anything this month: just copy your spreadsheet and querymanifest.json into the new release’s folder.

sp_Blitz Changes

- Fix: update unsupported SQL Server versions list. Time marches on, SQL Server 2016 SP2. (#3274, thanks Michel Zehnder and sm8680.)

- Fix: if you ran sp_Blitz in databases other than master, we weren’t showing the alerts on TDE certificates that haven’t been backed up recently. (#3278, thanks ghauan.)

sp_BlitzLock Changes

- Enhancement: compatibility with Azure Managed Instances. (#3279, thanks Erik Darling.)

- Fix: convert existing output tables to larger data types. (#3277, thanks Erik Darling.)

- Fix: don’t send output to client when writing it to table. (#3276, thanks Erik Darling.)
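If you haven't logged sp_BlitzLock to a table before, here's a minimal sketch of what that call might look like. The DBAtools database name is made up for illustration, and the schema and table names are just assumed values, so swap in whatever you actually use.

```sql
-- Sketch only: DBAtools is a hypothetical utility database, not something the kit creates for you.
EXEC dbo.sp_BlitzLock
    @OutputDatabaseName = N'DBAtools',   -- assumed database to hold the results
    @OutputSchemaName   = N'dbo',        -- assumed schema
    @OutputTableName    = N'BlitzLock';  -- assumed table name

-- Read back whatever got logged. Column names are deliberately not listed here,
-- since they depend on the version of the proc you have installed.
SELECT TOP (10) *
FROM DBAtools.dbo.BlitzLock;
```

With the fix in #3276, a call like this should write its rows to the table without also returning the result sets to your session.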

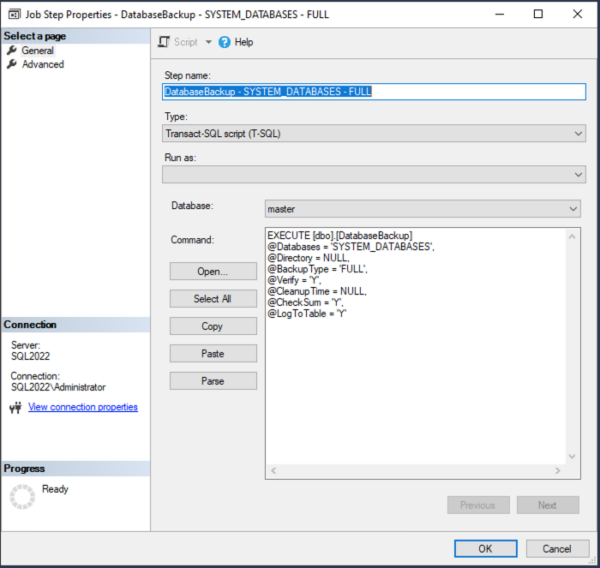

sp_DatabaseRestore Changes

- Improvement: new @FixOrphanUsers parameter. When 1, once restore is complete, sets database_principals.principal_id to the value of server_principals.principal_id where database_principals.name = server_principals.name. (#3267, thanks Rebecca Lewis.)

- Fix: better handling of last log files for split backups when using @StopAt. (#3269, thanks Rebecca Lewis.)

- Fix: corrected regression introduced in 8.11 that caused non-striped backups to no longer be deleted. (#3262, thanks Steve the DBA.)
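As a rough sketch of how the first two items above fit together, here's a point-in-time restore that also asks for the orphaned-user fix. The database name, backup paths, and timestamp are made up for illustration, and I'm assuming the backups sit in separate FULL and LOG folders. Only @FixOrphanUsers and @StopAt come from the notes above.

```sql
-- Sketch only: database name, paths, and timestamp are hypothetical.
EXEC dbo.sp_DatabaseRestore
    @Database       = N'LogShipMe',
    @BackupPathFull = N'D:\Backup\SQL2016PROD1A\LogShipMe\FULL\',
    @BackupPathLog  = N'D:\Backup\SQL2016PROD1A\LogShipMe\LOG\',
    @ContinueLogs   = 0,                  -- start from the full backup
    @RunRecovery    = 1,                  -- bring the database online when done
    @StopAt         = N'20230515083000',  -- stop applying logs here, in YYYYMMDDHHMMSS format
    @FixOrphanUsers = 1;                  -- new this release: fix orphaned users after the restore
```

Try it against a test server first: point-in-time restores with split backups are exactly the scenario the @StopAt fix touches.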

For Support

When you have questions about how the tools work, talk with the community in the #FirstResponderKit Slack channel. Be patient: it’s staffed by volunteers with day jobs. If it’s your first time in the community Slack, get started here.

When you find a bug or want something changed, read the contributing.md file.

When you have a question about what the scripts found, first make sure you read the “More Details” URL for any warning you find. We put a lot of work into documentation, and we wouldn’t want someone to yell at you to go read the fine manual. After that, when you’ve still got questions about how something works in SQL Server, post a question at DBA.StackExchange.com and the community (that includes me!) will help. Include exact errors and any applicable screenshots, your SQL Server version number (including the build #), and the version of the tool you’re working with.
