Someone told you that you should be using Ola Hallengren’s Maintenance Scripts. You’ve downloaded his Maintenance Solution.sql, you ran it in the master database, and … now you’re not sure what’s supposed to happen next. In this post, we’ll cover how to configure backups.

First, configure the Agent jobs.

When MaintenanceSolution.sql ran, it created a few Agent jobs. You can see them in SSMS or Azure Data Studio under SQL Server Agent, Jobs:

Ola created those jobs, but didn’t configure or schedule them, because they need information specific to your environment. In this post, we’re only going to focus on the DatabaseBackup jobs.

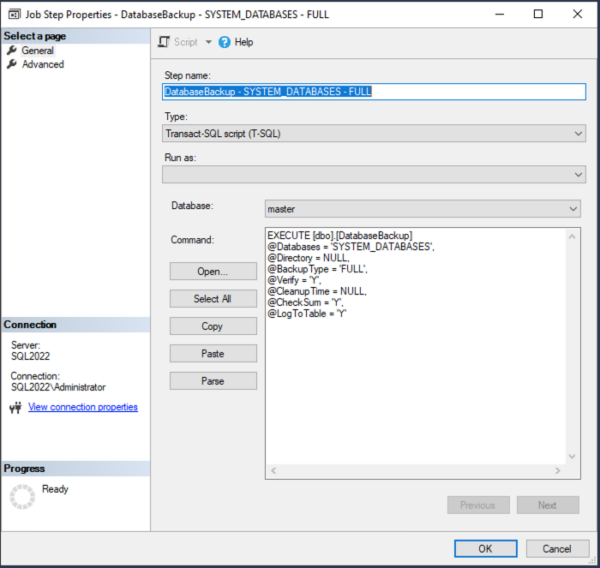

We’ll start with DatabaseBackup – SYSTEM_DATABASES – FULL. This job backs up your master, model, and msdb databases, which sounds really simple, but it’s a good place to start because even a job this simple needs configuration. Right-click on the job, click Properties, Steps, then click on the only step in the job and click Edit.

Here’s what the job does by default:

|

1 2 3 4 5 6 7 8 |

EXECUTE [dbo].[DatabaseBackup] @Databases = 'SYSTEM_DATABASES', @Directory = NULL, @BackupType = 'FULL', @Verify = 'Y', @CleanupTime = NULL, @CheckSum = 'Y', @LogToTable = 'Y' |

A couple of those parameters need explanation and configuration.

@Directory = NULL means that Ola uses the default backup path for your server. To see what that is, right-click on your server in SSMS or ADS, go into Properties, Database Settings, and it’s the “Database default locations” here:

That backup path may not be what you want, so change it at the server level in this screenshot, not in Ola’s jobs. Just change it once at the server level, and it’ll take effect everywhere in any script that doesn’t specify where the backups should go.

Side note – I prefer writing backups to a UNC path, like \\backuptarget\foldername, rather than local storage, and here’s why.

While you’re in that screen, look up just a little, and you’ll see two other checkboxes:

- Compress Backup – check that so your backups run faster, and

- Backup Checksum – check that so SQL Server does a little bit of corruption checking while it’s doing the backups (this won’t slow your backups down)

Once you’ve configured those server-level settings, let’s go back to that Ola job step. Leave the @Directory parameter null, and it’ll inherit the server-level backup path. Next parameter…

@Verify = ‘Y’ means that after the database backup finishes, SQL Server will do a test restore to make sure the backup file is valid. That sounds good, but it makes your backup jobs take way longer, and it hurts performance of other stuff while it runs. If you want to verify your backups, you’re much better off restoring them onto a different server. Me, I recommend changing this to @Verify = ‘N’. You can edit it right there onscreen.

@CleanupTime = NULL means that this job won’t delete old backup files. If you would like old backups deleted, replace NULL with the number of hours for older files to be deleted. (3 days is 72 hours, 1 week is 168 hours. You’re welcome. I suck at math too.)

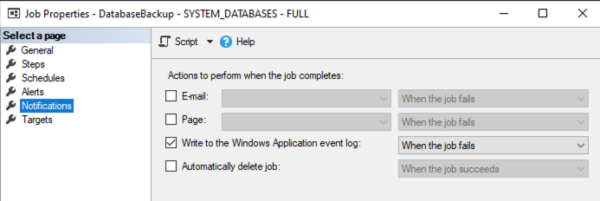

We’re done configuring the step. Click OK, then click Notifications, and it’ll look like this:

I dunno about you, but I wanna know when things go bump in the night, so click the Email checkbox and choose the group that you want to be notified when the job fails. If that dropdown is empty, here’s how to configure database mail. Note that you’ll need to do that, then restart SQL Server Agent, before you can come back to finish configuring these scripts.

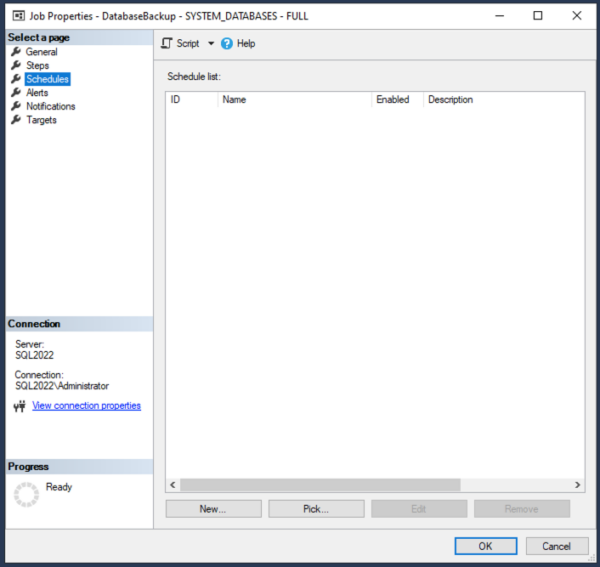

Last up, click Schedules. You’ll notice there’s no schedule:

It’s up to you to define the schedules for each job. Click New. If you want them backed up every night at 8PM, for example, here’s how you’d set up that screen:

Don’t schedule all backups simultaneously.

Folks seem to default all of their severs to run backups at midnight.

That’s a terrible idea.

Because then at 23:59:59, all of your servers are staring at their Apple Watches, waiting for the exact moment to simultaneously bum-rush the storage and the network. The lights in the datacenter will suddenly dim, fans will scream, and all of the servers will do a distributed denial of service attack on themselves.

Instead, stagger your schedules out as much as you can. If you’re reading this post to learn this topic, then you have four jobs that likely want to run every night: system database backups, user database backups, index optimization, and integrity checks. Those four jobs, across all your servers, should be spread out as much as possible.

The good news is that the system database full backups will be super quick because the system databases are small. Just pick a time when user activity has tapered off for the day. Odds are, you’re never going to need to restore a system database, so I’m less concerned with the exact time of day on these. (Plus, we can’t do transaction log backups on these.) The biggest reason I back these databases up is that people accidentally create objects in the system databases, and then ask me to restore ’em.

After configuring the job’s schedule, click OK, and we’re done configuring the system backups!

OMG, that was a lot of work.

Next up, the user database backups.

The user database jobs are a little more complex. There are separate jobs and schedules for full, differential, and transaction log backups. I’m not going to cover the differences between those here, but I am going to talk about the most common parameters that you’ll want to set on each of the jobs. This is above and beyond the parameters we discussed above, which also need to be set on user database jobs.

Ola’s DatabaseBackup stored proc has a lot of parameters that don’t show up in the default jobs, but I think you should set them.

@ChangeBackupType = ‘Y’ should be added to your list of parameters. What this does is if a brand new database gets added to your server midday, and your transaction log backup job runs, the log backup job will say, “Hey, I can’t do a log backup because there hasn’t been a full backup of this brand new database yet – so I’m going to change the backup type to full for this database.” This gets you coverage right from the get-go when new databases are created in full recovery model.

@NumberOfFiles = 4 should be added for performance on the full backup job. SQL Server backups go faster when they’re striped across multiple files due to SQL Server’s own internal limitations, nothing to do with your storage. If you want to spend time performance tuning, you may find that 6 or 8 backup files might even improve performance more, but for starters, let’s at least do 4 files. It doesn’t require any more work on your part, even at restore time, because you’re going to use DatabaseRestore, an automated tool, for your restores.

@MinBackupSizeForMultipleFiles = 10000 should be added so that we only mess with multiple backup files when the database is 10GB or larger. Smaller than that, I’m not really concerned about backup performance – 1 file should be fine.

There are many, many more backup parameters you can set for things like encryption, third party backup software, parallel backup jobs, and performance tuning, but those 3 are the most important, and the ones I usually set everywhere. So here’s what my “full” step looks like, for example:

|

1 2 3 4 5 6 7 8 9 10 11 |

EXECUTE [dbo].[DatabaseBackup] @Databases = 'USER_DATABASES', @Directory = NULL, @BackupType = 'FULL', @Verify = 'N', @CleanupTime = 168, @CheckSum = 'Y', @LogToTable = 'Y', @ChangeBackupType = 'Y', @NumberOfFiles = 4, @MinBackupSizeForMultipleFiles = 10000; |

Schedule these backup jobs as well – typically once a day for the full backup job, and every 5 minutes (or every minute, really) for the log backup job.

Finally, test the backups by running a restore.

After the backup jobs run, you’ll end up with files organized in this folder structure:

\ServerName\DatabaseName\FULL

\ServerName\DatabaseName\DIFF

\ServerName\DatabaseName\LOG

That might seem awkward if you’re used to using the GUI to point-and-click your way through database restores. Fortunately, there’s a really easy solution for that: sp_DatabaseRestore, a free stored procedure in our open source First Responder Kit. To restore a database to the most recent point-in-time, you can run:

|

1 2 3 4 5 6 |

EXEC dbo.sp_DatabaseRestore @Database = 'StackOverflow', @BackupPathFull = '\\BackupServer\SQLBackups\SQL2022\StackOverflow\FULL\', @BackupPathLog = '\\BackupServer\SQLBackups\SQL2022\StackOverflow\LOG\', @ContinueLogs = 0, @RunRecovery = 1; |

That’ll automatically pull the most recent full backup from that folder, plus all the transaction logs since, and restore them in order. Easy, right? Super helpful when you want to keep a development or QA server up to date. There are more parameters for things like differential backups, moving data files, running CHECKDB, and more – for those, hit the documentation.

One of the many reasons why you’ll want to back up directly to a network path is so that you can run restores from other servers without slowing down production. Test your restores on other environments, like your DR or dev/QA/test environments, and you’ll know with confidence that your newly created backup jobs are working well.

For support questions on how the scripts work, visit the #SQLhelp channel in the SQL Server community Slack – invites available here – or post questions on DBA.StackExchange.com or the SQLServerCentral forums. Do not leave support questions here in the comments – I’ll simply ignore them since you didn’t read the post through to the end. However, if you’ve got questions about the parameters or process above, feel free to leave those and I’ll work through ’em.

17 Comments. Leave new

Thank you! I’ve heard about these for years (here, as well as elsewhere) but didn’t find easily a “this is what you need to do to get it running with real-world values”. I appreciate this.

You’re welcome!

First of all, love Ola Hallengren’s stuff and of course Brent’s info on it. I just wanted to share an issue we had recently that may help some people if you’re using Azure blob storage for backups. This isn’t a real issue with Ola’s scripts, just some clarification on his example of Azure blob backups. I’ve been using these backup scripts for years and at numerous companies really without an issue. The problem is when your db instances get very big or VLDB’s. In the case of Ola’s example of setting up Azure blob storage backups, he uses the following setup:

EXECUTE dbo.DatabaseBackup

@Databases = ‘USER_DATABASES’,

@URL = ‘https://myaccount.blob.core.windows.net/mycontainer’,

@Credential = ‘MyCredential’,

@BackupType = ‘FULL’,

@Compress = ‘Y’,

@Verify = ‘Y’

This works fine with db’s under 4TB, but does not allow for multi-file backups. Now there isn’t a lot of good/clear information out there (at least that I’ve found) on PAGE blob storage vs BLOCK blob storage when using Microsoft native backups, which is what Ola’s scripts ultimately use to run. The issue that isn’t obvious is that when you use the @URL param with the @credential param, you are forced over to use PAGE blob storage, which appears to hit a threshold somewhere around 4TB. What I found was that you can just remove the @credentials parameter because you already have credentials on your machine when you setup the URL path on the server. Now you are using BLOCK blob storage and can use multiple files and (according to Microsoft) is cheaper to store than PAGE blob anyways. After that, we also ran into server errors where it said we have reached the limit of allowed blocks. This ended up being and issue with transfer size and block size so we added 2 other parameters @BlockSize = 65536 and @MaxTransferSize = 4194304. I’m not going into the numbers and why because someone else describes it better here in a Microsoft blog: https://learn.microsoft.com/en-us/archive/blogs/sqlcat/backing-up-a-vldb-to-azure-blob-storage

So in short, when using Azure blob storage, our Ola Hallengren scripts are setup this way:

EXECUTE [dbo].[DatabaseBackup]

@Databases = ‘USER_DATABASES’,

@URL = ‘https://myaccount.blob.core.windows.net/mycontainer’,,

@BackupType = ‘FULL’,

@Compress = ‘Y’,

@CheckSum = ‘Y’,

@LogToTable = ‘Y’,

@BlockSize = 65536,

@MaxTransferSize = 4194304,

@MaxFileSize = 500000, — or replace with @NumberOfFiles = 5 depending on the size of your database and files

@Execute = ‘Y’

Hope this helps.

Nice tool, but the last time I used the backup script there were situations (AG issues I believe) where the job could possibly silently fail. I would suggest ensuring your monitoring is tightened up – not just for agent job failures. A job that checks for evidence in msdb that the backup ran successfully has helped with situations like this.

Yes, if you try offloading your backups but you don’t configure the backup hierarchy correctly for the AG, it’ll fail – but I wouldn’t blame that on Ola.

Great article. I’ve been using Ola’s plans for years, and they are essential IMO.

One suggestion I would add is that it’s (probably) best not to put these in the “master” database, since this is really part of SQL Server itself. Instead, I’d recommend creating a “tools” database to hold Ola’s maintenance SPs and any other tools you use to manage SQL Servers. In our “tools” database we have Ola’s SPs, your “First Responders’ Kit”, and several other tools (including in0house developed monitoring SPs for AOG, mirroring, etc.) that we use to maintain our SQL Servers. This allows us to easily keep the code in a Git repo for development, as well as to easy version and deployment across our whole SQL farm without filling the master database with a bunch of cruft.

Just a thought 🙂

I actually prefer the opposite: I *want* them in the master database. I don’t want any extra databases on the server if I can avoid it, and as far as I’m concerned, utility code like this is part of the SQL Server infrastructure itself.

You can still use a Git repo for development and deploy just like you would the contents of any user database.

Is there a good reason to keep the full backups of system vs. user databases separate? My friend tends to combine them into one “DatabaseBackup – ALL_DATABASES – FULL” job.

My reason for keeping them separate is that I want to do full backups of the system databases every day but only once per week for the user databases.

“distributed denial of service attack on themselves”

Hilarious!

hi,

I have tried to setup backup using this tools and found that the backup is not working good for AG, standalone and FCI is ok.

it works in the way that confuse DBA on the target plan in such a way that the target backup folder using AG name and make it very long.

So finally I use maintainence plan instead.

If you don’t like the default folder structure you can change that.

Just configure your backup job with the parameters @DirectoryStructure and @AvailabilityGroupDirectoryStructure.

You have parameters for files names aswell.

Hi,

I have an question, how to configure this script for AG with backup from primary and UNC path.

That’s outside of the scope of this post, but I’ll keep that in mind as an idea for future posts.

some more tricks in job calls vs just system and user db calls (ips not real but left for clear example of unc alternates)

sqlcmd -E -S $(ESCAPE_SQUOTE(SRVR)) -d master -Q “EXECUTE [dbo].[DatabaseBackup] @Databases = ‘QMSQC1′, @Directory = N’\\servername\e$\DBBAK\’, @BackupType = ‘FULL’, @Compress = ‘Y’, @Verify = ‘Y’, @CleanupTime = 500, @CheckSum = ‘Y’, @LogToTable = ‘Y'” –b

How to select which databases to backup in job

EXECUTE dbo.DatabaseBackup

@Databases = ‘USER_DATABASES, -DB1,DB2’, — All user databases, except Db1, DB2

@Directory = ‘C:\Backup’,

@BackupType = ‘FULL’,

@Verify = ‘Y’,

@Compress = ‘Y’,

@CheckSum = ‘Y’,

@CleanupTime = 24

Cleanup time is in hours

Example of SYS dbs plus others

sqlcmd -E -S $(ESCAPE_SQUOTE(SRVR)) -d master -Q “EXECUTE [dbo].[DatabaseBackup] @Databases = ‘SYSTEM_DATABASES,QMSQC1,QMSQC2′, @Directory = N’\\10.10.10.20\e$\dbbak’, @BackupType = ‘FULL’, @Verify = ‘Y’, @Compress = ‘Y’, @CleanupTime = 169, @CheckSum = ‘Y’, @LogToTable = ‘Y'” -b

So any dbs we do not want to backup, we edit the line in the job stored proc call to stored proc

Can also use this syntax of one or multiple dbs

sqlcmd -E -S $(ESCAPE_SQUOTE(SRVR)) -d master -Q “EXECUTE [dbo].[DatabaseBackup] @Databases = ‘QMSQC’, @Directory = N’\\10.10.10.211\e$\DBBAK’, @BackupType = ‘FULL’, @Compress = ‘Y’, @Verify = ‘Y’, @CleanupTime = 168, @CheckSum = ‘Y’, @LogToTable = ‘Y'” –b

sqlcmd -E -S $(ESCAPE_SQUOTE(SRVR)) -d master -Q “EXECUTE [dbo].[DatabaseBackup] @Databases = ‘QMSQC1′, @Directory = N’\\servername\e$\DBBAK\’, @BackupType = ‘FULL’, @Compress = ‘Y’, @Verify = ‘Y’, @CleanupTime = 500, @CheckSum = ‘Y’, @LogToTable = ‘Y'” –b

How to select which databases to backup in job

EXECUTE dbo.DatabaseBackup

@Databases = ‘USER_DATABASES, -DB1,DB2’, — All user databases, except Db1, DB2

@Directory = ‘C:\Backup’,

@BackupType = ‘FULL’,

@Verify = ‘Y’,

@Compress = ‘Y’,

@CheckSum = ‘Y’,

@CleanupTime = 24

Cleanup time is in hours

Example of SYS dbs plus others

sqlcmd -E -S $(ESCAPE_SQUOTE(SRVR)) -d master -Q “EXECUTE [dbo].[DatabaseBackup] @Databases = ‘SYSTEM_DATABASES,QMSQC1,QMSQC2′, @Directory = N’\\10.10.10.20\e$\dbbak’, @BackupType = ‘FULL’, @Verify = ‘Y’, @Compress = ‘Y’, @CleanupTime = 169, @CheckSum = ‘Y’, @LogToTable = ‘Y'” -b

So any dbs we do not want to backup, we edit the line in the job stored proc call to stored proc

Can also use this syntax of one or multiple dbs

sqlcmd -E -S $(ESCAPE_SQUOTE(SRVR)) -d master -Q “EXECUTE [dbo].[DatabaseBackup] @Databases = ‘QMSQC’, @Directory = N’\\10.10.10.211\e$\DBBAK’, @BackupType = ‘FULL’, @Compress = ‘Y’, @Verify = ‘Y’, @CleanupTime = 168, @CheckSum = ‘Y’, @LogToTable = ‘Y'” –b

Hey, good thing you made it clear that the IP addresses aren’t real! Here I was, cracking my joints, about to fire up my hacking rig for your RFC 1918 targets!

Standing down.

Also… smiled at the @Directory = ‘C:\Backup’.

Can Ola Hallengren backup 2 copies simultaneously to 2 different targets? I can’t find info on this question, makes me think it’s not possible.