No, not #tables – actual tables. Here’s how:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

USE tempdb; GO /* This one is only available during my session: */ CREATE TABLE #myTempTable (ID INT IDENTITY(1,1), Stuffing VARCHAR(100)); GO /* This one is global, meaning it's available to other sessions: */ CREATE TABLE ##myTempTable (ID INT IDENTITY(1,1), Stuffing VARCHAR(100)); GO /* You can create both of those at the same time. They're different. */ /* This one is just like a user table, but in TempDB: */ CREATE TABLE dbo.myTempTable (ID INT IDENTITY(1,1), Stuffing VARCHAR(100)); GO |

The first one disappears when my session is over, but the latter two persist until the SQL Server is restarted.

Why would you ever do the latter two? Say you need to share data between sessions, or between different applications, or staging tables for a data warehouse, or just faster tables that live on local SSDs in a cluster (as opposed to slower shared storage), or you wanna build a really crappy caching tier.

If you use global temp tables or user-space tables, though, you have to check for duplicates before creating your tables. Local temp tables are just all yours, and you can have a thousand users with the exact same-name local temp tables.

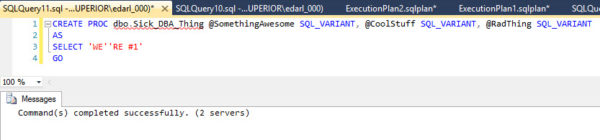

Next up, the ever-so-slightly different magic of temporary stored procedures:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

USE tempdb; GO /* This one is only available during my session: */ CREATE PROC #usp_myTempWorker AS SELECT * FROM sys.databases; GO /* This one is global, meaning it's available to other sessions, but ONLY as long as my session is available: */ CREATE PROC ##usp_myTempWorker AS SELECT * FROM sys.databases; GO /* This one is just like a user stored proc, but in TempDB: */ CREATE PROC dbo.usp_myTempWorker AS SELECT * FROM sys.databases; GO |

Here, the first TWO disappear when my session is over, and only the latter one sticks around. Diabolical. So the ## temp stored proc doesn’t really help me here because I can never tell when the creator’s session is going to finish. (Not God. His session keeps right on going.)

So why would you ever create stored procedures – temporary or user – in TempDB? You might not have permissions in the user databases, just might not be technically allowed to change things, or maybe you’ve got monitoring queries that you want to hide, or you want to create procs temporarily to check parameter sniffing issues.

All of the above will disappear when the SQL Server is restarted – or will they? Not if you create them permanently in the model database, which is the source of TempDB’s creation when SQL Server restarts:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

USE model; GO CREATE PROC dbo.usp_myTempWorker AS SELECT * FROM sys.databases; GO CREATE TABLE dbo.myTempTable (ID INT IDENTITY(1,1), Stuffing VARCHAR(100)); GO /* Now restart your SQL Server, and check in TempDB */ USE tempdb; GO EXEC dbo.usp_myTempWorker; GO SELECT * FROM dbo.myTempTable; GO |

Why would you ever wanna do this? Well, say you need to make sure that, uh, in case … look, I’m just an idea man. Somebody, somewhere, is looking for a really bad idea. That’s what I’m here for.

Want to learn more? Take my class.

My Fundamentals of TempDB class is for curious folks who want to learn:

My Fundamentals of TempDB class is for curious folks who want to learn:

- What uses TempDB, and how that affects performance: not just temp tables and table variables, but also triggers, cursors, sorting indexes, workspace spills, the version store, and more

- How to host TempDB depending on how you use it: understanding what goes in the log file, the data files, why the number of data files matter, and whether your particular workload should be on SAN, local storage, or ephemeral storage, and why

- How to monitor it: after you understand what uses it and how to host it, you need to keep tabs on how its performance changes over time using DMVs, Perfmon counters, and the First Responder Kit scripts