It’s difficult to define why some things are wildly successful in a sea of forgettable products. Some recipes have a mysterious umami that comes from a subtle dash or two of magical sauce. In business and technology there’s an indefinable edge that sets people and teams apart. How do you get that special something?

Here’s one strategy businesses use all the time: layer products to build new, deeply specialized meta-products. First, they create a service or application they can sell. It generates revenue, but it also generates data. Sometimes they have to adjust (or heaven forbid, pivot) the product, but they get good at it. They start making money.

After a while they apply analysis to harvest meta-information from the product. The meta-information is used to create new, different products. These products may offer extra insights to existing customers, but they may also suit new and different customers. BLAMMO, the business is more diverse and successful.

These techniques aren’t just for packaged products. This is also what helps companies create services that seem a little magical, because they’re simultaneously simple and complex. We use these principles to make our own SQL Server Training super sharp and effective for students.

This isn’t something that only we can do. You can use the same techniques to find your own secret sauce.

Step 1: To Get Ahead, Look Back

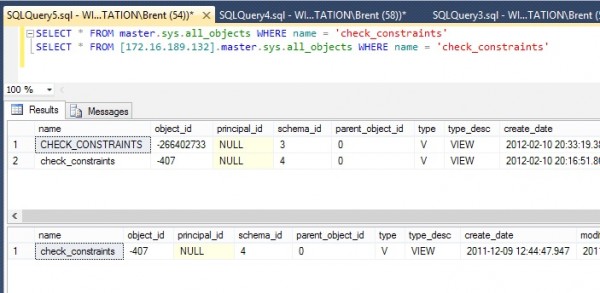

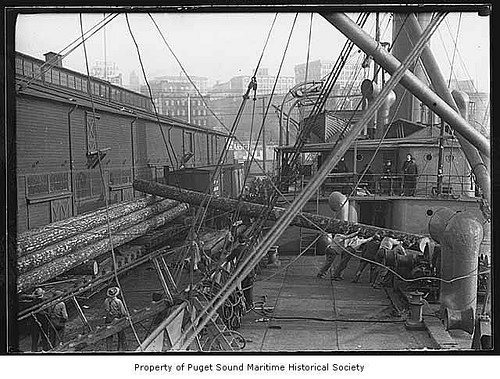

One of our primary consulting products is our Critical Care® Sessions. We work closely with a client to identify their pain points and the bottlenecks in their SQL Server environment– issues can be anywhere from the storage layer to SQL configuration to query optimization and execution. We deliver recommendations for the client to implement within the first couple of weeks, the next month, and the next quarter. We tailor each recommendation to the client’s specific needs.

After a few weeks have passed, we meet again. We work with the client to find out how much they’ve gotten done. What’s been easy? What’s been difficult? Are there any questions we can help out with?

We listen very carefully, because this is an important source of information. Following up with your customers to find out what has worked for them, and why some tasks are trickier than usual, is one of the most important things you can do. This tells us:

- Where misunderstandings commonly occur

- What types of data successfully get buy-in to make difficult changes

- Which scripts and tools are really critical to get people that data

The truth is this: it’s easy to know facts. It’s difficult to be effective. Because of this, we constantly collect data on what helps people get things done and evolve our tools.

This information is certainly helpful to our consulting product itself– it keeps us at the top of our game. But we also get an important component of our secret sauce from this: we can train people in the classroom to be effective using the techniques and scripts we’ve refined.

To apply this step yourself, create a habit of regularly meeting with customers and listening to feedback. Make notes. You won’t always be able to implement every idea you have, but keep track of what you find along the way. Steps 2 and 3 will give you a chance to harvest that information.

Step 2: Identify Your Differentiators

Very smart people are sometimes terrible teachers. We knew we could teach on a wide variety of subjects. But how did we build a great training?

The key is to identify what sets you apart and narrow your scope to projects where you can impact your audience deeply. We don’t want to fill a training room with warm bodies and talk about general SQL Server topics. We want to get the right people into the room so that we can address their immediate problems and have them jumping out of their seats to get going.

To create great training, we identified what we do better than anyone else. These are our differentiators:

- We’ve built tools and scripts we can quickly train people to use effectively to diagnose server health and performance and tackle tough indexing problems.

- We’ve created specialized materials to help developers learn the critical factors in SQL Server performance in a short amount of time.

- We have refined queries and techniques to teach developers to recommend effective changes in their environment.

- We have an army of slide decks, blog posts, and even animated gifs to help developers solve performance problems, no matter how niche– so making Q&A awesome is no problem.

Knowing these differentiators made our mission clear. We knew we could change people’s careers with a short two-day class targeted at a specific audience (developers) that gives them immediately useful scripts and techniques to improve performance.

We also looked at differentiators for how we offer the training itself. What new things could we do to help people access the training and make it work for them in a new way?

- We created a business justification form to help people show the value of their training to management before signing up.

- We also included two two-hour group Q&A webcasts for just the people in the training session two weeks after the event. You know how most trainings leave you with a feeling of confusion about a few topics when you get back to your desk and try to apply what you learned? These Q&A webcasts mean our attendees can get answers for those difficult situations.

Define your differentiators for yourself. What do you do better than anyone else in your environment? What needs do you fill, or could you fill, that nobody else covers well? Where are the gaps and needs in your workplace that you find interesting?

Step 3: Don’t Kill Yourself with Focus

You’ll never get permission to have an idea.

We didn’t start our business with the plan of giving our own training events. We love consulting. We speak in front of people all the time– we give our weekly webcasts and speak at local and national conferences, but we primarily think of ourselves as people who go out and initiate change rather than people who stand behind a podium and lecture. We naturally focused on consulting as our core business.

Focus is great and it can help make you successful. But too much focus makes you predictable. It keeps you from really using your secret sauce.

We give training because we were open to a new idea. We realized that a two-day training is perfect for teaching developers what they need to know about performance tuning SQL Server to design changes for real-world environments. It’s just enough time to get them out of the office and teach them how to use the right tools, but not so long that they’re swamped under an incredible tidal wave of email when they return.

Set aside time at least once a month to think about how your job could be dramatically different. Don’t restrict yourself by immediate needs or how much time you have available. Give yourself room to dream of what you’d be really proud of doing in six months or a year.

Here’s the key: don’t wait until you’re having problems to make your work more awesome. Be creative and act on your aspirations when you’re already successful and busy. That’s often when you’ll have your best ideas! We added our training offering at a time when we were already very successful, simply because we knew we could offer a very high quality product and help developers in a way nobody else can.

The Secret Sauce Isn’t Out of Reach

Good news: if you read our newsletter every week or even just made it to this point in the post, you’re probably a specialist already. You may sometimes feel like a small fish in the big SQL Server pond, but that’s just human nature. If you’re even in the pond, you are well on your way toward becoming very good at jobs that are difficult to fill.

Skills that can set you apart, like becoming great at performance tuning SQL Servers, are completely within your reach. A few steps to find the right path are all it takes.