How are my servers doing?

How are my databases doing?

If my boss came in and asked which servers needed more horsepower or which ones could be consolidated together, how would I come up with an answer?

I’ve always found it funny that DBAs and sysadmins don’t have good answers to these questions. We roll our own monitoring solutions, cobble things together from a bunch of parts, or fork over money to third party vendors to get a picture of how our environment’s doing.

Virtualization admins, on the other hand, have fantastic answers to these questions. Take this screenshot from VMware vSphere’s management console:

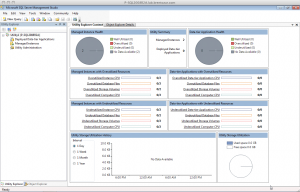

The sysadmin can see a lot of relevant information at a glance: how utilized the host server CPUs are, how utilized the server memory is, each server’s status, and more. Wouldn’t it be nice to get a simple report like that for SQL Server? Check out this screenshot from the new SQL Server 2008 R2 CTP:

The first thing to notice is the two pie charts: Managed Instance Health and Data-tier Application Health.

Not servers and databases – instances and applications. That’s your first hint that things are going to be different long-term.

Virtualization Changed Everything, But Took Time

Looking ahead, Microsoft wants us to start thinking of databases as being less connected to physical servers, and to think of our physical servers as a resource pool. Imagine if databases were self-contained packages that could be moved from server to server – just like virtual servers can be moved from host to host today.

The virtualization push took years to accomplish because there were so many things that had to be handled. We had to figure out how to handle drivers, how to handle resource sharing, how network segregation and storage throughput would work, and even systems management had to be rethought from the ground up. It took a long time to get it right, and today virtualization is pushing into enterprises everywhere.

Today, SQL Server faces struggles not unlike the early days of virtualization. There are several factors that cloud the Utopian vision of moving databases around seamlessly:

- Connection strings – our apps call for their data by a specific server name. If we’re going to abstract servers away, then we need a way to find our data.

- Logins – logins are set at the server level, yet are tied into databases. If we move a database from one server to another, we have to make sure that the login exists on the new server, has the same password, and has the same level of access rights. If the application frequently uses a specific login to call the TRUNCATE TABLE command, for example, we need to know it’ll have that same level of permissions on the new server.

- SSIS/DTS packages – these techniques can be used to pipe data in and out of our database servers, and they’re often tied in with local servers.

- Scheduled jobs – as much as I fight developers who want to put jobs on their servers, reality is that I don’t always win. (Schedulers belong in applications, not databases.)

- Anal retentive DBAs – we know best, right? We finely tune some of our applications so that the data lives on one set of disks, logs live on another set of disks, and maybe indexes or partitioned data live on yet another set of disks. If we start shuffling databases around, we’re going to need to abandon that level of control.

Abstracting all of this stuff out of the database architecture isn’t going to be easy, but SQL Server 2008 R2 is starting to take the first step.

The SQL Server Utility: Virtualization for Databases

SQL Server 2008 R2 introduces the concept of the SQL Server Utility: a pool of resources (instances) that host applications (databases).

The Utility is managed by a Utility Control Point: a single SQL Server instance that gathers configuration and performance data.

All of this is visualized through SQL Server Management Studio’s Utility Explorer:

This dashboard shows some basic metrics about CPU use and storage use at both the instance level and the application level.

Down the road – years down the road – this might provide DBAs with the same level of fast reaction times that virtualization admins currently enjoy. Got a database running out of space? Move it to a server with more space. Got an application burning up too much CPU power? Slide it over to one that’s got the latest and greatest processors.

In order for this concept to work, though, we need to do more than just think differently about our databases; we’re going to need to deploy them differently. SQL Server 2008 R2 introduces the concept of the Data-tier Application (DAC), a self-contained package with everything our applications need in order to store and process their data. It’s not going to work for everyone, and in my next post, I’ll talk about what SQL Server 2008 R2’s DACPack means for DBAs.

12 Comments. Leave new

This is very interesting stuff. Although I could see this being very useful, all of this can currently be done on an ESX farm with vMotion, without having to worry about the problems you mentioned (connection strings, logins, jobs, etc). I’d be interested to know how MS plans to get around these pitfalls. Can you recommend more reading on the R2 release?

The problem with using vMotion to accomplish it is that you only get the benefit of moving the entire server – you lose all fine-grained control. You don’t have the ability to move single databases, only entire database servers. After the era of database server consolidation, most of our customers have database servers with hundreds of databases on a single server. When one databases’ needs grow, you need to move it off onto its own hardware or onto a different version of SQL Server, and vMotion (as much as I love it) doesn’t give us an answer for that.

Right now, because the CTP of R2 only came out this morning, the only recommended reading is the documentation in the CTP product and, uh, my blog. 😀

Interesting topic, sounds like database utopia, able to float around, not tied to a server.

I can’t wait for the day when the databases of the VMware vCenter server can be a members of a SQL Utility. Then when the need for more disk space, need to be upgraded or migrated, or need for more resources for performance occurs those back ends can be auto live migrated to make sure they continue to support the VM auto vmotions! Whoa, my head hurts now. 🙂

Brent, does it seem the new R2 solutions are designed for server consolidation and not scale out? What happens when you know your one database on one server is too much and have gone to distributed queries to multiple servers? Any new features to help in the scale out architecture?

Chuck – yes, you bet! Microsoft’s acquisition of DATAllegro resulted in some new scale-out features called Project Madison. It’s not a part of this August CTP, though. You can read more about it here:

http://www.microsoft.com/sqlserver/2008/en/us/madison.aspx

The problem with running SS on a virtual machine is not technology but *licensing*. MS currently charges a license fee for each vm that has an instance of SS running on it. 100 vm’s equals 100 SS licenses. My Enterprise Edition license, though, allows me to run as many instances of SS on a physical server as I want. 100 instances equals 1 license. I don’t need the masters degree in Economics to figure out that 100x > 1y, as long as x is any significant fraction of y. Since Standard Edition is about 1/4th the cost of Enterprise Edition, figure that more than 4 instances makes EE cheaper to license. The incremental hardware cost to run 4+ instances on a single server is cheap now, and it’s a mostly fixed cost. That’s why vm’s work economically. It won’t be until we can move databases like VMotion moves virtual machines that we’ll break free of the licensing catch-22.

Morris – it depends on how you look at it. For example, in a lot of shops I’ve worked with, those SQL Servers are going to exist whether they’re on physical servers or virtual servers. The shop has, say, 20 SQL Servers that support different applications. Each server has its own OS and SQL Server compatibility requirements – some apps can’t go to 2000, some can’t go to 2005, some don’t support clustering, some need the application to run under SA, and so forth. While the shop can’t reduce their licensing costs, they CAN reduce their infrastructure costs by combining these instances onto different virtual machines.

Riddle me this batman.

Want a challenge? Show me why I’m forced to purchase an enterprise licenses to use vMotion with Sql Server 2008 R2. And if that even makes since. The 90 day restriction is now gone, right? I’m purchasing standard licences bases on virtual processors to # of cores per the document. but if all hell breakes loose I can’t move my effected virtual server over to another host? Not automatically by the way (ie no load balancing) just as an option to get the server back up faster in case of a crash? As you well know the price for Enterprise would simply have me going back to a physical box. My assumption is 90% of all virtual installs are done this way. As the rules have changed so many times many people are probably upgrading there standard versions as if they had pyhsical cpu’s 1 to 1 and calling it a day, but the minute they move the virtual host to another physical box they just became non compliant? am I right or wrong?

Brent I love your mind so give you the first crack at this, tomorrow I post on Sql Server Central.

Thanks,

Tony

Hi

I went you to sent me the cost for the SQL Server 2008 R2. My office went to buy it

Thanks

Donald

Hi Donald,

Lots of things impact the cost of the product. This looks like a good place to start on the Microsoft site: http://www.microsoft.com/sqlserver/en/us/get-sql-server.aspx