Without looking in your wallet, do you know how much cash you have? Most of us know within a few dollars. Now, without looking in your SQL Server, do you know how much data is cached in memory? You probably don’t, and that’s okay; you shouldn’t need to know how much data SQL Server is caching in memory. We can’t directly control which data SQL Server keeps in memory, but we can control how our applications cache data.

Different Types of Cache

There are a lot of different ways to approach caching. One of the most prevalent ways involves thinking about cache in two different levels (much like CPU cache): first level cache and second level cache.

First level cache is an immediate, short-lived cache that works within a single session to minimize database calls. It lasts only for the duration of the current session or transaction (depending on your terminology), and it’s only useful to the current process. While helpful, first level cache has a limited scope.

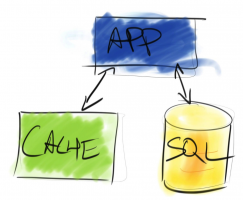

There’s another type of cache: second level cache. Second level cache exists outside of the current process and can be shared between multiple transactions, processes, servers, or even applications. When we talk about adding cache to an application, we really mean second level cache.
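To make the difference between the two levels concrete, here is a minimal sketch in Python. It is not taken from any particular ORM; `SharedCache`, `Session`, and `load_row` are hypothetical names invented for this illustration, and the "database" is just a function that records how often it is called.

```python
from typing import Callable, Dict, Optional


class SharedCache:
    """Hypothetical stand-in for a second level cache shared by many sessions."""

    def __init__(self) -> None:
        self._items: Dict[int, dict] = {}

    def get(self, key: int) -> Optional[dict]:
        return self._items.get(key)

    def put(self, key: int, value: dict) -> None:
        self._items[key] = value


class Session:
    """One unit of work with its own short-lived first level cache."""

    def __init__(self, load_row: Callable[[int], dict], shared: SharedCache) -> None:
        self._load_row = load_row
        self._shared = shared
        self._local: Dict[int, dict] = {}  # thrown away when the session ends

    def get(self, key: int) -> dict:
        if key in self._local:           # first level: this session only
            return self._local[key]
        row = self._shared.get(key)      # second level: shared across sessions
        if row is None:
            row = self._load_row(key)    # last resort: go to the database
            self._shared.put(key, row)
        self._local[key] = row
        return row


db_calls = []  # records every simulated database round trip


def load_row(key: int) -> dict:
    db_calls.append(key)
    return {"id": key, "name": f"customer-{key}"}


shared = SharedCache()

first = Session(load_row, shared)
first.get(1)    # miss everywhere, so the database is called
first.get(1)    # served from the first session's own cache

second = Session(load_row, shared)
second.get(1)   # new session with an empty local cache, served from the shared cache

print(len(db_calls))  # prints 1
```

Only the very first lookup reaches the database. A real second level cache would also copy or serialize the cached objects rather than hand the same dictionary to every session; that detail is left out here to keep the sketch short.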

A Bit of Cache

Even the most basic of ORMs have a little bit of cache available. The first level cache is used as a short-lived buffer to reduce the amount of work that the ORM has to do. First level cache is used for caching objects in the current transaction and query text. Although this cache can be helpful for the current process, it isn’t shared across multiple processes or even multiple database batches. If we want to have a more robust cache, we have to look elsewhere.

ORMs like Entity Framework or the LLBLGen Framework don’t have a second level cache. It’s up to developers to add a cache when and where they need it. This exposes developers to additional concerns like cache invalidation, cache updates, and query caching. Not every application needs all of this functionality, and that’s an acceptable trade-off – it’s up to developers to implement cache features in ways that support application requirements.

Although it takes up developer time, building the second level cache yourself has the benefit of creating a cache that’s suited to the application’s requirements. For many application level features, this is good enough. It’s important, though, that developers pick a caching layer capable of meeting their operational requirements. Operational requirements include horizontal scalability, redundancy and failover, recovery of cached data, or customizable cache expiration on an object-by-object basis.
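As a rough illustration of what a hand-built cache involves, the sketch below shows per-object expiration and explicit invalidation in Python. `TtlCache` and the keys used with it are hypothetical, made up for this example; an in-process dictionary like this offers none of the scalability, redundancy, or recovery mentioned above, so treat it as a sketch of the idea rather than a caching layer you would deploy.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TtlCache:
    """Hypothetical in-memory cache where every entry carries its own expiration."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock  # injectable so the example can control time
        self._items: Dict[str, Tuple[float, Any]] = {}

    def put(self, key: str, value: Any, ttl_seconds: float) -> None:
        # Store the value together with the moment it stops being valid.
        self._items[key] = (self._clock() + ttl_seconds, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._items[key]  # expired entries are dropped on read
            return None
        return value

    def invalidate(self, key: str) -> None:
        # Called by the application after it changes the underlying data.
        self._items.pop(key, None)


now = [0.0]  # fake clock so the example does not have to sleep
cache = TtlCache(clock=lambda: now[0])

cache.put("price:42", 9.99, ttl_seconds=5)                  # changes often
cache.put("country:US", "United States", ttl_seconds=3600)  # rarely changes

now[0] = 10.0  # ten seconds later
expired_price = cache.get("price:42")      # None, the entry has expired
cached_country = cache.get("country:US")   # still cached

cache.invalidate("country:US")
after_invalidate = cache.get("country:US")  # None, removed explicitly
```

The design choice worth noticing is that expiration is decided per entry at `put` time, which is one simple way to get the object-by-object control described above. A miss and a cached `None` are indistinguishable in this sketch, which a real implementation would need to handle.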

These basic ORMs aren’t really all that basic – they’re full featured in other areas, but they offer only basic support for automatic caching.

A Lot of Cache

You’ve got memory. You want to use it to cache data. What’s the easiest way to do that?

One of the easiest approaches to adding caching to your application is to use a framework that supports it out of the box. A number of ORMs, including both Hibernate and NHibernate, provide this support. Enabling cache is easy – just change a few lines in a configuration file and the cache will be available to your application. Things start getting tricky, though, when you examine the richness of the caching that’s provided by these tools.

Power comes with a price. When you’re getting started with tools like Hibernate or NHibernate, there’s a lot to take in and many developers overlook these features. Developers can choose on an object-by-object basis which caching strategy should be applied. Based on business requirements we can choose to treat certain cacheable objects as read only while others can be used as a read/write cache. Some objects can be cached while others bypass the secondary cache entirely – there’s a lot of complexity for developers to manage.
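The idea of choosing a strategy per object can be sketched without any ORM at all. The Python below is an analogy only, not the Hibernate or NHibernate API: `Strategy`, `StrategyCache`, and the entity names are hypothetical, and real frameworks handle concurrency and transactions in ways this example ignores.

```python
from enum import Enum
from typing import Any, Dict, Optional, Tuple


class Strategy(Enum):
    NONE = "none"              # never stored in the second level cache
    READ_ONLY = "read-only"    # cached once, later updates are rejected
    READ_WRITE = "read-write"  # cached, updates replace the cached copy


class StrategyCache:
    """Hypothetical cache that applies a different rule to each entity type."""

    def __init__(self, strategies: Dict[str, Strategy]) -> None:
        self._strategies = strategies
        self._items: Dict[Tuple[str, int], Any] = {}

    def get(self, entity: str, key: int) -> Optional[Any]:
        return self._items.get((entity, key))

    def put(self, entity: str, key: int, value: Any) -> None:
        # Entity types without a configured strategy bypass the cache.
        strategy = self._strategies.get(entity, Strategy.NONE)
        if strategy is Strategy.NONE:
            return
        if strategy is Strategy.READ_ONLY and (entity, key) in self._items:
            raise ValueError(f"{entity} is cached read-only")
        self._items[(entity, key)] = value


cache = StrategyCache({
    "Country": Strategy.READ_ONLY,   # reference data that never changes
    "Order": Strategy.READ_WRITE,    # changes, but is still worth caching
})

cache.put("Country", 1, "US")
cache.put("Order", 7, "pending")
cache.put("Order", 7, "shipped")     # allowed: replaces the cached copy
cache.put("AuditLog", 3, "created")  # not configured, so never cached

try:
    cache.put("Country", 1, "USA")   # rejected: read-only entries cannot change
    country_update_rejected = False
except ValueError:
    country_update_rejected = True
```

Even in a toy like this, three entity types need three separate decisions, which hints at why the configuration surface of a full featured ORM cache can feel overwhelming at first.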

While this can be overwhelming, this flexibility serves a purpose – not all features of an application have the same requirements. Some features can serve old data to users, while other features need to be up to the minute or up to the second. Having these options means developers have decisions to make. Even when those decisions are difficult, developers can choose how the application behaves and can tailor performance and functionality to business requirements.

Making the Choice

If you’ve already got an existing project and you’re planning on adding a caching layer, don’t think that you have to re-implement your data access layer just to get better support for caching. Both approaches, building your own second level cache or using one that’s built into your ORM, have their benefits, and it’s far more important to be aware of which data needs to be cached and the best way to cache it.