SQLBits Session Voting is Open Now! Wanna See My Sessions at Bits?

I’d love to come over and speak at SQLBits this April, but for that to happen, the organizers need to hear from you that you’d come see my sessions.

If you’re going to Bits, you’ll need a login, and then you can vote on these sessions – but only if you want to see them! I’m not asking for non-attendees to vote to skew the results – that’s not fair to the other speakers.

This year’s theme is cartoons, and you can see how some of my sessions were heavily influenced by that, hahaha. Here’s what I submitted:

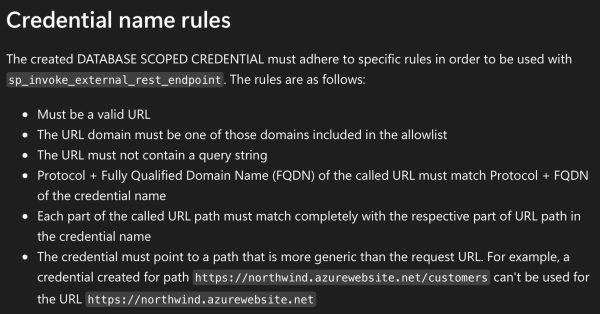

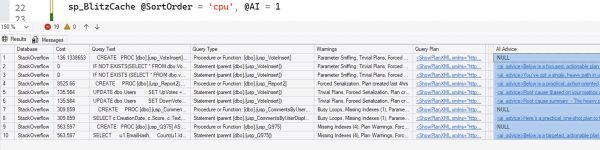

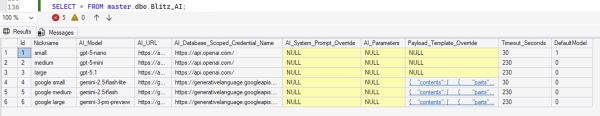

Calling AI from T-SQL: Real-Life Lessons from sp_BlitzCache – Brent Ozar cuts through AI hype with real-world lessons from calling LLMs inside sp_BlitzCache. Learn how to pick models, write effective prompts, pass the right context, handle failures, and use AI safely from your own stored procedures.

Pokémon Battle, Choose Your Index: You Can’t Have Them All – In this demo-heavy, interactive session, Brent Ozar turns index tuning into a Pokémon-style battle. Using the Stack Overflow Users table, the audience plays index “cards” against real queries to see which designs win, lose, or backfire—and why you can’t have them all.

The Big Red Button: How to Use sp_Kill – In this quick talk, Brent Ozar introduces sp_Kill, a safer alternative to restarting SQL Server during emergencies. Learn when to push the big red button, how to identify runaway sessions, and how to kill the right queries while logging everything for later analysis.

Panel Discussion: 20 Years of the Cloud: What Changed, What Didn’t, and What’s Next – Brent Ozar leads a panel of experienced data professionals reflecting on 20 years of the cloud. With no vendor marketing, they discuss what actually changed, which problems never went away, and what they expect to face in the next 20 years based on real-world experience.

Panel Discussion: AI in Your Career: Sidekick, Hero, or Villain? – Brent Ozar leads a panel discussion about how AI is reshaping data careers. Panelists share how they decide what to delegate to AI, how impacts differ by role, warning signs of over-automation, and how to intentionally cast AI as a sidekick, hero, or villain in your career.

Then, go through the other 700+ sessions to vote on others you’d like to see too. I hope to see you at Bits!



Readers like you are what make it possible for me to spend so much time giving back to the community, building tools like this and giving ’em away for free.
