Microsoft has an official list of what’s new in 2022 overall, but here I’m specifically focusing on system objects that might be interesting to script developers like you and I, dear reader.

New stored procedure sp_get_table_card_est_and_avg_col_len – I assume the “card” refers to statistics and cardinality, not Hallmark. SQL Server has historically struggled with memory grants because it uses datatype size to budget memory grants, and bigger-than-necessary data types (like NVARCHAR(MAX)) have led to larger-than-necessary grants. It’d be cool if this proc was a down payment to mitigate that problem, but I have a sinking feeling it has to do with external data sources. I would tell you more about it, but when I run it, I get:

|

1 2 |

Msg 15871, Level 16, State 9, Procedure sp_get_table_card_est_and_avg_col_len, Line 1 [Batch Start Line 0] PROCEDURE 'sp_get_table_card_est_and_avg_col_len' is not supported. |

New view sys.dm_tran_orphaned_distributed_transactions – every now and then, I’ve run across Availability Groups replicas with problems due to orphaned distributed transactions consuming DTC resources or holding locks. This new undocumented DMV might be a down payment to resolve that problem. I don’t have an easy way to reproduce the problem quickly, so I can’t demo it.

New view sys.database_automatic_tuning_configurations – this one’s a little odd because Books Online tells me it’s been around since SQL Server 2017, but I don’t remember seeing it before, and it’s not in my 2019 test instances. Tells you if Force_Last_Good_Plan is on, and I would imagine that down the road, as more automatic tuning options might come out over the next several releases, this might have more info.

New Query Store DMV columns – now that Query Store is starting to work on read-only replicas, looks like they added plan_persist_plan_feedback.replica_group_id, plan_persist_query_hints.replica_group_id to support those goals. Plus plan_persist_plan_forcing_locations gets columns for timestamp and plan_forcing_flags.

New spinlock troubleshooting – sys.dm_os_workers gets columns for spinlock_wait_time_ms, spinlock_max_wait_time_ms, and spinlock_wait_count.

New stuff to support offloaded compression

This stuff needs its own section. RC0 introduced the ability to offload compression to Intel processors equipped with QuickAssist.

We get new sp_configure options for ‘hardware offload mode’ and ‘backup compression algorithm’. By default, these are off. To turn on offloaded compression, install the Intel QAT drivers, then do an alter:

|

1 2 |

ALTER SERVER CONFIGURATION SET HARDWARE_OFFLOAD = ON (ACCELERATOR = QAT); |

Which returns:

|

1 2 3 4 |

Hardware offload configuration has changed. Current configuration (0x0) and mode (0x0). New configuration (0x1) and mode (0x0). Restart SQL Server for the new configuration to take effect. |

After restarting the SQL Server, check this brand spankin’ new DMV:

|

1 |

SELECT * FROM sys.dm_server_accelerator_status; |

And, uh, on my VM, it’s still not enabled:

Because you can enable it even on processors that don’t support it, which strikes me as kinda odd. I suppose you would want to make it part of your standard build, and then whenever it’s available, it’ll get used, assuming you call for offloaded backup compression in the right way.

New messages in RC0

In each release, I check sys.messages for new stuff. Some of this stuff gets added for the cloud, like Azure SQL DB or Managed Instances, so read these with a grain of salt. Here’s what’s new in RC0, new from the last CTP:

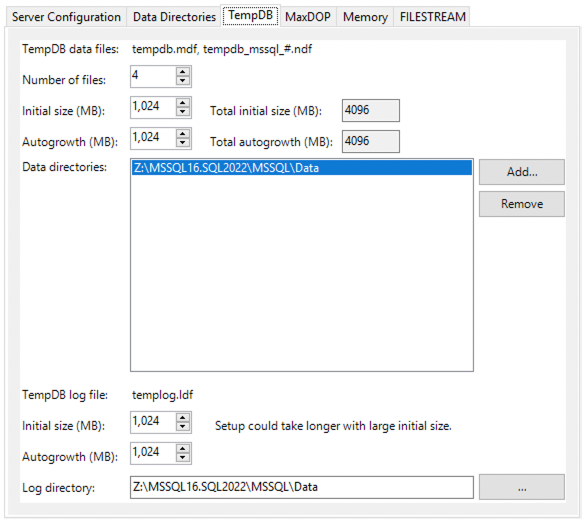

- 1136: The tempdb has reached its storage service limit. The storage usage of the tempdb on the current tier cannot exceed (%d) MBs.

- 5373: All the input parameters should be of the same type. Supported types are tinyint, smallint, int, bigint, decimal and numeric.

- 5374: WITH clause is not supported for locations with ‘%ls’ connector when specified FORMAT is ‘%ls’.

- 16722: Cannot change service objective for %ls to %ls as long-term retention is not supported yet on Hyperscale. Please disable long-term retention on the database and retry

- 17414: Retrieving the address of an exported function %.*ls in accelerator library %.*ls failed with error 0x%x.

- 17415: %.*ls component enumeration failed with zero component count.

- 17416: %.*ls component enumeration failed with mismatch in component count.

- 17417: %.*ls %.*ls not compatible with SQL Server.

- 17418: Detected %.*ls %.*ls.

- 17419: %.*ls hardware detected on the system.

- 17420: %.*ls hardware not found on the system.

- 17431: %.*ls initialization failed with error %d.

- 17432: %.*ls initialization succeeded.

- 17433: %.*ls session creation failed with error %d.

- 17434: %.*ls session sucessfully created.

- 17435: %.*ls will be used in hardware mode.

- 17436: This edition of SQL Server supports only software mode. %.*ls will be used in software mode.

- 17437: %.*ls will be used in software mode.

- 17438: %.*ls session alive check failed with error %d.

- 17439: %.*ls session tear down failed with error %d.

- 17440: %.*ls session close failed with error %d.

- 17441: This operation requires %.*ls libraries to be loaded.

- 19713: Statistics on virtual column are not avalable.

- 19714: Number of columns in PARTITION clause does not match number of partition columns in Delta schema.

- 21093: Only members of the sysadmin fixed server role or db_owner fixed database role or user with control db permission can perform this operation. Contact an administrator with sufficient permissions to perform this operation.

- 22786: Synapse workspace FQDN is not in the list of Outbound Firewall Rules on the server. Please add this to the list of Outbound Firewall Rules on your server and retry the operation.

- 22787: Change feed table group limit of %d groups exceeded

- 22788: Could not enable Change Feed for database ‘%s’. Change Feed can not be enabled on a DB with delayed durability set.

- 25755: Could not create live session target because live session targets are disabled.

- 31633: The length of the provided %ls exceeds the maximum allowed length of %u bytes.

- 31634: The %ls must contain a ‘%ls’ for use with managed identity.

- 31635: The %ls’s ‘%ls’ value must be a %ls for use with managed identity.

- 31636: Error retrieving the managed identity access token for the resource id ‘%ls’

- 33547: Enclave comparator cache failed to initialize during enclave load.

- 39057: The value provided for the ‘%.*ls’ parameter is too large.

- 39058: The parameter ‘%.*ls’ has a type that is not supported.

- 45770: Failed to move the database into elastic pool due to internal resource constraints. This may be a transient condition, please retry.

- 46552: Writing into an external table is disabled. See ‘https://go.microsoft.com/fwlink/?linkid=2201073’ for more information.

- 46553: Create External Table as Select is disabled. See sp_configure ‘allow polybase export’ option to enable.

- 46953: Pass through authorization using S3 temporary credentials is not supported. Please use S3 credentials to access storage.

- 47507: Adding memory optimized files to the database replicated to Azure SQL Managed Instance is not supported because its service tier does not support In-memory OLTP capabilities. Consider replicating database to managed instance service tier supporting In-memory OLTP capabilities.

- 47508: Adding multiple log files to the database replicated to Azure SQL Managed Instance is not supported because managed instance does not support multiple log files.

- 47509: Adding FileStream or FileTables to the database replicated to Azure SQL Managed Instance is not supported because managed instance does not support FileStream or FileTables.

- 47510: Adding multiple memory optimized files to the database replicated to Azure SQL Managed Instance is not supported because managed instance does not support multiple memory optimized files.

If any of those messages are interesting to you, feel free to leave a comment about it.

New database-scoped configuration options

These are all new since SQL Server 2019 – some were introduced in prior CTPs, but I’m mentioning them all here because there’s good stuff in here for query tuners:

- 25 – PAUSED_RESUMABLE_INDEX_ABORT_DURATION_MINUTES

- 26 – DW_COMPATIBILITY_LEVEL

- 27 – EXEC_QUERY_STATS_FOR_SCALAR_FUNCTIONS

- 28 – PARAMETER_SENSITIVE_PLAN_OPTIMIZATION

- 29 – ASYNC_STATS_UPDATE_WAIT_AT_LOW_PRIORITY

- 31 – CE_FEEDBACK

- 33 – MEMORY_GRANT_FEEDBACK_PERSISTENCE

- 34 – MEMORY_GRANT_FEEDBACK_PERCENTILE_GRANT

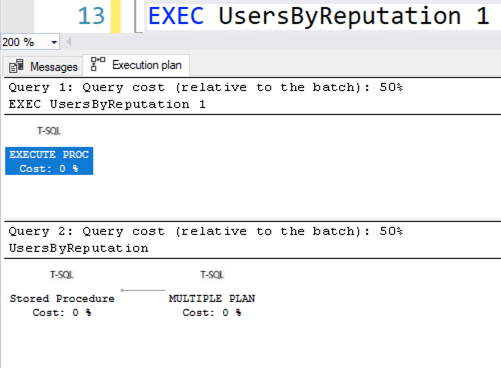

- 35 – OPTIMIZED_PLAN_FORCING

- 37 – DOP_FEEDBACK

- 38 – LEDGER_DIGEST_STORAGE_ENDPOINT

- 39 – FORCE_SHOWPLAN_RUNTIME_PARAMETER_COLLECTION

That last one’s particularly interesting to me because SQL Server 2019 originally shipped in a way that you could see runtime parameters in sys.dm_exec_query_statistics_xml, and then they turned it off around CU11-12 without documenting the changed behavior. That was a total bummer, because that feature was a lifesaver for troubleshooting parameter sniffing. I’m hoping we can get that back again.