Today’s episode of Office Hours is brought to you by Quest Software. I went through your top-voted questions from PollGab.com/room/brento, and, uh, kinda looked like I was sponsored by Fendi while doing it, hahaha:

Wow, those logos are bigger than I thought. Here’s what we covered:

- 00:00 Start

- 01:20 reluctantly_tolerant : I used FCI instead of AG for server w/500 DBs due to worker thread limit. I used NetApp ONTAP filesystem and was very impressed by performance, and ability to spin up clone of production in under 2min. Now I feel this is the gold standard. Why go back to AGs, even where possible?

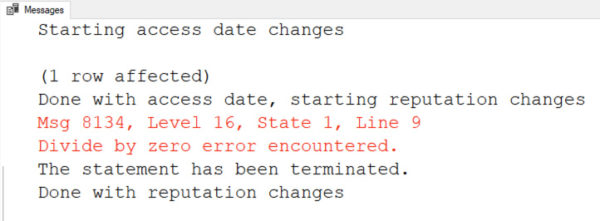

- 02:42 Simon: First off: I know we should not use the NOLOCK hint :] But can NOLOCK queries even cause index corruption on queries that are NOT modifying data? Perhaps if combined with persisted computed columns? (SQL Server 2019, latest CU).

- 03:58 LogarTheBarbarian: Hello hello Brent! What questions come to mind if you came upon MSSQL instances that modified the Ola DBCC CheckDB job to run CHECKTABLE, CHECKALLOC, and CHECKCATALOG on different days rather than CheckDB in its entirety?

- 05:15 Dru: When should a unique constraint be used vs a unique index?

- 06:32 Sigríður: What are your favorite things about living in San Diego and Las Vegas?

- 08:38 Sigríður: What is your favorite standing desk and why? What are the specs?

- 09:18 Peter Seale: VAGUE question: we found that 80% of our db data is used by indexes. Is this normal-ish? Any vague tips for reducing our data usage? Most tips focus on data used by tables, and never mention data used by indexes.

- 09:56 Piotr: What are your thoughts on upgrading from SSRS 2014 where we have numerous reports? Best to migrate to SSRS 2019 or skip entirely and start learning how to migrate to Power BI Report Server? Currently on-prem but planning on moving to Azure.

- 12:54 Maksim: What do you use for motivation to read tech docs and tech books?

- 14:05 PartyPerson: Hey Brent, what is the story behind the “California deserves whatever it gets” sign behind you?

- 15:20 TeeJay: A lot of our reports are computationally expensive and run repeatedly for each subscription. I assume that the solution to this is to pre-aggregate the DW data as much as possible, but suspect that I’m probably re-inventing the wheel. (Budget: £0) What words should I be googling?

- 16:34 Alex: Hi Guru, I have some small tables on Azure SQL DB with very little use. Once in a while a scheduler runs a SP that performs one insert to a table in 2 concurrent threads. I have a gap in identity column. I added TABLOCKX as suggested by docs but problem still arises.

- 18:08 depthcharge: Hi Brent, have you ever encountered a scenario where you indexed to remove an eager index spool, and SQL Server ignores the index and continues spooling? Aside from index hints (which help, but we can’t change the code), any other clubs I can hit the optimizer with?

- 19:13 toepoke.co.uk ;-): Hey Brent, in a recent Office Hours you spoke of encrypting data on the app side rather than the db side, which I found interesting. How would this work from a sorting perspective, e.g. sorting a UI table by last name, first name, etc.? Store the first letter? Store the hash? Cheers!

- 20:51 Q-Ent: Hi Brent, are you aware of MCR (Maximum Consumption Rate) for CPU sizing? Do you think this is a reliable method?

- 22:01 Wren: Hi Brent! Building some reports for our SQL Server environments and I found one of your old (2009) replies on Stack about finding CPU time per database… do you think it’s a worthwhile stat to use to determine “what should move to cloud first”? Any improvements since SQL2008?

- 23:23 Tim.: Hi Brent. I like the Fundamentals of PowerShell. Will there be a Mastering PowerShell? Will you be working with Drew more in the future?

- 24:50 Stone Temple Pilot: How do you measure bad page splits for inserts on a poor clustered index?

- 25:17 Paco: Hello Brent, I have a friend who is facing a server that has both threadpool waits and Non-Yielding Schedulers occurring around the same time, until they cause the AG to fail. Have you seen threadpool waits cause Non-Yielding Schedulers or vice versa?

Is your company hiring for a database position as of February 2023? Do you wanna work with the kinds of people who read this blog? Let’s set up some rapid networking here. If your company is hiring, leave a comment.
