SQL Server 2012 SP3 Adds Memory Grant and Performance Features

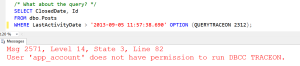

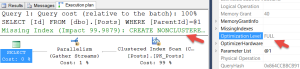

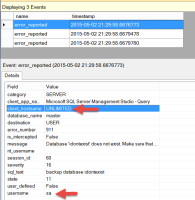

New ways to measure your queries

SQL Server 2012 SP3 has just been released! Not only does this release contain bug fixes, it also contains a slew of performance-tuning features. Many of these features are about memory grants. This is NOT the memory used in the buffer pool/data cache by the…