You, dear reader, are most likely a Microsoft SQL Server user – either a DBA or developer.

Set your pencil down for a second because you’re not going to learn about a Microsoft product today, nor are you going to learn something that is going to be immediately useful to you in your job. Today, I’m writing about a completely different product just to give you a better insight on what else is out there.

Our SQL Server monitoring app, SQL ConstantCare®, uses AWS RDS Aurora PostgreSQL as its database back end. I wrote about that design decision five years ago, and since then, we’ve been really happy with both PostgreSQL in general and AWS Aurora in particular. We just didn’t have to spend time worrying about the database layer – for the most part, it just worked, in the same way Microsoft Azure SQL DB just works.

No matter what database platform you use, costs are split into two main parts:

- The storage layer – where you pay for how much you store, and how many accesses you do (reads and writes)

- The compute layer – where you pay for CPU & memory for the servers to process your ugly queries

Traditionally, us data people have wanted the fastest storage we could get, and the most compute power we could get. That led to problems with management because we bought really good stuff, and then… it usually sat around idle, unused. We had to pay a lot to handle the peaks of our workloads, and then we were locked into expensive stuff that rarely got hit hard.

Serverless can reduce those costs while simultaneously handling more bursts.

To help reduce costs and improve flexibility, several years ago, AWS introduced Aurora Serverless. Instead of paying for fixed server sizes (like 8 cores and 60GB RAM), Aurora Serverless:

- Watches your query workload

- Automatically adds & removes CPU & memory on the fly

- And reacts automatically in milliseconds without dropping queries

Not minutes. Not seconds. Milliseconds. That’s bananapants. You just define a minimum and maximum size for your database servers, and Aurora automatically handles the rest, independently, for each replica. Instead of provisioning an 8-core, 60GB RAM server, you could say you want your config to swing anywhere from 2 cores and 4GB RAM all the way up to 64 cores and 128GB RAM. (More on the exact provisioning later.)

Somebody at Microsoft is going to be tempted to pipe up, “Yeah, we have serverless in Azure SQL DB too,” and I can’t help but respond in meme form:

Yes, that’s actually in the documentation, and it’s why you’re not hearing a lot of buzz about serverless here in the Microsoft & Azure communities. Azure balances load in minutes, not milliseconds, and drops connections at the worst possible time – when you’re under heavy workloads and the server isn’t able to keep up.

I suppose that’s serverless, in the sense that you lose the database server temporarily? Let’s move on.

Aurora Serverless was a perfect fit for SQL ConstantCare®.

SQL ConstantCare® consists of a small app that clients install and point at their SQL Servers. Once a day, that app polls all of their SQL Servers, exports diagnostic data to JSON files, encrypts it, and sends it to us. We can’t predict when it’s going to happen – it’s based on the schedule that users set up on their end, which tends to be even-hours (like noon or 4PM local time in their own time zone.)

When the files come up to us in AWS, we:

- Decrypt & extract them, and then load their contents into a database

- Run diagnostic queries, checking for problems, and build a list of things to warn them about

- Send them emails with advice

That application logic layer has been serverless (AWS Lambda) all along, and I wrote about that design decision as well. That’s paid off really well as we scaled to thousands of client servers because the workload is really bursty. We don’t wanna pay for lots of app servers to sit around idle most of the time, but when client files come in, we want to process the data as quickly as practical.

The problem right from the start? The database layer! AWS Lambda would see lots of incoming files all at once, spin up lots of workers to process all those files, and then – wham, all those workers would try to tackle the database server at the same time. Richie had to work hard to smooth out the peaks, or else we just kept DDoS’ing our own database server.

By switching to Aurora Serverless, the database could now better handle bursts of incoming files – while simultaneously cutting costs by downsizing capacity for the hours per day that we sat mostly idle.

When you create serverless replicas, you size them in Aurora Compute Units (ACUs). One ACU = 1 core and 2GB RAM. Because we wanted to make sure it worked, we picked:

- Min replica size: 0.5 ACUs (that’s 1/2 a core and 1GB RAM)

- Max: 32 ACUs (that’s 16 cores, 64GB RAM)

And then sat back and watched the replica automatically handle workloads.

Aurora resizes our database servers FREQUENTLY.

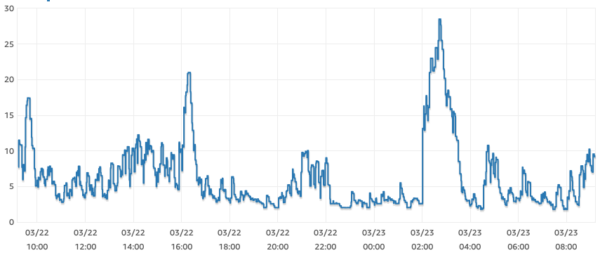

Here’s an average of the primary writer’s ACUs over a 24-hour span, in 1-minute intervals:

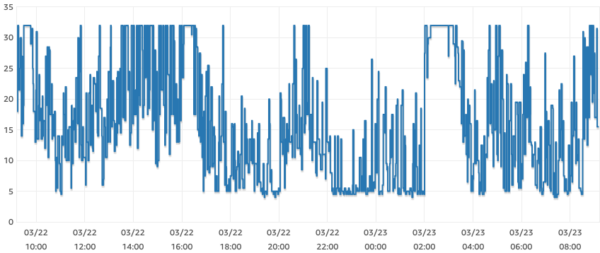

Don’t worry about the time zone or peak hours – just think about this being a 24-hour time span. On average, we have a few different bursts per day, but we generally range from around 2-3 ACUs (cores) up to around 28 ACUs (cores). But here’s where it starts to get wild – instead of looking at averages, let’s look at the MAX in each minute range:

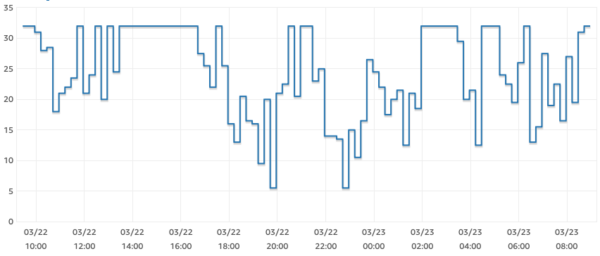

Aurora is frequently slamming the gas pedal, taking us right up to 32 cores! We’re maxing out at 32 cores all the time throughout the day. And conversely, the min in each minute:

It’s slamming the brakes right down to 1-2 ACUs all the time.

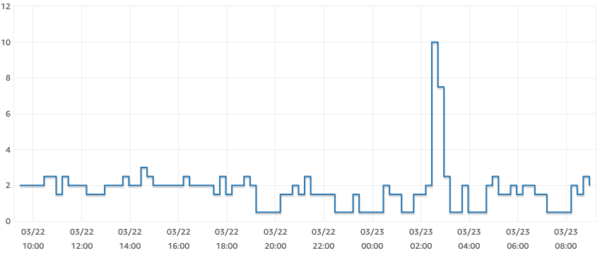

Lemme rephrase those 3 charts: in many one-minute time spans of the day, our Aurora database server scales up and down automatically from 1 core to 32. All day long. In fact, it’s rare to have a 15-minute time span where Aurora didn’t ramp capacity up and down like crazy. Here’s the same set of charts in 15-minute intervals – first, maxes:

In most of the day, in any given 15-minute time span, we were hitting 15-32 cores. But for mins:

It dropped right down to 1-2 cores.

Again, this is night-and-day different to what Azure is doing. Microsoft’s all, “Hey, let’s think about this for a while and transition up and down over the span of a minute or two.” Amazon’s reacting with lightning speed, so as each query comes in, it gets the power it needs and our apps don’t notice the difference.

Admins have to ask new questions.

Because Aurora’s reaction time is so fast, it opens up totally new questions for performance tuning and cost management.

“Are we doing the right database maintenance?” Resource-intensive queries literally cost you money. We were spending around $50/day for storage throughput at one point, and one day’s cost suddenly shot to $250. After digging into AWS’s Performance Insights (which is fantastic, by the way), we determined that a surprise automatic index maintenance job had cost us $200. That experience changed the way we think about index maintenance, and for SQL Server admins, that would be especially eye-opening. Are you sure you need those index rebuilds? Can you prove that the server is actually getting faster for your money? Odds are, it’s not.

“Should we raise or lower the max ACUs?” If we raise it, then resource-intensive queries might be able to finish more quickly – but scaling up compute power doesn’t always result in a linear speed-up of queries. Just because you go from 16 cores to 32 cores doesn’t mean big queries finish exactly 2x faster. The question morphs into, “How low can we go? How low can we set the max without hurting our most valuable queries?”

“When we change ACUs, how does that affect IOPs?” When you add more ACUs, you get more memory available to cache data, which means you hit storage less frequently. When you reduce ACUs, you’re likely going to hit storage more. There are no dials to set for storage performance – Amazon manages that for you automatically, and just bills you for every IO you do. There’s no easy across-the-board answer here, either – the answer is going to depend on your own workloads. For SQL ConstantCare®, we’re constantly importing new data and querying it, so cache is less useful to us.

“Does serverless make sense for our workloads?” Does this scale up/down, cost up/down actually benefit your application either in terms of lower cost, or faster performance? For our particular workload, it was really funny when we first switched over – Aurora Serverless was almost exactly the same cost as our previous pre-sized, inflexible servers, hahaha! However, by tuning our max ACUs, we could more easily find a sweet spot where we could reduce costs without harming performance.

But here’s my favorite part of Aurora Serverless: it gives us the ability to run new product experiments without worrying about capacity. For example, since the very start, I’ve always wanted to have a completely free tier of SQL ConstantCare that alerts you about the most vital issues, like missing backups, database corruption, or an urgent patch. Before moving to Aurora Serverless, I didn’t want to test that out because a huge wave of free adoption might cause us database performance nightmares. Now, the database server isn’t holding us back from those issues. (So stay tuned for some fun experiments!)

“Does migrating from SQL Server to Postgres make sense?” By this point of the post, you might be jealous of this cool technology. Remember, though, that right at the beginning I told you that today’s learning wasn’t going to be too relevant to your current job. You don’t really wanna change an entire application’s back end from one database platform to another, regardless of how easy Babelfish might make it seem. Back end migrations rarely make sense. However, I just wanted to talk about this technology today so you could see what’s going on in other database platforms.

Besides, Microsoft’s surely working on similar capabilities for Azure SQL DB. I love how cloud databases turned into an arms race because they’re a real differentiator for cloud vendors.

If you liked this post, you’ll probably enjoy Ed Huang’s musings on building a database in the 2020s. He asks, “If we were to redesign a new database today from the ground up, what would the architecture look like?” To be frank, it wouldn’t look like Microsoft SQL Server, and between his post and mine, you’ll understand why. I’m not saying SQL Server is dead, by any means – but if you’re a developer building new-from-scratch applications in the 2020s, SQL Server probably isn’t your first choice, and that’s why.

19 Comments. Leave new

Recently, I’ve had my head buried in Snowflake cost data, especially around the stop-start times of our various “virtual warehouses” (kinda like servers) across the whole Enterprise Data department. It has been pretty eye-opening to observe what an “on-and-off” database environment looks like for various types of workloads. (Dev envs, production trickle workloads from human users, batch jobs, hybrid workloads, etc).

I was a loyal and enthusiastic MSSQL DBA 5 years ago, with a suspicious eye towards the newer platforms, and definitely set in my ways. But it is just so much easier to do certain things w/Snowflake and similar platforms. I never want to go back. Right now Synapse (and from your post Azure SQL DB) seem like they are several years behind the curve. Hopefully they’ll catch up, but the competition is pretty fierce.

Yeah, from what little I know of it, Snowflake was a brilliant reinvention of databases to solve the data warehouse problem.

Well… I hope this will ring some bells in the head of the team that is supposed to implement Azure SQL Database Serverless.

I think they’re aware. Azure SQL DB Hyperscale was an interesting quick reaction to where Aurora is going, and I think it’s got a lot of potential, but yeah, Microsoft still has a lot of work to do there to catch up.

Great blog post. Always cool to learn what’s going on with the other side of the fence.

Hopefully, Microsoft will read this post and not take AWS Aurora lightly.

Thanks, glad you enjoyed it!

Thank you for giving good example. My company embarked on SQL-> Aurora PostgreSQL journey 3 years ago. We migrated about 60 databases and took a pause. I/O cost had been a big issue. Legacy code with bad queries can cost way more in I/O than compute. So we are taking a cautions approach – calculating current I/O and making estimates on how much it’s going to cost us in cloud. If it’s too high, need to fix on-prem code first. Serverless is perfect if there are spikes in activity but if there is traffic most of the day it’s more costly than regular RDS instance. Which you can configure with Auto scaling up to 15 readers.

Question: If your serverless spins up to 32 ACU every minute (even for a second), aren’t you end up paying 32 ACU cost for entire 24 hours? I thought there was a minimum time period for ACU you require to pay which is at least a minute?

It doesn’t spin up to 32 ACU every minute – reread the graph right after the sentence, “Here’s the same set of charts in 15-minute intervals – first, maxes:” It shows that there are plenty of 15-minute time spans where we don’t hit 32 ACUs.

yes you are right. I misread the context of previous sentence. Thank you.

Does this mean Microsoft has got too fat and happy with its SQL Server dominance?

Is it on the same trajectory Internet Explorer followed?

Really interesting post Brent.

Our organization has taken a multi-provider approach depending on the system type. Application/system processing is migrating to use AWS, whereas the operational reporting/analytics (where I sit in the business) is going down the GCP route, so will be interested to see how their auto-scaling works in practice.

I’m also impressed by the work being done by Google and the open-source community on PostgreSQL and AlloyDB for PostgreSQL. I think it is overdue to start learning the intricacies of Postgres query planning and indexing.

What are your thoughts about azure sql serverless hyperscale that is in public preview? Sounds like they promise scaling without downtime.

https://techcommunity.microsoft.com/t5/azure-sql-blog/automatic-compute-scaling-with-serverless-for-hyperscale-in/ba-p/3624440

I haven’t used it yet.

If your SQL Server database meets Babelfish limitations (check https://docs.aws.amazon.com/AmazonRDS/latest/AuroraPostgreSQLReleaseNotes/AuroraBabelfish.Updates.html), which are being removed from release to release, Babelfish could be an easy migration path for your SQL Server workload. And it will also benefit from all the advantages of Aurora as Babelfish is a feature of Aurora for PostgreSQL.

Alex – do you perchance have any commercial ties to Amazon and Babelfish?

If so, it’s good etiquette to disclose those relationships when you advertise a company’s products in a way that makes it sound like you’re independent.

I only suggest that because you’re using an Amazon email address, and what you’re doing is called astroturfing, and it’s the mark of an absolute and utter scum bag.

You’re not a scumbag, right?

So if you push your employer’s products, disclose that relationship.

Astroturfing is fun. I do it all the time

Brent,

I used my work email, so I am not hiding my affiliation with Amazon AWS. From an SME like you, I would expect a substantative answer, not just derogatory remarks. If you have nothing to say about Babelfish – nothing wrong there, just state it as it is.

BTW, Babelfish is not and Amazon product – it is open-source and bersides AWS, it is also available on other clouds.

Regards,

–Alex

Alex – was your email and Amazon affiliation disclosed to the readers?

Look closely at your comment before answering.