I bet, dear reader, that this is the post you’ve been really curious about in my behind-the-scenes posts.

Back in the post about the data we collect, you may remember that our initial goal was to analyze high-priority issues like missing backups or corruption check, priority boost on, offline NUMA nodes, etc. To do that, we take data straight out of SQL Server’s DMVs, and you can see those DMV queries in %localappdata%\ConstantCare\Current\Resources\Queries. ConstantCare.exe runs those queries, exports the data to one JSON file per query, zips them, encrypts the zip file, and uploads it to us.

The armchair architect league might wanna query those files directly, or load them into an on-demand database like Redshift, or put them in a document database. Thing is, we wanted to leverage spare consultant time from me, Erik, and Tara in order to build diagnostic queries across your DMV data, and…writing queries for those platforms is, uh, challenging at best (NSFW.) If you imagine a Venn diagram of people who know SQL Server troubleshooting, and people who can write SQL query, it’s a pretty good overlap. Now imagine that same diagram, but for people who know SQL Server troubleshooting, and people who can query a NoSQL data store. Yeah. Good luck with that.

So call us old-fashioned, but we wanted to put the data into a relational database.

We ruled out Microsoft SQL Server and Azure SQL DB.

I know, I know. The Microsoft geek in you – and me – wants us to use SQL Server on the back end, but here’s the deal: SQL Server is not self-managing or cheap. (After all, you and I make very good livings keeping SQL Server up and running, right?) Whenever possible in this product, I wanted to use something inexpensive that somebody else managed for us.

Azure SQL DB might seem like a valid contender, but like I wrote in an earlier post, Microsoft didn’t have a function-as-a-service platform available a couple years ago when we started building SQL ConstantCare. That would mean running our app code in AWS, and the database in Microsoft Azure. As Jeremiah once said, “Heeeeellloooo, laaaateeeennncccyyyy.” Even worse, until this year, Azure SQL DB didn’t even support VNETs, which made security management much, much harder for code that runs in AWS Lambda functions.

Today, if we were going to start building it all over again, Azure SQL DB would be a valid contender. It still has one big gotcha: cost. It’s twice as expensive as the leading brand:

| What you get for… | Azure SQL DB | Brand A |

| Around $200/mo | 1 core, 7GB RAM | 2 cores, 15GB RAM |

| Around $1,700/mo | 8 cores, 56GB RAM | 16 cores, 112GB RAM |

So, uh, what is this Brand A, you ask?

Meet AWS RDS Aurora.

Aurora is Amazon’s custom version of MySQL and PostgreSQL. Here’s a 2-minute marketing video targeted at managers, but it paints a pretty good picture as to why the small business guy in me was sold:

And this one-hour technical video explains why the DBA in me was sold. (Don’t bother going past 40 minutes – it switches to Q&A.) In this video, when he says “PostgreSQL,” he’s talking about the conventional boxed product (like SQL Server) that you manage yourself. When he says “Aurora,” he’s referring to this new cool implementation of PostgreSQL (kinda like how Azure SQL DB is for Microsoft.)

You don’t need to know anything about PostgreSQL for that video – your SQL Server knowledge will let you keep up. It moves fast: within the first 5 minutes, he explains some of the automatic failover magic baked in with the Amazon S3 storage integration. In the next 5, he digs into why they don’t have to screw around with checkpoints. In 10-15 minutes, he covers how it even affects inserts into a B-Tree.

My favorite part about Aurora is that from the front end, it’s just plain old Postgres. That means we’re not locked in – if AWS discontinues Aurora or starts charging too much for it, we can move to self-hosted MySQL or Postgres (or RDS PostgreSQL, too.)

Aurora offers fancy stuff like readable replicas (up to 15, across up to 3 Availability Zones), automatic continuous point-in-time recovery going back up to 35 days, encryption, auditing, and easy scalability. We’ve already used the quick scalability, too: we started with an r4.large with 2 cores, 15GB RAM, $0.29/hour (roughly $200/mo) and upsized it to handle the growing user loads last week:

(Note: that terabyte number isn’t how much data WE have, hahaha. We’re only using about 35GB at the moment. And we’re up over 500 servers, it’s just that not every server sends in data every day. More on that in another post.)

Depending on which flavor you pick, you either get MySQL compatibility or Postgres compatibility, but not both simultaneously, so you have to make an architecture decision early on. We probably could have been fine with either, but we went with Postgres. We liked its maturity and feature set more at the time.

Designing a Database for DMV Data

In your database at work, you focus on the user tables, and the system tables are kind of an afterthought. They’re just something that you use in order to get your job done better/faster/cheaper. Most of us probably don’t put much thought into how the DMVs/DMFs are designed, whether or not they’re normalized, and how they’re joined together.

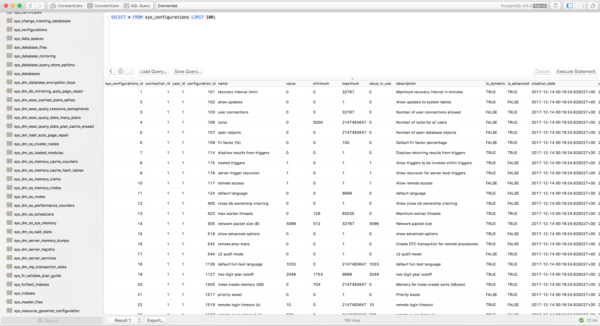

In this project, it’s the opposite: the DMVs are all we care about! But rather than redesigning them to be stored more efficiently or more normalized, Richie just took ’em exactly as-is. Each table has a few columns that aren’t in your SQL Server:

- ID – identity, primary key, clustered. Different names per table, so in sys_configurations, the ID is sys_configurations_id.

- connection_id – corresponds to one point in time for one SQL Server instance. Each time you upload data for a server, a connection is made. You’re welcome.

- user_id – matches up to a customer. Yeah, technically, a connection_id can only belong to one customer, but Richie went a little paranoid so we could double join for security purposes.

- creation_date – when the row was added. (Yeah, that’s not really normalized either, because you’d think that we could store that at a higher level, but I’m not gonna tell you all our magic secrets in this blog post, alright? Also, this was probably a mistake.)

Here’s how it looks:

Obviously, that’s not SQL Server Management Studio or SQL Operations Studio – those are SQL Server tools only. For Postgres, the most commonly used tool is pgAdmin, and it’s alright, but just alright. After trying a few different tools, I fell in love with Postico, shown above. I’m a Mac user, and Postico feels like a database tool that was designed for Mac database developers, by Mac database developers. It’s not pretty, by any means, just very minimalist. It gets out of the way.

The first cool part about having DMV data in a database like this is that I could take a lot of my existing diagnostic query knowledge and port it straight over to this new database. When I’m doing research for a customer to figure out why they’re having an issue, I don’t have to stop and think about how to query the data – it’s just like querying their SQL Server’s DMVs.

The second part – and this, dear reader, still just blows my mind – is that if I wanna see what changed compared to yesterday, I can simply join to yesterday’s connection_id for this server and find out. Wanna see if someone changed a config setting? Wanna see if this is a new problem, or it’s been persisting through time? Wanna see if a metric has been getting worse or better? It’s all right there in the database. Long term, I can even imagine us giving you an export of this data so you can query it yourself too.

Wanna learn more? Read my other posts about building SQL ConstantCare®, or check out the product.

10 Comments. Leave new

Great article, I’m working with a startup and we’re currently using RDS for SQL SQL server but seriously looking Aurora Postgres to replace the database layer. The price / performance / scaling side are pretty compelling. Also give TeamSQL a try, it’s a pretty decent cross platform (OS and database) client.

Thanks! I looked at TeamSQL, and I didn’t understand how they were going to make money (closed source free app), so took a pass. Interested to see if it takes off though.

For a side project I was looking into Postgres but I’ve been spoiled by SQL Server tooling so much that I decided to go with SQL Server instead. I don’t understand how people can work with such poor tooling. Even the $200/year IDEs for Postgres just suck. After seeing that I could see why people want to make their interfacing with their databases simple, if the tooling sucks why would you want to do complex queries?

Actually, Jon, tooling wasn’t a consideration. My workflow mostly deals with a text editor and a command line so having a nice IDE with auto-complete doesn’t matter much to me. We’re also testing most of the Postgres functions with Node.js and Mocha. No SQL Server IDE supports that workflow (I’m using Visual Studio Code for that). That being said, I’ve moved on from pgAdmin and I’m now using DataGrip. DataGrip, for my needs, has more than enough features.

I’m not saying that your choice is bad or anything. Just saying why I find it hard to move to PostgreSQL. The tooling sucks. I like to have the confidence that the columns exist that I type in are actually there and not misspelled as I type. I like to have the tooling like SSDT that can work like a C# programming compiling and telling me if anything is broken (it’s not a perfect tool and not nearly as good as the C# compiler but I find it better than nothing). A lot of testing can be avoided just by having static types and proper tooling. Then your tests can focus on the business use case rather than, “Does that column exist that I expect to exist?” Granted with a functional language you can even program out any bad business cases too.

I wish PostgreSQL did have better tooling since I hear so many great things about it. But it is just to big a hurdle for me right now unless I was doing it full time for work. But even then I’m not sure if it would be worth it.

PostgreSQL has a lot of the metadata information available to make it possible to get some great tooling/code generation from it. I don’t think many people have capitilized on it yet. Neither for SQL Server, for some reason people haven’t taken advantage of much of the great tooling out there. It confuses me to no end why we write so much code when we don’t need to.

Jon – makes sense, sounds like you’re the kind of customer that Microsoft continues to target with easy-to-use tools like Access as well. They make a ton of money catering to folks who want a really easy-to-use data platform, and it’s working well for them.

Everything’s a tradeoff. I use Apple gear for daily computing – it costs me more, but for me, the ease of use and reliability is worth the extra money. (That’s not the case for everybody – I totally get it.)

In our database back end, when we’re talking about thousands of dollars per month that come out of my own personal pocket, I don’t mind spending a little extra time writing queries the “hard” way (remembering syntax, for example) in order to save that money. I’m guessing – just guessing – that your database choice is driven by your company’s tooling choices, and they foot the bill for your tooling. When the SQL Server licensing starts coming out of your personal pocket, you might find your opinion changing.

Thanks for stopping by though!

And I’ll mark myself very fortunate indeed if and when I ever find myself in that predicament.

Thanks for sharing your business decisions. It’s been very insightful!

One man’s mistake is another man’s metadata…

I have worked with multiple database engines in multiple software houses since the 1980’s including using Oracle, SQLServer, MySQL, DB2 and all the others over the years. The simple fact is that SQLServer is the fastest interface for producing code for developers producing any meaningful code complexity.

That might be true, but it’s not the only factor involved in choosing a database.

If the fastest to finish was the only thing that mattered, we’d all be driving Ferraris.