Made a New Year’s resolution to lose weight this year? Forget taking it off your waistline – take it off your database instead with:

sp_BlitzIndex @GetAllDatabases = 1, @Mode = 2, @SortOrder = ‘size’

Or:

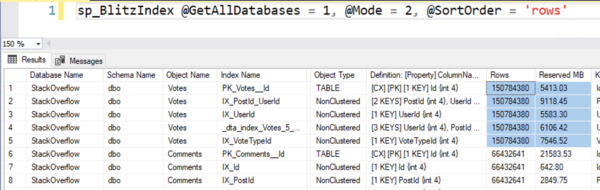

sp_BlitzIndex @GetAllDatabases = 1, @Mode = 2, @SortOrder = ‘rows’

This produces an inventory of all the indexes sorted by size on disk or the number of rows, descending:

You can copy/paste that into Excel, send it around amongst your teams, and start having discussions like:

- “Why do we have a 50GB heap called Sales_Backup_BeforeTheDeploy?”

- “Why do we have a 200GB table called ClickLog with over a billion rows? I thought we were purging that table nightly?”

- “Weren’t we supposed to archive the SalesHistory table?”

Things to be aware of when you’re reading the results:

- The Rows & Reserved MB columns are way off to the right. I just rearranged the SSMS output for this screenshot. That’s one of the reasons I typically do this analysis in Excel – that, and the fact that I like to highlight offensive rows.

- The Last User Seek, Last User Scan, and Last User Lookup columns can help identify when the index was last read, but it’s not entirely accurate for a lot of reasons that we discuss in this module of the Mastering Index Tuning class.

- The Last User columns are also incremented by any query, including things like your homegrown fragmentation scripts checking the contents of a row or a homegrown ETL package. Even if it’s run automatically by your apps, that’s still a “user” query to SQL Server.

That’s it. That’s the whole post. See, my blog post lost weight too.

14 Comments. Leave new

Tried it as shown above, it errored out on the single quotes around “rows”. Removed them and it’s running now.

Thanks as always for your posts!

Having just tried your suggestion, I would add to the list of possible discussion topics “Who put AdventureWorks on the production server?” In light of the “great Resignation” we probably can’t fire whoever did that. Besides, I have a bad feeling that it might have been me! 😉

I help support a system which has over 20 million rows in an active and historical table (I mean a heap) has about 128 columns wide it’s about 32 GB and with the indexes around 70 GB.

The product owner doesn’t want the historical data trimmed but another person (BI) working for the business realises the size is restricting the performance of the system.

As we are cautious the system won’t change but I can’t see an easy solution.

Partitioning the table (heap) doesn’t seem like a solution other than reducing the creation of forward lookups. I was thinking is there a way to split the table into two and use a view to amalgamate them but I don’t think this will resolve the issue.

The only solution I can think of would be to create a column store index of the required data, hopefully a subset, so the BI guy and pull his data out fast.

I think my index will remain fat for the foreseeable future unless some has a suggestion?

Once the historical data has gone through the ETL process and is available to BI queries from elsewhere (data lake, warehouse, etc.), what is the justification for keeping it in the OLTP database? Or are you saying there is no ETL process and the only thing the BI guy can query is the OLTP database? If the latter, you may want to suggest the former.

Hi Mark,

Thanks for the response.

I don’t think the business really understands our BI processes and we don’t have other areas to use ETL processes to archive the data into.

So no we don’t have ETL processes to migrate the data to an area for analysis and the business is against complexity so the simplest solution is to not change anything and for reports to run slower…

Regards.

Maybe a silly question, but did you enable page compression? Might save some GBs.

Hi Koen,

Thanks for your suggestion but I think Brent is lighting up his flame gun as I think this is going off-topic.

I think I’ll be stuck with fat indexes but it seems like a good reason to start to use column indexes so I can understand them better.

Create archive table in the same db and move historical data, then create columnstore index on top of the archive table, that might save lot of space and you can have better Read performance, if table structure support col store index.

Great tip! One question though…

What is the syntax to list the indexes sorted by size on disk for a single user specified db?

Tried the following but didn’t get any rows back.

exec sp_BlitzIndex @DatabaseName = ‘AdventureWorks’, @Mode = 2, @SortOrder = ‘SIZE’

Your syntax is correct, but AdventureWorks is a romantic comedy of a database. The objects are too small to bother with. You can change that with the threshold parameter for sp_BlitzIndex, but what I’d do instead is start working with your real world databases rather than AdventureWorks. 😉

Hi Brent, great article as always.

But a question remains, the phrase: “Weren’t we supposed to archive the SalesHistory table?” is a classic one but when we sit around in a meeting no one knows how to move. I haven’t found any article on your blog giving guidelines. On internet there are a few tepid approach but a “4 way to archive data in SQL Server” is the instructions people search for

I hope I gave you inspiration for your next blob post 😉

Archiving data is pretty far beyond a blob post. That’s where consulting comes in.

From Database to Dietbase is a moment! 😉

Thanks Brent, an inspiring way to start a new year!

Happy NewYear Brent

Great post, I like it!

This one is good. “Why do we have a 50GB heap called Sales_Backup_BeforeTheDeploy?”

Well I have tried and still trying to convince my customer to push back at the supplier to get them to clean/archive it. Mine is just a lillte heap with 2,8TB

But I just love it. 🙂