Even if you’re not writing to different physical drives, you can get faster backups with Microsoft SQL Server when you back up to multiple files. Your exact numbers are going to vary based on your server and your utter inability to rub two sticks together to make fire, but let’s see how I do.

Here’s the hardware I’m using:

- 96-core, 768GB RAM i3en.metal AWS EC2 instance with 8 7.5TB NVMe SSDs

- SQL Server 2019 Developer Edition CU6

- Stack Overflow 2020-06 database (about 381GB)

The i3en.metal has 8 7.5TB NVMe SSDs. The Stack Overflow database ships on 4 data files and 1 log file, so I’m putting each of the data files on a separate NVMe SSD. That means the database in this post probably isn’t configured exactly the same as yours, but the results are still relevant. I’ve seen similar performance gains for larger databases that have just one data file in ’em.

During the tests, I use between 1 and 4 backup files, and in each test, I explain whether all of the backup files are on the same drive, or a different one – but in all cases, they’re in different drives from the data & log files.

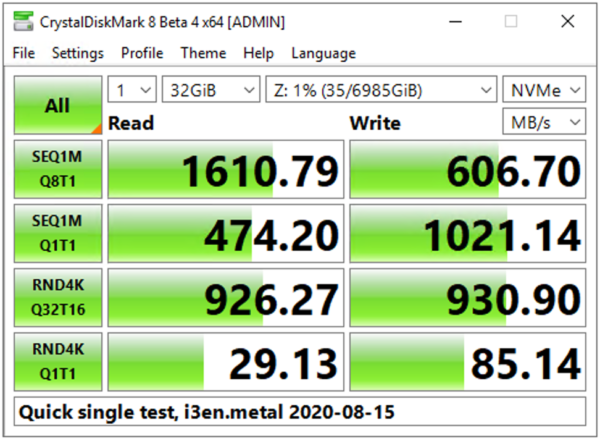

Each of the NVMe drives are pretty quick by themselves:

I wanted to use this monstrously big hardware (especially relative to the database size) to illustrate that we’re not talking about hardware limitations here. Even if you’re on big metal, multiple backup files can help. Also, all of these tests use backup compression turned on, and I wanted to remove any hint of a CPU bottleneck.

You, dear reader, are going to ask all kinds of “but what about” questions. My role here is not to do your own backup testing for you: my role is to inspire you to do your own testing in your own environment so you can find the right answers to your own questions.

Test round 1:

Backing up to multiple files on one volume

In this test, the backup files were on one of the local NVMe SSDs, but not the same volume that the database’s data & log files lived on.

- 1 backup file: 9.1 minutes

- 2 backup files: 7.9 minutes (13% faster)

- 4 backup files: 5.1 minutes (43% faster)

- 8 backup files: 6.2 minutes (32% faster)

This isn’t meant to be a definitive, “Always use 4 backup files” kind of post, but just a starting point as to how much quicker your backups can get with this one easy change. As with all of the metrics in this post, the idea is to get you started on the road of testing your backups for quick wins on large servers.

Test round 2:

Backing up to files on different volumes

In this round, each backup file was on its own local NVMe SSD – until we got to 8 files, since I’ve only got 4 extra NVMe drives on the i3en.metal:

- 1 backup file: 9.1 minutes

- 2 files, 2 volumes: 6.9 minutes (24% faster)

- 4 files, 4 volumes: 4.3 minutes (53% faster)

- 8 files, 4 volumes: 3.0 minutes (67% faster)

Spreading the load over different backup volumes gave me double the speed improvements that I got when writing to a single volume. In this impractical example, I’m using local NVMe, and your server probably isn’t going to have that. However, you can get similar results by writing your backup files to different storage targets that have their own individual throughput that isn’t collectively throttled.

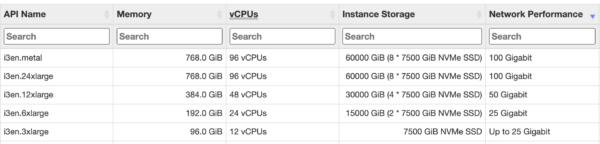

In the prairie days when your grandmother backed up her SQL Server, she hesitated to write her backups over the network because her network consisted of two tin cans connected with string. Today, thanks to the simple ec2instances.info, you can see the network bandwidth available to your instance type, and it ain’t no string:

This is why I love the i3 series: tons of local solid state, plus great network throughput. The network bandwidth alone isn’t enough, of course: you also have to provision fast storage targets on the other side if you need to back up a multi-terabyte backup. Some shops use i3en servers as staging area file servers – landing their backups there to get ’em written quickly, and then migrating the backups to more cost-effective and redundant storage for the longer term. (There are more cost-effective instance types if your backup needs are smaller, of course.)

Test round 3:

Backing up to NUL:

When you’re doing backup speed testing, you can back up to DISK=’NUL:’ and SQL Server doesn’t actually write the backup file to disk – it just discards the data. This helps measure how fast SQL Server’s backup processes can read the data from disk and compress it on this particular server.

- 1 NUL file: 9.4 minutes

- 2 NUL files: 6.8 minutes (28% faster)

- 4 NUL files: 4.3 minutes (54% faster)

- 8 NUL files: 2.9 minutes (70% faster)

The numbers here are useful in comparison to test 2’s numbers: when writing to very fast backup volumes, you can actually approach the speed of simply discarding the data! In the 8-file example, if I just throw the data away by backing up to NUL, I finished in 2.9 minutes. Actually writing the data out to local NVMe SSDs got me there in 3.0 minutes. I’ll take it.

This is performance testing,

not configuration advice.

When your databases are just 50-250GB, and when your company is only active 9AM-5PM, this kind of thing doesn’t matter much. As your databases grow, and as your company demands constant uptime with short recovery times, this stuff matters a lot. It’s awesome that with just a little effort, you can dig in and start optimizing your backups for quicker recovery and less impact to your end users.

To start your own backup tuning project, check out Nic Cain’s automated backup tuning scripts. He automates the process of running dozens of backups with different settings – way more than just file count – in order to find the combination of parameters that works best given your database contents, where your data & log files live, and where you want your backups to be written.

In shops with ambitious RPO/RTO goals, you can use this same level of tuning for your restores, too. I wrote about that in my Choosing and Testing a Cloud Vendor post when I helped a company analyze the best VM architecture for their SaaS hosting needs.

Didn’t know about this? You might have missed out on some of the other things we talk about in our Fundamentals of Database Administration class.

37 Comments. Leave new

Hi Brent

I was looking into Nic Cain’s blog but didn’t find the tuning scripts. Are they on github? I couldn’t find them neither there.

Greetings

Diego – they’re cleverly hidden at the end of the post in a section called “Downloads.”

Sorry, by “couldn’t find them” I meant: Oops! That page can’t be found. So, they are missing links.

By the way, thanks for the awesome post!

Gotcha – for questions on other peoples’ stuff, can you contact them? Thanks for understanding!

Of course, I’ll keep in mind. Thanks Brent

This is a solid tip. I just did a COPY_ONLY backup of my 527 GB Reporting DB and it only took 10.5 min for 8 files to the same drive. Normally, it’s 27 minutes.

I wonder what it will do for my 6.58TB primary DB. Should be interesting. The backup now takes 7 hours.

SteveSC – awesome, glad I could help!

Looks like you get a bonus on the restoration side as well. The single file restore takes 19.75 minutes, the 8 file restore took 8 minutes.

Hmmm, that is interesting.

Makes sense if the bottle neck is in the SQL engine. Tasks wait for their turn, their quantum. More tasks with out much more overhead….

Let me test as well.

Why would backup to multiple files on the same drive? Please try again with 1 backup file and parameter BUFFERCOUNT=50. Let me know the result 😉

Martins – feel free to do your own testing and share the results. I wish I could do everyone’s testing for ’em, but that’s why I share my work publicly – to inspire you to learn and do your own testing as well. Jump on in, the water’s fine! It’s a great way to get started with advancing your career.

Perfect timing on this article.

My head still tries to put the steps to flush pages based on LRU, get new data pages, log what went to which file, do other processes and the spin lock function that mediates each one getting a time slice or quantum.

I noticed this behavior a while ago.

I thought it’s related to CPU threads though – that if your box have 4 CPUs, then having 4 files in the backup command utilizes more CPU threads to be used for the activity, but I never checked in depth regarding it.

I wonder what the monthly Amazon bill is for that beast!

“Operating Expense” – Tax write off.

Ohhh to learn and join that group one day – taking others with me.

You lost me at 96 cores,

And at 3/4TB of RAM,

And once again at 50TB of SSD,

The SQL 2019 bit didn’t help…

🙂

Feel free to write your own blog post about your SQL Server 2008 setup running on your Commodore 64. 😉

Yeah, I only have a Vic 20 though with a trusty tape player.

96-core, 768GB RAM i3en.metal AWS EC2 instance with 8 7.5TB NVMe SSDs … holy ….

Andre – I’m going to guess you’re the kind of person who reads sports car magazines and writes letters to the editor complaining that they aren’t testing minivans with 4-cylinder engines.

This is a performance tuning blog, not Minivans Today.

Minivans are highly underrated …

Great! I Testet it with a production DB of 31GB, the full backup time was 50% faster with 8 files instead of 1.

As I have a lot of DB Instances running on my server, this was an important hint to me!

Thank you!

IF you go with more than 1 file, please remember to write a restore script, test it and print it out on paper, just in case you need to do a restore.

Paul Randal said it best: You need to design a restore strategy, not a backup strategy.

https://www.sqlskills.com/blogs/paul/the-accidental-dba-day-8-of-30-backups-planning-a-recovery-strategy/

Or, you could just use sp_DatabaseRestore, which handles that for you automatically. 😉

yes, indeed. But my point is; test it regularly (just in case somebody in IT forgot to backup all the files)

Oh, yeah. Multi-file backups are standard procedure for all of our larger (multi-terabyte) database. I takes our backup process from days to hours!

we’re going to be digging into doing this soon, hopefully using ola’s scripts for us

Hi Brent! I recently installed SQL Server 2019 Express on a Windows Server 2019 and the database is working well with one exception. Around 4:00am every day a backup of all my databases is being performed and I did not set anything up. Is this a new feature in SQL Server 2019?

Hi, Brad. For general questions, head to a Q&A site like DBA.StackExchange.com.

Hi, running a backup on our server using DISK=’NUL:’ only does 117Mb/sec, while on another server it runs around 600Mb/sec, any idea where I can start looking for the bottleneck ?

Unfortunately, analyzing and tuning storage is outside of the scope of this post.

Willem,

Wow, that could be so many things.

What are the wait states like?

Can you run the backup as a script with Stats IO on?

Does performance monitor show high disk usage or queue length ? (reading of course)

Is it windows or LInux?

Did someone put the server in “Balanced mode” [yes, much like other train wreck this happens]

Are the service packs the same ?

Are the database settings the same (Parallelism comes to mind, Collation does not affect this [I think] )

How do CPU type, core, memory and memory type compare?

Do you get the same 117 mb/sec at different times of the day?

Is this hosted or on prem ?

While the output is DISK=NULL, the read is from disk so ….. [down the rabbit hole ]

Are the RAID and disk configurations the same ?

Is it local Disk or SAN or NAS ?

If it is SAN/NAS is one iSCSI or are they both fabric connected ?

If fabric connected Do they go through the same fabric ?

BTW, I’m leaning SDN, software defined networks, and SDDC , software defined data centers, give even less visibility into the hardware and bottle necks. Tests like this may become benchmarks along with database stress test.

Willem, Brent just updated his “First responder kit ” on 10 November. I have not unloaded it

Thank you so much Brent! I’m a relatively new DBA and your content is amazing. I feel inspired to learn and can see I am gaining a lot of value. I ran some tests on this and got a 75% reduction on our backup time over 6 files on a single volume. This is something we are going to implement into our backup plan.

You rock and love your work! ??

Awesome, glad I could help!

Hi, thanks for the inspiration!

VolumeShadowCopy technology seems to me like magic at first glance. But I would guess that it won’t make any sense to combine multifile backup with VolumeShadowCopy-backup solutions like Azure Backup Server for OnPrem (MARS).

Could you comment on this?

Thanks!

Sorry, I’ve never heard of that product, let alone used it.

It’s the new name of Microsoft System Center Data Protection Manager (DPM) when you buy it as a subscription in Azure. Have you heard of DPM?

Yes, but I haven’t used it in over a decade.