In my last post, I talked about how you can get 43%-67% faster backups. Today, I’m using that same SQL Server setup to discuss how throwing more CPU cores might help you check for corruption faster if you’ve already got modern fast storage. I don’t expect everyone to go to this level of detail by any means, but it’s the kind of tuning you can do when you’re facing multiple terabytes of production data per server and shrinking maintenance windows.

You need to check for corruption regularly because SQL Server isn’t doing it for you (although I’ve asked Microsoft to do that, and you can vote for it.) All kinds of things can cause corruption: storage issues, storage networking issues, and even SQL Server bugs like this or this or this or this or this.

However, folks are often hesitant to run DBCC CHECKDB because it has a reputation for being resource-intensive. Good news, though: there are a few easy ways to influence CHECKDB’s impact.

Performance tweak #1:

run it across more cores.

In Standard Edition, CHECKDB is single-threaded. That’s because you don’t need fast corruption checking in Standard Edition because it doesn’t have any corruption bugs at all. Nope, none whatsoever. Those of you on Standard Edition can just ignore this tweak and move right on to the next one.

Enterprise Edition has parallel corruption checking because it’s so much more vulnerable to corruption bugs (is that right? that must be what it is – surely it can’t be a money grab) and since SQL Server 2016, CHECKDB has accepted a MAXDOP parameter that lets you set the number of CPU cores involved. You can also hint it upwards, higher than the server-level MAXDOP, so that it can use more threads even on servers hosting single-threaded apps.

The more you use, the more you save, but the story here is a little trickier than the multi-file backup post. Here, I not only examined CHECKDB’s runtime, but also the amount of wait time generated by the command, and the wait time ratio. Wait time ratio means for every minute on the clock, how many minutes did SQL Server spend waiting? The higher that number goes, the uglier your performance is.

| MAXDOP | Minutes | Wait Time, Minutes | Wait Time Ratio |

| 1 | 53 | 4 | 0 |

| 2 | 37 | 121 | 3 |

| 4 | 26 | 155 | 6 |

| 8 | 18 | 212 | 12 |

| 12 | 14 | 234 | 16 |

| 16 | 14 | 332 | 23 |

| 24 | 12 | 354 | 30 |

| 32 | 11 | 462 | 41 |

| 48 | 9 | 494 | 56 |

| 56 | 8 | 564 | 65 |

| 64 | 7 | 579 | 75 |

| 72 | 13 | 1,052 | 79 |

| 80 | 17 | 1,409 | 84 |

| 96 | 19 | 1,879 | 99 |

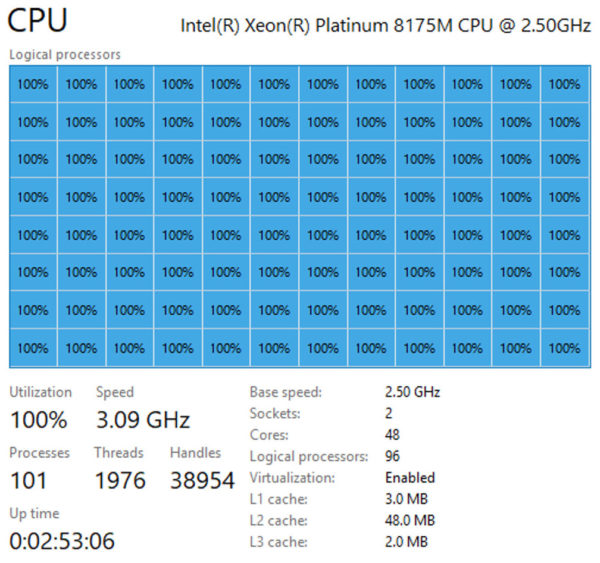

Keep in mind that this particular SQL Server has 48 physical cores, 96 with hyperthreading turned on.

At MAXDOP 64, we’re checking 56GB of data per minute, and:

- CHECKDB runs 86% faster than when it’s single-threaded

- 59% faster than MAXDOP 8 (which is what a lot of folks would be at if they set server-level MAXDOP here)

- 48% faster than MAXDOP 16

- Wait time ratio is 75, meaning for every minute on the clock, we’re generating 75 minutes of wait time, most of which is CXPACKET and CXCONSUMER

- The 64 CPU cores involved stick at 100% for most of the duration (thanks to our awesome hardware provisioning)

I’m certainly not saying MAXDOP 64 makes the most sense for all hardware, but in this scenario, it would let us keep the maintenance window as short as possible, assuming we have 7-10 minutes each night where we can sustain heavy loads.

MAXDOP 96 runs slower, not faster, and the CPU usage makes the box feel pretty unusable:

Performance tweak #2:

check for less (or more) corruption problems.

By default, CHECKDB checks for the most common issues, but you can make it go way faster if you just ask it to check the checksums on each page. This doesn’t catch logical issues, like corrupt statistics, but it at least gets you the earliest warning signs of storage corruption.

To do this, use the WITH PHYSICAL_ONLY switch – which doesn’t do nearly as much CPU work, so we also see different performance results here:

| MAXDOP | Minutes | Wait Time, Minutes | Wait Time Ratio |

| 1 | 17 | 1 | 0 |

| 2 | 12 | 35 | 3 |

| 4 | 8 | 41 | 5 |

| 8 | 6 | 47 | 8 |

| 12 | 5 | 61 | 12 |

| 16 | 5 | 74 | 15 |

| 24 | 5 | 113 | 24 |

For this particular server, as I threw more cores at it, the only things that went up were my parallelism waits. The sweet spot here was around 8-12 cores.

But this leads to an interesting comparison:

- PHYSICAL_ONLY with 12 cores: takes 5 minutes, only checks page checksums

- FULL CHECKDB with 64 cores: takes 7 minutes, checks everything

On this particular server, as long as I’m running Expensive Edition, I just don’t think it would make sense to use the PHYSICAL_ONLY setting because as long as I’m taking a 7-minute slowdown, if I’ve got the CPU cores available (and they’re not working otherwise), then might as well just check everything. If this was truly a 24/7 environment where I couldn’t handle a 7-minute slowdown, then…I’m probably offloading CHECKDB to another AG replica anyway, especially given the wonderful 2019 licensing changes that make it free.

Furthermore, I might even want to use the EXTENDED_LOGICAL_CHECKS parameter. It catches even more corruption culprits, albeit at the cost of higher runtimes:

| MAXDOP | Minutes | Wait Time, Minutes | Wait Time Ratio |

| 1 | 53 | 4 | 0 |

| 2 | 40 | 130 | 3 |

| 4 | 29 | 175 | 6 |

| 8 | 17 | 198 | 11 |

| 12 | 16 | 292 | 17 |

| 16 | 14 | 321 | 22 |

| 24 | 10 | 327 | 31 |

| 48 | 9 | 492 | 57 |

| 56 | 11 | 731 | 60 |

| 64 | 15 | 1,047 | 69 |

| 96 | 19 | 1,880 | 99 |

It’s interesting to note that the CPU sweet spot for this particular box was 64 cores for the regular CHECKDB, but around 24 cores for EXTENDED_LOGICAL_CHECKS, producing the nicest balance between short runtimes and an overloaded, unresponsive server. (I’m abbreviating some of the results here.) That sweet spot is going to depend on not just your hardware, but also the contents of your database and which SQL Server features you’re using, which brings me to…

Performance tweak #3:

make your database smaller.

The number of tables you have AND the number of indexes on ’em both affect CHECKDB’s speed. All of the tests above involved the 390GB 2020-06 Stack Overflow database, which ships with no nonclustered indexes whatsoever.

To make the database more complex, I added:

- 80 3-column indexes

- 2 indexed views

- 2 nonclustered columnstore indexes

- Bringing the database from 390GB to 560GB (up about 44%)

And then ran a few of the tests again:

- Physical_only, 16 cores: 7.2 minutes – up ~60% from 4.5 minutes, bigger than the 44% database size growth, but I wouldn’t read too much into one-off tests – I wouldn’t use these results to say definitively that physical_only performance doesn’t scale linearly with database size.

- Regular CHECKDB, 64 cores: 30.4 minutes – up dramatically from 7.5 minutes with no indexes, and wait time ratio remained around 75, so the server was really hustling the whole time.

- Extended logical checks, 64 cores: 35.3 minutes – up from 14.9 minutes, but the extended checks runtime penalty (compared to regular CHECKDB at 30.4 minutes) wasn’t as big here as it was for the test with no nonclustered indexes at all. This lines up with what I see out in the field: extended logical checks aren’t that big of a deal on real-life databases with indexes, and they’re totally worth it if you’re taking the gamble on indexed views.

These timings are why it often makes sense to run CHECKDB with the PHYSICAL_ONLY switch during the week in order to get that blazing fast runtime with hardly any CPU usage, and then upgrade to the resource-intensive full CHECKDB with EXTENDED_LOGICAL_CHECKS on the weekend, throwing lots of CPU cores at it to get the work over as quickly as possible.

Didn’t know about this? You might have missed out on some of the other things we talk about in our Fundamentals of Database Administration class.

20 Comments. Leave new

This topic is well timed. Based on one of your demos last week it caused the light bulb to go off in my mind. Why am I not pumping up resources when I run my CheckDB process on my admin server then dropping them down when done. It takes 2 days to test restores and run CheckDB on all my production databases. So my plan is to build a new azure server with 96 cores and 128MB. And I will start the server as part of my workflow, run all my restores and CheckDB and even try to do some in parallel, then power off and deallocate my server.

However, when I asked what type of specs you should look for in doing this on a Twitter post I got an interesting response from Mr. Randal. His order of priority is the IO speed, TempDB drive performance CPU and then Memory.

I am still working on approvals from management on this concept. Then I will take your info and Mr. Randal’s and do some testing and see where the sweet spot configuration is for this setup.

Thanks as always for the thought provoking posts.

Yeah, Paul’s right – you want IO throughput first since it involves reading the entire database as quickly as possible. Thankfully in AWS, the i3 series comes with plenty of free insanely fast NVMe SSD space, so it’s great for these kinds of operations.

2 sockets, 48 cores. What would 4 socket – 48 core (4×12 cores) with similar L2 and L3 on die cache do?

(yes they do make 4 socket DDR4 mother boards for under $1200 [unpopulated]

1) Win lottery

2) Get 4 socket motherboard to test.

3) Populate mother board with dual 1KW power supplies

Test to see if L2 and L3 cache should be over run with so many reads. Yes MaxDOP maxed out at 64 – but inside SQL engine there are many other processes wanting a quantum time slice.

Guessing test #1 woule max out at 80 cores Why? lower core to L2 cache ratio resulting in more hits and fewer pipeline flushes.

4) Tell spouse and friends I won lottery – They would never understand buying *another* computer.

So, if I had a need to improve performance of checkDB on a non-prod instance, is it suitable to drop all non-clustered indexes first and then run checkDB?

Then run a full checkDB periodically ?

If any corruptions are then identified in the non-clustered indexes, then they can just be rebuilt.

Steve – so if storage corruption just happens to strike a nonclustered index…

Do you think it’s always only going to hit them?

Or do you maybe wanna find out as soon as possible and fail outta there before it hits a clustered index and really ruins your day?

That’s a pretty fair point.

I knew it smelt like a bad idea, but wasn’t sure why

Hi Brent

Think you forgot a point

CHECKDB makes a internal snap of DB per table, meaning having to walk buffer each table n times

having huge servers with a lot memory and db on 15+ TB with 1000+ tables, this takes time

In this case doing a snap ourself saves a lot of time

Any recommendation in this area.

Klaus – yep, that too! I didn’t forget that, but just only so many things I can do per blog post. By all means, sounds like you’re qualified to write posts too – start blogging! It’s a great way to share what you know and advance your career.

Hi Brent,

I’m a little confused by your charts. You have a “Minutes” column and a “Wait time, Minutes” column. Which is the total time that DBCC took to run? Is it the “Minutes” column or the sum of the two columns?

Sorry for what might seem like a stupid question but I can’t tell in my current caffeine deprived state. 😀

Howdy sir. Say you’ve got a query that takes 1 minute to run, and during the span of that minute, the query generates 6 minutes of PAGEIOLATCH waits (because it goes parallel across 8 cores, and they all sit around waiting on storage most of the time, let’s say). That’d be 1 minute of time, and 6 minutes of wait time.

The higher wait time is, the more the server is stressed.

You would know that if you attended my Mastering Server Tuning class. 😉

Heh… I’m just trying to clarify what you posted to make it more complete, Brent. I may have missed it but it doesn’t appear that you said whether or not the minutes was the total elapsed time or it the wait time had to be divided by the number of CPUs and added to the minutes to get the total run time. You also said that you didn’t expect people to “go to this level of detail by any means” but I had to to make sure I knew what you were talking about.

Based on your “% faster” calculations, it would appear that the “Minutes” column is the total time for the run. I just wanted to be sure.

And, yep… I know about wait times vs server stress. 😀

p.s. Just to be sure (because the written work frequently sucks when trying to determine intent), no jabs where intended in my original post or my previous one about this fine article.

Heh… lordy… can’t spell today… that was supposed to be “written word” not “written work”. Please pass the coffee! 😀

What would happen to availability secondary replicas by such load (whether checkdb runs on primary or secondary replicas) ? They may get stopped receiving logs from primary, so every time multicore burner dbcc gets running, we may need to check availability replica state and resume any paused ones. Right?

If I understand you right, you’re saying you sized your replicas so small that you can’t run corruption checks anywhere without taking the AG down.

Did I understand that correctly? If so, you tell me – what’s your game plan to check for corruption?

Every IT shop hasn’t enough budget to throw for buying servers just for availability replicas that are responsible just and only for running CHECKDB. There are lots of businesses that have just two servers, one act as primary and other as secondary for reporting purposes.

Can you do me a solid and answer the question, please?

Where are you running CHECKDB today?

Of course on secondary, but with a MAXDOP of no more than 4 to avoid halting the HADR Sync replica. Brent, In this article, I understand your point; you attempted to find a configuration to minimize the downtime window; however, in my experience, I believe this would cause some issues for secondary servers as well, unless you have large boxes like HPE Superdome servers with InfiniBand network components and high-end Pure/EMC storages. I’m worried about pushing average-sized VMs or medium-sized baremetal systems that far.

I think you’re trying to use extremes of an iPhone-sized CPU box versus an HP Superdome to illustrate your point, and I think we’re going to need to agree to disagree here.

Thanks for stopping by, though, and best of luck.

Thanks for your time and your briliant contribution to the community, I’ve always appreciated the opportunity to learn from you.