In the last post, I talked about how we don’t get accurate estimates because SQL Server’s statistics only have up to 201 buckets in the histogram. It didn’t matter much in that post, though, because we were using the small StackOverflow2010 database.

But what happens as our data grows? Let’s move to a newer Stack Overflow database, the 2018-06 one that I use for my Mastering training classes. We’ll create the same index, giving us the same statistics, and then look at the histogram to see how Miami grew over time:

|

1 2 3 4 5 6 |

USE StackOverflow; GO CREATE INDEX Location ON dbo.Users(Location); GO SELECT COUNT(*) FROM dbo.Users; GO |

Now we’re up to about 9 million users:

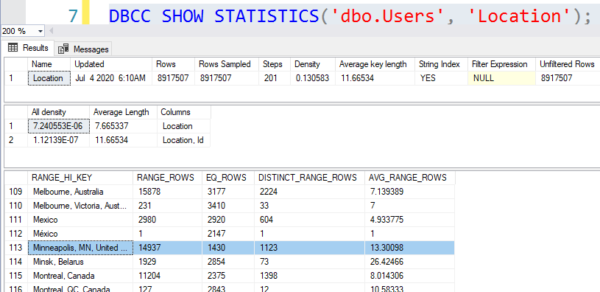

And the statistics histogram for Miami’s area;

The data distribution has changed, but Miami still isn’t big enough to have its own bucket. Now:

- Miami, FL is between México and Minneapolis (note that Michigan no longer has its own bucket)

- AVG_RANGE_ROWS = 13.3 means that any location in between México and Minneapolis will get an estimate of 13.3 rows

So when we run our Miami query, what’s it look like now:

Estimated number of rows in Miami, FL is 13, but in actuality, 625 rows come out of the index seek. The variance is starting to grow now that our data sizes have grown larger.

So what do we do about Miami’s inaccurate estimates?

Again, in this case…nothing. 625 rows vs 13 rows isn’t really that big of a deal. In my Fundamentals of Query Tuning class, I talk about how you want to read query plans from right to left, looking for places where estimates vs actual are 10x off or more. In this case, we’re 48x off, but…it doesn’t really matter that much. SQL Server still allocated enough memory to do the sort, and it didn’t spill to disk. (Note there’s no yellow bang on the sort operator.)

However, as I noted yesterday, it will become a big deal if we join to other tables. Let’s see what happens if we add a join to Comments, for example, to display the top comments left by people from Miami:

|

1 2 3 4 5 |

SELECT TOP 1000 u.DisplayName, c.Score, c.Text FROM dbo.Users u INNER JOIN dbo.Comments c ON u.Id = c.UserId WHERE u.Location = N'Miami, FL' ORDER BY c.Score DESC; |

The actual plan:

We read plans from right to left, top to bottom, to understand what SQL Server did in what order. (You can also read from left to right in some cases, and I’m just legally required to say that, because if I don’t, Grant Fritchey and Hugo Kornelis will smother me as I sleep.)

So from right to left:

- SQL Server did an index seek on Location = Miami, FL, and expected only 13 rows to come back

- In reality, 625 rows came back

- So we also had to do 625 key lookups

- We had to do 625 index seeks on Comments.UserId because each person has their own set of comments

- In total, we found 14,176 comments left by people in Miami, FL, so that poor Sort operator never stood a chance. He has a yellow bang because he ended up spilling to disk.

So, really, now, seriously,

what do we do about Miami’s inaccurate estimates?

In this example, the query still runs fairly quickly. But if you run into this kind of problem with a larger query, larger spills to disk, larger estimate variations, and so forth, here are some techniques you can consider for performance tuning:

- Breaking the query up into phases using techniques like temp tables

- Query hints, like different cardinality estimator versions or optimization hints (like optimize-for, asking for a larger grant, etc)

- Newer compatibility levels with tricks like adaptive joins or adaptive memory grants

But the big takeaway for me is that 201 buckets in a histogram just isn’t enough to portray real-world data skew, especially as your data size grows into the millions of rows and beyond.

14 Comments. Leave new

It would be interesting to see what the economic cost is on a cloud platform in terms of CPU cycles (if any).

On that one, it’s practically nothing – it’s just an example to show how estimates gradually start to go haywire. When I see real-world production examples, it’s much more dramatic, though.

Have you had any good results with filtered statistics for this type of problem? When I tried in the past, I couldn’t get the estimator to actually use the filtered statistic as the source of the estimate. This was a few years ago, so I don’t remember all the details, but if I recall correctly they won’t be used for joins.

I wonder what MS’s thoughts on adding more buckets are. Surely the resource tradeoffs for adding more buckets are much different today than they were in the 80s or 90s when this bucket count was established.

You’re not alone: I haven’t had good luck with filtered stats either, especially in real-world scenarios like this where there are hundreds or thousands of values that get bad estimates, not just Miami.

I didn’t test it with filtered statistics specifically, but with filtered indexes you may need to add an OPTION RECOMPILE to your query, if you do not hardcode Miami (but use a variable / parameter instead). If this is worth the effort depends, how often this query will be used (number of recompiles per minute) and how much you save.

I still haven’t tried column indexes, but in my thinking a column index is like statistic on steroids. There should be entries for each unique value. Right?

You mean like the index we create at the beginning of the post, here:

CREATE INDEX Location ON dbo.Users(Location);

I think he means column store indexes.

Yes, thank you!

Gotcha – he’s welcome to give that a shot. (Going to be honest there – if you don’t know what they’re called, they’re probably not a good solution for your challenges.)

A real world example when filtered statistics did help:

A star-schema DWH with a DimDate table having an integer key in format YYYYMMDDVV where VV is a version during a given day. So 2020010103 would be the third version on the 1. January 2020. Usually there are not a lot of versions per day (like 1 or 2, almost always <10).

As there are more than 200 records in the DimDate we get exactly the same problem as in the article. Worse than that, if you do a "select … from facttable where DateID=…" for a given DateID which didn't get a histogram bucket, SQL Server computes a probability this integer value will be in the range. As we can theoretically have 100 versions for a day (but usually have 1), the cardinality estimates were 100x too low in these cases.

The solution (or better workaround. Or better dirty hack 😉 ) was to create in ETL filtered statistics for each loaded DateID (approximately 700 statistics for 2 years of data, they are automatically removed with outdated data during housekeeping), this way the above query gets correct estimates,

700 statistics per table, interesting. Did you happen to notice any issues with your update statistics jobs, or did you have to just abandon them altogether?

We update individual crucial statistics in ETL. Everything else is handled by Hallengren scripts on weekends (no problemo AFAIK). The DWH is also not that large, just over 100GB page compressed.

Ah, I see. More of a data mini-mart. 😉