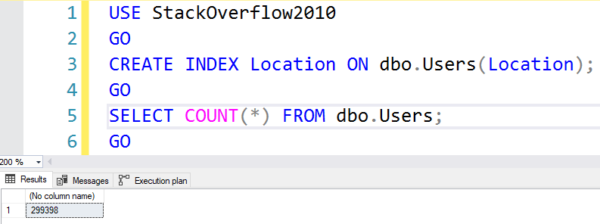

I’ll start with the smallest Stack Overflow 2010 database and set up an index on Location:

|

1 2 3 4 5 6 |

USE StackOverflow2010 GO CREATE INDEX Location ON dbo.Users(Location); GO SELECT COUNT(*) FROM dbo.Users; GO |

There are about 300,000 Users – not a lot, but enough that it will start to give SQL Server some estimation problems:

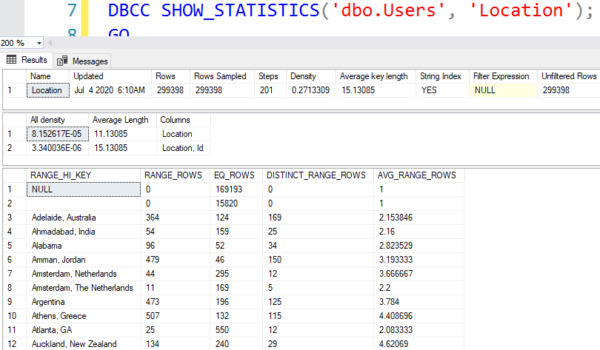

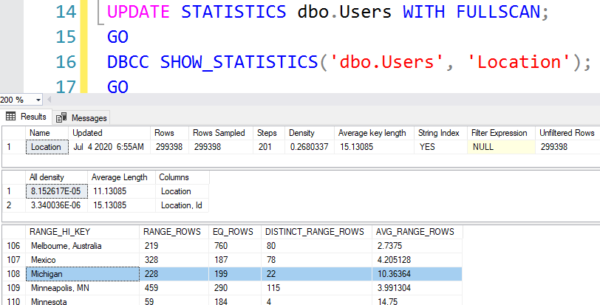

When you create an index, SQL Server automatically creates a statistic with the same name. A statistic is one 8KB page with metadata about the object’s contents. In this case, it’s a list of up to 201 Location values, and the number of people who live in each of those locations. You can see the statistics with DBCC SHOW_STATISTICS, as we talk about in my free class How to Think Like the SQL Server Engine:

This helps SQL Server estimate how many rows will be returned. If the location you’re looking for happens to be one of the outliers that SQL Server chose as large enough to get its own bucket in the histogram,like Ahmadabad, India above, then SQL Server knows a lot about it:

- RANGE_ROWS = 54: this means that in the range of Locations between Adelaide, Australia and Ahmadabad, India, there were 54 rows, excluding Adelaide and Ahmadabad.

- EQ_ROWS = 159: this means that Ahmadabad (the RANGE_HI_KEY) had exactly 159 rows.

- DISTINCT_RANGE_ROWS = 25: this means that of the 54 rows in the RANGE_ROWS group, there were 25 distinct locations.

- AVG_RANGE_ROWS = 2.16: this means that if you pass in a location between Adelaide and Ahmadabad, SQL Server will guess that it has 2.16 rows.

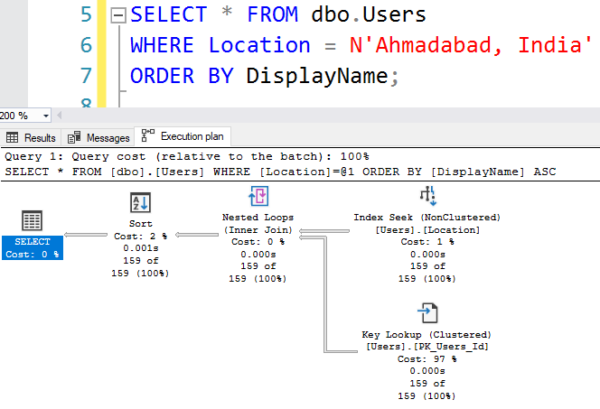

For example, if I query for Ahmadabad, India, which happens to be one of the outliers that got its own bucket, your estimates will be pretty doggone accurate, like this:

|

1 2 3 |

SELECT * FROM dbo.Users WHERE Location = N'Ahmadabad, India' ORDER BY DisplayName; |

Because Ahmadabad is an outlier, and it has its own bucket as shown in the screenshot above, SQL Server estimates exactly 159 rows. (See the EQ_ROWS value in the screenshot above.)

This works really well – BUT, only if you’re one of the outliers. What if you’re a big location, but not big enough of an outlier to get your own statistics bucket? Like, say, Miami, FL?

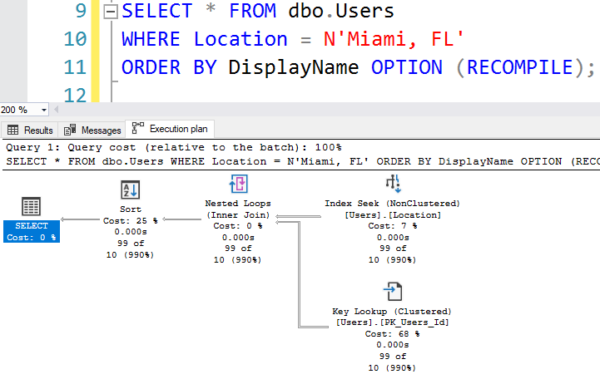

Here, I’ve even used OPTION (RECOMPILE), and yet I’m getting an estimate of just 10 rows when there are actually 99 Floridians? To find out, scroll down in the DBCC SHOW_STATISTICS output until you’re in the Miami area:

There’s your problem. Miami, FL is in between Mexico and Michigan (strangely appropriate), and so the histogram means:

- Miami, FL isn’t there

- Miami, FL is in the bucket between Mexico and Michigan

- AVG_RANGE_ROWS = 10.36 means that if you’re looking for any location between Mexico and Michigan, SQL Server will estimate that the location has 10.36 people in it – and in this case, that’s not so accurate for Miami.

Miami, FL isn’t big enough to be one of the 201 outliers featured in the statistics buckets, but it’s large enough that it has a relatively unusual number of people (and boy, as Richie can tell you, the people in Miami are unusual.)

Will updating statistics fix this?

To find out, we’ll give SQL Server the best possible chance at tackling the problem:

|

1 2 3 4 |

UPDATE STATISTICS dbo.Users WITH FULLSCAN; GO DBCC SHOW_STATISTICS('dbo.Users', 'Location'); GO |

Which still produces the same stats:

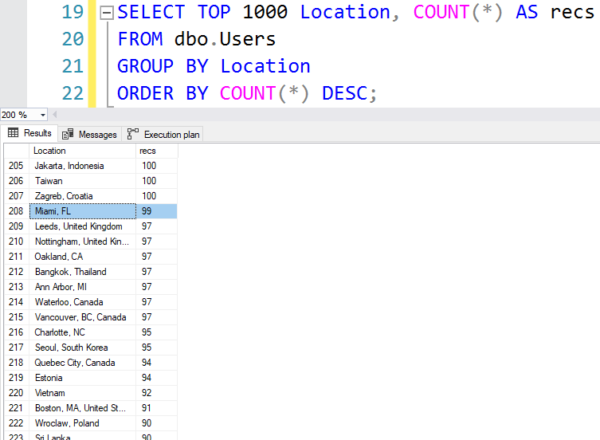

No matter how many times you update stats, there are still just only 201 buckets max, and Miami doesn’t have enough data to be one of those outliers. To find similar locations, do a TOP 1000, and the folks in the 200-1000 range are probably going to be your outliers that get bad estimates:

|

1 2 3 4 |

SELECT TOP 1000 Location, COUNT(*) AS recs FROM dbo.Users GROUP BY Location ORDER BY COUNT(*) DESC; |

And sure enough, down over the 200 range is our Floridian friend:

Note that the top 200 rows by count(*) aren’t necessarily the ones who get their own buckets. Depending on data distribution and who’s next to each other, some of these may be grouped together. Overall, the 200-1000 range are great examples of possible problematic estimates, though.

So what do we do about Miami’s inaccurate estimates?

Well, in this case…nothing. 99 rows vs 10 rows isn’t really that big of a deal, in the grand scheme of things. It will become a big deal if:

- The data size starts to grow – note that I’m using the StackOverflow2010 database in this post, but stay tuned for tomorrow’s post

- We join to other tables – in which case the number of rows in each related table will start to cause a problem

Stay tuned for tomorrow’s post in which we’ll start to explore situations where this would be a bigger issue.

7 Comments. Leave new

Great reading as usual. Thanks Brent!

I really like this kind of posts, understanding from and how SQL is getting the data

[…] Click here to view the original article. […]

Hi Brent, what about having a filtered stats for Miami for example?

That might work for Miami, but check this part of the post out:

“To find similar locations, do a TOP 1000, and the folks in the 200-1000 range are probably going to be your outliers that get bad estimates:”

The problem isn’t just confined to Miami.

Great post, struggling with this couple days ago and dont find adequate solution. Adding new indexes and rewrite query helps me.

[…] the final submit, I talked about how we don’t fetch handsome estimates because SQL Server’s statistics very top dangle as a lot as 201 buckets within the histogram. It […]