I get this question a lot, so let’s set up an example with the Stack Overflow database. My query’s goal is to:

- Find the top 5 Locations

- List the users who live in those top 5 Locations, alphabetized by their DisplayName

There are a LOT of ways I could write this query, but for the purpose of this post, I’m only going to contrast common table expressions (CTEs) versus temp tables:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 |

/* Set up an index to make our query easier: */ CREATE INDEX IX_Location ON dbo.Users(Location); /* Common Table Expression, CTE: */ WITH TopLocations AS (SELECT TOP 5 Location FROM dbo.Users GROUP BY Location ORDER BY COUNT(*) DESC ) SELECT u.DisplayName, u.Location, u.WebsiteUrl, u.Reputation, u.Id FROM TopLocations tl INNER JOIN dbo.Users u ON tl.Location = u.Location ORDER BY u.DisplayName; GO /* Temp table version: */ CREATE TABLE #TopLocations (Location NVARCHAR(100)); INSERT INTO #TopLocations (Location) SELECT TOP 5 Location FROM dbo.Users GROUP BY Location ORDER BY COUNT(*) DESC; SELECT u.DisplayName, u.Location, u.WebsiteUrl, u.Reputation, u.Id FROM #TopLocations tl INNER JOIN dbo.Users u ON tl.Location = u.Location ORDER BY u.DisplayName; GO |

We’re talking about a relatively small table here – less than 1GB in the Stack Overflow 2013 (50GB) version – so both versions of the query perform fairly quickly.

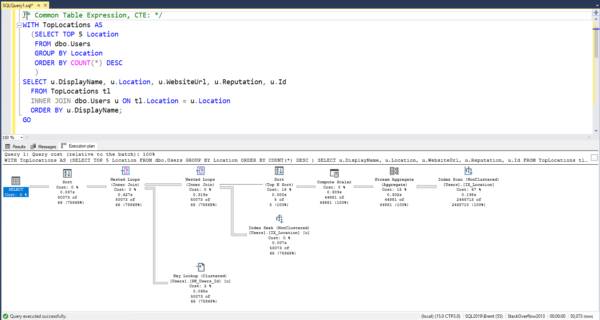

Here’s the execution plan for the CTE:

The CTE does both operations (finding the top locations, and finding the users in that location) in a single statement. That has pros and cons:

- Good: SQL Server doesn’t necessarily have to materialize the top 5 locations to disk

- Good: it accurately estimated that 5 locations would come out of the CTE

- Bad: it didn’t know what those 5 locations would be, so…

- Bad: it wildly under-estimated how many Users would be found in those locations (est: 66 rows, actual: 50,073 rows)

- Bad: it chose to do index seeks + key lookups for a total of 158,916 reads

Here’s the execution plan for the temp table:

The temp table version splits the work up into two phases, which means that by the time the second operation happens, SQL Server has the benefit of knowing what happened in the first phase. That also has pros and cons:

- Good: SQL Server accurately estimated that 5 locations would come out of the temp table

- Great: it even estimated what those 5 locations would be, so

- Great: it guessed much more accurately about how many Users lived in those locations (est: 24,657 rows, actual: 50,073 rows)

- Great: it chose to do a table scan, leading to less logical reads (just 49,900 total for both operations)

So what’s better, and when?

CTEs are usually better when:

- SQL Server can do a good job of estimating how many rows will come out of it, and the contents of what those rows will be, or

- When what comes out of the CTE doesn’t really influence the behavior of the rest of the query, or

- When you’re not sure what portions of the CTE’s data will actually be necessary for the rest of the query (because SQL Server can figure out what parts to execute, and what parts to simply ignore)

Temp tables are usually better when:

- You have to refer to the output multiple times, or

- When you need to pass data between stored procedures, or

- When you need to break a query up into phases to isolate unpredictable components that dramatically affect the behavior of the rest of the query

I’d suggest starting with CTEs because they’re easy to write and to read. If you hit a performance wall, try ripping out a CTE and writing it to a temp table, then joining to the temp table.

To learn more and watch me build queries where different solutions are more effective, check out the CTEs, Temp Tables, and APPLY module of Mastering Query Tuning.

37 Comments. Leave new

Ran into a situation where the actual database was completely locked down – could not make ANY alterations to database – including adding indexes. Searches were running poorly. Solution was to pull data into temp tables and add indexes to them – it was actually faster to do that then wait on original query.

Brent, please shut off that camera you apparently have put somewhere behind me. ;P

I just battled with that two days ago.

Spent an entire day reworking a colleagues horrible 220 Lines of Cursor based stored procedure into a 10 Layer deep CTE. Thing of beauty!

Then I tested it and realized that it was actually slower than the 17 minutes the cursor took to complete its work and almost chewed through my keyboard.

Noticed residual IO warnings, Key Lookups and operators that had wild discrepancies between row estimates, actual and rows read. Changed the first two or three CTEs into temporary tables, seeing as they were referenced multiple times, and voila the query completed in just over a minute.

(And then I was told that they need it in form of a TVF, not a stored procedure, which means I can’t use temporary tables and there I was chewing at my keyboard again because they decided to go with the cursor (insert wilhelm scream))

Are there any other warning signs in the execution plans we should look for when using only CTEs?

HAHAHA, camera. The big warning sign to watch for is estimated vs actual rows coming out of the CTE’s operators – when it’s way off (greater than, say, 10x off), then you’re probably going to get better performance by refactoring it into a temp table.

Great practical example!

Excellent tip. Thank you!

What about memory grants please? I find my server way over estimating how much memory to allocate and a single query grabs it all but then again the sql is shocking but when I rewrite into either temp tables or CTEs or even local working temp tables to not hit tempdb the CTEs still seem to have huge memory requests. Greatly appreciate your thoughts as it’s one thing you didn’t highlight in this example and I’d love to know

Kevin – that’s kinda beyond the scope of this post, unfortunately. We’ve got a ton of other posts here about grants though! Hit the search up top and search for those.

I agree with your conclusions, and appreciate how clearly you explained them.

At my previous job, I inherited a lot of large stored procedures that used a dozen or more temp tables each. I refactored them to use all CTEs instead, and they mostly performed better. But when I then strategically used temp tables to break up the huge nest of CTEs, they got even better. So instead of a dozen temp tables, we usually ended up with to two or three. The .Net devs that wrote the original code before I was hired (I was the company’s first DBA) had never heard of CTEs and thought they were magic bullets as they watched me go through the first refactoring phase, but were perplexed that they turned out not to always be better. As a SQL expert, I explained this was because like with most database-related issues, “it depends”. 🙂

You have to do some analysis to see which parts need to be CTEs and which are better as temp tables. Your guidelines on when to use each match up well with what I discovered after a lot of experimenting on all that code.

Great post Brent, thanks for the clarity and simplicity of the examples. I discovered CTEs a few years ago thanks to a friend that teach me how to used it. I used to think that they were a lot easier than dealing with Temp tables and tried to use as much as possible when the situation allow it, however with time also learned (Like Daniel Lopez post clearly shows) that not always they were the best option. At least with your post we have some kind of idea before jumping in the CTE bandwagon.

In a contract gig last summer, I was tasked with doing whatever I could to cut the time nightly ETL processes took. One stored procedure written with CTEs that were joined to permanent tables was taking over 90 minutes to run. Changing the CTEs to temp tables with indexes on the join fields reduced the time to around 15 seconds. Astounded, I tried this technique with several other CTE-heavy sprocs, but either only got minimal gains or even longer running times. I did not have time to dive deep to find precisely why that first example showed such a dramatic change, but it would appear that the fact the ability to use indexes was the key. For me, that’s still the number one reason I will choose temp tables over CTEs when joins are required.

Nice article, although I can’t agree with the conclusion. You had 3 bad points for the CTE with no downsides for the temp table. Readability for temp tables isn’t that bad, at least not enough to warrant a performance hit, or the extra time it takes to rip it out and re-code. I used to love CTEs, however, it seems they hardly ever offer a performance increase over a temp table; as time goes on I find myself no longer justifying using them.

Such a succinct overview has been a long time coming. I appreciate it!

On both sides of the aisle, I’ve seen query writers run away with CTEs and cause chaos because they assume that the result sets will be manifested, and I’ve seen myself bring a SAP HANA server with half a terabyte of RAM to it knees with a simple CTE because I assumed that CTEs *weren’t* manifested.

[…] Brent Ozar has some advice on when to use common table expressions versus temporary tables: […]

Thanks for the post Brent.

That’s dissappointing. I love CTEs. They make the code so clean. FSharp.Data.SqlClient lib won’t even let you use temp tables since they are considered “bad practice”. But apparently they can be better than the alteranitive.

I was using a CTE the other day and it ran the whole CTE before running the last query which parsed down the query quite a bit with a predicate. But I thought the CTE was supposed to respect the predicate. But it ran the whole CTE query without parsing it down with the predicate first. I guess I was thinking the SQL parser was smarter than that. Now I know it isn’t and I’ll be more careful in the future. One of the things about SQL sometimes it is really smart about knowing what you are asking for and other times it is quite dumb.

Another note. My manager (we are all C# devs, not DBAs) was saying that it was faster to query the database multiple times with just the Ids of each table with a simple query rather than doing joins. That doesn’t make any sense to me. Wouldn’t making a single query with joins be faster overall?

Jon, one tip with CTEs is to examine the WHERE clauses in the entire statement and ensure each predicate is moved as early as possible. As you found, the optimizer isn’t always smart enough to determine where that should be. Filtering rows in an early CTE can be a big win over leaving it in the main SELECT.

I try to avoid CTEs and Temp Tables if the performance of a query is acceptable but then whenever I have tried a CTE I have never found them to be any better performance-wise compared to a sub-query and generally prefer the syntax of sub-queries – is there often a difference performance-wise with these two approaches?

Temp tables on the other hand mostly seem to have a very noticeable impact, especially when linked servers are involved. Sometimes I even add a primary key to them for even bigger gains.

@Robin Wilson,

From my understanding all a CTE is is sugar syntax for a sub-query, i.e., they are the same thing.

Ok thanks Jon. I did wonder if this was the case.

My thing with temp tables is when their usage gets “Institutionalized”. When you start opening queries and in all of them you see temp tables, you know there is a problem.

I ran into a situation (my first week on the job) almost 2 years ago, with a report that took 5 hours to run (best case scenario). As soon as I opened the SP it was clear to me that the issue were the 5 “huge” temp tables. Gathering a little bit of info… millions of rows on a couple of them, at least 10 SP running at the same time (I assumed they had the same “issue”), all of them fighting for space, and of course… all of them waiting.

By just removing the temp tables (4 of them where really misused) report time went down to 15 mins… and after a couple of touches, 7 minutes has been my worst case scenario.

I don’t wanna put all the blame to the temp tables… we all know that is not true; but that was the solution on this case.

So how would you handle the following (real) scenario without CTEs such that you could join the results in several stored procedures.

Simplified:

* You have an Organisation table (Id int PK, Name nvarchar(255) not null, ParentOrganisationId int not null FK to self)

* Users can be associated with an organisation as a way of assigning what data they can access.

* imagine you have the following organisation table structure:

_Org A

| |_Org A – sub 1

| | |_Org A – sub 1 – sub again

| |_Org A – sub 2

|

_Org B

The ruling is if you are associated with an org you can access it and any orgs that are under it in the tree.

Thus I have 2 functions that use a recursive CTE, GetAncestors and GetDescendants which take an org ID as the single parameter. I can then get all the orgs that come above it in the tree (and itself) or all those that come below it in the tree (and itself)

You could generate and store this data but then every time the tree changes by an org having its parent changed you’d have to regenerate the stored data for all users that had the org being changed.

Whoops mistake – the ParentOrganisationId *is* nullable

As much as I’d like to take on code challenges for free in the comments… 😉 Your best bet will be posting code challenges over at https://Dba.stackexchange.com or https://stackoverflow.com.

As far as I know the functions run completely fine. But the sentiment of most commentors seems to me extremist against CTEs. In my view the answer to everything in SQL is “it depends”

Oh also the functions simply return 2 columns, both ints.

Typically the rows returns given the input will be 10 rows or less.

peter I would say it depends on run time vs data change time. I’ve run a similar scenario where i had a trigger instantiate the relationship into a table since that relationship changed only a few times per day/week. While access to the result was heavy. So i took the 2sec recalculate runtime for relationship 3 times a day over having 200ms run time 100K times a day.

Nice post and the best practices on when to use each option was really helpful. I typically lean toward indexed temp tables for scenarios involving large data sets as input.

Just my opinion, but a CTE is effectively a temp table you can’t index. I’ve never heard it referred to as an equivalent for a sub-query and I’m not sure that’s an accurate description.

CTEs are definitely just like sub-queries, or to be more accurate they are on-demand views. They are only ran when referenced in a query and run each time it is referenced.

So what you’re saying is “it depends.” Imagine that.

I try to teach people that CTEs, temp tables, scalar functions and any other of a myriad of SQL Server features are just tools in a large arsenal. Much like a knife, they can be harmful if used improperly but when a chef needs to cut a tomato he doesn’t use a spoon.

CTE read 3 records from 2.5 million records and then removed characters from result. 45 seconds to do the three records. Same query into a #table to get same result, faster than you could blink. I think # tables are a lot faster.

Nearly every problem within our organization, related to the Optimizer don’t really give a plan one expected, I advice to use temp #tables. This is not only valid for CTEs, but also for JOINs and (Inline) Table Valued Functions.

And I don’t remember once case, where the results have been worse.

I don’t say you should use them without experiencing problems, because most of the time, the Optimizer does a decent job in running the Queries.

What I was **really** hope for is a Query Hint like WITH(TEMPTABLE), so that one can easly integrate this, without rewritting all of the Query, and without converting Views to Stored Procedures or anything like that.

Just use the Hint, and you are done. This should even be ORM-Mapper friendly.

CTEs can be problems for a variety of reasons. I was once handed a report that was timing out after running for half an hour, when it was only meant to return less than 100 rows of data. Turned out that the report’s stored procedure was referencing a view that referenced another view that had two CTEs in it that referenced each other. That’s bad enough in itself (essentially 4 layers of views), but because of how the CTEs were written, it was forcing a large cartesian join before it could apply any filters at all to the data. I reversed the sequence of the CTEs, with a very minor logic change to make that work, and the report went from over 30 minutes down to 12 milliseconds average. Not exactly what this article is about, since you can’t use temp tables in views, but it’s a caveat on CTEs.

I have always noticed on the servers that I run queries with CTEs that pull 100K records hang and/or fail. Is there any reason for that?

Start here: https://www.brentozar.com/archive/2009/03/getting-help-with-a-slow-query/

This post very helpful. Thank a lot.

CTE Tables were not created for that purpose. CTE tables can be executed as a loop, without using stored procedures directly in the sql query. The way you are using the CTE exists from the very beginning, with the SQL subqueries (SELECT * FROM YOUR_TABLE) AS CTE.

Anyway, in both cases, the performance of the CTE tables use not to be the best one.

Agree with conclusion – start with CTEs, test to find the problem ones (not necessarily all), rewrite them as temp tables.

Also, as someone noted, CTEs can often be improved by filtering as early as possible in the CTE chain. This will be even more important for populating temp tables to avoid building massive ones. I now use SELECT INTO rather than CREATE INSERT as seems to perform better.

For updating, unless you are using the single statement MERGE after the CTE chain, you have no choice but use temp tables for an ‘upsert’ as two separate statements.