Folks sometimes ask me, “When a table has more indexes, and SQL Server has more decisions to make, does that slow down execution plan generation?”

Well, maybe, but the table design choice that really screws you on compilation time is partitioning. If you choose to partition your tables, even tiny simple queries can cause dramatically higher CPU times. Even worse, as the famous philosopher once said, “Mo partitions, mo problems.”

We’ll start with any Stack Overflow database, create a numbers table, and then dynamically build a partition function that partitions our data by day:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 |

USE StackOverflow; GO /* Create date partition function by day since Stack Overflow's origin, modified from Microsoft Books Online: https://docs.microsoft.com/en-us/sql/t-sql/statements/create-partition-function-transact-sql?view=sql-server-ver15#BKMK_examples DROP PARTITION SCHEME [DatePartitionScheme]; DROP PARTITION FUNCTION [DatePartitionFunction]; */ DECLARE @DatePartitionFunction nvarchar(max) = N'CREATE PARTITION FUNCTION DatePartitionFunction (datetime) AS RANGE RIGHT FOR VALUES ('; DECLARE @i datetime = '2008-06-01'; WHILE @i <= GETDATE() BEGIN SET @DatePartitionFunction += '''' + CAST(@i as nvarchar(20)) + '''' + N', '; SET @i = DATEADD(DAY, 1, @i); END SET @DatePartitionFunction += '''' + CAST(@i as nvarchar(20))+ '''' + N');'; EXEC sp_executesql @DatePartitionFunction; GO /* Create matching partition scheme, but put everything in Primary: */ CREATE PARTITION SCHEME DatePartitionScheme AS PARTITION DatePartitionFunction ALL TO ( [PRIMARY] ); GO |

Then we’ll build a partitioned copy of the Users table, partitioning them by CreationDates:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 |

DROP TABLE IF EXISTS dbo.Users_partitioned; GO CREATE TABLE [dbo].[Users_partitioned]( [Id] [int] NOT NULL, [AboutMe] [nvarchar](max) NULL, [Age] [int] NULL, [CreationDate] [datetime] NOT NULL, [DisplayName] [nvarchar](40) NOT NULL, [DownVotes] [int] NOT NULL, [EmailHash] [nvarchar](40) NULL, [LastAccessDate] [datetime] NOT NULL, [Location] [nvarchar](100) NULL, [Reputation] [int] NOT NULL, [UpVotes] [int] NOT NULL, [Views] [int] NOT NULL, [WebsiteUrl] [nvarchar](200) NULL, [AccountId] [int] NULL ) ON [PRIMARY]; GO CREATE CLUSTERED INDEX CreationDate_Id ON dbo.Users_partitioned (Id) ON DatePartitionScheme(CreationDate); GO INSERT INTO dbo.Users_partitioned (Id, AboutMe, Age, CreationDate, DisplayName, DownVotes, EmailHash, LastAccessDate, Location, Reputation, UpVotes, Views, WebsiteUrl, AccountId) SELECT Id, AboutMe, Age, CreationDate, DisplayName, DownVotes, EmailHash, LastAccessDate, Location, Reputation, UpVotes, Views, WebsiteUrl, AccountId FROM dbo.Users; GO |

Let’s compare partitioned and non-partitioned performance.

I’ll create a non-partitioned index on both the Users and Users_partitioned tables. Note that even if I don’t specify partitioning on the Users_partitioned index, it still ends up partitioned by default, which is kinda awesome:

|

1 2 |

CREATE INDEX DisplayName ON dbo.Users(DisplayName); CREATE INDEX DisplayName ON dbo.Users_partitioned(DisplayName); |

Now we’ll run an exceedingly simple query that only returns one row:

|

1 2 3 4 5 |

SET STATISTICS TIME, IO ON; SELECT * FROM dbo.Users WHERE DisplayName = N'Brent Ozar'; GO SELECT * FROM dbo.Users_partitioned WHERE DisplayName = N'Brent Ozar'; GO |

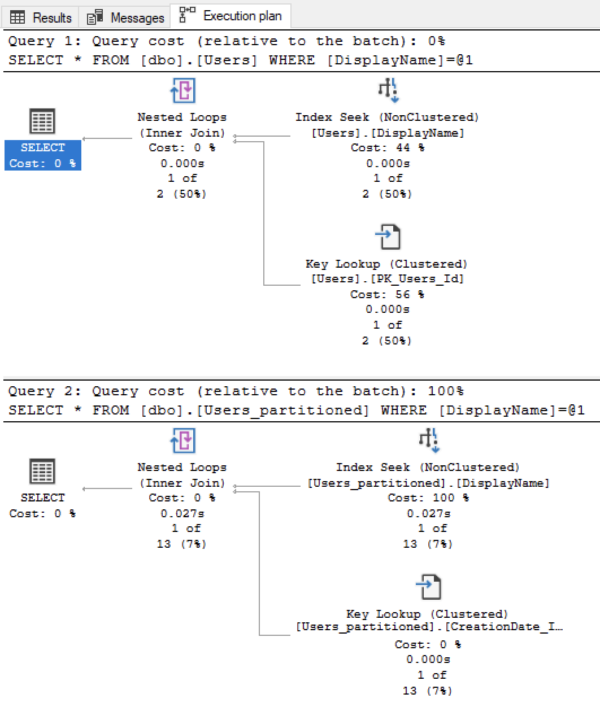

At first glance, the plans look the same – but notice how the non-partitioned table is 0% of the cost, and the partitioned table is 100% of the cost:

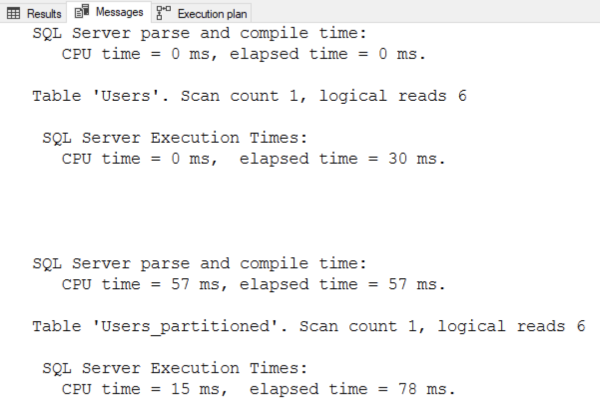

That’s because the non-partitioned estimated cost was way less than 0.001, and the partitioned query’s estimated cost is over 15. What’s worse, the compilation time, execution time, and logical reads are totally different – the top one is the non-partitioned query, and the bottom is the partitioned one, both cleaned up for readability:

Hubba hubba. Just compiling the partitioned table’s plan took 27ms of CPU time. I know what you’re thinking: “Brent, who cares about 27ms of CPU time?” Well, remember, this is an overly simple query! In real-life examples, it’s not unusual to see 250+ milliseconds of CPU time spent just compiling the plan – which means you can only compile 4 queries per second, per CPU core. That’s when plan cache pollution due to unparameterized queries really screws you.

I’m writing this particular post because I had a client who chose to partition every table in their data warehouse, regardless of size, and used exactly the same partition granularity on all sizes of tables to keep their dynamic ETL queries simple. Unfortunately, even querying simple configuration tables was taking 250+ milliseconds of CPU time just to compile a plan.

Fine – but what about non-partitioned indexes?

I’ll drop that partitioned index and create a non-partitioned index on the Users_partitioned tables – note that I have to specify ON PRIMARY as the filegroup for the partitioned table, or else any nonclustered index will by default automatically be partitioned as well – then try the queries again:

|

1 2 |

CREATE INDEX DisplayName ON dbo.Users(DisplayName); CREATE INDEX DisplayName ON dbo.Users_partitioned(DisplayName) ON [PRIMARY]; |

Now we’ll run an exceedingly simple query that only returns one row:

|

1 2 3 4 5 |

SET STATISTICS TIME, IO ON; SELECT * FROM dbo.Users WHERE DisplayName = N'Brent Ozar'; GO SELECT * FROM dbo.Users_partitioned WHERE DisplayName = N'Brent Ozar'; GO |

Now the estimated costs are neck and neck:

But that’s just an estimate – the only thing neck and neck here are the logical reads:

Designing and executing a query plan takes longer when any of the objects involved are partitioned.

This overhead isn’t large as long as you’re comparing it to the overhead of big data warehouse queries where partitioning data can reduce reads. But if you’re comparing it to smaller objects – say, 100GB or smaller rowstore indexes – that are queried more frequently, with more varying queries that require building new execution plans – then the overhead of partitioning starts to add up. Mo partitioned objects, with mo partitions in each object, mo problems.

Partitioning is one of those features where I never hear people say, “Wow, every which way I turn, partitioning has just made performance better!” Rather, it’s a feature where people keep saying, “I had no idea partitioning was going to cause me problems over THERE, too.”

In my Mastering Index Tuning class, we spend just enough time on partitioning to explain where it’s a good fit – but much more time on indexing techniques that are more appropriate for 99.9% of workloads out there.

12 Comments. Leave new

Thanks Brent – Very interesting information.

We are using partitioning in a different way. We partition some of our staging tables (and even fact/dimension tables) by source system, where the source schemas are identical but the data is different (think different databases using the same application front-end). So our partitions are 1, 2, 3, 4, 5, etc. where each value is a different source.

We are partitioning the tables this way to allow us to reduce the number of objects, but still manage the data for each source separately – so we can truncate or recalculate a specific partition and reload or reprocess it without impacting the other partitions.

Based on this post, we may want to reevaluate this approach… as much as we may not want to. I guess we’ll need to do some testing. Thanks!

Interesting read. So would you deem partitioning appropriate for a larger data set (1TB+) in which the data is reported on by month basis? Would creating month based partitions be appropriate?

Robert – generally speaking, no, and I explain why with examples in the Mastering Index Tuning class.

Great article! For performance improvement they are less than stellar since column store indexes came along but, you still can’t beat them for moving millions of rows of data in and out without downtime. Nightly maintenance loves partitions.

Beware when creating non-partition aligned indexes. They may be faster, but you won’t be able to drop a partition without first dropping the index and then creating it again. Been there, done that, have the T-shirt.

The main problem with this example (and the real world) is, that you should ALWAYS specify the partition column in the WHERE or JOIN condition, if your table is partitioned, otherwise the SQL Server does internal a SELECT onto every partition and combine the results by an UNION ALL. And particularly for JOINs it is helpful, if you could store the min / max value of the partition column in a @variable and add it to the JOIN condition too (otherwise the partition elimination does not work – at least in SQL 2016, but I can’t remember that there was something new in 2017/2020).

If you would have had added an and creation_date = @creation_date to your query it would have had read only a single partition and the compile should be faster too. Of course you would need to know the creation date in this case, so this is a good example, why the creation date may be not the best partition column, because it is more or less random and has usually only informational character (on the other side the order_date or even the creation date on the posts table (when you want to see the posts of the last x months) may be much better canditat).

Of course non-partition aligned indexes were an option too or you could have partitioned it by the display name (26 partitions from A to Z), but as Peter Nauta mentioned, it has its own drawbacks (no partition switching / truncate partition)

Thomas – I have so many problems with this.

First, how do you know a user’s CreationDate in advance of the query?

Second, I *did* do a non-partition-aligned index, so I’m guessing you didn’t read the whole post.

I fully agree with you, usually you do / can NOT know the Creation Date. So this is (for this type of query) a bad partition column and shouldn’t be used when such queries happens often (on the other hand it usually makes no sense to partion a user table, because it is often relativ small).

This (and many other stumbling blocks as the loss of uniquiness on the user_id) makes a good partitioning hard for unexperienced developers (and helps you earning money by bringing your experience / teaching :-)).

PS: I confess, that I didn’t read fully the non-aligned-block of your mail, because my webmailer truncates sometimes some mails 🙁

Thanks, very interesting. But why do we need Numbers table, created at beginning?

Hahaha, great question! I didn’t need it after all – I have another part to that demo that I use in a class, and I didn’t include that in the post, so I should rip that part out.

I was running into this exact same issue this week in our DWH environment and I could remember you telling this drawback in one of the classes I followed when you covered partitioning.

So I did a quick test: A select * FROM with a where filter on the partitioned column. It took less than 2 seconds to generate an estimated plan when it had 1 partition and 45 minutes (!!) with all the partitions in place (1600).

After searching online I was happy to find this article that confirmed my finding. Thanks a lot Brent!

Glad I could help!