In my last post, we started with a memberships table, and each membership had start & end dates. I’m going to create the table and populate it with everyone having an active membership – their StartDate is the same as their Stack Overflow account creation date, and their EndDate is around a year or two from now:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

DropIndexes; GO USE StackOverflow; GO DROP TABLE IF EXISTS dbo.UsersMemberships; CREATE TABLE dbo.UsersMemberships (Id INT IDENTITY(1,1) PRIMARY KEY CLUSTERED, UserId INT NOT NULL, StartDate DATETIME NOT NULL, EndDate DATETIME NOT NULL, CancelledEarlyDate DATETIME NULL); GO INSERT INTO dbo.UsersMemberships(UserId, StartDate, EndDate) SELECT Id, CreationDate, DATEFROMPARTS(YEAR(GETDATE()) + 2,MONTH(LastAccessDate), DAY(LastAccessDate)) FROM dbo.Users WHERE NOT(MONTH(LastAccessDate) = 2 AND DAY(LastAccessDate) > 28); /* Avoid Feb 29 problems */ GO CREATE INDEX IX_StartDate_EndDate ON dbo.UsersMemberships(StartDate, EndDate) INCLUDE (CancelledEarlyDate); CREATE INDEX IX_EndDate_StartDate ON dbo.UsersMemberships(EndDate, StartDate) INCLUDE (CancelledEarlyDate); GO |

If I run either of these queries to check how many active users we have:

|

1 2 3 4 5 6 7 8 9 |

SELECT Id, StartDate, EndDate FROM dbo.UsersMemberships WHERE GETDATE() BETWEEN StartDate AND EndDate; GO SELECT Id, StartDate, EndDate FROM dbo.UsersMemberships WHERE StartDate <= GETDATE() AND EndDate >= GETDATE(); GO |

The actual plans show that SQL Server does a great job of accurately estimating that all 9 million of the rows in the table will match:

But now let’s introduce that CancelledEarlyDate.

I’m going to modify my reporting query to include a COALESCE, taking the first non-null column between CancelledEarlyDate and EndDate. If the user cancelled their membership early, we’ll use that cancellation date – otherwise, use EndDate. This is similar to the ISNULL function, but COALESCE lets me pass in multiple values rather than just two. (ISNULL performs the same in this case.)

|

1 2 3 4 5 6 7 8 9 |

SELECT Id, StartDate, EndDate FROM dbo.UsersMemberships WHERE GETDATE() BETWEEN StartDate AND COALESCE(CancelledEarlyDate, EndDate); GO SELECT Id, StartDate, EndDate FROM dbo.UsersMemberships WHERE StartDate <= GETDATE() AND COALESCE(CancelledEarlyDate, EndDate) >= GETDATE(); GO |

Note that I haven’t updated anyone’s CancelledEarlyDate yet – they’re all still null. SQL Server’s actual execution plans do a beautiful job of estimating that all 9M rows will still match our search. Note that we’re getting index seeks here (without key lookups) because I included the CancelledEarlyDate in my indexes this time around.

And if I update 50% of the rows to have a CancelledEarlyDate of yesterday:

|

1 2 3 4 |

UPDATE dbo.UsersMemberships SET CancelledEarlyDate = DATEADD(dd, -1, GETDATE()) WHERE Id % 2 = 0; GO |

Now our estimates go off the rails.

Then the actual plans show that SQL Server believes all of the rows will match our filter – even though only half of them do. He estimates 9M rows will come back, but in reality, only 4.5M do:

In the last post, I had to introduce a more real-world data distribution to show how estimation went off the rails as your population started to shift. Here, I don’t – even a very simple population causes our query plans to go wildly wrong.

Now let’s take a breather here for a second because I can almost hear the comments rolling in.

First, you might be thinking that this particular illustration isn’t really all that bad: SQL Server uses the right access method (an index seek rather than a table scan), and it shouldn’t have approached this problem in any other way. However, in the real world, this incorrect row estimation has a huge impact on things like memory grants, how SQL Server approaches other tables in the query, and the order of operations in a query.

Second, you might be typing, “Brent, how might different indexing strategies fix this problem?” That’s why I use the free Stack Overflow database for my demos, and why I give you all of the scripts. You can go test out different approaches for yourself to learn.

Back to the stuff I wanna teach you here. I don’t think it’s fair for us to ask SQL Server to estimate this particular part of the query on the fly:

|

1 2 |

WHERE StartDate <= GETDATE() AND COALESCE(CancelledEarlyDate, EndDate) >= GETDATE(); |

That’s just too hard. Sure, SQL Server may have statistics on each of those columns individually, but it doesn’t understand how those columns relate to each other on particular rows. He just has a rough idea of each one’s date distribution. In my simple scenario, it might be theoretically doable, but in real-world data distributions with memberships & policies spread across decades, it’d be really tough. You would want whole separate statistics based on the relationships between columns (and no, multi-column stats aren’t anywhere near that powerful.)

We can help by combining the two columns.

While SQL Server isn’t able to do this itself automatically, we can build our own computed column that performs the calculation for us:

|

1 2 3 4 |

ALTER TABLE dbo.UsersMemberships ADD CoalescedEndDates AS COALESCE(CancelledEarlyDate, EndDate); GO |

And suddenly – with no other changes, like no indexes or no query modifications – the actual plan’s estimates are way better:

That’s because when we add a computed column, SQL Server automatically adds a statistic on that column, and it can use that statistic when estimating rows.

It’s still not perfect because the plan has a variety of issues:

- We’re doing an index scan here, not a seek

- The scan reads all 9M rows of the index by doing 37,591 logical reads

- We’re calculating the COALESCE every row, every time we do the query, burning about 1 second of CPU time

In this case, indexing the computed column solves all 3 problems.

I’ll create two indexes – one that leads with StartDate, and one that leads with the computed column – because SQL Server may choose different ones depending on our data distribution:

|

1 2 3 4 5 6 |

CREATE INDEX IX_StartDate_CoalescedEndDates_Includes ON dbo.UsersMemberships(StartDate, CoalescedEndDates) INCLUDE (EndDate); CREATE INDEX IX_CoalescedEndDates_StartDate_Includes ON dbo.UsersMemberships(CoalescedEndDates, StartDate) INCLUDE (EndDate); GO |

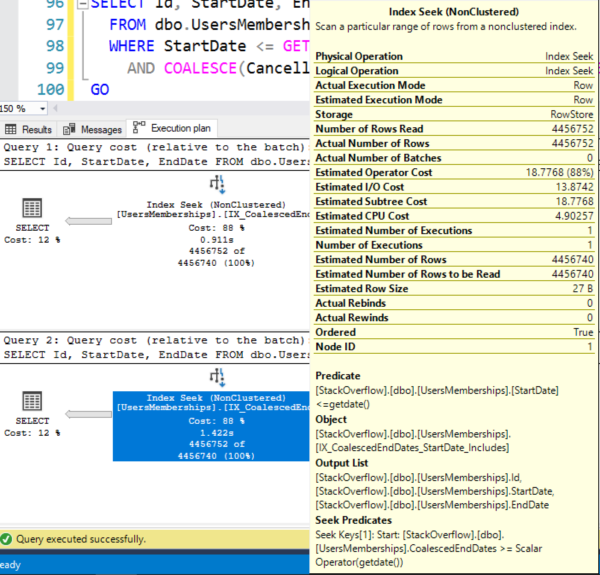

The new actual plans of our reporting queries show that we fixed ’em:

We get an index seek, we only read the rows we need to output, and we don’t have to compute the coalesced dates on every row. Yay!

But only in this case.

All of our reporting queries better be exactly the same. Remember how I said earlier that I’m using COALESCE because it takes multiple arguments, and how ISNULL performed the same in that query when I was discussing it? Now, not so much. If I try ISNULL here, SQL Server ignores the computed columns altogether.

And even if they’re all the same, I’m still back to the same problems I had in the last post: as our data distribution becomes more real-world, with a mix of expired, current, and future memberships, I’m going to have estimation problems.

For more about the computed column technique, including when to persist them, when not to, and how to level up with indexed views, check out the Mastering Index Tuning class module on filtered indexes, indexed views, and computed columns.

8 Comments. Leave new

When you wrote the “columns relate to each other” I thought “The columns in a table refer to the primary key, the whole primary key, and nothing but the primary key, so help me Codd”. But heck, you were presenting a real world example, so I have faith: Codd will forgive you :).

😉

Is it to much to hope that I could talk to the developer and convince them to remodel this table to have [EndDate] and [OriginalEndDate]? Hopefully they’re talking to me as this is developed instead of me finding out about it two days after deployment when performance is tanking.

(This is assuming that the early end date (if it exists) is of greater importance to our business than the original end date.)

Andrew K – it’s definitely possible, but start with the recommendation from the original blog post yesterday: write up your experiment, prove that the performance is better, and post it as a Github gist (or a blog post on your own site.) I’ve already had a bunch of people email me privately saying, “Wow, I thought I knew how to fix that, but then I ran an experiment and I couldn’t fix it either.”

And here I was thinking the advice was to prefer ISNULL over COALESCE since Coalesce ended up working like a CASE statement behind the scenes.

If I’m still using SQL 2014 in a lot of cases (with highest possible compatibility level applied), should I just switch to COALESCE all of the time then?

I even have some IsNull(A, IsNull(B, C)) checks in my code as the advice from years ago was that Coalesce was not well implemented… It’d be nice to get rid of them 🙂

Ian – your best bet is to evaluate query plans like I do in these posts, comparing & contrasting the plans and the STATISTICS IO results. Even when there’s a flat global rule (“always do X”), you’ll find that it tends to morph over time with different versions (and even cumulative updates) of SQL Server.

@Brent, Have you considered the common real case where enddate is unclosed (is null)

Jim – yes, but I didn’t blog about it in this post. (Only so many things I can cover per post, heh.)