Normally when you run a DELETE statement in a database, the data’s gone.

With the soft delete design pattern, you add a bit column like IsDeleted, IsActive, or IsArchived to the table, and instead of deleting rows, you flip the bit column. This can buy you a few advantages:

- Easier/faster undeletes

- History tracking (keeping deleted rows around for auditing purposes, although the method I’m going to show here doesn’t do anything fancy like track who did the delete)

- Easier reconciliation during disaster recovery failovers (refer to the Senior DBA class module on recovering from failovers for more info)

- Lower workload for Availability Groups secondaries (neat scenario from Aaron Bertrand)

I’ll illustrate it by implementing soft deletes on the Stack Overflow Users table. (Any version/size will do, but if you want to replicate my exact query metrics, I’m using the 2018-06 version.)

First, we need to add the IsDeleted column:

|

1 2 3 |

ALTER TABLE dbo.Users ADD IsDeleted BIT NOT NULL DEFAULT 0; GO |

No matter how big the Users table is, as long as there’s no one else in the table at the moment, that change takes effect instantly – no matter how large the table is! Hot dog, I really like SQL Server. Makes these kinds of things easy – it’s implemented as a metadata-only change.

Stop deletes with a trigger.

We can use an “instead of” trigger that will fire when someone tries to delete a row, and we’ll do something different. I’m using the “deleted” virtual table to grab the rows that are supposed to be deleted.

|

1 2 3 4 5 6 7 8 9 |

CREATE OR ALTER TRIGGER SoftDelete_Users ON dbo.Users INSTEAD OF DELETE AS BEGIN SET NOCOUNT ON; UPDATE dbo.Users SET IsDeleted = 1 WHERE Id IN (SELECT Id FROM deleted); END GO |

When someone tries to delete a row, we can see that it wasn’t actually deleted:

The slick part of this approach is that it doesn’t require changing your existing DELETE code – it just thinks it’s successfully deleting rows. However, we still have a pretty big problem: apps are still querying the table, and they’re not expecting to see deleted rows.

Filtering selects is a little harder.

Ideally, if you can change the queries, you add “WHERE IsDeleted = 0” everywhere. The hard part there is changing every query, obviously, so a workaround is to rename the Users table to something new, and then put a view behind in its place:

|

1 2 3 4 5 6 7 |

EXEC sp_rename 'dbo.Users', 'Users_tbl'; GO CREATE OR ALTER VIEW dbo.Users AS SELECT * FROM dbo.Users_tbl WHERE IsDeleted = 0; GO |

(Quick note: you probably don’t want to use SELECT * in a view like this. Under the covers, SQL Server builds the code for the view once, and then if you add more columns to dbo.Users_tbl, the new columns won’t show up in queries until you call sp_refreshview. I’m just using the * here for simplicity.)

Doing the table-to-view switcheroo means the soft-deleted rows are instantly filtered out from my queries.

Index design is a little harder, too.

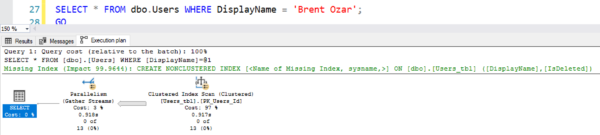

Whether use the view approach or add IsDeleted = 0 to all your queries, your execution plans are going to have something new: a filter on IsDeleted = 0. That also means that your missing index recommendations are going to ask for IsDeleted in the key of your index:

When you first implement soft deletes, this isn’t a huge concern for most queries because none of your data can be excluded (because no rows have IsDeleted = 1 yet.) However, the worst case for performance is a query that used to have a covering index, but now has to do a key lookup for every single row in order to double-check that the row hasn’t been deleted yet.

One workaround is to add IsDeleted to every index. In the beginning, it doesn’t matter if IsDeleted is part of the key or the includes – because it’s not filtering anything out yet. Over time, it starts to become more important. You’re going to think that someday, if the majority of your records are deleted, then you might even want IsDeleted to be the leading key in all of your indexes. That would be incorrect – you’d be better off with the next solution.

Another workaround is to use filtered indexes. A filtered index is just an index with a WHERE clause, like IsDeleted = 0:

|

1 2 3 |

CREATE INDEX IX_DisplayName ON dbo.Users(DisplayName) WHERE IsDeleted = 0; |

When a majority of your rows have IsDeleted = 1, this filtered index has a nifty benefit: it’s physically smaller because it doesn’t contain the deleted rows. It takes up less space, meaning shorter backups/restores/CHECKDB, and it also has less concurrency overhead if some kind of bulk operation needs to be done to the deleted rows.

Yet another workaround is to split the table up. If you swap a view in, there’s no reason you can’t have that view union across a couple of tables: one with active rows, and one with deleted rows. Well, there’s one reason you can’t do it: this style of storage becomes harder, and whaddya know, would you look at the time? I’ve spent an entirely long time writing out this blog post, and I’ll leave that split scenario for another post.

59 Comments. Leave new

What about putting deleted items in a JSON column in another table. That way you don’t need to worry about changing columns. Something like:

**DeletedUsers**

| Id : int PRIMARY KEY | JSON : nvarchar(max) |

I guess you really don’t need an ID column. But then you don’t need to do special indexing on the JSON.

You would still have to move the rows out of the original table, which basically violates all of the 4 reasons we’re doing this (listed at the beginning of the post.)

I guess I was commenting on “Yet another workaround is to split the table up.” But yes, there are trade offs to using another table, like perf, like you stated in the OP.

My first database language was FoxPro which did something very similar to this. It was built into the product but there was a “deleted” flag that you could only see as an admin and if you enabled seeing it. Then the deleted rows actually got cleaned up with a separate command. Until then though it was perfectly possible to “undelete” rows without much effort.

I can’t tell you how surprised and unhappy I was when I accidentally deleted some rows my first few days working with SQL Server and discovered that this wasn’t the way other database systems worked.

ah yes the PACK command…..

Yes, PACK

We use this method when 1) data is entered by the user, and it would take them a long time to re-enter it (i.e. annual evaluations) and/or 2) when we give the users the power to delete it themselves. I know it’s hard to believe, but they sometimes delete something they didn’t want to. This saves us a lot of headaches without having to track down when it was entered and when it was deleted and then restoring a copy of the database to recover the lost data. We never thought of the index ramifications. That’s certainly something to ponder.

My initial thought is why not use temporal tables for the same effect. Will you be exploring this with the last workaround regarding splitting the table, or, because the temporal history table tracks all DML operations, is it overkill?

Bingo, a temporal table would grow astronomically large, quickly, and present new problems.

But disk space is cheap. I’m not saying that I would use it here instead of Soft-Deletes. But it is really brilliant for showing why the system behaves different this week compared to last week…

I have implemented it on my “settings” tables, and used it to show who did what and when. 🙂

Henrik – on settings tables, sure, but that’s not really what this post is about. On real tables, like the multi-billion row ones I’m dealing with at a customer today, simply can’t afford to say “keep all prior versions of rows to see who did what and when.” I hear you though – on small desktop size apps, it’s probably fine.

Great example and use cases! We used a very similar technique for facts and dimension tables used to populate a data warehouse. Additionally, we added DateDeleted, DateUpdated, DateInserted for tracking purposes, updated by our ETL. The speed of “deletes” in a really large table and AOAG updates would be beneficial.

Another potential solution to your indexing dilemma is to make the dbo.Users view indexable and build the indexes on that instead of the underlying table.

A problem the trigger doesn’t resolve is that Instead Of triggers often return data to the calling code that the transaction was rolled back. I’ve had problems with that in a few scenarios. Before doing anything like this, it’s important to make sure the trigger won’t cause unintended issues with existing applications and services.

Yep, code changes require testing, absolutely.

“Filtering selects is a little harder.Ideally, if you can change the queries, you add “WHERE IsDeleted = 0” everywhere. ”

It looks a good candidate to use RLS(Row Level Security). Queries will still be like

SELECT col1 FROM tableand only “priviliged” users are allowed to see deleted rows. Combination of these two will be emulation of Oracle “In-Database Archiving”(https://oracle-base.com/articles/12c/in-database-archiving-12cr1)In my experience, app developers tend to want to use a single account for their app. Changing accounts based on the need to see auditing data makes things harder, and you still have to solve the filtering problem. I don’t see this one as a win.

That is true mainly because the application especially a web application is going to connect via a single connection string likely using a trusted connection of some sort (in the IIS world, we’re talking App Pool User, Network Service, etc.). Additional users start getting a little too granular for the job at hand and cumbersome to maintain.

Not necessarily changing connection. There are other options. You still could have single app user and filter based on for instance session_context variable and is_deleted column.

Lukasz – again, you’re adding a ton of complexity here, creating more problems than you’re solving. There’s a great saying that helps when designing software: K.I.S.S.: https://en.wikipedia.org/wiki/KISS_principle

“Quick note: you probably don’t want to use SELECT * in a view like this”

Another reason not to use SELECT * in the view is that you probably don’t want to return the new IsDeleted column, which will always have a value of 0.

The “table-to-view switcheroo” keeps everything working when you can’t change all the code at once. You do want the IsDeleted column in that view so that you can start changing code to respect the soft deletes.

Because none of us would _ever_ just leave the working code pointed at the view indefinitely, of course!

Hi, Brent!

From what I know, in some versions of SQL Server was something like a bug, when using filtered indexes. You had to add the filtered columns as included. I saw that you didn’t done that. Has anything changed in the newer versions?

Alex – well, there’s just only so deep I can go in a blog post. It’s something we discuss in my Mastering Index Tuning class though.

Ok so it’s still necessary in newer versions, but you didn’t want to complicate the article?

Alex – as much as you really want an answer that fits in a blog post comment, I can’t give it to you. That’s why I teach training classes. Is that fair?

Ok, it is fair. I was expected an yes/no answer. I assume it’s more complicated than that.

Yep! Exactly.

I enjoyed the post and the solutions to the common problems with a soft delete scenario. Thanks for writing it!

You’re welcome, glad you enjoyed it and took the time to share!

What is your view of a partitioned table partitioned on IsDeleted?

Iain – you would be moving rows between partitions, which again violates a lot of the reasons to do this in the first place.

We use this method, but instead, we use “IsActive”

You posted an article on May 6th about a silent bug you find in most triggers related to users writing triggers where some ID field is equal to the ID from either the inserted or deleted table. In this example, you are showing where the ID is IN a list of ID’s from the deleted table. Looks good to me but the reason for getting a list of the ID’s was because there could be more than one record in the deleted table. I know this trigger is “INSTEAD OF DELETE” but would there be a chance of the inserted table also having a record? I wouldn’t want to accidentally flag something as deleted if it was really meant for an update and is in both inserted/deleted tables.

Yep! Again, it’s beyond the scope of the post here. I’m just explaining soft deletes here, not how to design triggers in general. Hope that helps!

I’m going to discuss this approach with my team and we may use it for a future release. If so, I owe you an adult beverage!

There is a tiny* issue that is worth mentioning if a “filtered index” is mentioned in the blog, is that when someone writes a query\sp that references the table with a “IsDeleted” predicate in the WHERE clause instead of pointing to the view and they add a constant via a variable that holds (0) and is then used in the predicate i.e “IsDeleted = @IsDeleted” – the query optimizer will not pick the filtered index since it cannot know at any given point in time while the query runs what the “variable” value is (since it can.. vary, duh).

and I’ve had developers here write such code, they still do even after we removed some of their fingers (did not help).

Yan – yep, that’s not a tiny issue, but it’s outside of the scope of this post. Only so much I can teach in a single blog post, but I cover that in detail in my Mastering Index Tuning class. Thanks!

I once had an application where most of the tables had the equivalent of an IsDeleted column. It was designed this way from the beginning, so we didn’t use filtering views. The IsDeleted = 0 was a constant source of bugs, because you would forget to add it to your application queries. Generally, you didn’t have a lot of deleted rows in a test environment (i.e, most Users rows wouldn’t have a deleted post row), so it was easy to miss the bugs in testing.

@Wyatt, in an ideal world you would never expose your tables directly to an application. You would use some indirection to make breaking things harder. Like Views/Stored Procs/Inlined TVFs. That would stop a whole host of problems from happening.

I really like how PostgREST set up their API that is closing tied to the database. Really, even if you don’t use that software, it is a great resource on how to work with databases. Granted that is software that is designed for simple interfaces as the business rules get more complex you would need to use the strangler pattern to add in the added complexity.

Jon – that’s interesting, but I have never – not even once, in all my years of working with SQL Server – seen a SQL Server database set up that way.

I mean, I’m sure they exist – like the Queen of England – I just wouldn’t want people to think it’s normal to run into the Queen of England on a regular basis.

The database at my work right now is pretty much set up that way. But it has other oddities that make it so you are pretty much forced to interact with it through views just to make life easier. But the views are pretty much used for reads not writes. Though you could do writes on them too.

I’m not the one that set it up originally. But I am glad that they used views everywhere.

I imagine databases aren’t set up that way with PostgresQL either but the PostgREST guys are big into setting it up that way.

If I have a unique constraint on my Users table (say on UserName), I would have to abandon that, wouldn’t I? Even if I expanded the constraint to include IsDeleted, I could have only 1 “username” that is deleted and 1 that is not. I couldn’t delete the active “username” without violating the constraint.

(of course, I could change it from a unique constraint to a unique index with a WHERE clause… that would work)

Thanks for the blog post Brent, today I learned couple of new things.

You’re welcome, and glad you enjoyed it!

Couldn’t you just use patitioning on the IsDeleted column? Even gives you the nifty benefit of being able to move the “Deleted” records off to less expensive storage.

Scott – unfortunately when you set a row’s IsDeleted = 1, you’re going to move it from one partition (storage) to another, which kinda violates the points we’re discussing up at the top of the post.

I’ve seen cases where the Instead of Delete trigger is been is used in combination with row level security to exclude all deleted rows. Works nice since there is very little code changes. However if the trigger is not used and an update is performed in combination with Row Level Security to set the deleted flag, an app using Entity Framework for example, the concurrency checker will go nuts, because and update automatically excluded a row from the context and that should happen on delete not update.

[…] Brent Ozar shows how to use soft deletes in SQL Server: […]

how handle Unique Key constraint for Soft delete cases , If you dont want to add one more column . In MysQL there is option like we can create Unique Key constraint with where clause. but in SQL Server its not there . Is there any other option for this ??

You would need to add another column.

what if we still want to delete those soft deleted items if the data has been deleted at least 30 days ago and no one cares about it..!?

Then delete it.

i am not able to call the values where i d set deleted_at=datetime.now , i am only able to filter the values which is set to NULL, now i want to hard_delete it.

I’m not sure what you mean – it’s probably beyond what I can troubleshoot here in the blog post comments, sorry.

since version 12 Oracle has “In-Database Archiving” that addresses the same problem

Quick guide: https://oracle-base.com/articles/12c/in-database-archiving-12cr1

Official documentation: https://docs.oracle.com/en/database/oracle/oracle-database/21/vldbg/control-validity-visibility-data.html#GUID-5A76B6CE-C96D-49EE-9A89-0A2CB993A933

In multiuser environment with large number of transactions soft delete has another but very inconvenient consequence. There is no easy way to prevent a row from being inserted into the master table while someone else marks that row in the slave table as deleted. Especially if the master table has a large number of records. Example: Orders-OrderItems-Items. First insert of a new Item to OrderItems and soft deleting the same Item from Items.

If you’re facing that problem, feel free to post table & code examples at a site like dba.stackexchange.com, stackoverflow.com, or SQLServerCentral.com. I’ve faced that problem before and there are pretty straightforward solutions.