Today is the opening day of the 2018 PASS Summit, the largest annual gathering of Microsoft data professionals. Every year, thousands of us gather at the Washington State Convention Center in Seattle to connect, learn, and share. On this first day of the conference, all the attendees go to the same room to watch the keynote speech.

To watch it live for free, starting at 8:15AM Pacific, go to PASS.org.

The abstract:

SQL Server and Azure Data Services: Harness the future with the ultimate hybrid platform for data and AI

In today’s world, the forces of Cloud, Data and AI are driving innovation and ushering in the era of the intelligent cloud and intelligent edge. Microsoft’s goal is to bring you the best products and tools to tackle these new opportunities—helping you build a data infrastructure that supports your organization now and into the future.

Join Rohan Kumar, Corporate Vice President of Azure Data, as he demonstrates the latest advances from Microsoft across SQL Server, Azure Data Services, Business Analytics and AI. Preview new products and features and see the innovations that make Microsoft the best data partner of any provider – on-premises or at cloud scale.

What to expect: 100-200 level tech details on Azure & SQL Server 2019. Keynotes are general marketing about features because the audience experience level is so broad. Here at the Summit, you’ve got database administrators, developers, report writers, BI professionals, data analysts, managers, you name it. Microsoft aims for the lowest common denominator here, talking about features and customer stories in a way that 99% of the audience will be able to grasp. For example, when they talk about an Azure feature, they want you to be able to follow along even if you don’t use Azure. To see what to expect, check out my live blog from the 2017 day 1 keynote.

What not to expect: truly groundbreaking announcements. The much-larger Microsoft Ignite just finished up recently (like 30K attendees to Summit’s 3K). Microsoft tends to show off the really big stuff there, like the first downloads for SQL Server 2019. This keynote is likely going to be more about fleshing out feature details, telling customer stories, and sharing delighter features that might not move licenses, but will move the excitement needle. For example, take this tweet from the Power BI CTO:

November is just around the corner, and it’s a HUGE month for Power BI.

The volume and magnitude of features about to be released is absolutely staggering.

This is going to be the greatest Power BI update since its introduction. Period.

— Amir Netz (@AmirNetz) October 30, 2018

The day 1 keynote is a good way to get a fast overview of what’s new lately, and to help shape which conference sessions you want to attend.

What to expect in this blog post: I’m sitting at the Blogger’s Table in the middle of the keynote room, armed with Sony’s latest superzoom camera, taking notes. Every few minutes, I’ll update this blog post. The page won’t refresh itself, so if you’re following along at home and you want to see my latest takes, hit refresh. The keynote is scheduled to end at 9:45AM Pacific, at which point I’ll sign off, pack up my gear, and head off to watch sessions.

The keynote is scheduled to start at 8:15AM Pacific. Here we go!

8:13AM – surrounded by awesome folks at the blogger’s table.

8:18AM – Room goes dark, and they’re playing a welcome video. “PASS is a community like no other.” Amen to that.

8:20AM – PASS President Grant Fritchey welcoming folks to Summit v20.

8:25AM – Thanking the PASS Passion Award Winner for 2018: Michael Johnson.

8:28AM – Video showing past attendees & Microsoft staff talking about how the product and the event have changed over time.

8:30AM – Rohan Kumar, Corporate Vice President of Azure Data, taking the stage to start the demo party. He says Azure has made a big difference in helping Microsoft understand how customers use the product in real time.

8:33AM – “Hybrid is the way of the future, and this is exactly how it should be.” Good message for this crowd. “Hybrid enables comprehensive AI and analytics.” AI and analytics are bursty workloads and unfamiliar tools for most customers, and that’s a place where cloud services work so well.

8:36AM – SQL Server 2008 and 2008 R2 reach end of extended support on July 9, 2019, and Rohan’s suggesting that you move to SQL Server 2017 and Azure SQL Database Managed Instance. Huh – why didn’t he suggest SQL Server 2019?

8:41AM – Microsoft’s Argenis Fernandez has the answer, and I agree 100%.

That’s easy – migrations need to happen now, and not wait for GA of 2019.

— Argenis Fernandez (@DBArgenis) November 7, 2018

Sadly, I don’t think enough people are aware of how big migrations are, and how they need to start hustling now.

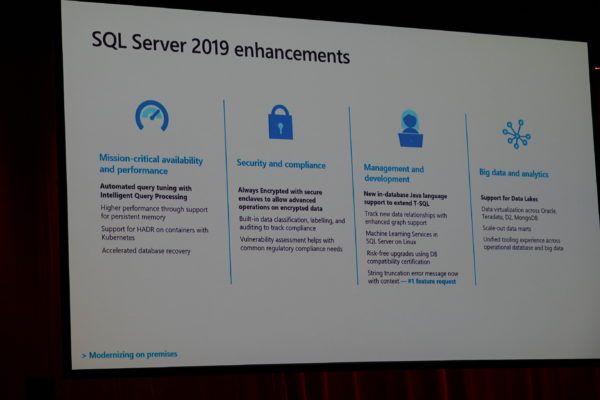

8:42AM – Rohan says these are the same four pillars they’ve been focusing on for the last several releases.

8:44AM – “We think this will be huge for ISV developers because you can write applications in Java and not worry about security.” Oh, so many jokes here.

8:46AM – We’ll get a new CTP every month. Last night’s CTP 2.1 added scalar UDF inlining, buffer pool in persistent memory, graph improvements, R and Python apps in big data clusters, and more.

8:47AM – Bob Ward and Conor Cunningham are up to show a Dell EMC PowerEdge R940 with 4 sockets, 96 cores, an unspecified amount of RAM, and SC9000 storage with 48×1.92TB SSDs. They’re showing a new SQL Server 2019 build with less TempDB contention. Conor: “This is just a prototype, but we’re replacing some system tables with Hekaton tables. It’s not in CTP 2.1, but it will be in SQL Server 2019.”

8:52AM – That TempDB improvement is just sweet. That’s the kind of real world improvement that will make a difference. People have been struggling with TempDB contention issues and latch contention issues they can’t solve. This is why I love Azure so much – now that Microsoft has to host SQL Server themselves, they learn so much at scale.

8:55AM – Brief coverage of PolyBase and Big Data Clusters.

8:56AM – “Our vision for Azure Data Studio is a single pane of glass that manages all your data platform assets.” Well, that’s what SSMS was – think SSIS/SSAS/etc – but now we’re starting again, now with even more platforms. It’s really ambitious to have one pane of glass to do all of this. I like ambitious.

Not just Microsoft databases, either – Microsoft’s Vicky Harp points out that PostgreSQL is on this slide:

Announcement of upcoming support for non relational and OSS databases, on prem and in cloud, in AzureDataStudio @RohanKData #PASSsummit pic.twitter.com/7IZL1SRbPU

— Vicky Harp (@vickyharp) November 7, 2018

9:00AM – Demoing Spark queries in Azure Data Studio against unstructured data stored in HDFS.

9:01AM – “Let’s finish up demoing one of my favorite features, Java support in SQL Server.” Talking about how it eases migration from competitive platforms. Translation: “LOOK OUT, ORACLE, WE’RE COMIN’ FOR YOU.”

9:02AM – Rohan back onstage talking about Azure’s support for other database platforms, Azure Database Migration Service, near-zero downtime, and migration at scale. I keep picturing Azure as a giant vacuum cleaner, determined to suck up your databases into the cloud and get you hooked. (That’s not a bad thing, either – they’re going to do a better job of managing that kind of data than you are, and it’s a race between Microsoft, Amazon, and Google to see who can take over more on-premises workloads.)

9:06AM – Azure SQL DB Managed Instance’s new Business Critical instances (Availability Groups) will be in General Availability starting December 1st! That’s fantastic. Right now only the single-instance ones are in GA.

9:08AM – Recapping the Ignite public previews of Accelerated Database Recovery and Machine Learning Services in Azure SQL DB, and Azure SQL DB Hyperscale. So many announcements in the data platform. Niko Neugebauer burst out clapping at the Machine Learning Services announcement here at the blogger table, hahaha.

9:12AM – Lindsey Allen is up to do 4 minutes of demos, quickly showing columnstore indexes, but the sizes are bloating up fast, so she’s enabling Azure SQL DB Hyperscale. “Most customers don’t like dealing with partition keys, and they’re really hard to get right.” Lots of laughs – it’s true.

9:13AM – “With Azure SQL DB Hyperscale, restores are really fast because they’re from snapshots. But I don’t have time, so I can’t demo it.” Chuckles. But seriously, snapshot restores will be mind-blowing for folks who haven’t tried that with SAN snapshots before. (And SAN snapshots are an intimidating feature for new shops.)

9:16AM – Accelerated Database Recovery, because slow rollbacks and restores are bad. “We sure experienced those pains in Azure. Sleep deprivation is a very powerful motivator.” Showing how, with ADR, the transaction log stops growing at a certain point because ADR truncates it aggressively. (It has a lot more tricks than that, too – ADR just looks spectacular as a new feature. Really looking forward to digging into it.)

Here’s more info about Accelerated Database Recovery.

9:18AM – “We want to make Azure the best place to run any database,” referring to open-source databases. Recapping recent Azure Cosmos DB announcements. Microsoft has so many different data platform tools now that it’s not really effective to do demos across all of them. Even talking quickly, Rohan can’t cover all of the announcements – he’s spending only tens of seconds talking about things that took millions of dollars of investments. Microsoft’s investing a lot in data, and I bet most attendees don’t realize how wide-ranging this work is.

Demoing Cosmos DB multi master replication with a drawing app. You just know they’re not gonna share the URL for that, hahaha. #PASSsummit pic.twitter.com/QSzqN4GzTj

— Brent Ozar (@BrentO) November 7, 2018

9:24AM – Talking about Cosmos DB’s choice of different consistency levels. I get that it’s extremely hard to build technically, but we’re talking to an audience that has been used to changing isolation levels on the fly for decades. I’m personally excited about how Cosmos DB delivers that stuff, though.

— rimma nehme (@rimmanehme) November 7, 2018

Alright, dear readers, you know what to do: DRAW STUFF.

9:30AM – Tons of improvements for Azure SQL Data Warehouse: row level security, Accelerated Database Recovery, virtual networks. Sponsored comparisons say Redshift is more expensive and slower, and Rohan says the secret is local caching on SSDs. John Macintyre is coming onstage to demo it, processing over 1 trillion rows per second.

9:32AM – Thinking big picture for a second: Microsoft’s challenge with data warehouse implementations has never been their side of the delivery. They did a great job of building reference architectures, then working with hardware vendors to design reference systems. The challenge was that customers didn’t build it out right, either buying the wrong hardware, or not buying enough of it, or configuring it wrong. Azure SQL Data Warehouse’s wonderful speed is due to multiple factors: better architecture, but also taking customers’ grubby fingers out of the equation so they can’t screw up the hardware. You pay based on the capacity and speed you want, and Microsoft takes care of the rest.

9:37AM – Fast coverage of HDInsight 4.0, Azure Event Hubs for Kafka, and Azure Data Explorer (which looks AMAZING, by the way; I love love love that). Ariel Pisetzky of Taboola is coming onstage to talk about it.

9:40AM – I don’t blog about these tools, but based on what I’ve seen of it, Azure Data Explorer is the tool your analysts really want. Everything from the pricing to the speed of setup to the flexibility in querying looks awesome to me. I haven’t put my hands on it myself (I just don’t have the need for it), but I’d strongly recommend watching a few demos of it so you can talk about it with your analysts.

9:42AM – “There is no keynote complete without Power BI.” Recapping the Dataflows feature (ETL that pulls data from data lakes into Power BI) and the public preview of SSRS reports in Power BI.

9:45AM – Patrick LeBlanc coming onstage to talk about SSRS reports in Power BI. The guy is a fantastic presenter, and people are excited to see him liven things up. The blogger table cheered at his name.

Big whoops for @patrickdba. #PASSsummit pic.twitter.com/AK4b3Ag8Zo

— Brent Ozar (@BrentO) November 7, 2018

The SSRS experience in Power BI looks fantastic, including the printing integration.

9:47AM – Folks on Twitter starting to pass judgment on the keynote, and it’s a happy camp:

There was definitely less marketing talk and slogans and more announcements and demos than in previous years in this keynote. Good job by Microsoft! #PASSsummit2018 #PASSSummit

— Glenn Berry (@GlennAlanBerry) November 7, 2018

This IS the most intensive demo-and-announcement-based #Keynote ever!

Wow! #sqlpass #passSummit

— Niko Neugebauer (@NikoNeugebauer) November 7, 2018

Just watched how fantastically dynamic a speaker @patrickdba has become. I can say I knew him when. Makes me so happy to see how much he loves what he does. It’s infectious. #PASSSummit #powerbi

— Mindy Curnutt (@sqlgirl) November 7, 2018

9:49AM – Deepsha Menghani demoing Shell’s prototype of incident detection with Azure. “I’m going to do an image search for a gas station on fire.” O_O She’s using machine learning to detect problems, holding a fake cigarette up to a webcam; when the model detects a potential hazard, it can shut off the pumps.

And that’s a wrap! We’re done. Have fun at Summit!

2 Comments

>8:47AM – Bob Ward and Conor Cunningham to show a Dell/EMC R940 with 4 sockets, 96 cores…

Is the big core count just to load up on processes to better show the TempDB contention? Dell has a couple of SQL whitepapers that also spec high numbers of CPU cores. I remember the conventional wisdom in the past (both here and in Glenn Berry’s posts) being to use the low-core-count “speed optimized” CPUs because 1) unless you have awesome storage, more cores will just be waiting for bytes, and 2) licensing costs, especially for Enterprise. Has that calculation changed with 12Gbps SSDs becoming commonplace? Or are these just speed demos/benchmarks that ignore the practicalities of licensing costs?

Believe it or not, some folks actually need servers that large! We love working on those kinds of systems.