In part 1 and part 2 of this series, I showed you the various ways to get the application’s SET options so that you can get the same execution plan as the application. If you skip that step and run the query that is timing out in the application, it could be fast in SSMS. Your SET options must match the application’s in order to reproduce the slowness users are seeing.

Once you’ve reproduced the slowness, it’s time to start testing for a workaround or a solution. You need to be aware of the density vector issue when testing.

What is the Density Vector?

When you use a local variable in a query, SQL Server cannot use the statistics histogram. The histogram is where SQL Server has estimates for how many rows are equal to a value, how many rows are between two values, etc.

What does SQL Server use to estimate how many rows there are if it can’t use the histogram? It uses the number of rows in the index multiplied by the distribution of the values, aka the density vector.

Let’s look at an example that uses the histogram.

|

1 2 3 4 5 6 7 8 9 10 |

CREATE PROCEDURE GetPostsByUser (@UserId int) AS SET NOCOUNT ON; SELECT Users.Id, Users.DisplayName, Posts.Id, Posts.CreationDate, Posts.Title, Posts.Score, PostTypes.Type FROM Posts JOIN Users ON Posts.OwnerUserId = Users.Id JOIN PostTypes ON Posts.PostTypeId = PostTypes.Id WHERE Posts.OwnerUserId = @UserId; |

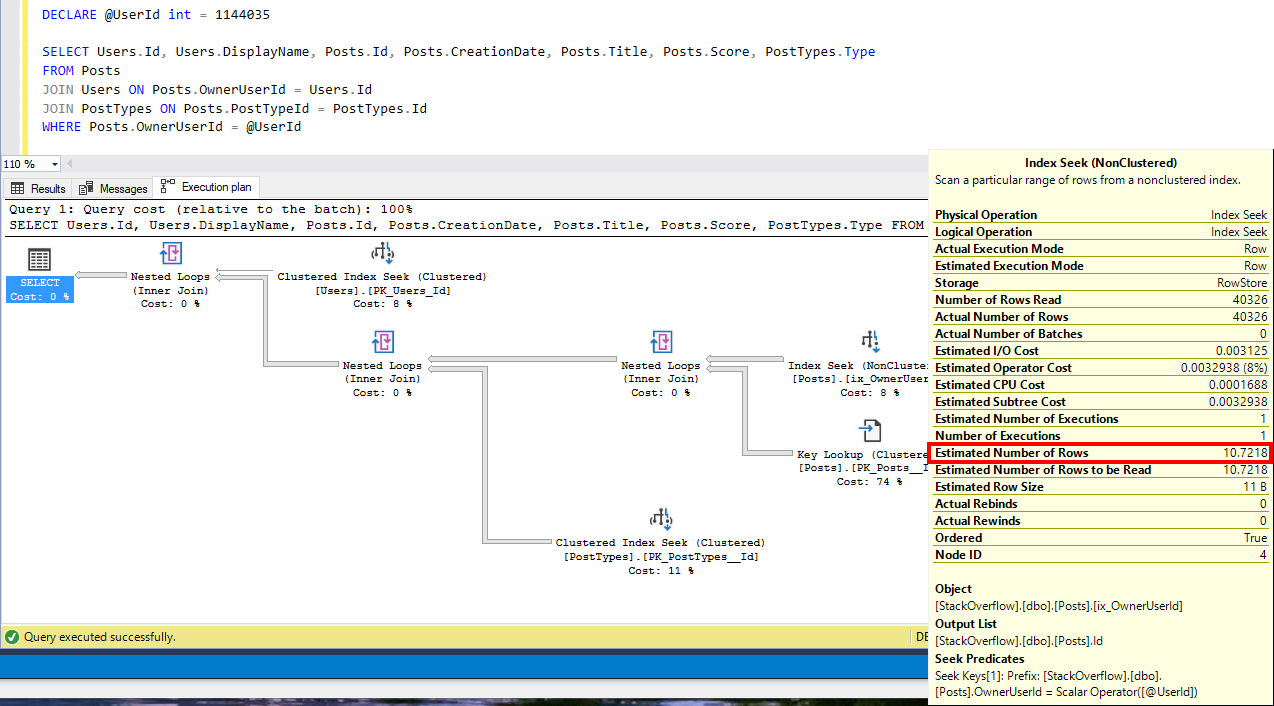

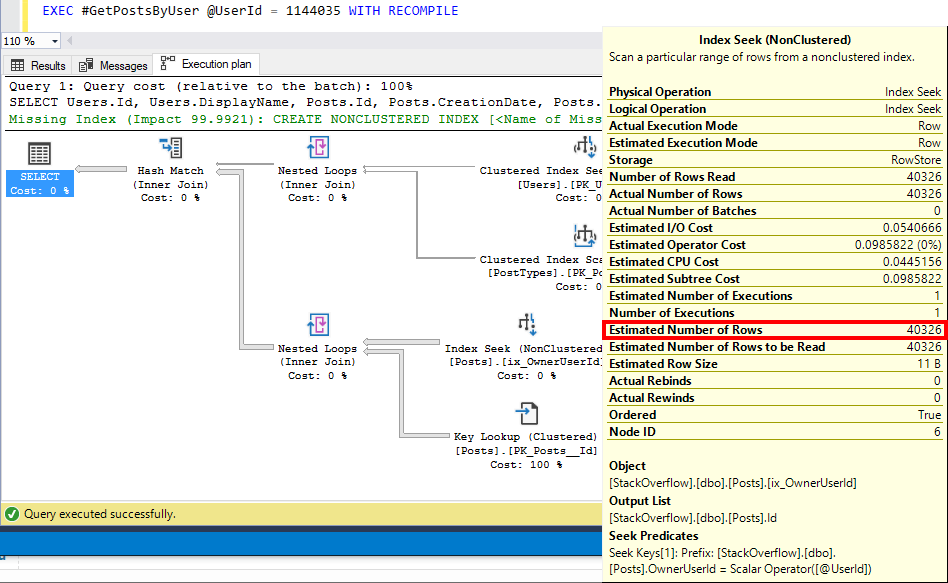

For UserId=1144035, there are 40,326 estimated rows to be returned from the ix_OwnerUserId index on Posts.

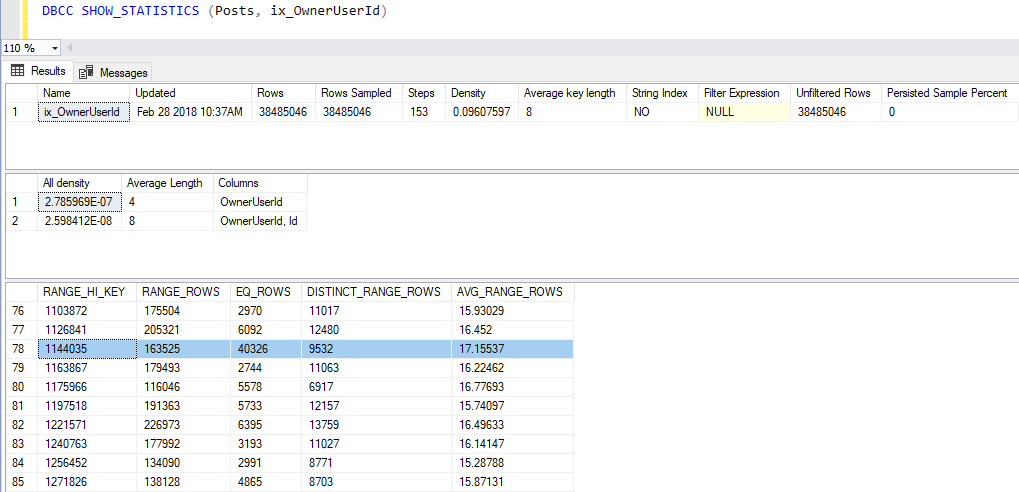

Let’s look at the histogram for the index that was used, ix_OwnerUserId.

RANGE_HI_KEY=1144035 has EQ_ROWS=40326. This user has answered so many questions on Stack that it has its own row (step) in the histogram.

Now let’s look at the estimate when we use a local variable.

We now have an estimate of 10.7218 rows for pretty much the same query. It was 40326 before. That’s quite a discrepancy.

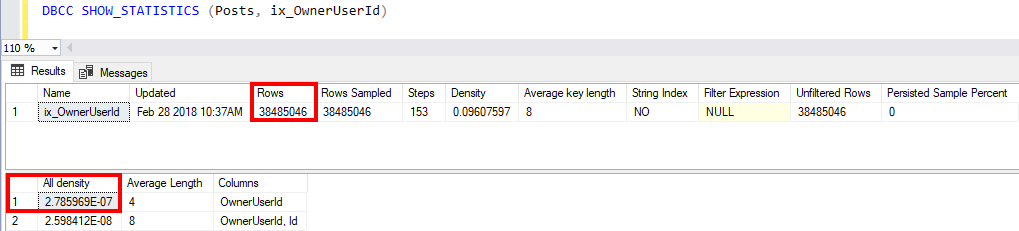

Where did it get 10.7218 from? This used the density vector instead of the histogram!

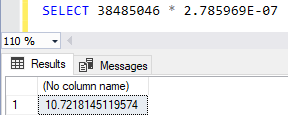

Calculate “number of rows in the index multiplied by the distribution of the values”.

10.7218!

Why does this matter?

SQL Server chose a different execution plan for the local variable than it did for the stored procedure even though both used @UserId = 1144035.

You aren’t comparing apples to apples when troubleshooting parameter sniffing issues in production if the source query is parameterized, like a stored procedure, and you are testing with a local variable.

For more information on this topic, check out Benjamin Nevarez‘s post about OPTIMIZE FOR UNKNOWN. You might be confused why I’m directing you to a post about OPTIMIZE FOR UNKNOWN. Using a local variable is equivalent to using OPTIMIZE FOR UNKNOWN. Both use the density vector.

Avoiding the Density Vector

How do we avoid the density vector when we are actively troubleshooting a parameter sniffing issue in production?

The easiest way is to use a temporary stored procedure.

|

1 2 3 4 5 6 7 8 9 10 |

CREATE PROCEDURE #GetPostsByUser (@UserId int) AS SET NOCOUNT ON; SELECT Users.Id, Users.DisplayName, Posts.Id, Posts.CreationDate, Posts.Title, Posts.Score, PostTypes.Type FROM Posts JOIN Users ON Posts.OwnerUserId = Users.Id JOIN PostTypes ON Posts.PostTypeId = PostTypes.Id WHERE Posts.OwnerUserId = @UserId; |

Keep in mind that this object only exists in the session/query window you created it in, so you’ll need to execute it there too.

To make your life easier, use WITH RECOMPILE when you execute it to avoid having to remove the plan from cache each time you make a change or want to test which plan you get for various parameter values.

|

1 2 |

EXEC #GetPostsByUser @UserId = 1144035 WITH RECOMPILE EXEC #GetPostsByUser @UserId = 26615 WITH RECOMPILE |

Mission Accomplished

My goal with this three-part series was to educate you on how to troubleshoot parameter sniffing issues the right way. There’s plenty of blog posts on this already. It has become very apparent since becoming a consultant that many, maybe even most, people don’t know that they are doing it wrong. I’m not saying that you are doing it wrong, but many are.

My goal with this three-part series was to educate you on how to troubleshoot parameter sniffing issues the right way. There’s plenty of blog posts on this already. It has become very apparent since becoming a consultant that many, maybe even most, people don’t know that they are doing it wrong. I’m not saying that you are doing it wrong, but many are.

17 Comments. Leave new

I didn’t know any of that (cause I’ve never had to troubleshoot parameter sniffing).

At least now I’ll have AN idea, rather than no-eye deer.

Thanks for the well-written article!

You’re for sure my favorite comment of the day, probably of the month, maybe even of the year. And the name. Love the name. Haha!! Glad this series has been helpful to someone. It’s painful hearing how some people troubleshoot problems. “You’re, uh, not doing that right. Let me show you.” Or something like that. I’m not the most gentle with my words.

OMiGosh. When was the last time you guys did something that wasn’t “…helpful to someone.”

I’m totally deer-in-the-headlights only because I haven’t taken time to really get into this series.

I keep hearing about it from you guys so it must be a thing – I probably have it and have no idea.

I’m looking forward to digging into this some day, but we’ll see. Busy. Busy.

Brent says, “Can you believe people buy training and never watch a single video.”

Thank You, Tara, for putting this together for us.

You Rock!

I put my hand up to answer Brent’s question but it didn’t post.

Great post Tara! I’ve fumbled around seeing estimates sometimes use the density vector and other times not. I never put it together to realize it was local variables vs parameters – genius! Also, “…This user has answered so many questions on Stack that it has its own row (step) in the histogram….” <–Goals.

Great blog post Tara! Could you tell me if there is any difference between an application sending prepared statements to issuing a sql statement with a local variable or are the effects basically the same regarding parameter sniffing?

Erik has a blog post on that topic coming out next week, I believe on March 15th. The short answer is that he shows that sp_prepare uses the density vector just like local variables. No bueno.

Really useful information in the series, my only nitpick is the URL’s which have two different structures :p

Posts 1&3 both use “issues-the-right-way-part-x”

Post 2 uses “issues-right-way-part-x”

It just doesn’t look right in my list of useful blog posts!!!

I have that same nitpick. A blog reader pointed out that the part 2 link in this blog post was wrong, so I went in to check. I had to remove the “the” from the part 2 link in part 3/this blog post to fix it, which means it was there when I wrote the blog post but not there when it posted. Argh!

“How do we avoid the density vector when we are actively troubleshooting a parameter sniffing issue in production?

The easiest way is to use a temporary stored procedure.”

Instead adding option (recompile) at the end of select command?

OPTION (RECOMPILE) is one way to work around a parameter sniffing issue, but it comes at a CPU cost. Be very careful with this option if the query is frequently executed. To answer your question though, you don’t add option (recompile) to avoid the density vector issue when setting up your environment to test a parameter sniffing issue.

Great post. Thanks a lot.

Is there any way to tell if the estimated number of rows in a query plan was generated based on a histogram or density factor?

Not from the query plan, but you can tell by how the query/stored proc is written. Look for local variables that then get used in a query. Erik indicates you can add trace flags 3604, 2363 into the query itself to expose cardinality estimation, but that would be when troubleshooting only. Instead just look at the query. OPTIMIZE FOR UNKNOWN uses the density vector, so that one is very clear when you see it in the query.

Hi, Tara! When I use the parameter passing to the stored proc the Optimizer checks precompiled plan and gets histogram derived value for the precompiled parameter value to compute estimated rows count. When I create the parameter inside the stored proc or use OPTIMIZE FOR UNKNOWN the Optimizer uses density vector to compute estimated rows count. Both options can lead to a performance hurting unefficient query plan in a case of an uneven distribution of the column values. If I want the Optimizer to check the histogram to get the actual data for the current parameter value I should use OPTION RECOMPILE that can lead to a performance hurting usage of the CPU. So what can be useful pattern for a stored proc that deals with the nonuniform distirbution of the column values or with the distribution with the presence of the modes?

You’d need to test what works best for that stored procedure. I have used OPTIMIZE FOR for this, either directly in the stored procedure or in a plan guide. OPTION RECOMPILE is fine as long as the object isn’t called frequently.

Thank you for this wonderful post. I have been troubleshooting “parameter sniffing” on some stored procedures that would randomly produce poor query plans. After tweaking the queries to include a local variable, I was able to get consistent good plans and I wasn’t sure as to why it worked versus just using a “OPTIMIZE FOR UNKNOWN ” hint which also corrected the plans. Now I know why and it’s going to save me a ton of debug time and will be incredibly helpful explaining to my team on the why and how to solve this type of issue. 🙂