Many of you are troubleshooting Parameter Sniffing issues the wrong way in production. In this three-part series, I’ll show you how to troubleshoot it the right way:

- Getting the Compiled Parameter Values

- Getting the App’s SET Options

- Avoiding the Density Vector

This is part 1 in the series.

Parameter Sniffing 101

When a query suddenly degrades in performance, my first thought is always a bad execution plan. If it’s a query that gets executed frequently, there’s usually higher CPU utilization when there’s a bad plan in the plan cache.

You’ll know if that’s the case if the production emergency goes away after you’ve removed the bad plan from the plan cache. But wait! Before you take corrective action on the problematic query, save the plan. You are going to need that plan to continue troubleshooting after you have removed it from the plan cache.

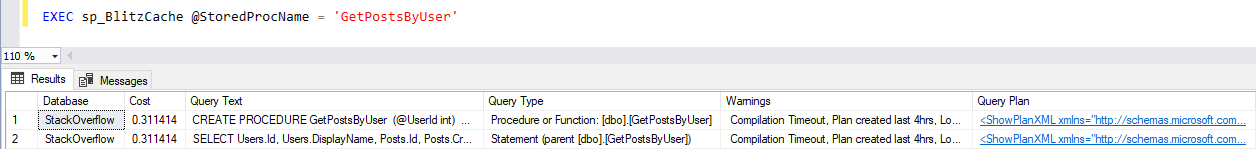

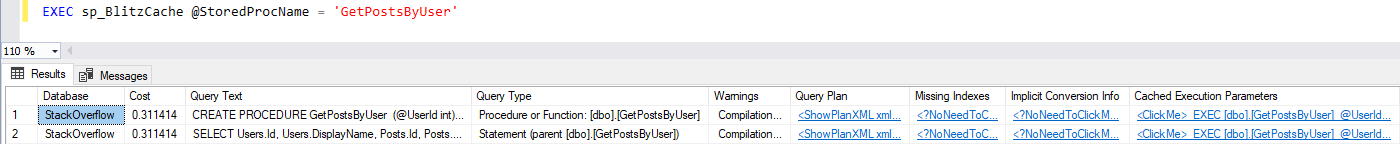

There are various ways to get the plan for a query. I’m going to use sp_BlitzCache.

We’ve got two rows here as sp_BlitzCache has info about the stored procedure and the one statement inside it. Click on the “Query Plan” value for either row. Save it as a sqlplan file.

Now that you’ve saved the plan, you can fix the production emergency by removing the plan from the plan cache. If it’s a stored procedure that I’m dealing with, then I’ll use sp_recompile as I’m old school like that. Otherwise, I’ll use DBCC FREEPROCCACHE(<sqlhandle>) or DBCC FREEPROCACHE(<planhandle>).

|

1 |

EXEC sp_recompile 'GetPostsByUser' |

If the production emergency stops, you know you’ve found a bad execution plan and now need to see what can be done to avoid this in the future.

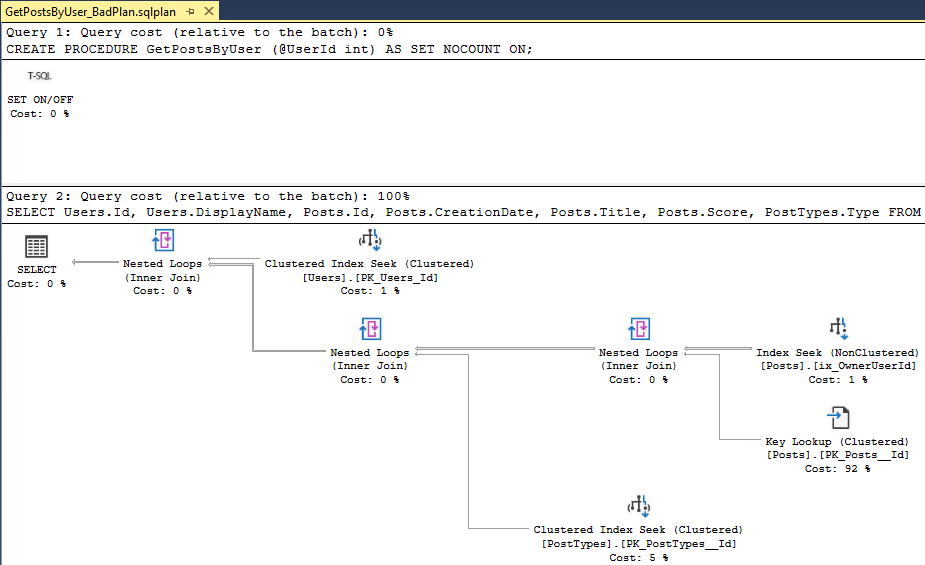

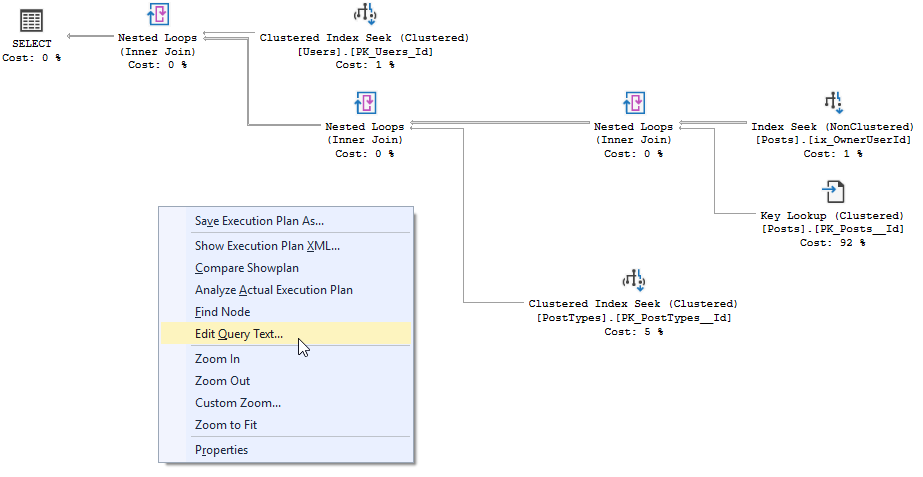

You can quickly get the stored procedure code by right-clicking on the plan anywhere that’s white (not an operator) and selecting “Edit Query Text…”

|

1 2 3 4 5 6 7 8 9 10 |

CREATE PROCEDURE GetPostsByUser (@UserId int) AS SET NOCOUNT ON; SELECT Users.Id, Users.DisplayName, Posts.Id, Posts.CreationDate, Posts.Title, Posts.Score, PostTypes.Type FROM Posts JOIN Users ON Posts.OwnerUserId = Users.Id JOIN PostTypes ON Posts.PostTypeId = PostTypes.Id WHERE Posts.OwnerUserId = @UserId; |

You may see that the query text is truncated if your query is obnoxiously long. Mine is short, so we get the whole thing.

Getting the Compiled Parameter Values

The next step is getting the parameter values that were used when the query was compiled.

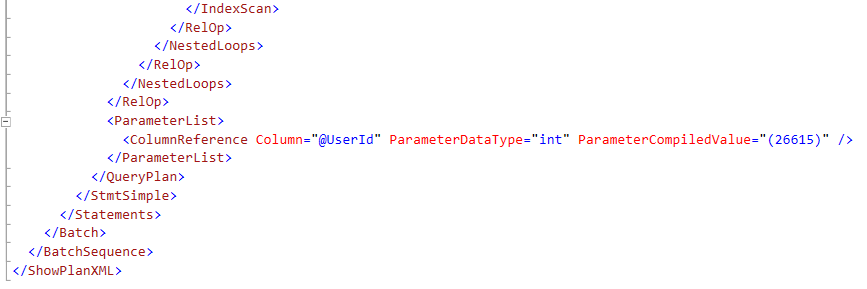

Right-click in the white area of the plan and select “Show Execution Plan XML…”

Scroll to the bottom and locate the value for ParameterCompiledValue.

When this query was compiled, the stored procedure was executed with @UserId = 26615. The execution plan was optimized for @UserId = 26615.

When the execution plan is optimized for @UserId = 26615, does it perform well for other @UserId values? If the answer is no, then this one is considered a bad execution plan. It was good for @UserId = 26615 though.

You can also use sp_BlitzCache to get the compiled values.

Click on the “Cached Execution Parameters” value for either row.

What’s Next?

What’s Next?

You aren’t quite ready to start testing yet. Next up:

33 Comments. Leave new

Would you need to follow the step of saving the Exec Plan if you’re using the Query Store? Or better yet, if the execution plan is the issue, would you rather force a better execution plan via the Query Store?

Those are good questions, Sean! With the low adoption rate of Query Store and the fact that most servers aren’t on versions that have Query Store, I’m focusing on the old fashioned way of dealing with parameter sniffing issues for this series. Query Store is a great way to troubleshoot these issues though.

And here I was questioning my perceived understanding of the Query Store. Thanks for the reply!

Can you point me in the right direction on how to properly use the Query Store?

Joel, if it were me, I’d be using Erin Stellato’s blogs about Query Store: https://www.sqlskills.com/blogs/erin/category/query-store/

Having done some similar troubleshooting in a Query Store environment, and doing some research into “forcing the plan” – the one caveat there is that “forcing” a plan with QS merely means that the optimizer tries to adhere to the forced plan as much as possible, but won’t necessarily run the exact same plan (I saw a blog article somewhere at the time that called it getting “spiritually close”, though I can’t find the reference now…). The upshot of that is that if the “forced” plan is no longer valid, due to an index or schema change, the optimizer will still figure out something to do and your query will still run. The drawback is that if your query is especially sensitive to parameter sniffing issues, forcing the plan via QS may not be 100% effective, if there are certain edge cases that can count as “close enough” to your forced plan but still tip over into the bad execution plan (I had one case where a query supporting an intermittent ETL process had recompiled over 20 different variations in a 48 hour period, and forcing the best one didn’t really keep that one running, it would go through several of the other similar but not very performant iterations of the plan as that query kept getting bumped out of the plan cache).

So, while I find the stats & cached execution plan data of the Query Store to be very useful, I consider forcing a better execution plan via Query Store a combination of stop gap measure and last resort; you will still want to generate a permanent fix for the issue via the normal methods (aka fix the query).

‘morally equivalent’ i think the wording was 🙂

great write up

I have a query that always runs for about 30 seconds that we have been troubleshooting and when we run sp_blitzcache it shows parameter sniffing. I ran through this to remove the execution plan and now it runs in 4 seconds. Amazing! I can’t wait for the next 2 in this series!

Hopefully you saved the execution plan before you recompiled it so that you can play along after seeing the next 2 parts in the series. But great that it is faster now!

I did indeed!

How could you go about this process in a an environment where all Stored Procedures are encrypted?

Update your resume? Just kidding. You can still recompile them. Your best bet is probably going to be to use the Query Store, though admittedly I have never had to troubleshoot encrypted stored procs. That wouldn’t be any fun. The best part of being a production DBA is troubleshooting performance issues. Encrypted stored procs would make me very sad.

This is like the classic Jeremiah “leave town” answer. There are definitely challenges to this problem, but I will figure it out.

In your example, there is just one parameter, the UserId. Many of our stored procedures have multiple parameters and I can only see the first one. Is there a method of obtaining all the parameter values?

good question and would love to see the answer!

I have the same situation^^

They are in the plan.

It looks like this in the XML:

ParameterList

ColumnReference Column=”@Param2″ ParameterCompiledValue=”(2)” /

ColumnReference Column=”@Param1″ ParameterCompiledValue=”(1)” /

/ParameterList

Chris, and VKDBA, it’s the same method. They are in the plan. I updated my proc just now to have @CreationDate and then used the same method described above. Here’s what the plan now has for ParameterList:

ParameterList

ColumnReference Column=”@CreationDate” ParameterDataType=”datetime” ParameterCompiledValue=”‘2010-01-01 00:00:00.000′”

ColumnReference Column=”@UserId” ParameterDataType=”int” ParameterCompiledValue=”(26615)”

ParameterList

Unfortunately, for many of my stored procedures, the plan does not contain all parameters.

For example, a stored procedure defined as:

[GetQcARPByOrderIds] (@languageId int, @orderIds nvarchar(MAX))

only has the languageId parameter:

I have encountered this issue many times and I have not been able to figure out why. One thought I had was that it was the datatype of the second parameter (nvarchar(max)) which is used extensively for procedures in our system, to pass multiple values for the search criteria.

The system does not seem to like XML

ParameterList

ColumnReference Column=”@languageId” ParameterCompiledValue=”(0)”

ParameterList

Chris — this only catches variables passed in to the stored procedure.

They won’t show up here if they’re declared in the body of the proc, etc.

Are there not some general ways to simply prevent parameter sniffing? For example using local variables to catch the passed in parameter values and referencing the local variables in the code? And other changes to the code, such as hints, with recompile, etc? Obviously these would be difficult to arrange if you don’t own the code, but mostly we do own it. And recompiling on every execution obviously could impact performance in a bad way. But there are a myriad of prevention approaches?

There’s lots of ways to workaround or resolve parameter sniffing issues. You’re going to want to stay tuned for part 3, which comes out tomorrow. Using local variables is NOT the way to workaround parameter sniffing issues. Part 3 covers that topic. The best solution is to get the right covering index in place and/or rewrite the query. I like plan guides, hints, etc. I love OPTIMIZE FOR. Lots of way to work around it. Local variables should be avoided. If you are on a newer version, you’ve got the Query Store. The goal of this series is not to show the various workarounds, but rather to get people to troubleshoot it correctly and THEN work on workarounds/solutions.

Part of me wonders, in newer versions, and this could completely be related to query store and I’m just not there yet, but why when talking query plans – if you’re going to “force” a query plan, or save or hint one… why you can’t have it figure the boundaries of a few plans to find the best.

In this day of much memory, you’d think optimising the best set of plans would be more important than parameter sniffing the one single plan to rule them all, for a stored procedure.

You can do that now with IF branching. Have duplicates of your stored procs. In the case of CustomerId, have one for large customers, one for medium customers, one for small customers. Then have a parent stored proc with IF branches. If @CustomerId …StoredProcLargeCustomer…IF @CustomerId…StoredProcSmallCustomer. Brent says it’s a dangerous idea though as you have to know your data well and keep in mind that things could change in the future. What happens when a small customer is now a large customer? Are you going to trigger an alert to change the logic?

Thanks Tara,

Soon my firm will be on sql 2016. I would like to learn about TSQL coding techniques/best practices for prevention of sniffing during development phase, to head off even the need for later production troubleshooting. I will stay tuned for part 3.

Part 3 doesn’t cover that. But it does cover the density vector issue, which is what local variables use. The goal of this series is getting people troubleshooting things right and then use other blog posts on the various ways to workaround or fix a parameter sniffing issue. Every query may need a different workaround or solution. There isn’t a one sized fits all topic unfortunately.

This will all get solved when the query optomiser ML puts us all out of jobs though, right? I think until it can write better queries, it’s going to do the best it can 🙂

I have many parameter sniffing problems, but they’re all compounded by terrible database design (think 1 table that’s a terabyte because it logs and controls every screen, so every query touches it, for every area – and an invoicing table that’s hundreds of columns wide, because normalising is for people who don’t like deadlocks). Between that and “this is a centre piece of the database and it can’t be changed, I’m having fun.

[[There isn’t a one sized fits all topic unfortunately.]]

Well that is not what I wanted to hear Tara 🙂 I will pretend to be a manager and ignore that statement 🙂

When I looked into one stored procedure to work through this post I found that it had multiple compiled values for the one parameter! One statement at the start checked if a record exists matching the parameter and exit with an error code if it doesn’t. A later statement then found all the matching records with other joins (that could fail but would not be an error). It seems like the first time the procedure was called it was for an error condition so it didn’t call the second statement.

Is this a problem? sp_BlitzCache only reported the last parameter.

I’ve never seen that happen, but that’s interesting. Not sure if it’s a problem or not or if sp_BlitzCache isn’t reporting it correctly. If you want us to look into it, you could post the compiled section of the xml in the firstresponderkit channel in Slack (sqlslack.com). We might direct you to github to report a bug though. I think we’d want to see the xml first though to make sure we are on the same wavelength.