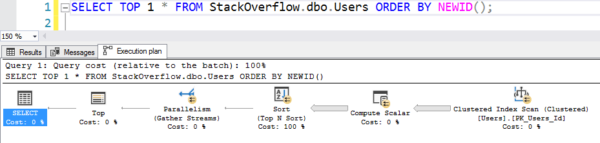

Method 1, Bad: ORDER BY NEWID()

Easy to write, but it performs like hot, hot garbage because it scans the entire clustered index, calculating NEWID() on every row:

That took 6 seconds on my machine, going parallel across multiple threads, using tens of seconds of CPU for all that computing and sorting. (And the Users table isn’t even 1GB.)

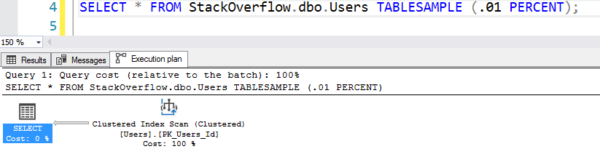

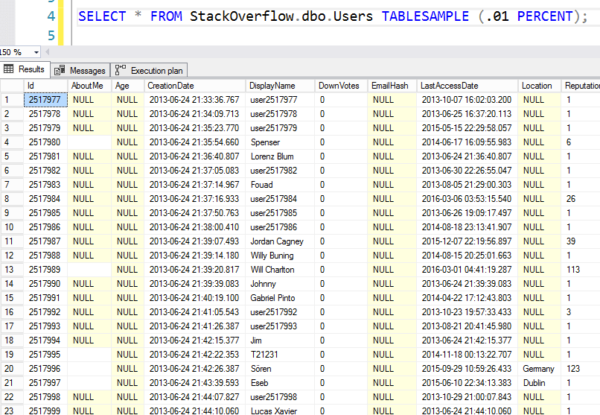

Method 2, Better but Strange: TABLESAMPLE

This came out in 2005, and has a ton of gotchas. It’s kinda picking a random page, and then returning a bunch of rows from that page. The first row is kinda random, but the rest aren’t.

|

1 |

SELECT * FROM StackOverflow.dbo.Users TABLESAMPLE (.01 PERCENT); |

The plan looks like it’s doing a table scan, but it’s only doing 7 logical reads:

But here’s the results – you can see that it jumps to a random 8K page and then starts reading out rows in order. They’re not really random rows.

You can use the ROWS sample size instead, but it has some rather odd results. For example, in the Stack Overflow Users table, when I said TABLESAMPLE (50 ROWS), I actually got 75 rows back. That’s because SQL Server converts your row size over to a percentage instead.

Method 3, Best but Requires Code: Random Primary Key

Get the top ID field in the table, generate a random number, and look for that ID. Here, we’re sorting by the ID because we wanna find the top record that actually exists (whereas a random number might have been deleted.) Pretty fast, but is only good for a single random row. If you wanted 10 rows, you’d have to call code like this 10 times (or generate 10 random numbers and use an IN clause.)

|

1 2 3 4 5 6 7 8 9 |

/* Get a random number smaller than the table's top ID */ DECLARE @rand BIGINT; DECLARE @maxid INT = (SELECT MAX(Id) FROM dbo.Users); SELECT @rand = ABS((CHECKSUM(NEWID()))) % @maxid; /* Get the first row around that ID */ SELECT TOP 1 * FROM dbo.Users AS u WHERE u.Id >= @rand; |

The execution plan shows a clustered index scan, but it’s only grabbing one row – we’re only talking 6 logical reads for everything you see here, and it finishes near instantaneously:

There’s one gotcha: if the Id has negative numbers, it won’t work as expected. (For example, say you start your identity field at -1 and step -1, heading ever downwards, like my morals.)

Method 4, OFFSET-FETCH (2012+)

Daniel Hutmacher added this one in the comments:

|

1 2 3 4 5 6 7 8 |

DECLARE @row bigint=( SELECT RAND(CHECKSUM(NEWID()))*SUM([rows]) FROM sys.partitions WHERE index_id IN (0, 1) AND [object_id]=OBJECT_ID(‘dbo.thetable’)); SELECT * FROM dbo.thetable ORDER BY (SELECT NULL) OFFSET @row ROWS FETCH NEXT 1 ROWS ONLY; |

And said, “But it only performs properly with a clustered index. I’m guessing that’s because it’ll scan for (@rows) rows in a heap instead of doing an index seek.”

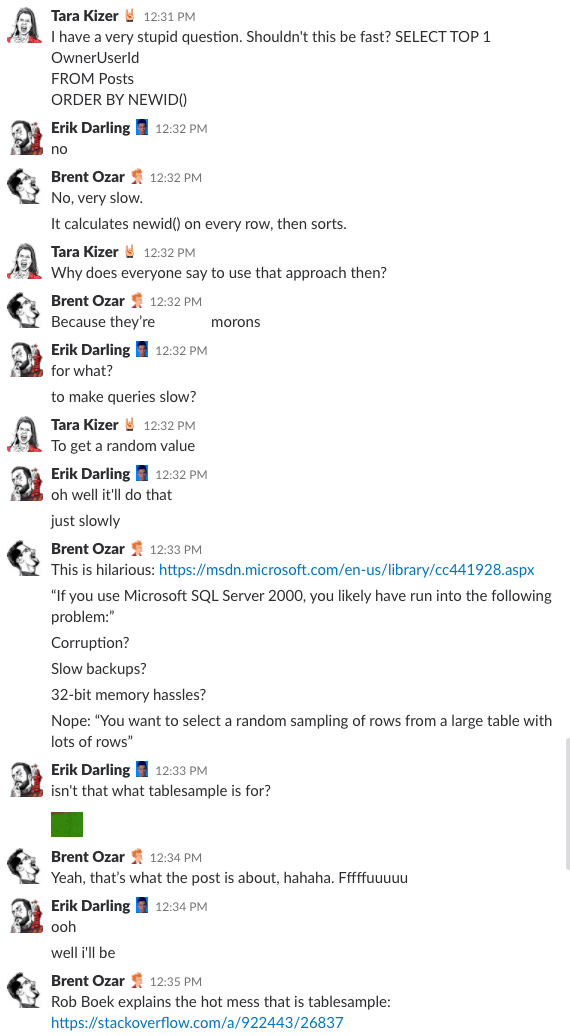

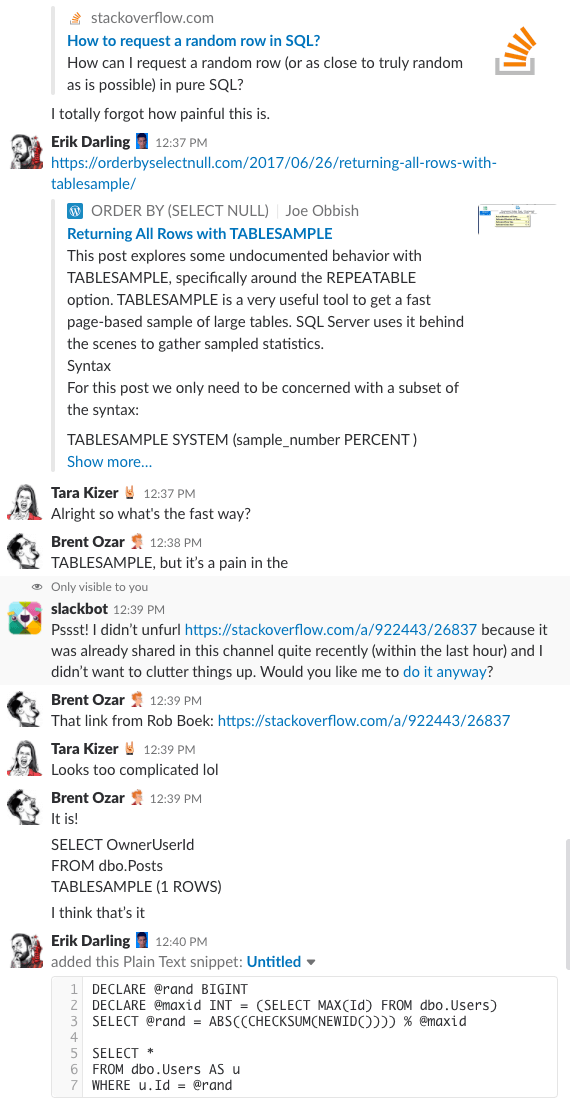

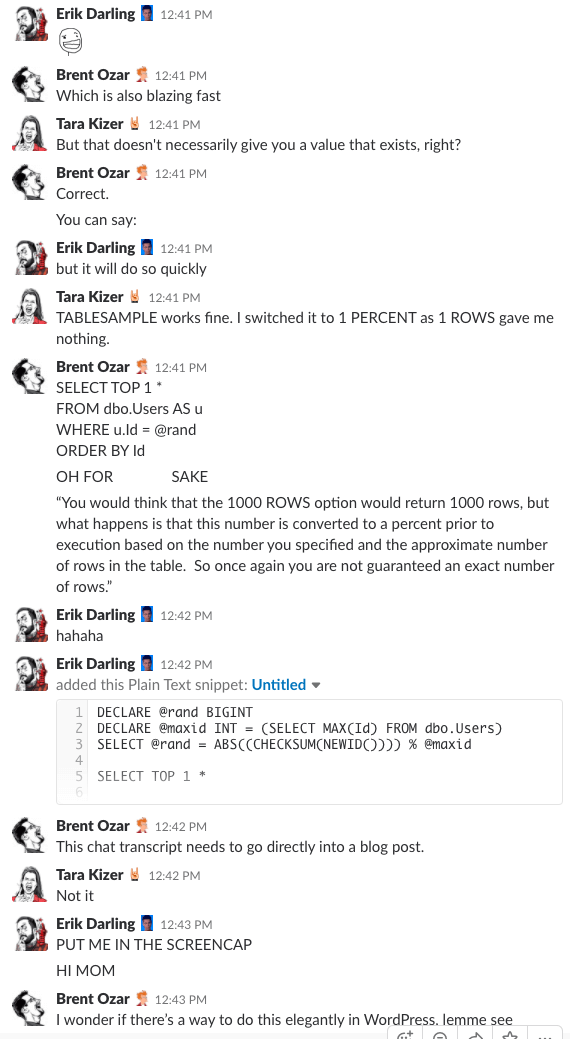

Bonus Track #1: Watch Us Discussing This

Ever wonder what it’s like to be in our company’s chat room? This 10-minute Slack discussion will give you a pretty good idea:

Spoiler alert: there was not. I just took screenshots.

Bonus Track #2: Mitch Wheat Digs Deeper

Want an in-depth analysis of the randomness of several different techniques? Mitch Wheat dives really deep, complete with graphs!

23 Comments. Leave new

Method 4: using OFFSET-FETCH:

DECLARE @row bigint=(

SELECT RAND(CHECKSUM(NEWID()))*SUM([rows]) FROM sys.partitions

WHERE index_id IN (0, 1) AND [object_id]=OBJECT_ID(‘dbo.thetable’));

SELECT *

FROM dbo.thetable

ORDER BY (SELECT NULL)

OFFSET @row ROWS FETCH NEXT 1 ROWS ONLY;

But it only performs properly with a clustered index. I’m guessing that’s because it’ll scan for (@rows) rows in a heap instead of doing an index seek.

Awesome! Added that to the post. Thanks sir!

It seems that chat transcript was heavily censored 🙂

Yeah, the company chat room is, ironically, not safe for work for our readers.

Looks like someone came across Method 3 a little bit ago. I like it! Thanks for the post.

https://stackoverflow.com/questions/9463938/checksumnewid-executes-multiple-times-per-row

This should allow for gaps and always return a row regardless of min and max id values: –

DECLARE @maxid bigint, @minid bigint, @randid bigint, @rand float = rand()

SELECT @minid = MIN(Id), @maxid = MAX(Id) FROM dbo.Users

SELECT @randid = MIN(Id) FROM dbo.Users WHERE Id >= (@minid + ( (@maxid – @minid) * @rand) )

SELECT * FROM dbo.Users WHERE Id = @randid

I always wonder why MS doesn’t look at these things and make them built in. I’m certain it wouldn’t take much effort for a built in rand_row(#) function.

It all depends on how random you want your results to be. With Option 2, assuming you randomly select a row from the returned page(s), and you can get the percent correct, you’ll be oversampling larger rows (and rows in pages with more free space). With Option 3, if you have any gaps in your key, you’ll oversample the rows after a gap (think identity columns where SQL Server decides to bump the identity value by 1000).

I blogged a similar topic a while back and did some basic stats testing: https://mitchwheat.com/2011/08/07/t-sql-generating-random-numbers-random-sampling-and-random-goodness/

It includes your Method 3 which I saw in a MSDN article (not sure where though)

Mitch – wow, you put a ton of work into that post! Nice job. I’ve linked to that in the body of the post because it’s so interesting.

Seems to do the trick :

DECLARE @MxRws BIGINT, @row BIGINT;

AndOneMoreTime: /*Only if the row does not exist*/

SELECT @MxRws = IDENT_CURRENT ( ‘[TableHere]’ ); –Assume using identity on PK

SELECT @row = RAND () * @MxRws;

/*Check that the row exists and has not been deleted*/

IF (SELECT 1 FROM [TableHere] WHERE Id = @row) !=1 GOTO AndOneMoreTime: ELSE SELECT * FROM [TableHere] WHERE Id = @row;

Elliot – ooo, I love that, but as I think about it, both this and method #2 fail when you have negative identity Ids. Doh!

What about taking advantage of the pk (or index) histogram statistics?

Something like:

drop table if exists #stats

create table #stats(

id int identity(1,1) primary key,

RANGE_HI_KEY int,

RANGE_ROWS int,

EQ_ROWS numeric(10,2),

DISTINST_RANGE_ROWS int,

AVG_RANGE_ROWS int

)

insert into #stats

exec(‘dbcc show_statistics(”Users”,”IX_ID”) WITH HISTOGRAM’)

declare @rows int

select @rows=count(*) from #stats

declare @id int =cast(round((@rows-1)* Rand()+1,0) as integer)

declare @low int

declare @high int

select top 1 @low=a.range_hi_key , @high=lead(range_hi_key,1) over (order by id) from #stats a where a.id between @id and @id+1

order by id

select top 1 * from Users where ID >= @low+cast(round(((@high-@low)-1)* Rand()+1,0) as integer)

I have a quick’n’dirty query that I use to get semi-random values from a table. I use time. It depends, of course, on whether one needs a result every execution, how many gaps in the table there are, how representative it has to be and how large the table is.

declare @gosh int = datepart( hour, getdate() ) * datepart( minute, getdate() ) * datepart( s, getdate() ) * datepart( ms, getdate() );

select *

from schemaname.BigTable

where BigTablePK = @gosh

;

This allows me to address a row with 41 million rows. It is not especially repesentative but it does give me new rows on each execution and it is quite fast.

Maybe this is obvious, but I think method 3 is not completely random. What if there are only 2 rows with the id of 1 and 100? “100” will be selected with 99% probability. Can it be improved?

Dismiss it, but ORDER BY NEW_ID() appears to be the only way to get a truly random sample set (more than one record) in your list of methods.

Am I the only person who thinks that 3rd or 4th case is bad if PK key has a large number of big gaps?

because in case of big gaps in PK, it means that the probability of receiving is very large compared to another rows..

e.g.

You have a table for 1’000’000 rows, then you delete 700’000 of first rows

so the probability to receive that 1st rows after from table (after deletion)

is ~70%, where 30% “probability” is spread across another row

Am I correct?

Yeah, method 3 is not random at all, just imagine a table with UserId = {1, 2, 99999 ,100000}. There 99999 will b picked close to always.

To pick a decently random record you need at least a COUNT() of the table, and that requires a table scan. Its the scan that takes time, not the calculation of NewId().

Well, i guess it’s the sorting

Giving each row a random value is the only right way to do random selection unless you already have them numbered without gaps.

If you know you have millions of rows you can filter out maybe 999/1000 of the users and then sort the rest:

SELECT TOP (1)

U.*

FROM dbo.Users U

CROSS APPLY(SELECT CHECKSUM(NEWID())) R(Rnd)

WHERE

R.Rnd%1000=0

ORDER BY

NEWID()

This code should be a lot faster than if you drop the CROSS APPLY and WHERE.

Also, don’t use ABS(CHECKSUM(NEWID())) in production.

This works perfect for me.!! thanks “neverrr neverrr”

This post and comments have helped me thus far. My business case is a tad different. I need a random sample of claims that represent 2.5% of the population. So, if my population contains 3,517 distinct records, I need to return, consistently, 88 distinct records. Here’s what I’ve done thus far:

DECLARE @FirstofLastMonth date = DATEADD(DD,1,EOMONTH(Getdate(),-2)),

@EndofLastMonth date = EOMONTH(Getdate(), -1)

DECLARE @Sample table (ID uniqueidentifier, ClaimNO varchar(50), Company_ID varchar(10), FinancialResp varchar(30), ProviderType varchar(50),

DateOfService date, ClaimType varchar(20), TotalBilled numeric(10,2), TotalPaid numeric(10,2) )

Insert into @Sample (ID, ClaimNo,Company_ID, FinancialResp, ProviderType, DateOfService, ClaimType, TotalBilled, TotalPaid)

Select distinct NEWID() as ID, cd.ClaimNo, cd.Company_ID, cd.FinancialResp,

CASE

when cd.FinancialResp in (‘KEYMA’, ‘SHP’, ‘SFKD’) and lob.LOB_Network = ‘1’ then ‘Contracted’

when cd.FinancialResp in (‘KEYMA’, ‘SHP’, ‘SFKD’) and lob.LOB_Network = ‘0’ then ‘Non-Contracted’

when cd.FinancialResp = ‘KDMA’ and pu.UDF_Value = ‘Y’ then ‘Contracted’

when cd.FinancialResp = ‘KDMA’ and pu.UDF_Value ‘Y’ then ‘Non-Contracted’

else ‘Missing’

end as ProviderType,

Format( Min(cd.FromDateSvc), ‘MM/dd/yyyy’) as DateOfService,

CASE

when substring(cd.ClaimNo, 9,1) = ‘8’ then ‘Institutional’

when substring(cd.ClaimNO, 9,1) = ‘9’ then ‘Professional’

end as Claim_Type,

sum(cd.Billed) as TotalBilled,

SUM(cd.Net) as TotalPaid

from Claim_Details cd

inner join Claim_Masters_V cm on cd.ClaimNo = cm.ClaimNo and cd.Company_ID = cm.Company_ID

inner join Prov_VendInfo pv on cm.Prov_KeyID = pv.Prov_KeyID and cm.Company_ID = pv.Company_ID

inner join Vend_Masters vm on pv.Vendor = vm.VendorID

and pv.Company_ID = vm.Environment_ID

inner join Prov_Master_V pm on cm.Prov_KeyID = pm.Prov_KeyID

and cm.Company_ID = pm.Company_ID

and pv.Default_YN = 1

inner join BM_Prov_HP_LineOfBuss lob on cm.Prov_KeyID = lob.Prov_KeyID

and cm.Company_ID = lob.Company_ID

and cm.HPCode = lob.HPCode

and pv.Vendor = lob.Vendor

and lob.LOB_Start = CAST(CHECKSUM(NEWID(),ID) & 0x7fffffff AS float) / CAST (0x7fffffff AS int)

When I run the above query repeatedly, the results vary in number from around 100 to the upper 80’s and lower 90’s. How do I refine the above to ensure that just 2.5% of the qualifying records are returned in a random sample?

For personalized help, click Consulting at the top of the screen.