I’ve written a terrible query. It might be the worst query in the world. Sure, there are other queries that may be slower, but this query is terrible and elegantly short.

The query is gloriously bad for a two reasons:

- It’s incredibly inefficient in the way it uses a scalar function

- Observing the query’s performance can make it much, much slower

Don’t believe me on #2? Let’s take a look!

Here’s the query:

|

1 2 3 4 5 6 |

SELECT TOP 10 Id, dbo.BadgeCountForUser(Id) FROM dbo.Users WHERE dbo.BadgeCountForUser(Id) > 50 ORDER BY dbo.BadgeCountForUser(Id) DESC GO |

The query uses a restored copy of the StackOverflow database, and the following (terrible) function and related index.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

IF OBJECT_ID('dbo.BadgeCountForUser') IS NOT NULL DROP FUNCTION dbo.BadgeCountForUser; GO CREATE FUNCTION dbo.BadgeCountForUser (@UserId INT) RETURNS INT AS BEGIN DECLARE @badgecount INT; SELECT @badgecount=COUNT(*) FROM dbo.Badges WHERE UserId=@UserId; RETURN @badgecount; END GO CREATE NONCLUSTERED INDEX ix_FunctionAbuser ON [dbo].[Badges] (UserId) GO |

Now, let’s run it and see how long it takes. All my tests are running against a virtual machine on a laptop with 6GB of RAM, SSD storage, and no other activity running. I ran each test a few times and made sure I got consistent results.

SQL Server Dynamic Management Views (DMVs)

The easiest way to measure performance stats for a query using the dynamic management views is to dump the procedure cache, run the query, and then run our free tool, sp_BlitzCache®.

Note that I’m dumping the ENTIRE execution plan cache– I’m running this on a dedicated test instance, so my FREEPROCCACHE can’t harm anyone else.

|

1 2 3 4 5 6 7 8 9 10 |

DBCC FREEPROCCACHE; GO SELECT TOP 10 Id, dbo.BadgeCountForUser(Id) FROM dbo.Users WHERE dbo.BadgeCountForUser(Id) > 50 ORDER BY dbo.BadgeCountForUser(Id) DESC GO exec sp_BlitzCache @hide_summary=1; GO |

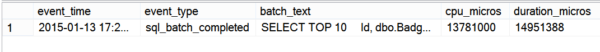

Here’s the duration and CPU from the output:

This gives me a lot of info — my total duration is over 14 seconds. Of that, 9+ seconds were the SELECT statement from inside the scalar function. And by the way, that executed 2.3 million times. WHOA. 2.3 million times? Yep, that’s how bad my query is.

Extended Events (XEvents)

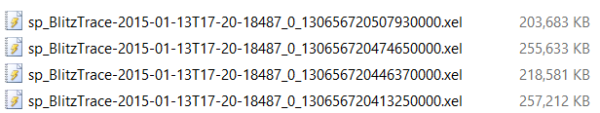

I can easily run an Extended Events trace against my session using our tool, sp_BlitzTrace™ on SQL Server 2012 and higher. Here’s the syntax to do that:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

exec sp_BlitzTrace @Action='start', @SessionId=@@SPID, @TargetPath='S:\XEvents\Traces\'; GO SELECT TOP 10 Id, dbo.BadgeCountForUser(Id) FROM dbo.Users WHERE dbo.BadgeCountForUser(Id) > 50 ORDER BY dbo.BadgeCountForUser(Id) DESC GO exec sp_BlitzTrace @Action='stop'; GO exec sp_BlitzTrace @Action='read'; GO |

According to the trace, the query’s duration and CPU time are in the same ballpark as we saw before:

But I only have one line here– that’s because by default sp_BlitzTrace™ only collects sql_batch_completed and does not collect statement level completion events. You can turn that on, but remember how the dynamic management views told us the scalar function was executed 2.3 million times?

If you capture sp_statement_completed, that means capturing 2.3 million events. Which means your trace starts churning large amounts of data to the file system. And yes, if you collect the statement level stuff, you’ll slow the query down — and besides, querying the trace files is just awful.

STATISTICS TIME AND STATISTICS IO

Another very common way to measure query performance is to turn on STATISTICS TIME and STATISTICS IO. I’m lazy, so I usually do these in a single statement, like this:

|

1 2 3 4 5 6 7 8 9 10 |

SET STATISTICS TIME, IO ON; GO SELECT TOP 10 Id, dbo.BadgeCountForUser(Id) FROM dbo.Users WHERE dbo.BadgeCountForUser(Id) > 50 ORDER BY dbo.BadgeCountForUser(Id) DESC GO SET STATISTICS TIME, IO OFF; GO |

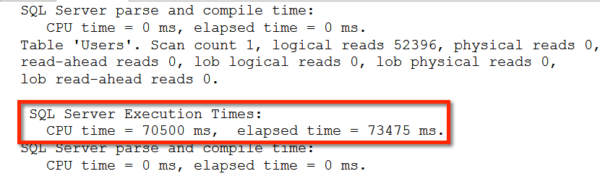

Here’s how that performed:

Wait just a second– those times were different!

Yep, there’s something weird about these numbers. Let’s sum up the CPU time measurements:

- Dynamic Management Views via sp_BlitzCache®:~14 seconds

- XEvents sql_batch_completed via sp_BlitzTrace™: ~14 seconds

- SET STATISTICS IO, TIME ON: 70 + seconds

- XEvents sp_statement_completed: gave up after one query generated 1GB of trace data

Dang, this stuff is complicated.

I still like STATISTICS IO and STATISTICS TIME. I do also like sometimes looking at statement level trace data, too! But with any method of measuring performance, I try to be aware that watching what’s going on can impact the results I’m seeing.

This post was inspired by Grant Fritchey’s recent post. If you’ve got your own story about “the observer effect” slowing things down, I’d love to hear it, too!

Brent Says: Whenever I’m there to watch a client’s server, it seems to go faster, and they’re mad because the problem is gone. Hmm.

9 Comments. Leave new

Some people incorrectly call the observer effect the Heisenberg Uncertainty Principle, which is really defined as the more precise a particle’s position is measured the less you really know about its momentum. But that’s kinda close enough to the observer effect for me.

Therefore I like to call events like you describe as Heisenbugs. They act differently the more you measure them.

Hahaha, love the Breaking Bad reference, too.

https://en.wikipedia.org/wiki/Werner_Heisenberg

I have always found functions to be the bane of existence, in some ways even worse than cursors. If I really need a function I will write it in C and add the function to my typical DLL library of such things and reference those functions to SQL Server. A little complicated and all, but sending the processing outside of SQL Server and using a 4GL makes it worth it.

SET ANSI_NULLS ON

SET QUOTED_IDENTIFIER ON

GO

CREATE VIEW [dbo].[mv_BadgesForUser] WITH SCHEMABINDING

AS

SELECT UserID, COUNT_BIG(*) AS BadgeCount

FROM dbo.Badges

GROUP BY UserID

GO

SET ARITHABORT ON

SET CONCAT_NULL_YIELDS_NULL ON

SET QUOTED_IDENTIFIER ON

SET ANSI_NULLS ON

SET ANSI_PADDING ON

SET ANSI_WARNINGS ON

SET NUMERIC_ROUNDABORT OFF

GO

CREATE UNIQUE CLUSTERED INDEX [CIX_BadgesForUser] ON [dbo].[mv_BadgesForUser]

(

[UserID] ASC

)

GO

USE [Scratch]

GO

CREATE UNIQUE NONCLUSTERED INDEX [IX_BadgeCount] ON [dbo].[mv_BadgesForUser]

(

[BadgeCount] DESC,

[UserID] ASC

)

GO

SELECT TOP 10

Id = UserID, BadgeCount

FROM [dbo].[mv_BadgesForUser]

WHERE BadgeCount > 50

ORDER BY BadgeCount DESC

/* There, I fixed that for you */

A fix that doesn’t require any database changes and won’t impact DML statements is to replace the function with a Temp Table and then join it to the users table. This used 1/5th the logical reads.

CREATE TABLE #BadgeCountForUser (UserId int, BadgeCountForUser int)

INSERT INTO #BadgeCountForUser

SELECT UserId, COUNT(*)

FROM dbo.Badges

GROUP BY UserId

SELECT Id, BadgeCountForUser

FROM dbo.Users

JOIN dbo.#BadgeCountForUser ON Users.Id = #BadgeCountForUser.UserId

WHERE BadgeCountForUser > 50

ORDER BY BadgeCountForUser DESC

GO

A similar observer effect for scalar UDFs was written about by Jeff Moden here: http://www.sqlservercentral.com/articles/T-SQL/91724/

I thought it was particularly interesting given the bad name they have by people taking these kind of measurements. Heh.

Thanks for the article. I’ve been having fun with BlitzTrace, also, while I try to suck less at XE on my own.

Most surely had this happen last week. I am trying to introduce database table and index compression so I had the analyst run some tests with uncompressed tables and had a simple profiler trace running. This produced millions of rows of output because the way the process was coded. Then we did the same tests with compressed data and it was three times faster. I didn’t have a trace running this time. I had a hard time thinking the results were reasonable thinking that maybe data being in cache might have caused the difference (the process was getting data from another database than the one I had compressed). So I had the tests rerun with the compressed run first and the differences were a lot less. Then I read this post and understood.

Chris

[…] Measuring Performance Can Slow SQL Server Down […]