Performance tuning of independent software vendor (ISV) databases is a little tricky.

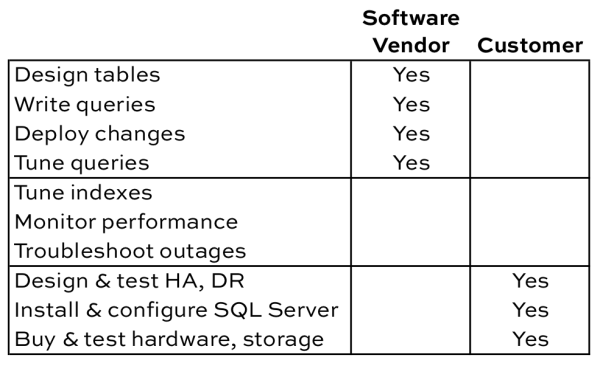

To understand who does what, let’s think through all of the work required with building and hosting a database application:

Typically the two parties involved – the vendor and the customer – start at opposite ends of the table. The software vendor starts by designing their tables, and gradually works down. The customer starts by buying hardware, and works their way up.

In the middle, things kinda fall apart.

When I was a DBA, I thought it was the vendor’s responsibility to put the right indexes on their tables. It’s their app, right? Aren’t they supposed to know how people query their own app? Why am I responsible for reinventing that wheel? Will I lose support if I add indexes?

Now that I’ve worked with a lot of ISVs, I see things differently. Here are their challenges:

Every customer uses the app differently. Some customers really hammer a particular feature hard, whereas others never use it at all. If you index for every possible feature/query, then the database will be dead slow for delete/update/insert operations.

Customers don’t give feature usage or index usage data back to the ISV. Software vendors have no way of knowing what indexes are working well out in the field, or sometimes even know what queries are running on the server. This comes back to our responsibilities grid at the beginning of the post – often even the customers aren’t running any performance monitoring software on their app at all.

Customers won’t upgrade to current versions quickly. Sometimes the ISV fixes performance by improving queries or adding the right indexes, but they only add it in the current version of the software. It takes development time to backport the fixes to older versions, and software vendors only have so much time. If you ask end users if they’d rather pay for new features or backporting stuff to older versions, they vote with their wallet.

Some customers have really bad DBAs (or none at all). Time and again, I’ve seen “DBAs” add dozens – or in one case hundreds – of indexes to an ISV’s tables in an effort to improve performance. The first few indexes make things better, so they start solving every problem by adding more indexes. You can guess how that ends up. As a result, the ISV’s support team starts making rules like “no customer is allowed to add indexes.”

Building a Successful Relationship With Your ISV

When you install a software vendor’s database, show them the list of responsibilities at the top of this post. Say, “I agree to do the stuff in my column – if you’ve got any guidance on things you’d like me to do for your application, send ’em over, and I’ll be glad to follow them.” For example, the vendor may have a list of trace flags they’d like to enable for their app.

Then say, “For the stuff in the middle, who’s responsible?”

If the vendor agrees to tune indexes, then you’ll probably need to give them index usage data and plan cache data on a regular basis to help them tune. I like to be my software vendor’s best customer – I’ll say, “I’ll send you the output of sp_BlitzIndex® and sp_BlitzCache® every week or every month, whatever works for you. I’ll be glad to take my time working with you on the output, and together, we can make the software experience awesome for everybody.”

You’ll be surprised by how often they accept because they’re desperate to find customers willing to give them some diagnostic data about how their software works in the wild.

If the vendor says you’re responsible for tuning indexes, then:

- Instead of dropping their indexes, disable them, and let their support team know which ones you’re disabling

- Create your own indexes with your company’s initials, like BGO_CustomerNumber_CustomerName, so you can quickly identify which ones are yours versus which shipped with the product

- Run sp_BlitzIndex® every month to make sure your indexes are actually helping, not hurting

- When it’s time to deploy a new version of their app, enable the vendor’s indexes again first, drop your own indexes, run their deployment scripts, and then set things back the way you had ’em (this way you minimize the chance of their deployment scripts breaking)

There’s no right or wrong choice – for example, Sharepoint customers aren’t allowed to touch the database, whereas SAP customers are encouraged to do their own index tuning through the SAP UI. The key is knowing which method your vendor supports right from the start. Otherwise, both sides just assume the other side is taking care of the problem.

24 Comments. Leave new

Hi Brent

Thanks for the good post!

I am in the software-vendor boat, and as you say, we would love to get more usage data out of our customers.

What would you say if we would like to include sp_BlitzIndex and sp_BlitzCache as part of our application to be able to send periodic usage data back to us as a vendor?

Thanks!

Michel

Michael – sure, contact us at help@brentozar.com for licensing costs. (It’s free for folks to use on their own systems, but our license specifically prohibits redistribution of our code. We’ve run into issues where companies distributed our code and took out all the credits, or didn’t keep it up to date, or had us answer support calls for their customers, heh.)

Brent, I’ve acted as both Customer Data Guy and ISV Data Guy during my career so I think I understand both sides of the issues. I was the Data Architect for a hospital information management system that had customers with databases ranging in size from 20GB to 8TB. I agree wholeheartedly with much of what you’ve written, but I have vehement disagreements that I want to share, and then remedies I’ve devised. For context, the HIMS system had large customers that hired MVPs (who shall remain nameless) to critique our databases (and indexes)…mostly because, well, they sucked. In most cases we (I) did really asinine stuff and we fixed it per the MVP recommendations. However, in some cases even though the indexing strategy on the surface looked downright stupid, there actually were good reasons for it and after *WORKING WITH* the customers/MVPs we all agreed that it was best to keep things as is…for now.

“Customers don’t give feature usage or index usage data back to the ISV.” It shouldn’t be the customer’s job to do this. The ISV should have processes that minimally squirrel away the missing index DMV info (as well as other stuff…blocking, indexes that diverge from model, etc) that can be remotely retrieved by the ISV if the customer has complaints. The customer also shouldn’t need to run perf monitoring software *if* the ISV has planned accordingly. My app deployed its own monitoring solution that logged interesting metrics to some tables. Customers liked it better than the commercial tools because it was lightweight and was customized to log things we know our software sucked at.

“It takes development time to backport the [index] fixes to older versions”. I disagree. Unless the proposed index is on a table where the development team knows that the data access has undergone refactoring in newer versions, then the fix is benign and should require just a small amount of regression. My ISV then of course had the legal documents ready that were also given to the customer that absolved them of any sins. My point is…good ISVs want to keep the customers happy, without forcing constant upgrades. Backporting simple things like better indexes for performance is a no-brainer. I don’t know why IT Pros fight this. It’s probably because we are all taught to be agile and “release often”. Well, hospitals can’t always do that. We still have customers running SQL 2000 and versions of our software that are 8 years old. And we still backport when we can.

“When it’s time to deploy a new version of their app, enable the vendor’s indexes again first, drop your own indexes, run their deployment scripts, and then set things back the way you had ‘em (this way you minimize the chance of their deployment scripts breaking)”

That’s a really bad idea. Here’s an example we had…customer added two included columns to hundreds of indexes (ignore the bad design for now). It solved performance radically. They did not tell us. We independently discovered the same problems and we added those same two included columns to the SAME hundreds of indexes, however, with different ordinal positions. The customer followed your process. They even used Redgate tools to spot “duplicate indexes” between our new model indexes and their custom indexes. Of course the Redgate tool did NOT spot the overlap since both the index name and its col list was different. Immediately the customer began experiencing deadlocks. It only took me a few mins with my monitoring tools to spot the problem. My tools look for those “duplicate indexes”. We removed the custom indexes and everything was ok.

Another example…let’s say the customer applies IndexA to TableB to solve performance problems in Version X. For Version Y the ISV refactors the performance problem by changing Java code, moving cols from TableB to TableC, etc. ISV performance testing shows everything is good *without Index A*. Now the customer reapplies IndexA to TableB but the data access is different in Version Y which leads to blocking/deadlocking/etc.

I think the biggest problem is that ISVs don’t provide customers with good tooling to do monitoring. The next biggest problem is ISVs treat customers like dumbasses and don’t value their opinions and observations. The right thing to do is work collaboratively with good tooling. The latter is difficult to do because no good commercial tooling exists to handle issues like spotting “duplicate indexes” that are not “exact” duplicates.

Dave – wow, looks like I’ve hit a nerve with you! Glad to see you got a lot out of the post.

It sounds like you’ve found a set of best practices that work well for you. I’d just like to point out that not every ISV has the manpower to roll their own monitoring solutions, but I’m glad to hear you do. Thanks for stopping by!

I agree with you re manpower.

Wouldn’t it be an interesting entrepreneurial activity to expand your “blitz” offerings to add functionality like the things I’ve mentioned that ISVs could license and redistribute? Centrally monitor blocking, index metrics, PLEs, deadlock graphs, io stalls, objects that diverge from “model”, blah blah. I’ve still yet to see a good commercial tool that can do this that an ISV can integrate and will report back without a clunky UI that gets in the way.

Add the cachet of the Brent Ozar moniker to it and there’s your next million.

My customers LOVE my tool and some have even ditched their 3rd party monitoring tools entirely. I guess there’s no market…

Dave – why not open source it? Sounds like you’ve got a winner on your hands and the community might really be able to help extend it even further.

I have done that. Problem is it lacks the cachet of a “Brent Ozar” to take it to the next level. There are lots of radically ingenious FOSS tools out there that toil in anonymity b/c the inventor(s) lack branding skills. You rule when it comes to branding (among many other things).

Or maybe the problem is this a solution in search of a problem?

Dave – oh, great! What’s the URL of the open source project?

PerformanceCollector: http://git.davewentzel.com/repo/performancecollector

Documentation: http://www.davewentzel.com/PerformanceCollectorSeries

Metadata Driven Database Deployments: http://md3.codeplex.com – This is the utility I use for ISV database deployments instead of say redgate or VS dbprojects. However, it follows the unix paradigm of “do one thing and do it well” so you can rip out the index tool and it will compare your database to model and note index divergences (among many other things), as well as automatically bring a database back to model.

There is another piece to this tool too that I haven’t had time to release but would love to share the code. It does (near) zero downtime database upgrades. It upgrades any database to “current” from any previous version, even if that requires refactoring HUGE tables with lots of data movement. It uses service broker and “alter schema transfer” to accomplish this. It’s Oracle GoldenGate for SQL Server basically. Yes I have implemented it and it does work. It usually takes our java guys longer to upgrade their jvms than it takes our DBAs to upgrade our databases. (And this is all MY code, developed by me on my own time).

Dave – hmm, okay, some quick feedback.

I edited your comment to keep the URLs separate. It’s critical when you post links to places that you keep URLs on their own, or else they blend together and people get 404s when they click on ’em. (I did, heh.)

Second, I’d highly recommend posting your code somewhere that people can find it – ideally Github. Having your own code repository can be fun and easy to control, but you lose the easy forking and permanent history. Other folks may not be comfortable working on someone’s code repository that can disappear at any time.

Next, there’s no license mentioned anywhere on the readme.md. It just says “open source,” but that can mean many different things to different people. I’d start here: http://choosealicense.com/licenses/ It’s a simple comparison of the most common open source licenses.

Once it’s licensed and on a public code repository, then people can get involved and do things like make the introduction more compelling. Right now, it basically leads off with “run this PowerShell script as a sysadmin and things happen” – that’s kinda terrifying, heh. I’m not saying you have to build a simple marketing page, because other folks can help you with that once it’s in a stable place with intuitive licensing.

Hope that helps!

I understand what you are saying…there’s only so many non-billable hours in a day for this stuff.

I’ll see what I can do and I do appreciate the feedback.

Dave – totally agreed, and I’m only saying it because you mentioned that “it lacks the cachet of a “Brent Ozar” to take it to the next level” – that’s not the real challenge here. No sense in me reinventing something that you might have already invented, and just need to switch things around a little to get the adoption you’re looking for.

Thanks for sharing, Dave!

Coming from the ISV side, please don’t make changes to the database without consulting with us (the ISV). First off, the likelihood that someone will remember to remove their custom index before a software upgrade is just about nil, and you have no way of knowing what that change will do the upgrade process if it’s left in place.

Second, when that change does break something, the customer won’t even think to ask the DBA if his change could have caused the problem, they will just immediately call the ISV to say, “the software stopped working”. Changes to the database will not be mentioned. Why do I say this? Because I routinely encounter customers who’s software hasn’t changed in months saying, “the software stopped working”, neglecting to mention something else in the network they upgraded/reconfigured.

I deal with over 75 ISV’s/vendors in Healthcare and the vast majority do not have DBA’s, and if they do, they are buried so deep and I rarely get to talk directly to them. Its usually a guy that knows enough to get by, or they outsourced it and this is what they got. Most aren’t even familiar with SQL Licensing, nor do they know the differences between ISV and Retail Licensing.

I do proactively optimize queries on some of these, but I always submit the changes for approval to the ISV. Once, it took me 6 years to get one clustered index changed on a large database with very heavy I/O, we had to throw massive amount of hardware at it before that. This was on a 24×7 mission critical database.

The vast majority of Healthcare ISV’s will not support any Microsoft HA solutions (AG, Mirroring, Log Shipping, etc), and some require SIMPLE recovery model (mostly because they are afraid the log file will grow to big). Yes you could do the above, but the ISV would not support the application if you did.

Thanks for letting me vent, and am sorry but I could go on all day.

Jeff

Jeff – that’s pretty much the case in all software industries. DBAs are really hard to find, and they’re expensive to boot. The funny part is that you’ll find that most software vendors say the same things about their customers, too – not enough of ’em have DBAs.

The bright side is that you’ve got great job security. 😉

Great post Brent. I come from the customer side as dba. Performance monitoring is part of my job and I had some busy applications and databases during my time. Two examples.

First one was a db (45gb) with one table with a missing index – well there where 5 index’es on the table but none of them really worked. So I contacted the vendor and asked if they could tune these indexes. I even offered them my sqlprofiler file so they could replay the load. No feedback. Added this missing index myself (just used the tuning advisor) and 1.5million hits (on the index) per hour. This reduced the io greatly on the server.

Second was another vendor which I contacted. They where very openminded and invited be to a conference. I did send to them index usage statistics and started to discuss the index and table design. With ISV software there could always be a module of the software that could be affected so discussing my findings with the dev team really helped – both sides. So what I did was to provide profiler traces (normally 2 hours) and they incorporated the tuned indexes into future service packs. They came about 2-3 months later which I was very satisfied with.

Thanks for your blog and tools Brent!

Rune – you’re welcome, glad we can help!

I’ve recently come across this issue too. We have some clustered instances on mount points that have underlying storage replicated across sites for DR and databases share those mount points to reduce the overall storage costs. Despite IT waffle, storage in a third party data centre isn’t cheap and it costs our company well into the thousands per TB per server per month (and we have hundreds of servers plus a recent eye on cutting costs)!

Anyhow, an ISV had an application upgrade where they brilliantly cleared out the data from a database and then added fancy new indexes that had included columns of every column on every table (which tripled the number of computed columns, and as many will know this slows down CHECKDB exponentially).

Everything tested fine. A few weeks later in production as data accumulated, CHECKDB had blown out from hours to days, and their log space requirements increased from 0 to 40GB+. I say + because that’s when all our log disk space filled up and caused a mass production outage across all the databases on that mount point. As a result I’ve disabled CHECKDB on that database temporarily.

I went back to the vendor and asked why these new indexes were needed. They said they were hand tuned for extra performance. I tested it myself, found zero benefit from them, went back and explained the results of my tests, the problems we were experiencing (CPU time, disk space, outage), and that I would like to get integrity checks going again and remove the unnecessary included columns.

Unfortunately we were then stuck at an impasse about what to do about it. Add a quantity of disk at a massive and ongoing cost? Have the vendor to do investigation and issue a patch with all of the paperwork and costs involved (spinning up a project team to do all of the necessary tasks like contracts and payment and UAT etc). I don’t have any authority to even start that process.

Or do we (the DBAs) just do what we need to do to get our production environment going safely again with integrity checks by removing the unnecessary included columns… knowing full well that it could potentially though unlikely have some impact down the line with vendor upgrades.

So it is an interesting topic. As a DBA, I’m on the side of doing what’s necessary when it’s important (e.g. changing the indexes when there is no viable alternative). However because our environment is so large we don’t have a process and repository and resources for checking each server for our custom changes and removing them before each refresh of DEV from PROD, or when applying upgrades up the server path (DEV->TEST->PROD etc).

It’s a bit of a pain point. But the real problem is that I’m a very small cog in a very large machine with all kinds of parties involved – our business (management, users, the project, the project managers, my team, and my managers), and then the vendor, and all of the unknown contracts between. I have to be careful about whose toes I’m going to step on and how much I get involved.

At the risk of repeating something that’s already been said in previous comments (because I didn’t read them all) I want to add my voice to “Every customer uses the app differently”

As a vendor it’s surprising by just how much. To use an analogy, it’s as if we sold pools and expected little differences (this customer likes to dive, that customer likes to do laps). But then we discovered that there were HUGE differences in how customers use it. Like this customer raises alligators, and that customer keeps shoveling crap into it and should have bought a septic tank.

Michael – yep, that is so true, and it seems that every ISV learns that lesson the hard way. People use apps in crazy, crazy ways.

The last company i worked for was an ISV with applications using Microsoft SQL Server. We also experienced that our customers used our apps in many different ways our developers were not even capable of thinking in their wildest dreams. We would have been very happy if we had customers that provided us with a typical workload of their/our app so that we had some “typical” indexes that most of the customers had in common. But it was not the case. Due to the lack of experienced DBAs or the lack of time or whatever.

Nowadays: Lucky me as a DBA that was able and also authorized by vendor’s documentation to start investigation of additional indexes on the database.

From the Performance Documentation:

“Indexing a database can significantly improve performance by providing faster searching. However, take care with indexing, because it can affect data writing and deleting because the indexes also need to be updated. Indexing also makes the database larger.”

As we all know, very true.

With the help of sp_BlitzIndex i was able to identify and delete duplicate indexes that did not make any sense (just nine of them) and add a whole bunch of useful indexes with included columns.

I recorded two hours of workload during business times and replayed them using Distributed Replay from a SQL Server 2012 Instance and analyzed the two workloads using Qure Analyzer.

I was able to reduce:

– duration by 59% – 6,93 days

– CPU by 32% – 2,47 hours

– Reads by 18% – 6 TeraByte less I/O

Of course some metric was even worse:

– Writes by 46%

This is for an application that has a Read / Write Ratio of 6926

So i was able to improve the end users experience a lot.

Best of all: The vendor provides his customers with a T-SQL Script to check if the custom-applied indexes do have an impact on future software upgrades.

This test was also passed.

I just ran BlitzIndex on an ISV database I manage. After 15 minutes of running it returned 302,000+ rows. That’s few findings to wade through. I haven’t approached the vendor yet on changes, but I’m not too optimistic.