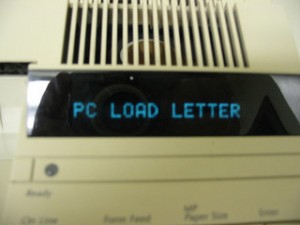

Scary SQL Surprises: Crouching Tiger, Hidden Replication

The more SQL Servers I see, the more scared I get. There are a staggering number of ways that your server can sneak around behind you and … well, let’s just say you shouldn’t drop the soap in the datacenter. As part of my health checks, I’m used to verifying that full backups are done…