Our developers have come to us with a problem query that isn’t as fast as they’d like. Using any Stack Overflow database:

|

1 2 3 4 5 |

CREATE INDEX DisplayName ON dbo.Users(DisplayName); GO SELECT * FROM dbo.Users WHERE LEN(DisplayName) > 35; |

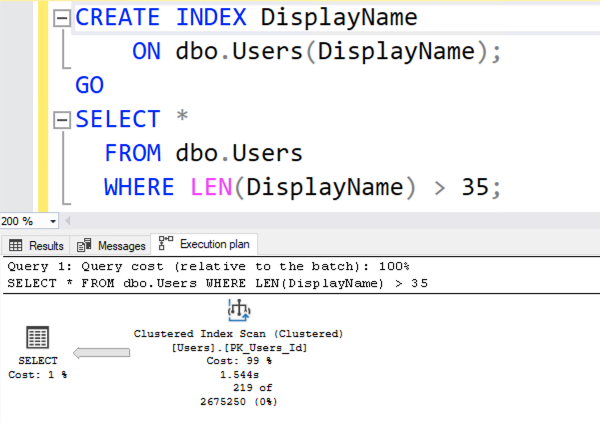

It has an index, but SQL Server refuses to use that index in the execution plan:

If we force the index with a query hint, we do indeed get dramatically lower logical reads. In my particular database’s case, the clustered index scan is 141,573 logical reads – but scanning the DisplayName index alone is just 38,641 logical reads.

Your Query Exercise challenges this week are threefold:

- Why is SQL Server’s estimated number of rows here so far off? Where’s that estimate coming from?

- Can you get that estimate to be more accurate?

- Can you get the logical reads to drop 100x from where it’s at right now?

Post your solutions in the comments, and feel free to ask other folks questions about their solutions. If your solution involves code and you want it to look good, paste it as a Github Gist, and then include the Gist link in your comment.

The Query’s Backstory

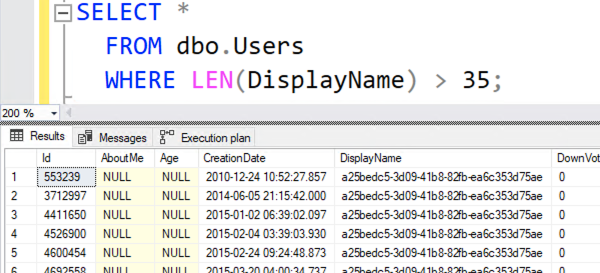

Someone’s going to sneer, “Well the answer is clearly that you shouldn’t be querying by the length of a column.” Yes, but… in this situation, whenever a user pushes the GDPR right-to-be-forgotten button, the app is currently setting the Users.DisplayName column contents to be a GUID:

In the web site, the DisplayName length is limited to 35, so anyone with a GUID DisplayName (length 36) is someone that we need to process for the GDPR. Our right-to-be-forgotten process takes some time to run – we have to purge their data from several systems, like reporting – so we keep the user around while the app slowly works through those systems. We can’t just delete their row.

Yes, it would be nice to add a column like “UserStatus” or “ForgetMe” and have the app set that, but here in the real world, we don’t always get to redesign entire processes just because a query is slow. Try to get across the finish line quickly, as if you have 100 other things that are fighting for your attention too.

You know, like your day job. The one you’re avoiding right now. (Did I say that out loud?) When you’re done giving it a shot, check out my answers & discussion in this post.

43 Comments. Leave new

I would try to solve this with a Calculate column and a Index over that column, assuming that basic best practices are around and the application doesn’t use SELECT * or similar.

> assuming that basic best practices are around

Call me when you find that shop. 😉

Just joking though. Feel free to work through that to see if it works!

Also assuming that the small query change is feasable

Let’s do this: stop doing small messages, and do the exercise described in the post. Hope that’s fair!

SELECT *

FROM dbo.Users

WHERE DisplayName LIKE ‘____________________________________%’;

This snippet of code (we can remove % sign if we’re that the string is not longer than 35 character long ) does what’s required (uses newly created index in efficient way)

* obviously I’ve meant 36 character in previous post – I’ve made mistake myself counting those underlines 🙂 . So here’s more elegant and clear way to put it:

SELECT *

FROM dbo.Users

WHERE DisplayName LIKE CONCAT(REPLICATE(‘_’,36),’%’)

;

Pioter – gonna give you a little tough love here. You literally didn’t answer a single question of the 3 homework questions.

Sorry, but you’ve guessed it – I’m exactly in the middle of my work duties – and I literally hate writing anything that is not a code (or website comments)

Understood – this kind of challenge isn’t a good fit for you then. (And again, trying to be gentle – your answer is flat out incorrect.)

Okay, now it’s a matter of ambition 😀

1. Why is SQL Server’s estimated number of rows here so far off? Where’s that estimate coming from?

Because of the function used on column engine can’t use cardinality from statistics and uses static value for unknown

2. Can you get that estimate to be more accurate?

It can be done if you create a computed column as LEN(DisplayName), and then create index on it

3.Can you get the logical reads to drop 100x from where it’s at right now?

Probably depends of the version of the DB, I got drop from 1142 to 6 after only performing steps mentioned in question 2, but it’s possible to play with covering index in order to get rid of Key Lookups in the plan

There you go! That’s much better. Good work!

1. LEN isn’t sargable, therefore the estimate is all the rows in the tbale.

2. – Yes

3. Using StackOverflow 2013 – down to 6 reads, from 44530:

alter table users add nameislong as (case when len(displayname)>35 then 1 else 0 end)

CREATE NONCLUSTERED INDEX [longdisplayname] ON [dbo].[Users]([nameislong] ASC)

SELECT *

FROM dbo.Users

WHERE nameislong=1

Good shot! Now, a tricky clarification question: in your answer to #1, you said SQL’s estimate is wrong because the query isn’t sargable. Are you saying non-sargable queries can’t get good estimates?

They can sometimes – this was a quick response. There’s tricks we can use but it’s easiest to use sargable criteria 😀

Would have to type more on that long subject later ?

The question mark was supposed to be an emoji 😉

Rather than being general, be specific: why isn’t THIS query getting good estimates, and can you get THIS query to have good estimates? (You answered #2 with “yes” but you didn’t actually do that work. You got a DIFFERENT query to have good estimates.)

1. 2675250 is 30% of the total number of records (8917507) in the Users table. That’s a suspiciously round number, so I’d say we’re dealing with hard-coded estimates in this case.

2. Yup, 219 out of 219

3. Down to 6 logical reads.

Assuming we can’t change the query (which we usually can’t) the best option is an indexed view – https://gist.github.com/VladDBA/a09b4f58686e03642a8565a2614780a1

Is it ok if I cover this in a blog post?

Another options would be, like Pioter mentioned, to create a computed column and slap an NC index on it – https://gist.github.com/VladDBA/176e943b80d763c46c10997dfc70c563

But while that would satisfy the WHERE clause, the fact that we’re doing “SELECT *” incurs a key lookup, which the indexed view route doesn’t require.

Absolutely excellent work! Five stars!

Thank you!

In case anyone’s curios, here’s the blog post – https://vladdba.com/2024/02/15/find-long-values-faster-my-solution-to-brents-exercise/

Great post! I’m going to link to that in next week’s answers discussion.

* cries in standard edition *

For enterprise edition (or developer edition) the indexed view solution works great because expensive edition will take the indexed view into consideration when building the query plan for queries against the base table (with caveats that don’t apply here) and you probably wont need to change the query at all.

With standard edition, you would need to update the query to run against the indexed view with the (noexpand) hint.

If I’m changing the query (because I’m using standard edition in production) I would probably go with an indexed computed column of len(displayname) and eating the key lookups as “good enough” to avoid schema binding and having to drop and rebuild the indexed view when we wanted to do something in the future if the good enough version gets me over the finish line.

Odd, when I tried the indexed view approach, the estimate was still ~740000 rows instead of the full table ~2.5 million rows (the 30%) so i didn’t think that was going to be the way to go. Maybe my version of stackoverflow isn’t big enough. Compat 150.

Pfffft well nevermind. Before I hit Post Comment I cleared my cache (yes local machine) and now magically the indexed view is estimating 1 row. Cool! (I guess :D)

Here’s my answer, an indexed view tailored to return GDPR users. Returns 358 rows in 186ms and a match of 358 rows between estimated and actual rows.

(This is using the large database from 2022-06 running on SQL 2022 Developer edition in 160 compatibility)

CREATE OR ALTER VIEW v_gdpr_users

WITH SCHEMABINDING

AS

SELECT Id,

AboutMe,

Age,

CreationDate,

DisplayName,

DownVotes,

EmailHash,

LastAccessDate,

Location,

Reputation,

UpVotes,

Views,

WebsiteUrl,

AccountId

FROM dbo.Users

WHERE LEN(DisplayName) > 35;

GO

CREATE UNIQUE CLUSTERED INDEX udx__v_gdpr_users__ID ON dbo.v_gdpr_users (Id)

WITH (SORT_IN_TEMPDB=ON,FILLFACTOR=100);

GO

SELECT * FROM v_gdpr_users

GO

Stats:

(358 rows affected)

Table ‘v_gdpr_users’. Scan count 1, logical reads 8, physical reads 0, page server reads 0, read-ahead reads 0, page server read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob page server reads 0, lob read-ahead reads 0, lob page server read-ahead reads 0.

To answer the questions (which I neglected to do, LOL):

1. The LEN function does not allow for an index seek, so the optimizer is forced to perform a scan on whatever index it chooses (or you force upon it).

2. Yes with an indexed view.

3. Yes, the clustered index scan on the indexed view is 8 logical reads.

The part you DID do was great though! Excellent work.

Now, about #1 – so are we saying that if we can’t get an index seek, our estimates will be wrong? Is it impossible to get good estimates when there’s no seek?

It’s possible to get a good estimate with a seek, as the solution shows. But the view has already eliminated any values outside our search parameters, so the estimate is identical to the actual row count returned.

Since the DisplayName column is an NVARCHAR(40), the optimizer does not know the string length directly, and thus can’t know how many rows might fit the WHERE clause at compile time.

Vlad calculated above that the estimate was exactly 30% of the total table size, which is consistent with my estimates as well (5,376,7390 out of 17,922,426 rows). I was curious about the reason for this (there has to be a reason, right? 🙂 ), so I did some digging. The reason appears to go back to the prehistoric times of data science, according to a blog post by Dmitry Piliugin on SQLShack in 2018:

https://www.sqlshack.com/cardinality-estimation-concepts/

“Guesses

When there are no statistics available or it cannot be used, the optimizer has to guess. One of the most important guesses is inequality (“>” or “ 35), it falls back on the 30% rule.

Wow that didn’t format well at all… LOL

Vlad’s observation:

When there are no statistics available or it cannot be used, the optimizer has to guess. One of the most important guesses is inequality (“>” or “ 35), it falls back on the 30% rule.

My conclusion:

Because the optimizer has no statistical data on the length of string column, and our WHERE clause is an inequality (WHERE LEN(DisplayName) > 35), it falls back on the 30% rule.

Bill – I need you to read this very carefully.

> Now, about #1 – so are we saying that if we can’t get an index seek, our estimates will be wrong? Is it impossible to get good estimates when there’s no seek?

You keep saying, “When I get an index seek, the estimates are great.” Yes, yes, I hear you, but YOU ARE NOT HEARING ME. Read that line above very carefully. It’s a pair of yes or no questions. I’m trying to gently lead you to water without shoving your head in the pool and screaming at you to drink.

WINK WINK

Sorry, I mis-worded it, and proofreading IS fundamental… 😀

The first sentence of my response should read:

It’s possible to get a good estimate with a SCAN, as the solution shows.”

Yay! Okay, good! There you go! You nailed it. Good work!

[…] this post I cover my solution to Brent Ozar’s recent query exercise, where we needed to optimize the query so that it’s able to find long values […]

[…] Query Exercise: Finding Long Values Faster (Brent Ozar) […]

1 – when you put a function around a table column it is no longer SARGable, so the server has no statistics etc. and assumes 30% of the whole table to fit the condition

2 – create a computed column for the length and index it

If you don’t want to / can’t do it:

since the Names longer than 35 are always an UNID and UNIDs are hex, you can filter for

DisplayName LIKE ‘[a-f0-9]%’

it will reduce the estimates from 740k to 306k (still to much, but lower :-). If you add a few (but not too many) more [a-f0-9] to the LIKE condition, it will become more accurate

3 – easiest way (on SQL 2022 Dev/Enterprise) – decreases the reads from 44.5k to ~2,3k on StackOverFlow2013:

Just use a subquery and make the key lookup manually by joining the users table itself. It would produce a plan with an adaptive join, so it would be fast, even if a whole lot of users suddenly decides that they want no longer be known by StackOverFlow.

SELECT u.*

FROM (SELECT u.Id FROM dbo.Users AS u WHERE LEN(DisplayName) > 35 AND DisplayName LIKE ‘[a-f0-9]%’) sub

INNER JOIN dbo.Users AS u

ON u.Id = sub.Id

A longer LIKE (multiple [a-f0-9]) would not help in this case, since it will start to use hash / merge joins then – except you force a loop join, which could hurt you in a few years.

Of course there are many other approaches (as a computed column with a filtered index (only those with len > 35) on it etc.), but this would cause more stuff to do / maintain that we may not want in our database for rarley used queries.

Thomas – whenever I start to write something in absolute terms, I often say, “If what I’m about to say is wrong, how would I prove that it’s wrong?”

So for your answer for #1, if you believed that was WRONG, what queries might you write to disprove it?

For example:

SELECT * FROM dbo.Users

WHERE RTRIM(DisplayName) = ‘Brent Ozar’

SELECT * FROM dbo.Users

WHERE YEAR(LastAccessDate) = 2037;

Those are functions – do they get 30% estimates?

(I should add, the other answers aren’t quite right either, but this first answer will start you on the journey.)

you are right, there are exceptions, RTRIM because SQL Server ignores the spaces right to a CHAR/VARCHAR column when comparing and date functions are sometimes granded with a special treatment 🙂

SELECT * FROM dbo.Users WHERE DisplayName + ‘ ‘ = ‘Brent Ozar ‘

would return the same user as without the spaces after your name or after the DisplayName.

On the other hand it is hard to explain / understand for an unexperienced developer what exactly / why there are exceptions, so it is easier to remember the “do not use functions on the table side whenever there is another way” rule.

In your example the RTRIM() is obsolete and shouldn’t be used anyway and the junior may have wasted 5 seconds to write LastAccessDate >= ‘20370101’ AND LastAccessDate < '20380101' instead of the YEAR() function, but could be sure, that he didn't implement a potential problem.

And the senior dev would not have given a bad example, that someone else may encourage to adapt it to

SELECT COUNT(*) FROM dbo.Users AS o WHERE DATETRUNC(YEAR, o.LastAccessDate) = '20100101'

or

SELECT COUNT(*) FROM dbo.Users AS o WHERE DATE_BUCKET(YEAR, 1, o.LastAccessDate) = '20100101'

or even

SELECT COUNT(*) FROM dbo.Users AS o WHERE CONVERT(CHAR(4), o.LastAccessDate, 112) = '2010' (seen already)

since he thinks / was told, that date functions are all SARGable (all three estimates just one row instead of ~85k, which would/could lead to slow nested lookups, if he would join the Users table).

Regarding answer 2: I had to do a UPDATE STATISTICS dbo.Users to get a better estimate on the indexed computed column, but the LIKE still reduces the estimates (and more important the reads), since it does not longer scans the whole index but just everything that starts with a number odr 'a' to 'f'.

Adding a second [a-f0-9] may exclude a few more e.g. "Brent", but since the statistics have only 200 real steps, more than 2 "letters" will no longer be exclude based on real statistical values but based on guessings. So with too many letters in your LIKE it will start to under- or overestimate again (and of course you have to be aware of the dashes in the unid)

And for answer 3:

I forgot, that you can't filter on a computed column (should have tested it before, but it was no my "main approach"). The use of a subquery with an adaptive join is in my opinion still a valid solution, particularly when you don't want to make changes to your database (computed columns / indexes / indexed views …)

And 2,3k Reads instead of the orignal 44.5k are not that bad – but it always depends, when / how often you use it to decide if it is good enough or you need the best possible solution.

1.Why is SQL Server’s estimated number of rows here so far off? Where’s that estimate coming from?

Is it hard coded to use roughly a third of the table?

2. Can you get that estimate to be more accurate? Yes with the statement:

“alter table users add [length_displayname] as (len(displayname));”

3. Can you get the logical reads to drop 100x from where it’s at right now? Nope, but using the statement in answer 2, it drops by 4x. Ran out of time

Excellent work on #1 and #2!

lightbulb and facepalm combo….For question 3.

create index length_displayname on dbo.users(length_displayname);

Down to 6 logical reads (from 10376)

There you go, bingo!

really appreciate these exercises, hope you can keep them coming!

Glad you like ’em! I have a really good time seeing everybody’s answers.