Writing new code = bugging. That part’s easy.

Taking those bugs back out, that’s the hard part.

Developers are used to their tools having built-in ways to show what line of code is running now, output the current content of variables, echo back progress messages, etc. For a while, SQL Server Management Studio also had a debugger, but it was taken out of SSMS v18 and newer versions. Even when it was around, though, I wasn’t a big fan: SQL Server would literally stop processing while it stepped through your query. This was disastrous if your query was holding out locks that stopped other peoples’ queries from moving forward – and you just know people were using it in production.

I do wish we had an easy, block-free way of doing T-SQL debugging in production, but T-SQL debugging is different than debugging C# code. So if your T-SQL code isn’t doing what you expect, here are a few better ways to debug it.

Option 1: Use PRINT statements.

Since the dawn of time, developers have put in lines like this:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

BEGIN TRAN PRINT 'Starting access date changes' UPDATE dbo.Users SET LastAccessDate = GETDATE() WHERE DisplayName = N'Brent Ozar'; PRINT 'Done with access date, starting reputation changes' UPDATE dbo.Users SET Reputation = Reputation / 0 WHERE DisplayName = N'jorriss'; PRINT 'Done with reputation changes' COMMIT |

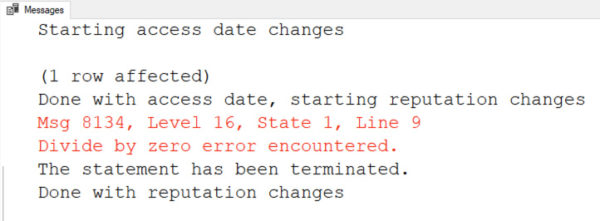

So that when the statement fails, they can at least see which part failed:

There are a few problems with this approach:

- PRINT doesn’t output data immediately. SQL Server caches the data that needs to be pushed out to the Messages. If you’re troubleshooting a long-running process, you probably want to see the messages show up immediately, as soon as they’re executed.

- PRINT pushes data out over the network whether you want it or not, adding to the overhead of your commands. This isn’t a big deal for most shops, but when you start to exceed 1,000 queries per second, you’ll want to shave overhead where you can. You only really want the debugging messages coming out when you need ’em.

Let’s raise our game with RAISERROR.

Option 2: Use RAISERROR, pronounced raise-roar.

What? You didn’t notice that it’s misspelled? Okay, confession time, I didn’t realize that either – Greg Low of SQLDownUnder pointed it out to me. Let’s add a little more complexity to our code:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

DECLARE @Debug BIT = 1; BEGIN TRAN IF @Debug = 1 RAISERROR (N'Starting access date changes', 0, 1) WITH NOWAIT UPDATE dbo.Users SET LastAccessDate = GETDATE() WHERE DisplayName = N'Brent Ozar'; IF @Debug = 1 RAISERROR (N'Done with access date, starting reputation changes', 0, 1) WITH NOWAIT UPDATE dbo.Users SET Reputation = Reputation / 0 WHERE DisplayName = N'jorriss'; IF @Debug = 1 RAISERROR (N'Done with reputation changes', 0, 1) WITH NOWAIT COMMIT |

I’ve added a @Debug parameter, and my status messages only print out when @Debug = 1. Now, in this example, I don’t really need a parameter – but in your real-world stored procedures and functions, you’re going to want one, and you’ll want the default value set to 0, like this:

|

1 2 3 4 |

CREATE OR ALTER PROC dbo.DoStuff @MyParam VARCHAR, @Debug BIT = 0 AS ... |

That way, you only turn on the debug features manually when you need ’em, but the app doesn’t call @Debug, so it just gets left at its default value, 0.

I’ve also switched to RAISERROR instead of PRINT because RAISERROR has a handy “WITH NOWAIT” parameter that tells SQL Server to push out the status message to the client right freakin’ now rather than waiting for a buffer to fill up.

When you’re troubleshooting long or complex processes, you’re probably going to want to dynamically drive the status message. For example, say it’s a stored procedure that takes hours to run, and you wanna see which parts of it took the longest time to run. You’re not gonna sit there with a stopwatch, and you’re not gonna come back later hoping that the queries will still be in the plan cache. Instead, you wanna add the date/time to the RAISERROR message.

Unfortunately, RAISERROR doesn’t support string concatenation. Instead, you have to pass in a single string that has everything you want, like this:

|

1 2 3 4 |

DECLARE @Now NVARCHAR(50); SET @Now = CONVERT(NVARCHAR(50), GETDATE(), 26); RAISERROR (N'Done with reputation changes at %s', 0, 1, @Now) WITH NOWAIT |

Which gives you the date at the end of the output:

You can even pass multiple arguments in – check out the RAISERROR syntax for more details on how the arguments work.

Option 3: Use Table Variables.

You’ve probably heard advice from me or others warning you that table variables lead to bad performance. That’s true in most cases – although sometimes they’re actually faster, as we discuss in the Fundamentals of TempDB class. However, table variables have a really cool behavior: they ignore transactions.

|

1 2 3 4 5 6 7 8 9 |

BEGIN TRAN DECLARE @Progress TABLE (StatusDate DATETIME2, StatusMessage NVARCHAR(4000)); INSERT INTO @Progress VALUES (GETDATE(), N'A one'); INSERT INTO @Progress VALUES (GETDATE(), N'And a two'); ROLLBACK SELECT * FROM @Progress ORDER BY StatusDate; |

So even though I did a rollback, not a commit, I still get the contents of the table variable:

This is useful when you’re:

- Troubleshooting a long-running process

- The process has try/catch, begin/commit type logic where something might fail or roll back

- Desiring the results in tabular format, possibly even with multiple columns, XML, JSON, whatever

And there you have it – 3 ways to work through debugging without using the discontinued SSMS Debugger. I typically use RAISERROR myself – it’s easy enough to implement, and it’s a technique you’ll use forever. There are more ways, too, and you’re welcome to share your favorite way in the comments.

39 Comments. Leave new

Nice article. I just wanted to add that Azure Synapse doesn’t support default/optional stored procedure parameters, unfortunately.

I used sp_trace_generateevent, when I needed to troubleshoot night job, and needed to catch values of passed params of a nested stored procedure (16 level of nesting) when it got rolled back in a TRY/CATCH block so I could reproduce that particular call.

Nice one!

In the first option, should the label “Done with reputation changes” not be printed, since the error happened before?

It’s not in a try catch or anything, so the statements just keep getting executed after the error.

Correct. For try/catch and transaction handling, that’s a whole ‘nother blog post series: https://www.brentozar.com/archive/2022/01/error-handling-quiz-week-tryin-try-catch/

I think you meant to use 126 instead of 26 in the convert.

Thanks for this comment!

If you try running with 26 it will throw the error “26 is not a valid style number when converting from datetime to a character string.”

The combination of conditional “RaisError” and “Set Statistics Time, IO On”, is a fantastic way to combine debugging with performance analysis. (Yes, I noticed RAISERROR is misspelled years ago. But I also used to be a professional proofreader and copy editor.)

Got another misspelling in the master.sys.sysprocesses table; Loginame. At least sysprocesses is going to be removed from SQL Server someday, maybe . . .

Here’s how I use @Debug.

[,@Debug =] 0|1|2|3 tinyint – The default is 0.

Specifies whether to “Print” more information as the stored procedure is running. This is

useful when troubleshooting issues.

Value Description

—– ————————————————————————————-

0 Little to no messages shown.

1 Local variables are displayed.

2 Dynamically created SQL statements are displayed.

3 Table and table variable contents are displayed (“Select”ed).

Great idea!

If I use a table variable, I like to have an identity column so when the datetime column value is the same on multiple rows, I still can have it selected in the order inserted easily.

The @Debug and RAISERROR will be put to use!

Oh no! now I can’t un-see RaiseRRor!

I usually add a line to show the data only in SSMS or ADS, so client apps will not get disturbed.

IF (CASE WHEN APP_NAME() = ‘Microsoft SQL Server Management Studio – Query’ OR APP_NAME() = ‘azdata-Query’ THEN 1 ELSE 0 END = 1) BEGIN

…

END;

Useful for procedures where lots of data is being manipulated.

Big CI/CD rant…

One point that should have a bold and underline: regardless of what language you write your stuff in, you should have a test script or program that can run your original script or program and tell you with reasonable confidence, yes your code is working. There are a lot of fancy names and techniques for this: test driven development, unit test, acceptance tests, dependency inversion blah blah blah… but essential you need to be able to automatically run your process and say, yes it is working. When you get to this level, the stability and scalability of your code will be orders of magnitude better. Many “coding experts” like Robert Martin argue that this dramatically reduces the need for a debugger.

In the T-SQL world there are a number of ways to script out tests, such as checking for table entries or using transactions to setup and rollback etc. This is essentially moving some of your RAISERROR code complexity outside of your main code into the test code.

BEGIN TRANSACTION

— do some setup code

— run procedure

— check conditions and RAISERROR if failed

ROLLBACK TRANSACTION

or

— create #TempTable

INSERT INTO #TempTable

EXEC procThatReads

— check conditions for #TempTable and RAISERROR if failed

or

— check conditions for IF EXISTS(SELECT * FROM view WHERE …) RAISERROR if failed

After you have written a number of SQL scripts that test your original code, just put them in your execution tool of choice: SSMS, PowerShell, some expensive CI/CD tool etc. and run before you deploy. Your output should be ugly if it fails (red error text or fancy red icon) and clean if it is a success (add a success message at the end of your script or look for a fancy green icon), but essentially you want a binary true/false result if it passed or failed.

The best time to write such tests is when the code is fresh in your head, because you are not going to fully understand what the code does later on. However, you may end up missing conditions that make it into production or are caught by a QA tester. Use these mistakes as feedback to improve the set of tests you have.

Derek – that’s well outside of the scope of this blog post. It sounds like it’s a great time for you to start your own blog where you can share advice like this.

You’re welcome to continue leaving comments, but just be aware that leaving comments on unrelated blog posts isn’t going to get your message out the way you think it will. Your effort’s kinda wasted here. It’s a miracle when people read my *blog posts* and absorb their content, let alone the comments.

Hi Brent,

Sorry about that, you are right the response above is out of scope, too big, and it was rude of me to not respect or tie into the article’s focus.

How about this?

Yes, your code should have some basic checks, but in general debug code inside your original code is often a trap that leads to hard to read and maintain code. Whenever possible it is better to move your debug code into test code. It will dramatically improve the readability and scalability of your code, and it is a programming best practice that dramatically reduces the need for a debugger. How that is done is probably best left for big articles and 800 page books.

There you go! (I disagree with the opinion, but you’re certainly welcome to it.) Thanks!

Great blog Brent, thank you.

The old SSMS debugger was a cool feature, when it worked.

I admit I use the PRINT statement when I know the transaction is short/fast and I’ll get the results/error message relatively quick.

RAISERROR is my go to, even though that misspelling is super frustrating every time I type out the word.

But I’m pleasantly surprised by option 3 and the inserting into a table variable and displaying a result even though there’s a rollback. I did not know that worked.

Even after 20+ years in SQL I learn something new each day.

I never knew about the RAISERROR WITH NOWAIT – very nice. I think this will become my new default.

I’m embarrassed to suggest a 4th option that I have used, but it does have the advantage of logging to a table and is not affected by any transaction rollbacks. I create a linked server referring back to itself, and then execute a stored procedure call to the linked server. This creates a new connection to the database, unaffected by errors/rollbacks on the original connection.

————————————————————————-

declare @LogEntry varchar(100)

select @LogEntry = ‘Progress message…’

execute [MyDB_LINKED_SERVER].[MyDB].[dbo].[InsertProgressLog] @LogEntry

————————————————————————-

create procedure [dbo].[InsertProgressLog]

@LogEntry varchar(100)

as

set nocount

insert [dbo].[MyLogTable] ([LogEntry])

values (@LogEntry)

go

————————————————————————-

I’ve been doing running logs this way for years, not just for debugging. I put the date first so they all align and making it easier to follow the timeline. I append object names from variables or functions to the print variable when needed. Whether it’s for scheduled scripts and procs, I put the same print flow code everywhere so if I run anything myself I can always follow it’s progression. I don’t use RAISERROR for every print line, just at key times when I want to force the write to see it live. If I need a successive block of print lines on separate lines, just the last line being RAISERROR is good enough …

SELECT @printstr = convert(char(19),GETDATE(),121) + ‘: Processing started on database [‘+DB_NAME()+’] …’

RAISERROR (@printstr, 10, 1) WITH NOWAIT

Sometimes I used CLR proc for debug output.

What about actual debugging via Visual Studio? I think at one point I used VS 2017 with Sql Server 2016(?) and was able to actually F10 through a stored proc in the same manner as stepping through C# code.

The problem is that it freezes execution on the server, blocking other queries as your locks are held open. Plus, that capability isn’t available in Azure SQL DB on the server side.

Good discussion.

I have had problems with PRINT statements – like the ending PRINT statement enclosing a block of SQL code, executing before the actual execution of the code, But this is very rare in my experience.

The principal problem I have with RAISERROR is its NAME, suggesting that it is only for flagging errors. Someone familiar with the use of RAISERROR for flagging errors, may get confused seeing its use in lieu of PRINT. I use PRINT for informational purposes, to break up a long SQL script into chunks signalled by start and end PRINT statements. And it has worked well thus far. Though for my next long SQL script, I shall try RAISERROR as a learning experience.

Thanks!

Another real nugget. Thank you Brent. These basic tips are just as important as the stuff around the forefront of our technology. There are always new people who need to learn stuff like this ( and ones who have been doing it for years who still need help with basics.

Nice tip for table @variables … will save me [temporarily] adding “with (nolock)” in my procs 🙂

I’ve been using the NOWAIT approach for a while for logging progress of a proc and feeding it back to C# world via the “SqlInfoMessageEventHandler”. Kinda gives a stack trace from the proc perspective if there’s an unhandled exception elsewhere … and yes, I should blog about that sometime 🙂

Great article and thanks to Hamish for raising it on OH.

SCOTTISH PEDANT ALERT: FOR BRENT: It’s pronounced Hay-mish , rather that HA!-mish – you need to get into the highlands for a walk, sir – and drink more whisky (note, no ‘e’)..

SCOTTISH PEDANT ALERT OFF

I use different approaches depending on the complexity of the stored proc I am dealing with. If it is very complex, and I need stats, I dump the info into a global temp/variable table, and as it is going through the motions I am querying that info. Once completed, or errs out, I dump that info into a physical table to peruse at my leisure.

In the event it is something quick, and I want to know the output (usually used on SQL jobs), I output via the print statements, though I like the RAISERROR option (had not thought about that as an option).

What SQL Server/T-SQL is sorely missing is dedicated logging functionality. I find putting stuff on the messages stream inelegant and unwieldy, so inevitably prefer a logging schema and tables with helper procedures to make it easy and consistent to log output and exception handling and debug any procedure, whether executed manually or automatically. But SQL Server’s implementation of transactions and rollbacks means all that’s for naught and has to be supplemented with yet more cruft, usually involving table variables like you say. It makes an already clunky language a whole lot clunkier. I really wish MS would implement a dedicated logging module in T-SQL.

I was going to make a snarky comment about them only implementing blockchain and buzzword features (though blockchain would perversely enough have a reasonable use case with logging) but have just checked the T-SQL changes coming with 2022 and see that they have actually put a few things in to address common cruft that’s been a burden for the past 30 years. So I guess there’s hope yet!

Interesting – have you seen other databases that do a better job with built-in query diagnostic logging functionality?

No — I haven’t ventured into others much, and definitely not to the point I’d need anything like that. I have some knowledge of MySQL (yuck), a passing knowledge of SQLite, ancient knowledge of some old Oracle version, a smidge of S4/HANA (see MySQL) and a sliver of Postgres (which I suspect I’d like a lot but haven’t had the opportunity to get my teeth into).

I think it could be a real boon to SQL Server, but a lot of the headline features seem to be about making sure the same boxes are ticked as the other major players. That’s understandable and all, but I’m used to seeing programming languages implement features that make life easier, especially for common tasks that inconsistent workarounds have sprung up for. The cruft that STRING_SPLIT and STRING_AGG let people remove is a good example in T-SQL, regardless of your opinions on string ops in the DB, the upcoming GREATEST and LEAST are in a similar vein (though I think maybe they’ve come from ANSI?).

I’ve found myself wishing numerous times that I had something like .NET’s or Python’s logging APIs from within T-SQL, and creating wrapper functionality to mimic the same in line with what I need — the simpler the calls to the logging code, the easier it is to read the actual procedure code and (hopefully) the more likely others will be to use logging. It’s a big thing though and I’d be wary of it going down the same route as MS’s implementation of temporal tables, which is great for what it is but limited and limiting enough for me that I unfortunately still prefer a roll-your-own solution.

I guess it’s partly about embracing T-SQL as a language in its own right (my favourite SQL expansion is Scarcely Qualifies as a Language :D), though there’s a tricky line that needs to be toed between offering procedural tools that can be used responsibly and reminding the users that query code should generally be set-based.

Apologies, I appear to have not replied to the comment I thought I was replying to!

Yeah, realistically, Microsoft probably isn’t going to invest the developer time in that when no other database platform has done it either.

Yeah, they like to try and lead the pack but not be too far ahead. It’s not the worst, just an annoyance. There’s plenty of tools that can help with diagnosis in ways that don’t cruft up your procedure code, including EE — I’ve found that it can miss stuff, but you don’t necessarily need absolute perfection just for the odd debug.

I just discovered that I missed your week of blog posts about the very new T-SQL functions that I was optimistic about!

What a dumpster fire. 🙁

Yeah, years ago when I was learning “debugging” in SQL 2005, I immediately noticed the typo in RAISERROR (I’m not persnickety, I just spent almost 20 years in a prior career writing and reviewing technical geological reports. OK, maybe I *am* persnickety, but in a good way.)

I remember distinctly adding this comment to the start of my T-SQL script of demo lessons:

— NOTE: Microsoft misspelled this!

Now I’m sad I never learned to pronounce it “RAISE ROAR”!

For simple debugging we use combination of option 1 and 2.

Printing statements and forcing the push out with ‘RAISERROR (”,0,0) WITH NOWAIT’

This way we can still leverage the concatenation.

If we need persistent debugging information across nested procedures, SESSION_CONTEXT is also quite useful.