Sometimes, your INT columns run out of space, and you need to alter them to be a larger datatype, like BIGINT. Usually, when you do this, you’re met with screams of agony from your end users because this will totally lock the table and rewrite the whole thing:

|

1 2 |

ALTER TABLE dbo.Posts ALTER COLUMN OwnerUserId BIGINT; |

In SQL Server 2016 & newer, you can even kinda-sorta do it online with this syntax – but only if there are no indexes referring to the column:

|

1 2 |

ALTER TABLE dbo.Posts ALTER COLUMN OwnerUserId BIGINT WITH (ONLINE = ON); |

However, Gianluca Sartori (@SpaghettiDBA on Twitter) wrote about a brilliant technique:

- First, turn on row data compression on the table (which can be done ahead of time, and on Enterprise Edition, can even be done with ONLINE = ON, and even works when there are indexes on OwnerUserId)

- Second, alter the datatype, which will be instantaneous

(although it does require a schema modification lock)

Just a couple of gotchas: it only works with row compression (not page), and it only works for upsizing datatypes, not downsizing. If you use page compression or if you try to downsize a datatype, it’s a size-of-data operation that scans the entire table. I’m not happy about that, but I’m just writing it out here because I’ve hit that myself when trying this trick.

Step 1: enable compression.

Let’s see it in action on the Stack Overflow database. Let’s say I want to alter the OwnerUserId column on the Posts table, which would normally be a giant pain in the rear. First, we’ll enable compression:

|

1 2 |

ALTER TABLE dbo.Posts REBUILD WITH (DATA_COMPRESSION = ROW, ONLINE = ON); |

You can use either row or page compression (thanks to Paul Rizza for suggesting that I try page).

This rebuild is a big ugly operation that rewrites the whole table, so it’s going to generate a lot of transaction log usage and change a lot of data pages. The good things, though, are:

- You can pick when you want to do that rebuild

- It doesn’t have to be the same time that you alter the table

- The rebuild can be online if you specify like I did above (assuming that you have

Expensive EditionEnterprise Edition)

Step 2: alter the column.

After that rebuild finishes, I can alter the datatype:

|

1 2 3 4 5 6 |

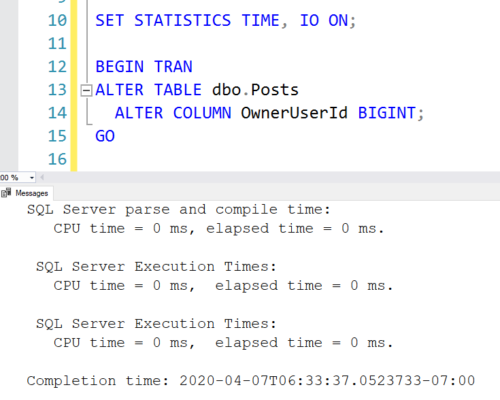

SET STATISTICS TIME, IO ON; BEGIN TRAN ALTER TABLE dbo.Posts ALTER COLUMN OwnerUserId BIGINT; GO |

(If there are indexes on that column, I still have to drop that index first – but now at least we’re talking about a smaller outage window, because I only need to drop the index at the moment in time when I need to alter the table – not when I do the rebuild and rewrite all the 8KB pages.)

In my case above, I did it in a transaction because I wanted to show you the locks that are required in order to make this change. The change does finish instantly and does no page reads, as evidenced by SET STATISTICS TIME, IO ON:

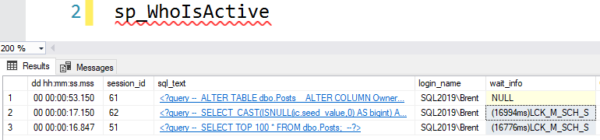

But it isn’t pain-free: we still do need a schema modification lock in order to do this, as shown by sp_WhoIsActive. If I try to do a SELECT against the same table, it’s going to be blocked until I commit the ALTER TABLE command:

So what this means is that you just need to prepare the ALTER TABLE command for a time window where you can deal with the higher writes due to data & log file work, buuuuut it doesn’t need to be a zero-load window. People can still be deleting/updating/inserting into the table while the REBUILD runs.

Then, your ALTER TABLE still needs locks, but it’s only for a really short amount of time (think milliseconds.) If I’d had an index on OwnerUserId, I could immediately start creating that index again (even with ONLINE = ON to minimize the outage.)

You’re gonna need this technique someday, trust me. You should probably just go say thanks to Gianluca in his blog post comments now. You’re welcome. When you’re done with that, you can read more details about how this works thanks to Paul White.

Livestream of me writing this blog post

I talk through my thought process and demonstrate it in this 40-minute video:

If you wanna watch me live stream as I write blog posts or work on the First Responder Kit, follow me on Twitch.

14 Comments. Leave new

I think it is important to state NULL or NOT NULL, as the current setting is not kept.

You are going to have application issues with this with applications that are not expecting a BIGINT. This will require coordination with the application side, possibly a rev-lock, although depending on the environment it may be possible to update the application(s) in advance.

Bob – yep, absolutely, but obviously that’s a little beyond the scope of this post. This post is about altering datatypes. Thanks though.

Yeah, I get the scope issue. But the whole point of the post is minimizing disruption, and a rev-locked app is certainly going to be disrupting :-).

Bob – I’m going to be brutally honest: if the database person is changing the database without coordinating with the developers first, then they’re not the kind of person who’s taking the time to plan out changes like this. It doesn’t feel like you’re contributing – it feels like you’re nit-picking.

The bit around 26:00 with the echoes is some of Ozar’s most progressive work, challenging the audience’s preconceptions of what a livestreamed video of compiling a blog post can — and should — be. Artfully layering his voice atop itself while discussing the logistics of acquiring custom Porsches, he challenges us to unravel the layers of meaning like so many strands of spaghetti and reminds us why he continues to be the Certified Master. Expect a 10 from Pitchfork.

Is data compression somethink I can/should use for all my big log tables?

I’d say that’s a really good use case for it. There’s still extra overhead involved in writing though (assuming that these tables are written to and rarely read from), so you’d want to test and make sure that it’s acceptable for your scenario.

I’ve never actually used compression in production (yet), but have played around with it occasionally and it’s really easy to get to grips with. There are some good blog posts on it out there in the Googlesphere.

Very cool! The title is a bit overachieving though — it’s only for larger datatypes of the same family, not just any alter of datatypes 🙂 Paul White’s post indeed has all the details. Thanks!

Nice one indeed.

Last year I needed to perform such a change on several 100M-1B rows tables (alter to the identity column to BigInt).

To minimize downtime we duplicated the tables/indexes, maintained their sync with triggers and switched the tables.

That server was on version 2014, so this solution wouldn’t have helped, but it’s interesting read.

Thanks for sharing.

Nice, thank you!

Does it work for reduce column size ? Nvarchar(max) to nvarchar(500) ?

Why not try it in your development environment to find out?

I tried this with a datetime to datetimeoffset(7) modification and sadly it had no effect