In the last episode, while talking about the basics of executing queries, I said that SQL Server caches execution plans in memory, reusing them whenever the same query gets executed again. I’ve also talked about the dynamic management views (DMVs) that let you query what’s in SQL Server’s memory. Let’s put those two things together and find out what queries have been running on the server lately.

Start by running this on any SQL Server – development is fine:

|

1 |

SELECT TOP 100 * FROM sys.dm_exec_query_stats; |

Some of the columns in the result set include:

- sql_handle – used to join to other DMVs to get the text of the query.

- plan_handle – used to join to get the execution plan.

- creation_time – when the query plan went into cache.

- last_execution_time – the last time the query was run.

- execution_count – the number of times Picard has said “Make it so.”

- total_worker_time – total amount of CPU time burned while the query executed – and note that there’s also a last_worker_time, min_worker_time, and max_worker_time. Most of the columns in this DMV have that same pattern.

So if you want to find which queries have read the most data, you could do:

|

1 2 |

SELECT TOP 100 * FROM sys.dm_exec_query_stats ORDER BY total_elapsed_time DESC; |

Pretty nifty, eh? Except now you wanna see what the queries are, and their execution plans. You could try to write your own DMV query from scratch, joining all that stuff together, but…this is the year 2019. (Or at least it is when I write this.) Ain’t nobody got time for that – there’s a much, much easier way to analyze your plan cache.

Enter sp_BlitzCache.

A few episodes back, you downloaded the totally free First Responder Kit. One of the scripts in there is sp_BlitzCache.sql – open that one and run it in the master database to create the sp_Blitz stored procedure. Then run it:

|

1 |

EXEC sp_BlitzCache @SortOrder = 'duration'; |

This shows you the top 10 queries that have run the longest time in total. I happened to pick Duration here, but sp_BlitzCache has a lot of useful sort orders:

- @SortOrder = ‘cpu’ helps you find queries burning the most CPU time, often due to implicit conversions, sorts, or functions running row-by-row.

- @SortOrder = ‘reads’ finds queries that are reading the most data, typically great candidates for index tuning.

- @SortOrder = ‘memory grant’ finds queries that need large amounts of memory to run (or at least, SQL Server thinks they need a lot – but it can be dramatically wrong.)

In the top result set, scroll across a little to the right, and look at these columns:

- Executions – how many times it ran.

- Total Duration (ms) – the total runtime across all the executions.

- Avg Duration (ms) – total duration divided by the number of executions.

As they say in the land of wine and cheese, voilà. Keep scrolling around left and right, and you’ll find all kinds of other interesting metrics – many of which you recognize from sys.dm_exec_query_stats, and some that took a whole heck of a lot of joining and calculating in order to display to you, dear reader.

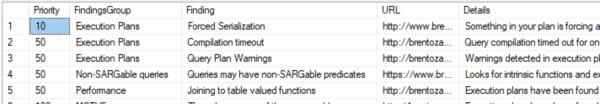

It’s tempting to click on the Query Plan column and dive right in – but hold up. Before you go looking at operators and errors, take a few moments to read the Warnings column. These are a combination of warnings built into the query plan (like implicit conversions) and warnings we figured out on our own (like spills over 500MB or joins to a user-defined-function.) The latter set of warnings is especially important because they’re not displayed with a yellow bang on the plan: they’re hidden issues that you need to be on the lookout for as you go spelunking through the plan and the query.

As you read through the warnings, you’ll doubtlessly come across one that doesn’t make intuitive sense to you. That’s where the second result set comes in: it’s like a decoder ring of the warnings you’re seeing.

Want to tune the queries?

This is where specialization comes in.

Back in episode 3, when we talked about treating our servers like cattle, I said that sooner or later, you were going to have to pick a specialty. The journey of learning to performance tune queries – for example, removing barriers that cause forced serialization – is typically a different journey than efficiently automating the rollout of Always On clusters in Azure VMs via PowerShell scripting.

Now’s a good time to pause and read my post on the job duties of production DBAs vs development DBAs.

If you want to focus on production DBA work, then your goal for this week is to hand off the sp_BlitzCache output to your developers. This is good, juicy information for them to understand which queries are using the most resources. Many of my clients actually publish the sp_BlitzCache output on an internal web page so the developers can go check it at any time – it turns into a bit of a competition, making fun of whoever’s queries are up on the top of the suckerboard. (I call sp_BlitzCache the suckerboard, not the leaderboard, because it’s the query plans that suck the worst.)

If you want to focus on development DBA work, performance tuning the queries and indexes, then here are your learning resources for this episode.

- Book: Learn T-SQL Querying – this is probably the worst-named book in history because it isn’t at all about teaching you how to write a query. Rather, it’s about making queries go faster. The title is bad, but the book is great!

- Book: SQL Server Query Performance Tuning – I recommended this one a few episodes ago when discussing indexes, and I said it’d pay off later. Later is now.

- Free Video: Watch Brent Tune Queries – free series of videos from conferences where I stand up onstage and tune queries from the Stack Overflow database, showing you how my thought process works.

- 1-day Online Class: Fundamentals of Query Tuning – where you follow along with me on your laptop or desktop, tuning queries.

- 3-day Online Class: Mastering Query Tuning – after you’ve conquered the fundamentals, this is the tougher challenge.

If you want to do both production and development DBA work, you just need to be aware that you’ve only got so many hours in the week. You can do a kinda-sorta-halfway job at both, but you’re not going to do a phenomenal job of both – at least, not as long as you’ve got real day job duties too, like responding to help desk tickets and sitting in meetings. I’m a great example of that myself – I’ve long since given up production DBA work, and I focus on the performance side instead. They’re both fun, high-paying jobs, don’t get me wrong – I just picked the latter, that’s all. (And there are plenty of other rewarding careers around data, too.)

5 Comments. Leave new

Thank you again. That is my #4,344 ‘thank you’ to you and your team. Question: at what point do you relent to the fact that it’s not the querys but rather the ‘foundation’? i.e. just not the right resources and terrible meta data/table constructs and management doesn’t want to hear that?

You’re welcome! We’ll get to resources later in the course, but in terms of terrible table design – you go to war with the tables you have, not the tables you want. You can’t just say, “we have to redesign everything.” That just doesn’t work.

If your job was easy, they’d call it play, and you’d do it for free. They don’t. They call it work, and they pay you for it. 😉

You can try and sell them on the merits that bad data design will cost you more over time in hardware and labor resources, but hold your breath while seeing if it works.

Is anyone else having fun with the FOURTH major outage this year in the south central Azure region this morning?! (And fifth in the last 12 months) I KNOW I AM!!!

Hmm… Cloud SQL for SQL Server doesn’t give full sysadmin privileges and fails to put sp_BlitzCache in master database.

You can run it in any database, even TempDB.