For years, when you right-clicked on a database and click Properties, the “Compatibility Level” dropdown was like that light switch in the hallway:

You would flip it back and forth, and you didn’t really understand what it was doing. Lights didn’t go on and off. So after flipping it back and forth a few times, you developed a personal philosophy on how to handle that light switch – either “always put it on the current version,” or maybe “leave it on whatever it is.”

Starting in SQL Server 2014, it matters.

When you flip the switch to “SQL Server 2014,” SQL Server uses a new Cardinality Estimator – a different way of estimating how many rows are going to come back from our query’s operations.

For example, when I run this query, I’ll get different estimates based on which compatibility level I choose:

|

1 2 3 4 |

SELECT COUNT(*) FROM dbo.Users WHERE DisplayName LIKE 'Jon Skeet' AND Reputation = 1; |

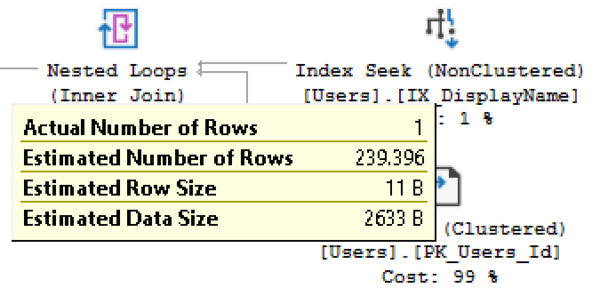

When I set my compatibility level at 110, the SQL Server 2012 one, I get an estimated 239 rows:

Whereas compatibility level 120, SQL Server 2014, guesses 1.5 rows:

In this case, SQL Server 2014’s estimate is way better – and this can have huge implications on more complex queries. The more accurate its estimates can be, the better query plans it can build – choosing seeks vs scans, which indexes to use, which tables to process first, how much memory to allocate, how many cores to use, you name it.

You read that, and you make a bad plan.

You read that the new Cardinality Estimator does a better job of estimating, so you put it to the test. You take your worst 10-20 queries, and you test them against the new CE. They go faster, and you think, “Awesome, we’ll go with the new compatibility level as soon as we go live!”

So you switch the compat level…and your server falls over.

It goes to 100% CPU usage, and people scream in the hallways, cursing your name. See, the problem is that you only tested the bad queries: you didn’t test your good queries to see if they would get worse.

Instead, here’s how to tackle an upgrade.

- Go live with the compat level you’re using today

- Wait out the blame game (because anytime you change anything in the infrastructure, people will blame your changes for something that was already broken)

- Wait for the complaints to stabilize, like a week or two or three

- On a weekend, when no one is looking, flip the database into the newest compat level

- If CPU goes straight to 100%, flip it back, and go about your business

- Otherwise, wait an hour, and then run sp_BlitzCache. Capture the plans for your most resource-intensive queries.

- Flip the compat level back to the previous one

On Monday morning, when you’re sober and ready, you compare those 10 resource-intensive plans to the plans they’re getting in production today, with the older compat level. You research the differences, understand whether they would kill you during peak loads, and start prepping for how you can make those queries go faster under the new CE.

You read Joe Sack’s white paper about the new CE, you watch Dave Ballantyne’s sessions about it, and you figure out what query or index changes will give you the most bang for the buck. Maybe you even resort to using hints in your queries to get the CE you want. You open support cases with Microsoft for instances where you believe the new CE is making a bad decision, and it’s worth the $500 to you to get a better query plan built into the optimizer itself.

Or maybe…

Just maybe…

You come to the realization that the old CE is working good enough for you as it is, and that your developers are overworked already, and you can just live with the old compatibility level today. After all, the old compatibility level is still in the SQL Server you’re using. Yes, at some point in the future, you’re going to have to move to a newer compatibility level, but here’s the great part: Microsoft is releasing fixes all the time, adding better query plans in each cumulative update.

For some shops, the new CE’s improvements to their worst queries are worth the performance tuning efforts to fix their formerly-bad queries. It’s totally up to you how you want to handle the tradeoff – but sometimes, you have to pay for the new CE in the form of performance tuning queries that used to be fast.

13 Comments. Leave new

We ended up moving to the new compatibility mode and swapping back to the old cardinality estimator so we can use all the new features without getting crushed by regressed query plans. “Best” of both worlds!

ALTER DATABASE SCOPED CONFIGURATION

SET LEGACY_CARDINALITY_ESTIMATION = ON;

We went to 2016 SP1 from 2008R2 and 2012 and after about a month switched to having legacy CE set at the database level. Only problem with this is that now we move up to 2016 SP2 and newer showplan stuff isn’t available because of the legacy CE setting. Hopefully we will get to use the new CE more but just slowly. Microsoft realized this problem because they put the query hint option into 2016 SP1 along with the above mentioned database scoped setting. A lot of our issues come from the fact we have lots of cross-database queries and linked server queries. Then you have to worry about the setting for the source database against the setting for the target database.

We recently discovered a similar issue mentioned in the article and had to turn on the legacy cardinality estimator ON. Things have improved since then. This is SQL Server 2017 CU10 with AlwaysON. Thanks Brent for this wonderfully crafted article.

great article on CE, and as always thanks for the invaluable free services you guys offer!

But this has to be the worst advice i’ve seen from you guys yet: On a weekend, when no one is looking, flip the database into the newest compat level

If you are lucky, things go the way you want… If it goes bad it could take out the whole box and then your boss is asking WTF are you doing unscheduled changes and no less on a weekend when there are likely very few people to help support things.

I get that some places are not 24/7 shops, but this is just bad news 🙁 I do think you mean this on the lighter side with a bit of humor so i’ll assume that’s what it’s meant as. And of course in an ideal world stealth ninja changes work right each time, or if they don’t at least you can roll them back without anyone noticing.

Jeff – keep in mind the reader who’s just now learning about the CE for the first time. You’d be amazed at how many folks are hitting this wall – they’re going live with the new CE without understanding that there even *IS* a new CE – they’re just flipping the compat level and going live.

It sounds like you’re well beyond that level, and that’s awesome! That’s great. Just remember that there’s a lot more folks out there who aren’t as advanced as you are.

I got to hear about how bad it could be by Victor Isakov in the last slot at PASS Summit 2016. Well worth the cost of the Summit.

oh I agree, It’s a often overlooked part of SQL and the info in here is fantastic… I’ve gotten so much amazing info from you and your team over the years – I still do!

My main run in with CE’s was when we went to 2014 from 2012 and could NOT use the new CE. No way could we get developer time to redo the queries that were killing highend hardware so we just had to leave it in 2012 mode.

Once 2016 came out we tested and confirmed that 2016 fixed the CE issues that 2014 introduced so we were finally able to bump it up.

I just feel the article could of done without the part about telling people to do stealth things on a weekend. Especially young DBA’s that maybe just read and do without much thinking.

I won’t lie, i’ve done these types of things on weekends/after hours, and had them go bad – and had to deal w/ the bosses and take hell for it. It’s not fun. Although it is a learning experience, so maybe its not all bad 🙂

“You take your worst 10-20 queries, and you test them against the new CE. They go faster…” –

You measure target your worst problems in a before/after test, you run the risk of falling prey to “regression toward the mean.”

Having recently lived through a couple 2008R2 to 2016 migrations where we decided to take the less wise approach of flipping the switch and seeing what breaks, we had quite a bit of fun from the new CE versus the old CE.

One of the worst query anti-patterns that will kill you with the new CE is a table self-join (in our environment, usually from chained views or a CTE where someone wasn’t really paying attention to the fact that they just selected from the same table 2 or 3 or 4 times). We also had some problems with scalar subqueries that saw the new CE and just went totally wild.

In addition to testing first and being sure the new CE won’t tip your server over, one other option to keep in your toolbag is the “FORCE_LEGACY_CARDINALITY_ESTIMATION” query hint. It’s a basic trace flag (that requires SA permissions to use) in native 2016, but if you’re on 2016 SP1 or newer, it’s a full fledged query hint that can be used without granting any special permissions to procedures or consumers, and it can be a lifesaver for getting the new compatibility mode features while only having to flag the known bad queries back to the old CE. We used it as our “ok, make this work while we fix it” card during our migration, and then set about to properly tune the queries and remove the flags after the fallout died down.

Or you could run a replay on a side server, before and after the switch, and compare. Maybe even using the fancy new DEA tool.

You could. That’s left as an exercise for the reader. I look forward to your blog post about that. 😉

I’m in so much shape after all that exercising I can barely fit through door frames. It was a huge headache, though.

Sometimes the new estimator produces very bad plans.

I have a query that runs extremely slow because of a bad plan (an intermediate result is like “cross joining” two non related tables)

I know join order (not forced) does not matter, but when I move an inner join at the end, I get a good plan and query execution is fast (??)