Using Trace Flag 2453 to Improve Table Variable Performance

I recently saw a server with trace flag 2453 configured. I hadn’t come across this trace flag before, so I did a little research. Microsoft says it allows “a table variable to trigger recompile when enough number of rows are changed”. This can lead to a more efficient execution plan. Trace flag 2453 is available in SP2 or greater for SQL Server 2012, CU3 or greater for SQL Server 2014 and RTM or greater for SQL Server 2016.

I was curious how a query using a table variable performed as compared to the “same” query using:

- trace flag 2453

- OPTION (RECOMPILE)

- a temporary table

DEMO SETUP

Transact-SQL

|

1 2 3 4 5 6 7 8 9 10 11 |

DECLARE @t1 TABLE (Id INT PRIMARY KEY CLUSTERED, DisplayName NVARCHAR(40)); INSERT INTO @t1 (Id, DisplayName) SELECT Id, DisplayName FROM Users; CREATE TABLE #t1 (Id INT PRIMARY KEY CLUSTERED, DisplayName NVARCHAR(40)); INSERT INTO #t1 (Id, DisplayName) SELECT Id, DisplayName FROM Users; |

BASELINE

Transact-SQL

|

1 2 3 4 |

SELECT t1.Id, t1.DisplayName, Users.CreationDate FROM @t1 t1 JOIN Users ON t1.Id = Users.Id WHERE Users.CreationDate < '08/14/2008'; |

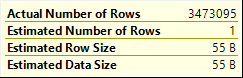

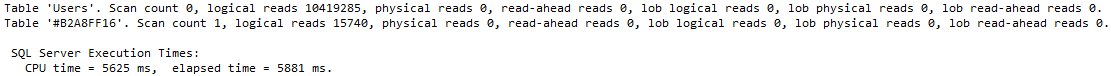

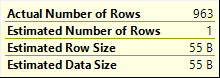

Table variable details:

TRACE FLAG 2453

Transact-SQL

|

1 2 3 4 5 6 7 8 |

DBCC TRACEON(2453); SELECT t1.Id, t1.DisplayName, Users.CreationDate FROM @t1 t1 JOIN Users ON t1.Id = Users.Id WHERE Users.CreationDate < '08/14/2008'; DBCC TRACEOFF(2453); |

Table variable details:

OPTION(RECOMPILE)

Transact-SQL

|

1 2 3 4 5 |

SELECT t1.Id, t1.DisplayName, Users.CreationDate FROM @t1 t1 JOIN Users ON t1.Id = Users.Id WHERE Users.CreationDate < '08/14/2008' OPTION (RECOMPILE); |

Table variable details:

TEMPORARY TABLE

Transact-SQL

|

1 2 3 4 |

SELECT #t1.Id, #t1.DisplayName, Users.CreationDate FROM #t1 JOIN Users ON #t1.Id = Users.Id WHERE Users.CreationDate < '08/14/2008'; |

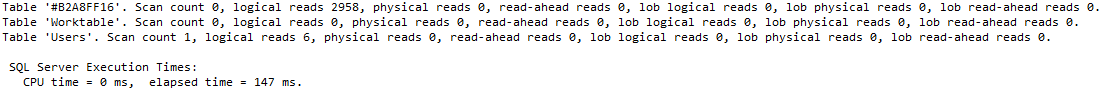

Temporary table details:

THE RESULTS

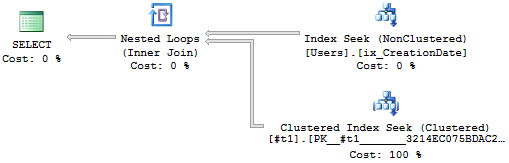

The baseline query has a clustered index scan for the table variable since almost 3.5 million rows are returned from it. The other 3 queries have the same execution plan and stats IO output, with a clustered index seek on the table variable or temporary table since 963 rows are returned from it.

WHAT WOULD TARA DO?

Should you always use trace flag 2453 or OPTION (RECOMPILE) if you are using table variables? Maybe. But I’m leaning towards “it depends”. Microsoft says the “trace flag must be used with caution because it can increase number of query recompiles which could cost more than savings from better query optimization”.

Some people say to use table variables if the table will contain a small data set. I’ve been burned by that before. I’ve experienced poor performance when the table variable always only had one row in it. Switching it to a temporary table improved the performance dramatically. Your mileage may vary.

I’ll just stick with temporary tables.

Related

Hi! I’m Brent Ozar.

I make Microsoft SQL Server go faster. I love teaching, travel, cars, and laughing. I’m based out of Las Vegas. He/him. I teach SQL Server training classes, or if you haven’t got time for the pain, I’m available for consulting too.

Get Free SQL Stuff

"*" indicates required fields

18 Comments. Leave new

Hi Tara,

Someone is going to have to make a Home Depot run for all these nails getting driven into the table variable coffin.

I have a few questions;

1. What cardinality estimator are you using for this demo?

2. Given that temp tables can have statistics, why does the optimizer still assume just 1 row is coming out of it? Is this related to the CE version?

3. After attending Wayne Sheffield’s session at PASS last year, it was my understanding that using recompile on table variables would force the optimizer to make its estimate after the table had been populated, thus leading to a more sensible/accurate row estimate. This may be part of the new CE as well, I don’t remember.

Nice meeting you at PASS, btw 🙂

Hi Brandon, nice meeting you too!

1. The demo was on the new CE. I was using a 2014 instance with compatibility level 120 for the StackOverflow database.

2. The same behavior occurs on the old compatibility level.

3. Not sure on that one, but something I’ll play around with when I get a chance.

Hi.

If I have a stored procedure that get a tvp input parameter.

Is it better to move data into temp table in the stored procedure or stick with the tvp ?

10x

Itzikp – I’ll answer that with a question: if I told you the answer was X, how would you go about testing my answer?

I recently read a blog post that suggested using memory optimized table variable. Unfortunately, I’m not able to find the post with a quick Google. Regardless, the post showed a potential solution to the poor performance of table variables. Would you expect that a memory optimized table variables would have the same problems as “traditional” table variables? I would expect the answer to be yes. However, given the performance that memory optimized table variables over “traditional” table variables, would these problems be more tolerable or even trivial?

Robert – check out this past post: https://www.brentozar.com/archive/2014/06/temp-tables-table-variables-memory-optimized-table-variables/

The solution for table variables is simply to never use them except in a few edge cases (SERIOUSLY high call rates where recompilation locks are a demonstrable problem or you need to hold contents after a rollback). Temporary tables always other wise. I can demo bad plans with table variables with a single-row-single-column entity where a temporary table gets the optimal plan.

I also have it from an individual I trust that in-memory temp objects at least in their case were causing a myriad of problems.

In pursuing in-memory table variables you completely miss the root cause of the problems. It is not that THEY perform slowly, it is that THEY create horrifically bad plans.

If you’re implying that table variables are purely in memory they are not. That’s a myth. Table variables are persisted the same as #temp tables.

Sorry, meant to also say I agree that #temp tables generally do generate better execution plans. Just wanted to clarify my point on how they are persisted.

Hi

Thnx for great article. Are you sure that “Estimated number of rows” is correct in “Table variable details” , “Temporary table details” (yellow screenshots) and Result table? I expect value 963 for three last cases.

Also it’s unclear why “Actual rows count” is not equal for all cases. Isn’t all queries equal (except options) ?

Vitalijus – you expect that, eh? 😉

I am very confused here. Why is the first example called the base case if it returns so many more rows that the other queries? I expected the only difference to be the performance, not the number of rows returned. Also, why are you showing the exact code if we can’t run it? Do you have any to create the table you’re using? And what’s with < ? Thanks!

Hi Kalen!

Each case returns the same amount of rows. The “actual number of rows” difference that you are seeing is on the seek/scan of the table variable/temporary table, not on the SELECT operator. The table variable baseline version does a full clustered index scan. The other options do a seek with 963 rows. My screenshots should have been more clear to indicate this. But rest assured that all cases do return the same amount of rows.

Unfortunately the less than and greater than signs get converted to “<” and “>” respectively when I copy code into the WordPress code option. You have to change those when copying back out. Not sure if there’s a fix for that, but it’s definitely something I’ve noticed and have been annoyed with.

We use the Stackoverflow database for our demos. You can download the database if you’d like to play along: https://www.brentozar.com/archive/2015/10/how-to-download-the-stack-overflow-database-via-bittorrent/

Ack, and when I put the lt/gt thing in that comment, it converted them to the proper signs. Why won’t it do that in the code then? Weird!

Thank you for your great article.

I just noticed a side effect of Trace Flag 2453.

One of our applications is using cursors on temporary table variables. This works normally fine, but as soon as this Trace Flag is enabled we get “Msg 16943, Level 16, State 4” “Could not complete cursor operation because the table schema changed after the cursor was declared.”

Now we plan to switch to “real” temporary tables.

Thanks, but I am having this problem in a view so I cannot crate temporal tables in a view.

I am using a function process a xml column and return an id extracted from the function. Because of this I get “CouldNotGenerateValidParallelPlan” as it is evaluates a table valued function.

Any ideas how to run parallel with a high subtree cost?

Strike 1 – “I am using a function”

Strike 2 – “process a xml column”

Strike 3 – I’m not sure how this blog post is even relevant to your problem since views can’t have table variables or temporary tables.

Your best bet is to post your question on StackExchange, but you’ll need to provide more info (code and plan).

DAAAAAAMN

Couldn’t have said it better myself. (sniff) Nicely done. Miss ya.