This came up during Office Hours

And I love questions like this, because they reveal some interesting underpinnings of how the cardinality estimator works. It was something along the lines of “I have a slow query, and when I add an index the query goes faster even though it doesn’t use the index I created.”

We can see an example of this with unique indexes and constraints, but another possibility is that the created index had better statistical information via the histogram. When you add an index, you get Fresh Hot Stats, whereas the index you were using could be many modifications behind current for various reasons: you have a big table and don’t hit auto-update thresholds often, you’re not manually updating statistics somehow, or you’re running into ascending key weirdness. These are all sane potential reasons. One insane potential reason is if you have auto create stats turned off, and the index you create is on a column that didn’t have a statistics object associated with it. But you’d see plan warnings about columns with no statistics.
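If you want to see how far behind a statistics object is, here’s a minimal sketch. It assumes a build that ships `sys.dm_db_stats_properties` (2008 R2 SP2, 2012 SP1, or later), and it points at `dbo.t1`, the demo table from this post, so swap in your own table name.

```sql
-- Sketch: list every statistics object on a table with its age and pending modifications.
-- Assumption: dbo.t1 is the demo table from this post; replace it with your own table.
SELECT s.name AS stats_name,
       sp.last_updated,           -- when the statistics were last built or updated
       sp.rows,                   -- rows in the table at that time
       sp.rows_sampled,           -- rows actually read to build the histogram
       sp.modification_counter    -- changes to the leading column since then
FROM sys.stats AS s
CROSS APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) AS sp
WHERE s.object_id = OBJECT_ID(N'dbo.t1')
ORDER BY sp.modification_counter DESC;
```

A large `modification_counter` next to an old `last_updated` is the "many modifications behind" situation described above.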

Again, we’re going to focus on how ADDING an index your query doesn’t use can help. I found out the hard way that both unique indexes and constraints can cease being helpful to cardinality estimation when their statistics get out of date.

One Million Rows!

Here’s some setup script. You love setup script.

```sql
USE tempdb

CREATE TABLE dbo.t1 (col1 INT NOT NULL, col2 INT NOT NULL, col3 VARCHAR(8000) NOT NULL)

CREATE CLUSTERED INDEX cx_t1_col1 ON dbo.t1 (col1)

INSERT dbo.t1 WITH (TABLOCK) ( col1, col2, col3 )
SELECT TOP 1000000 x.rn, x.rn, x.text
FROM (
    SELECT ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) rn, m.text
    FROM sys.messages AS m
    CROSS JOIN sys.messages AS m2
) AS x
```

I know, I know. What kind of lunatic creates a non-unique clustered index? Well, actually, a lot of you. Even after I told you how great they are! It’s a good thing I have the emotional fortitude of a week-old banana.

So, table. Table needs a query. Let’s find a love connection.

```sql
SELECT *
FROM dbo.t1 AS t
WHERE 1=1
AND t.col1 >= 1
AND t.col1 < 10001
```

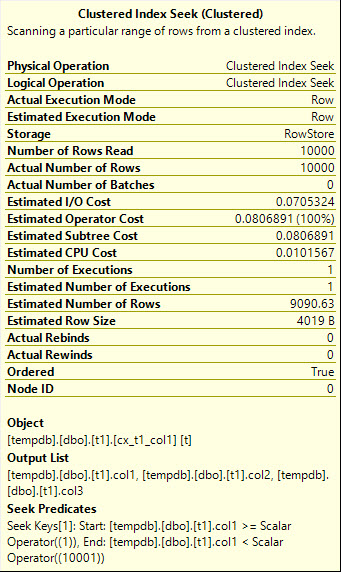

Now, this is BY NO MEANS the worst estimate I’ve ever seen. It’s pretty close, but it’s weird that it’s still not right, because we literally just filled this table up with delicious and nutritious rows.
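One way to poke at where an estimate like this comes from is to look at the statistics header and histogram for the clustered index. This is only a sketch against the demo table. My assumption is that the statistics here were built from a sample rather than a full scan, which the `Rows` and `Rows Sampled` columns in the header would confirm or rule out.

```sql
-- Sketch: inspect the statistics behind the clustered index on the demo table.
-- STAT_HEADER shows Rows vs. Rows Sampled; HISTOGRAM shows the steps the optimizer estimates from.
DBCC SHOW_STATISTICS ('dbo.t1', cx_t1_col1) WITH STAT_HEADER;
DBCC SHOW_STATISTICS ('dbo.t1', cx_t1_col1) WITH HISTOGRAM;
```

If `Rows Sampled` is lower than `Rows`, the histogram was extrapolated from a sample, and a slightly-off estimate for a range predicate is about what you’d expect.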

But we can pretend

Let’s say this rugged old table has been around since SQL Server 2005, and is chock full of customer data. Let’s pretend that being 90% right is still too wrong. We’re allowed to create an index! I bet one on just the column the clustered index is on would help.

```sql
CREATE NONCLUSTERED INDEX ix_partytime ON dbo.t1 (col1)
```

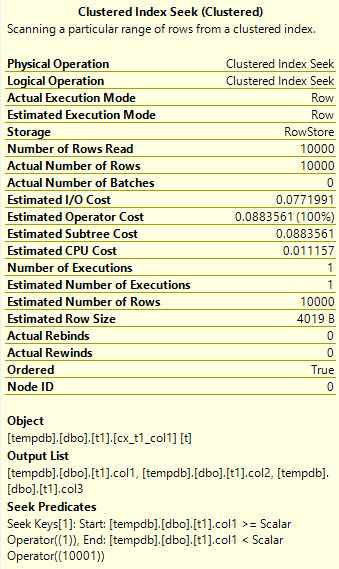

And now, magically, if we run that exact same query…

And yes

If you drop the index and re-run the query, the estimate goes back to 9090.63. Of course, in our case, we could have just updated statistics, but that may not be the obvious solution all the time. Given a choice, I’d much rather update stats than create a fresh index just to update stats. Which is basically what rebuilding indexes is. Heh. Anyway, I hope this helps.
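For the record, the stats-only route on the demo table might look something like this. It’s a sketch rather than a prescription: `FULLSCAN` is my choice here to mimic what a freshly created index gets, and on a really big table you may want a sampled update instead.

```sql
-- Sketch: refresh just the clustered index's statistics, reading every row instead of a sample.
UPDATE STATISTICS dbo.t1 cx_t1_col1 WITH FULLSCAN;

-- Then check the result: after a full scan, rows_sampled should match rows.
SELECT s.name AS stats_name, sp.rows, sp.rows_sampled, sp.last_updated
FROM sys.stats AS s
CROSS APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) AS sp
WHERE s.object_id = OBJECT_ID(N'dbo.t1');
```

No extra index to maintain, and the query should get the same quality of histogram.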

Thanks for reading!

4 Comments

And what about filtered indexes?

As the index statistics are filtered as well, it can bring some big improvements in some execution plans.

I figured that out by adding a filtered index.

The query I wanted to improve did not use the index but the estimated rows were far more accurate.

And thus the plan was better.

I chose to keep the index, but I’m still not sure whether I should keep only the statistics…

You did that on 2014, no? I got different estimates on 2016 CU2: 10368 rows, which is slightly better, but still not perfect. It’s the same after UPDATE STATISTICS t1 WITH SAMPLE 1 PERCENT, and it gets better as you increase the sample size.

And why is it perfect after creating the additional index? Because when stats are created for an index, the sample rate is 100 PERCENT (a.k.a. FULLSCAN).

Forgot to mention that 1 PERCENT is the sample size for a 10 million row table, according to the new algorithm, which you tested not long ago 🙂

https://www.brentozar.com/archive/2016/03/changes-to-auto-update-stats-thresholds-in-sql-server-2016/

I’m pretty sure I was using my 2016 instance for this. Admittedly, running the test about a dozen or so times, the estimate was never the same twice. Heh. There was one where it was off by about 3000 rows that I wish I had gotten the screen caps of instead.