It sure can.

The lock risks of shrinking data files in SQL Server aren’t very well documented. Many people have written about shrinking files being a bad regular practice— and that’s totally true. But sometimes you may need to run a one-time operation if you’ve been able to clear out or archive a lot of data. And you might wonder what kind of pains shrinking could cause you.

One pain it could cause you is blocking and lock waits. It’s easy to reproduce, here’s how.

A Recipe for LCK_M_X Lock Waits and Blocking

I was able to easily reproduce blocking in my restored copy of the StackOverflow database on SQL Server 2014 by doing a few things.

I rebuilt a non-clustered index on the Posts table with this command:

|

1 2 |

ALTER INDEX [kl_Posts_OwnerUserId_CreationDate] on Posts REBUILD WITH (ONLINE=ON); GO |

This ate up some space, and gave shrink something to do!

I then started a data modification and left an open transaction running:

|

1 2 |

BEGIN TRAN DELETE FROM dbo.Posts where Id=16; |

And then, in another session, I started to shrink the data file:

|

1 2 |

DBCC SHRINKFILE ('StackOverflow'); GO |

Behold, Blocking!

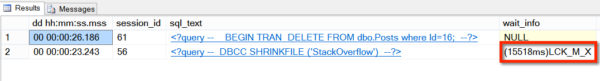

Shrink starts up and slogs its way through things, and soon enough, lock waits appear. This view is from Adam Machanic‘s sp_WhoIsActive:

If I run that with @get_locks=1, I can see details on exactly what lock SHRINKFILE is after:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 |

<Database name="StackOverflow"> <Locks> <Lock request_mode="S" request_status="GRANT" request_count="1" /> </Locks> <Objects> <Object name="(null)"> <Locks> <Lock resource_type="FILE.FILE_SHRINK" request_mode="X" request_status="GRANT" request_count="1" /> </Locks> </Object> <Object name="Posts" schema_name="dbo"> <Locks> <Lock resource_type="OBJECT" request_mode="IX" request_status="GRANT" request_count="1" /> <Lock resource_type="PAGE" page_type="*" index_name="kl_Posts_OwnerUserId_CreationDate" request_mode="X" request_status="GRANT" request_count="3" /> <Lock resource_type="PAGE" page_type="*" index_name="kl_Posts_OwnerUserId_CreationDate" request_mode="X" request_status="WAIT" request_count="1" /> </Locks> </Object> </Objects> </Database> |

Yep, it’s waiting on a page lock in kl_Posts_OwnerUserId_CreationDate.

But How Many Locks did SHRINKFILE Really Need?

Maybe it needed just a few locks… right?

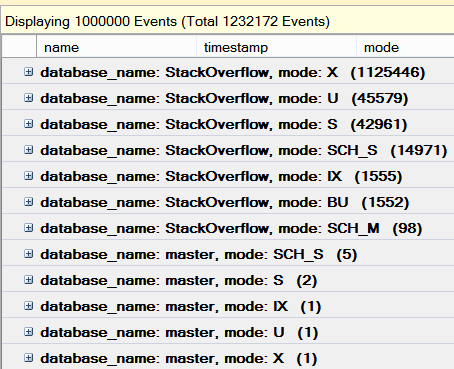

Well, I ran a trace against my session that did that shrinkfile command, and here’s the number of locks by database and type that it caught…

And this was a FAST run, except for that blocking!

Shrinking files is painful

It can actually be worse than this — on a few runs, I was able to reproduce blocking with SCH_M locks that could cause even nastier blocking chains.

Shrinking is slow, tedious work. It eats CPU, steals your IO, and always takes longer than you want. And if it has work to do and you’ve got users or processors modifying that data, lock contention ensues.

Worst of all, at the end of shrinking a database, nobody will ever thank you. Be careful out there, and if you must run it make sure you don’t leave it unattended.

Brent says: whoa, I never even thought about that. Maybe we should design indexes to improve DBCC SHRINKDB speed.

Erik says: if you do this more than once, I will come find you.

36 Comments. Leave new

It is important to note that all those locks are not held at the same time. Rather they are acquired in small batches.

Still, a very valuable post. To my knowledge the first article on the web about this issue. Good job!

Oh, absolutely, the 1 million+ events was a trace of the whole thing, not a single moment in time.

And the amount of blocking depends on how much work shrink finds that it actually has to do — the blocking recipe won’t work without the index rebuild at the beginning.

Would blocking occur on the shrink of the log file? The reason I am asking is that I know a dba who has a very limited amount of space for his log files as the sysops will not allocate him any more space and to get around this he regularly shrinks his logs.

Just curious if you thought to try a test with the log file as well.

No, locking and reads aren’t at all the same for the log file, so blocking isn’t the concern.

If performance matters, you don’t want to shrink log files for other reasons, though. Whenever SQL Server grows a log file, it has to zero out the content, and it is often quite slow. Any transactions making modifications have to wait for the growth to finish before they can complete.

Also, I seem to remember a post detailing that after shrink all of your indexes would be reversed in order on disc requiring index rebuilds. Found it: http://www.sqlskills.com/blogs/paul/why-you-should-not-shrink-your-data-files/

Yep, shrinking a data file after doing an index rebuild is basically the “hamster wheel of death” — defragment, then refragment!

Would it be worth considering breaking up a large data file shrink into multiple smaller chunks/steps programmatically?

Large shrink operations seem like they leave you crossing your fingers desperately hoping for the best.

Yes– I’ve known folks who needed to do a lot of this (moving hundreds of GB of data to a new filegroup) to definitely work on it in sets. You can’t pass a timelimit to shrinkfile, but you can set the target size or just manually stop the command.

In some situations, just creating a new database and loading all the data can also be much faster, but of course that’s not always an option.

Excellent article.

DBCC Shrinkfile seems to be not recommended, so what is the best solution to retrieve the unused space in database files after a large data move and deletion ?

Your choices are:

1) Leave the space and let it be re-used

2) Shrink (the best option of which is SHRINKFILE, because it targets a single file and not all of them)

3) Move everything to a new database

Option 2 is arguably over-used when the freed space might just be re-used within six months to a year.

Kendra,

We have a “poor man’s/woman’s Data Warehouse” that we re-build every night that creates flat-file tables for use by Excel Pivot Tables (not elegant, but much easier for end users than doing it in Analysis Server). All the processing causes the Log File to grow quite huge, and the log is not needed at all because there is NO transaction processing in this database. This is the one time that we find it very important to “clean up after ourselves” and not leave a 15GB Log File hanging around, so we do a DBCC to shrink the Log File at the end of the Job. I have written in a display of the size of the Log File before and after the shrink, and the process works wonderfully – so there IS actually a use for shrinking a file. Thanks for the article on DBCC Blocking, more knowledge is important.

Mat – do you realize that by shrinking the log file each time, you’re causing your build jobs to take longer? SQL Server has to pause to grow out the log file again each night.

If you really don’t need the LOG file – consider changing your Recovery model to SIMPLE. As pointed out by Brent, shrinking the LOG slows everything down because it has to grow again (SQL Server wants!! 15GB)… and growing the LOG is considered a slow operation.

Obviously there is transaction processing occurring because the log is growing. But if you don’t need point in time recovery – SIMPLE might be for you.

Although a 15GB log file isn’t all that large. If you have the space – leave it. Your system wants 15GB of Log – let it have it.

I’m here reading this blog because my maintenance plan is blowing out the LOG to 130GB. Thinking of switching to BULK LOG for maintenance and back to FULL – because I do need point-in-time offered by FULL recovery and 130GB log increases my restore time significantly. My experiments so far show I need only 25GB of disk space using BULK.

Not knowing how you import your data – you might also look into BULK LOG. *Some* operations for importing are compatible with this mode. Read up on it though – because you lose point-in-time while bulk logging. You can have Before and After – but not in between.

Weigh that with the effort to maintain backups when switching between BULK & FULL. Is the complexity worth 15GB?

Have fun!

So let’s mark that as another reason to not shrink my Database.

Is it a bad idea to Shrink Log file in occasional period like monthly once also. Then, Why every one in my team suggesting me to do log file shrink.

your comments are very helpful.

People seem to like shrinking the files because they feel like they’re winning something back. But if you have to do it regularly, doesn’t that mean you need to have that space available for when the log grows— you can’t use it for anything else? And when the log does grow, that can be a very slow process.

So if the space isn’t really free (you have to have it there for when it grows), and you’re losing performance, then it does end up a bad deal.

This is true in the case of a single database.

We had a situation similar to Ayubkhan but with 20 different databases…they all needed to 50% of the disk space for this one process once every month but NOT at the same time.

So, we would iterate thru the client DB’s needing processing, presize the log file for that operation, execute, re-shrink to normal operational size and repeat. thereby reusing the SAME 50% across all clients… Ultimate answer was to refactor the process BUT it was a useful, pragmatic approach at the time.

Disk gets cheaper and cheaper every year though, while the operational costs of writing the code, monitoring it, and dealing with performance impacts of it going wrong are comparatively higher.

I’m not saying there’s never a time for a workaround. I have observed that people often skip the step of evaluating what disk cost would be, and it’s often much cheaper than they’d think.

Definitely agree!

With a spectrum of variables you get a spectrum of results 😉 (or, better known as “It Depends”)

Just wanted to point out a middle ground solution that worked well when we didn’t have the option.

I appreciate everything you write Kendra! Only hoping to add value, not contradict it!

Oh, I appreciate it! You just wouldn’t believe the number of times I still find autoshrink and shrink jobs in place (often when there’s even tons of free space still on the drives and nobody knows why they’re there).

It’s like trading in you big car for a small one, after you had to move stuff or a couple of people joining you on a ride. You don’t need the extra space, so get back a small car. While it may actually help you saving on fuel for your individual uses, the next time you have to move more again, you must first trade in again for a bigger (and hope you can still get it). It’s a hassle!

When I run a Shrink/ReOrg, certain clusters of pages tend to cause more blocking than others. I run shrinks manually and if I notice protracted blocking, I reduce the size of the Shrink file down, sometimes to 100MB chunks at a time, but usually in 1-3 GB Chunks.

SQL Server has a problem expanding transaction log files apparently without bound. For databases that undergo large delete/write operations, the transaction log file grows without bound. This is true even under the simple recovery model. I have seen transaction log files that consume 200 Gb – all of which is empty. The database in question did not have any transaction near that large.

In these cases, it makes sense to periodically truncate the transaction log file on disk to something on the order of 10 Gb.

Hi David,

Well, I do think there are bounds to how much it grows. Think about logging of transactions: if undoing the action itself requires more log space, don’t we need to make sure that we have enough space in the log for rollback in case we need to do that? Logged transactions do need more space for this reason. It’s totally true that going to the SIMPLE recovery model doesn’t remove the need for logging. Some transactions can be “minimally” logged, but it can require special coding, trace flags, and only supports certain schemas and operations.

I do NOT recommend truncating the transaction log in SQL Server. There’s no need for it and the command is no longer supported. In the full and bulk logged recovery model, you should do log backups. In the SIMPLE recovery model you should identify a stable size for your transaction log, and if you need it to be smaller investigate minimally logged operations.

Kendra

Hello Kendra, I found your article and I think it is very usefull. But I have a doubt, have you considered just regular tables, but in case of partitioned tables? I have a database in SQL Server 2012 with 5 TB that grows 25 GB per day. Those transactions are always moved to a database history over 10 TB. As several tables are partioned, and change between filegroups, some datafiles becomes unused and almost empty because the data moving to history. If I shrink an old datafile where there are partitions of a core table in the database, will be there big blockings? Even is this scenario, with the old datafiles not growing anymore, is it a good practice nto to shrink the datafiles?

Thanks!

Tafarelo – generally you don’t move the data between filegroups when you’re doing partitioning. Put each year in its own filegroup, for example, and leave it there.

Hi Kendra,

Blocking itself is not necessarily bad and it is there to enforce database durability.

DBCC SHRINKFILE being blocked is really not a big deal – it is a maintenance task that has no impact on service availability. If it has to wait for another transaction to finish, so what?

However, DBCC SRRINKFILE can and will block other sessions attempting to to read or modify data in the section the shrink operation is trying to move. On a highly-transaction system, it will cause a production outage.

I have found DBCC SHRINKFILE command to have a nifty feature built-in. I do not see this documented anywhere:

SET LOCK_TIMEOUT 10000;

DBCC SHRINKFILE (N’TestShrink’ ,215)

Lock timeout scope DOES NOT affect DBCC command in a conventional way, but instead sets a timeout (MS) for its internal operations. In other words, it attempts to get a lock, gets blocked, times out and retries again. Meanwhile all processes blocked by shrinking can proceed through. This creates similar functionality to spinlocks, which back off if they collide with each other.

LOCK_TIMEOUT should be a mandatory setting for most DBA activities, especially shrinking. Your customers will thank you.

Vladimir – we’re going to agree to disagree that “being blocked is not really a big deal.” The problem is that DBCC SHRINKFILE, when blocked, can block OTHER queries. Users tend to be a little unhappy about that. Your customers will not thank you. 😉

I think that’s a great suggestion. I use LOCK_TIMEOUT to control blocking when Index Rebuilds happen.(with Ola’s script). I’ve killed Shrink a million times while in progress, it’s a very clean operation.

Hi Brent,

You missed the most impart part of my comment about LOCK_TIMEOUT setting. I completely agree that running a shrink in its default state may cause a massive production outage. However, LOCK_TIMEOUT enabled shrinking to back off and let blocked processes through after a preset number of milliseconds. The end result – shrinking is blocked, yet it keeps things going. Happy days.

Vladimir – given that I’ve never seen MS document or mention it for DBCC usage, I’m not too keen on recommending that. I’m glad it’s working for you though, that’s great.

Hello Vladimir and Brent, I think we were victim of a shrinkfile log describe here. We ran an shrink on tempdb log because of an unexpected load. The shrink completed normally within 4 min but some times after, cpu went to 100% and connections were block. Situation was resolve when the cluster “reset” the service base on HealthCheckTimeout.

I felt bad about recommending this even though MS doc said we could run it on a tempdb with activity 🙁

Alain, please verify disk space usage first using:

USE tempdb;

SELECT name,

s.used / 128.0 AS SpaceUsedInMB,

size / 128.0 – s.used / 128.0 AS AvailableSpaceInMB

FROM sys.database_files

CROSS APPLY

(SELECT CAST(FILEPROPERTY(name, ‘SpaceUsed’) AS INT))

s(used)

WHERE FILEPROPERTY(name, ‘SpaceUsed’) IS NOT NULL;

LOCK_TIMEOUT not works.

Blocked sessions can be avoided setting the database as ‘single_user’, right?

HAHAHA, sure.