Over the years, I’ve done all kinds of awful things with T-SQL and made countless mistakes. Some were harmless; others were borderline catastrophic (exciting times!). I was curious what kind of horrible mistakes other people make, so I threw the question out to Twitter.

Every answer I got was unique, which was both telling (so many ways for developers to mess up) and fascinating (no consensus which was worst). Since I didn’t get any winners by popular vote, here are the top three answers I agree with most, with the worst mistake first:

#1: CODING FOR TODAY

We’ve all been there — we just need a script or stored procedure to run and we’re under the gun to get it deployed. The problem here is that as soon as it works and passes QA — you do have QA, don’t you? — we call it a success and don’t look back. We don’t account for any kind of future growth in data size or user base. Instead of moving on, this is the perfect time to check the working copy into source control and then start refactoring to improve performance.

If we don’t revisit and revise our code, we end up with a server full of code that “ran well enough at the time” but now won’t scale. Future-proofing is never at the top of anyone’s list of priorities but it’s just like exercise and healthy eating. We all know we need to do it. It’s a matter of making the commitment to write leaner, healthier code before it clogs the SQL Server’s arteries or gives it a heart attack outright.

There is no better time to refactor than when you get it to meet requirements. If you don’t start refactoring right away, you’ll forget all of the nuance and context involved with the code, you probably won’t remember how/why you arrived at the code that’s there now, and you may not even get permission to spend time on it later. Future You will thank Present You for it.

THE FIX: Buffer your development time to include refactoring, and make sure you have a performance goal in mind. For example: “We need this procedure to return the top five recommendations for the specified account in under 1500 milliseconds.”

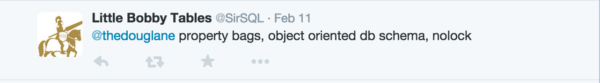

#2: NOLOCK

Do you care if your query results are wrong? No? Congratulations, NOLOCK might be right for you!

The trouble with NOLOCK is twofold: developers usually don’t fully understand the risks involving dirty reads, and when they do, they often leave it in code because it’s better to risk sketchy results than move back to the suburbs of Slowville.

There are appropriate circumstances for NOLOCK, but developers often add it blindly as a performance enhancer, not a legitimate solution.

THE FIX: If the risks that come with NOLOCK aren’t acceptable, you can usually fix those performance problems either with code or index changes. For example, if a SELECT and UPDATE are fighting over the same index and locking each other out, an index tailored to the SELECT statement will prevent it from waiting for the UPDATE to release its lock.

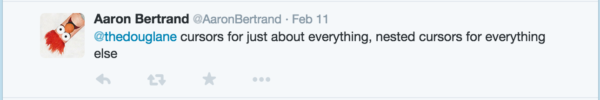

#3: CURSORS

Cursors do terrible, terrible things to performance. Every developer has this moment of enlightenment at some point. As soon as we learn cursors are performance killers, we hunt them down like a starving owl in a field of mice. We shouldn’t be embarrassed that we wrote them in the first place; we were solving the problem the best way we knew how at the time. Still, there’s a universal sense of shame when one developer sees another developer’s cursor, as if the neighbor passed by our open garage and spotted the recycling bin full of cheap beer cans and Cat Fancy magazines.

Like NOLOCK, there are times it’s appropriate to use a cursor, but those occasions are very rare.

THE FIX: Write code that operates in sets, rather than one row at a time.

HONORABLE MENTION:

- Overuse of dynamic SQL

- Security by obscurity

- No indexes

- Incorrect data types, leading to implicit conversions

- Not following their own best practices

- The same mistakes they made two years ago

- Hundreds of columns in a table

- Triggers

31 Comments. Leave new

What?! Triggers? Someone needs to elaborate. Your top 3 are my top 3.

Triggers aren’t universally bad. However, they add complexity both to the database design and the troubleshooting process. For example, say an INSTEAD OF trigger replaces the UPDATE statement in a stored proc. When you try to troubleshoot that proc, you see update statement B running, not statement A like you might expect. Where did statement B come from? Triggers don’t announce themselves so you have to hunt it down yourself.

Now imagine an environment with dozens of examples like that. It can get out of hand very quickly. That’s why most developers (in my experience anyway) try to avoid them if possible.

It’s the old “With great power somes great responsibility ” story. Makes more sense. Great article!

I look at triggers as deliberate side effects. And side effects are something I try to avoid, or otherwise minimize.

We have one of our primary vendor-provided databases completely dependent on trigger-based logic. I just ran select count(*) from sys.triggers….1286 of them. So sometimes you have to live with them, but it definitely does make troubleshooting interesting at times.

Also, it affects performance considerations…particularly when DML triggers are involved, sometimes large sets perform significantly worse than iterative small-set batching.

Still though, I love my logon rollback trigger for when clients connect to certain servers with Access! MUAHAHAHAHA

haha, that’s good! What do you actually do when they connect that way? We have a few users here who use Access to query some DB’s – but it’s just querying… no access for anything else…

It doesn’t get used much since we basically cut off access anyway on that server to people who tend to use Access, but it rolled the logon event back, literally…user gets error message, then logs details of the event to a table. Kind of evil, but then, so are some of those “SELECT ALL THE DATA” Access-based queries, too.

Our main prod db has numerous triggers that really are a good fit for what the app is trying to do. To help with the “What the heck did that?!” problem I have a note requirement where we list all affected tables in the procedure itself. That way I can do an easy search through routine definitions when something happens.

In line with your #1…testing code for performance with undersized data. You test an input process with a 100MB file when you expect a 10GB file in production? Bad form, but the answer isn’t to test with a 10GB file. Test with 100GB files.

I think developers sometimes approach performance testing with the idea that they should hope their code squeaks by, but if you want to produce code that sticks around for a while, you WANT to break it…you should want to expose the bottlenecks and problems, so that in two years when your code is being asked to handle 10x the data it was designed to handle (AND IT WILL BE), it doesn’t break much of a sweat. “Good enough” is often not.

That’s a good corollary to #1: your code will stay in use much longer than you planned.

I agree totally. I would extend it to include that your test data should be proportional as well. For example, we work with student data. So, a school district might have 20 school records, 30K student records, but over many years 300K contact records and millions of assessment and attendance records.

We also found that having reasonable data in them is important so that when you see results in your application, you can immediately tell if something is not right (16 year old students in 1st grade or students marked with ‘English’ as primary language being enrolled in ESL classes).

We’ve found that the Red-Gate data generator program is great for making data like this in a reasonable amount of time. There might be others out there, but that’s what we use. Worth its weight in gold (does software have weight? – you get the idea…)

Rob

Spinning databases up with a single file. Unlimited file growth but grow by 1mb set. And then carrying that forwards to production environments.

Deep sigh….

I once had a client with a process that was taking six hours to complete. When I looked at the SQL, they were using loops and temporary tables to cycle through thousands of records, performing the same operation on each. When I asked the developer why he implemented these loops, he replied “Because cursors are bad for performance.”

From that point forward I’ve tried to stress that it is LOOPS that are bad, and cursors are a form of looping logic.

I rewrote the process using set-based operations and it executed in under six minutes.

sqlblindman, I am fighting that one now. They have all three of Doug’s suggestions in a 900 line stored procedure that walks through a list of dates and applies code through sub-queries that have nested converts 3 layers deep on the date field they are searching through.

The problem I have is the claim that “It’s been running fine for three years and suddenly…” They were happy when it ran in 1 1/2 hours but now, it’s taken 5-6 and they don’t have time to retool the query. Their solution to management is to buy a bigger server.

Scalar UDFs killed me once. Writing code like a application developer instead of set-based queries.

Over recent years I have had several customers who love to do the following;

Create a view

that takes input from another view…

that takes input from another view…

that takes input from another view…

that takes input from another view…

The best was one with a stack of 17 views. Phew.

I agree with this one, but if you really want hell change those SQL views to Access queries. Have fun waiting 5+ minutes to get 10 results from three joined tables…

Oh I forgot about 200 lines of case statements as part of that 900 line behemoth.

I have also noticed that SQL Server migrated from 2000 to 2008 or higher version. More often team member not takes ownership of code to make appropriate changes using latest functionality. Mentality get set that code was working fine before why touch it and spend time, not realizing there are great built in future available to optimize code that improve server performance and improve application usage..

Too many indexes on a single table can also be problematic. And how about multiple mistakes rolled into one: a trigger which uses a cursor (or two) and uses the NOLOCK query hint as well. I’ve seen it. 🙁

Using only single-column-indexes in the hope that the SQL server will combine them magically in the right way to get a good performance

Example:

– pk_index on the UNID as varchar(32)

– index 1 on creation date

– index 2 on creation user

– index 3 on deleted flag

– index 4 on order date

– index 5 on customer id (as varchar(32))

…

queried with something like where customer_id = ‘xxxyyyzzzz’ and order_date > getdate() – 365 and deleted = 0

I would add non SARGable queries to that list…as well as overuse of UDFs

ADO.NET’s SqlCommand.Parameters.AddWithValue(). It’s just all sorts of awesome with varchar columns.

For a good example of “Not following their own best practices,” see every Microsoft System Center product.

You nailed it with this one, Brent!

When I read item #1 it reminded me of a quote that an old COBOL instructor of mine had displayed on his office door about 25 years ago: “If we don’t have the time to do it right, when will we ever find the time to do it over?”

In my opinion, it’s better to do something right the first time rather than do it over and end up having to do it twice.

This reminds me of my favorite saying: If I look back on code that I’ve written in the past and don’t say “What was I thinking?!” or “I should have done it this way…” then I haven’t learned anything.

So my answer would change day to day depending on what I was working on. 🙂

Is there a chance to find who created a particular stored procedure?

Sounds like the real problem is code not being written or reviewed by properly trained software engineers. Where are the standards? Is there a good book written on this subject?

Murphy’s law: Recent significant research in this area has been conducted by members of the American Dialect Society. Society member Stephen Goranson has found a version of the law, not yet generalized or bearing that name, in a report by Alfred Holt at an 1877 meeting of an engineering society.

It is found that anything that can go wrong at sea generally does go wrong sooner or later, so it is not to be wondered that owners prefer the safe to the scientific …. Sufficient stress can hardly be laid on the advantages of simplicity. The human factor cannot be safely neglected in planning machinery. If attention is to be obtained, the engine must be such that the engineer will be disposed to attend to it.

And since when is Cat Fancy magazine a source of shame?! Haters gonna hate, I guess…

My personal fave is creating an IDENTITY column on every frickin’ table and using that as the clustered PK – even on child tables that are NEVER queried alone. Which leads to a couple dozen ginormous covering indexes to cover up the fact that you screwed the pooch on the clustering to begin with…

Are people still using NOLOCK? I’ve been hearing NOLOCK has been bad for close to 20 years now and I haven’t seen a NOLOCK used except in very old code. I have scripts I run that check for things like NOLOCK and that code won’t pass a peer view thus never making it into production code. I have written basic rules like avoiding cursors, NOLOCK, and other bad habits into coding standards for some time now. None of these bad habits make my top 3 because we break those habits with new developers from the very beginning.

I’ve seen it at 3 different companies in the last 3 weeks.