Everything You Know About Clustering is Wrong

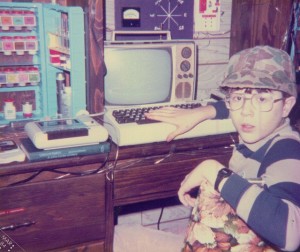

Remember that weirdo in high school who had no social skills? Couldn’t get a date, worked at the fast food joint as a fry cook, face covered with zits – you know the one.

Okay, actually, it was us. Anyway, the point is, we got our act together, didn’t we? So did Windows Failover Clustering. When you weren’t looking, Windows Server 2008 cleaned up its clustering, and now it’s the new hotness that gets all the dates. It’s time to revisit what you thought you knew about Windows clusters.

Clusters Require Identical Hardware and Configuration

When I was your age, I had to look up every single piece of server hardware on the Windows Hardware Compatibility List (HCL) to make sure it was tested and approved. I either had to buy approved clusters as a package, or assemble them from detailed hardware lists. The new servers I wanted were never on the HCL, or they were way too expensive. Even when I got the goods, I had to assemble everything and then just hope it worked right. Inevitably, it didn’t, but the hardware usually wasn’t to blame – it was my own stupidity.

With Windows Server 2008 and newer, you can slap together pretty much any old hardware, run the Validate a Cluster wizard, and know right away that…uh, you’ve got a lot of work to do. I know you’ve got passionate feelings about how wizards are evil, but the Validate a Cluster wizard is AWESOME. It tests just about all of the requirements for a failover cluster and gives you a simple report of what’s broken and why you need to fix it.

You don’t need identical hardware on each node anymore – not by a long shot – but the configuration rules are still really, really specific. Some rules are guidelines (like the suggestion of multiple network cards to mitigate risks of a patch cable coming loose) but some are outright requirements.

See, this is one of my favorite things about the wizard: by default, if your cluster doesn’t pass the validation wizard, SQL Server won’t install. This is a DBA’s best friend in the war for systems excellence. If your company has separate Windows, storage, and networking teams, you can run the wizard before installing SQL Server. If it doesn’t pass, you can shrug, pass the ball back to the other teams to get the setup right, and work with them to get ‘er done.

Clusters Need a Heartbeat Network

Cluster nodes used to keep tabs on each other, and if they couldn’t reach a node, they’d freak out. To minimize the freakiness, we used a separate heartbeat network that didn’t handle any other traffic than just cluster chatter. In simple two-node clusters, this was often done by running a crossover cable between the two nodes, which even eliminated the possibility of switch failures. This was a giant pain, and almost nobody got the configuration quite right – 258750 was one of the few Microsoft knowledge base article numbers I actually knew by heart.

Windows Server 2008’s failover cluster networking is less freaky and more friendly: it’ll use whatever networks it can to reach the other nodes. This has its own drawbacks – we need to make sure that any node can reach any other node over any available network, and we need to make sure that all of our networks are highly available. That highly available part is key – preferably we’ve got two network cards in a teamed pair, and we test before go-live to make sure the cluster stays up if a patch cable goes down.

Clusters Require Shared Storage (SAN)

The entire history of Windows clustering has revolved around a shared set of drives that all of the nodes could access. If one server crashed, another node reset the drive ownership, took control, and fired up SQL Server.

You can still build shared storage failover clusters, but Windows Server 2008 and 2012 both manage to run some clustered applications with no shared storage devices. The app has to be designed to work without shared storage, like SQL Server 2012’s new AlwaysOn Availability Groups. Heck, we can even fake-out traditional shared-disk clustering solutions by using UNC paths for our databases. Jonathan Kehayias wrote an in-depth tutorial on how to build a SQL Server cluster on a NAS.

Cluster Quorums Manage Themselves

Back in the days of the quorum drive, all of the cluster nodes got together and decided who was boss simply based on who could see the quorum drive on shared storage. We could move the cluster ownership around by passing the quorum drive around.

Today, since we don’t necessarily have shared storage, we can’t rely on a quorum drive. Windows Server now offers a variety of quorum options including node majority, node and disk majority, and my favorite at the moment, node and file share majority. This means a file share can act as a voting member of the team, enabling two-node clusters with no shared storage.

Configuring quorum – especially managing non-voting members of the quorum – is a tricky but necessary part of building a solid cluster. I’ve already helped a few folks bring their clusters back online after they accidentally took the whole thing down due to rebooting just one (seemingly) passive node. We have to understand our cluster’s quorum method, document what happens if one of the members is rebooted, and ensure that all team members know what needs to happen during patch windows.
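To make the "one reboot took the whole thing down" scenario concrete, here’s a minimal sketch of majority voting in Python. It isn’t how Windows implements quorum: the member names and the `has_quorum` helper are made up for illustration, each member’s vote weight is assumed to be 0 or 1, and it ignores the dynamic vote adjustments that newer Windows Server versions can make. The only rule it encodes is that the cluster stays up when the online members hold more than half of the configured votes.

```python
from typing import Dict, Set


def has_quorum(votes: Dict[str, int], online: Set[str]) -> bool:
    """Return True when the online members hold a strict majority of all votes."""
    total_votes = sum(votes.values())
    online_votes = sum(weight for member, weight in votes.items() if member in online)
    # Strict majority: exactly half the votes is not enough.
    return 2 * online_votes > total_votes


# Hypothetical two-node cluster with no witness: each node has one vote.
two_node = {"node1": 1, "node2": 1}

# The same cluster plus a file share acting as a third voter.
with_file_share = {"node1": 1, "node2": 1, "fileshare": 1}

# Hypothetical cluster with a non-voting member (weight 0).
with_non_voter = {"node1": 1, "node2": 1, "dr_node": 0}

if __name__ == "__main__":
    # Reboot node2 in each layout and see whether the cluster survives.
    print(has_quorum(two_node, {"node1"}))                      # False: 1 of 2 votes
    print(has_quorum(with_file_share, {"node1", "fileshare"}))  # True: 2 of 3 votes
    print(has_quorum(with_non_voter, {"node1", "dr_node"}))     # False: 1 of 2 votes
```

The last case is the sneaky one: three machines are in the cluster, but only two of them vote, so losing either voter drops you to exactly half. Running a table like this for your own layout is a cheap way to document what happens before the next patch window.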

Cluster Management is Painful and Obscure

If you’ve had the misfortune of memorizing cluster.exe commands just to get your job done, raise your hand. No, wait, put your finger back down, that’s not appropriate. This is a family web site, and we don’t need to hear your horror stories about reading obscure knowledge base articles in the dead of night.

Unfortunately, the bad news is that this particular point is still true. You’re still going to be managing clusters at the command line.

The good news is that for the most part, you can use PowerShell instead of cluster.exe. This means that as you learn to manage clusters, you’ll also be learning a language that can be used to manage more SQL Servers simultaneously, plus Windows, VMware, Exchange, and lots of other things that you probably didn’t want to have to learn. Okay, so that’s also still kinda bad news – but the good news is that sysadmins will find cluster management more intuitive, because they can use the language they already know.

More Microsoft SQL Server Clustering Resources

Whether you want help choosing between an active/passive and an active/active cluster, or if you’re the kind of DBA who knows that’s not even the right name for failover clustered instances anymore, check out our SQL Server clustering training page.


Hi! I’m Brent Ozar.

I make Microsoft SQL Server go faster. I love teaching, travel, cars, and laughing. I’m based out of Las Vegas. He/him. I teach SQL Server training classes, or if you haven’t got time for the pain, I’m available for consulting too.


59 Comments

Clustering has come a long way from NT indeed – now you can have a cluster on your PC (if the CPU supports virtualization); all it takes is Hyper-V and 3-4 VMs.

The “IF” is not necessary (under VBox, use only 32-bit OS versions and run your VMs as you’d like).

Nice post, Brent! As you said, clusters are way too easy to set up and manage with 2008.

Adding a disk as a dependency for a resource (say, SQL) without recycling the service was one of the coolest things to happen starting with 2008 🙂

I love that Brent is pushing PowerShell. Did not think I would ever see the day. Great article and timely as I think I will be building a new SQL Server cluster very shortly. Thanks for all the great info you put out.

I’ve only worked with SQL clustering since Server 2003/SQL 2005 but I can attest to the facts you’ve laid out in this post. You mentioned that admin is still painful and I’d stress that folks shouldn’t assume that easy setup means easy maintenance. Without going into great detail many admin tasks are pretty simple (GUI or PS) once things are set up but you can get deep in the weeds very quickly. I had a very painful 48 hrs over a weekend a few months back that started with a fairly simple operation of removing a disk resource from a clustered instance of SQL Server. I now have a facial tic and an obvious limp 😉

Will,

I had the same thing a while back on a SQL cluster. The cluster still works, but some resources are only visible via PowerShell/CLI. The GUI does not show some resources. Little things like SQL Server!

Thanks for the ever consistent valuable articles.

You crack me up.

Hi Brent,

I have a question regarding the virtual file latency for our production database. I pulled up some stats using the DMV sys.dm_io_virtual_file_stats and found high read and write latencies for the MDF file placed on a local physical machine partition. We checked for bad sectors and did not find any. Also, we have a lot of free space. We performed defragmentation and re-indexing jobs, but the production MDF file latencies are still high. Other MDFs on the same physical partition have fine values for virtual file stats. Could you provide your opinion here? Thanks.

Abhinav – does your production MDF have significantly *more* reads and writes than the other files? Are the other databases simply not doing that much activity? Or it may also have to do with the time of day when the slow reads and writes are happening.

Hi Brent,

Yes, since this is our production database, this MDF does have a high read and write load compared to other databases. But using the DMV, the read latency I got was around 65 and the write latency around 270. Is that normal? I compared this to another database on the same partition which, although not used as heavily as this one, had values of 10 and 15 respectively.

Thanks for helping out.

That sounds pretty high on the write latency. Generally I get worried if I see write latencies over 100ms. If you’d like consulting help with this, click the Contact link at the top of the page and we can work with you on a SQL Server health check to narrow down the root cause, or check out the storage chapter that I wrote in the book Pro SQL Server 2008 Internals and Troubleshooting.

Thanks Brent, I have that book, will check that chapter again to seek answers.

Great post, Master Brent. One thing that a lot of people implementing Windows Server 2008 failover clusters need to understand is that just because it is supported to have a single network adapter on each node, doesn’t mean it’s OK. I’ve dealt with customers who have highly critical databases running on clusters with only ONE network card. Since the Failover Cluster Validation Wizard simply flags this as a Warning and not an Error, they go ahead and build the cluster anyway. They forgot about the reason why they have the cluster in the first place – high availability. Even if you have multiple NICs per node that are teamed up, how sure are we that the network switches are redundant and highly available? I’ve seen DR exercises where only the servers are tested but not the underlying network architecture. Only when the network switches themselves fail do they realize that they are not at all highly available. I still recommend having a dedicated network for the heartbeat communication, and if the customer can guarantee that the network layer is highly available, then I’ll be happy with a NIC teaming implementation.

Edwin – thanks, sir! Yep, when it really counts, you want redundancy everywhere. Same thing with redundant power distribution units in the datacenter – ideally, I want every server plugged into separate ones, running off separate battery supplies, etc.

I’m with you on having redundancy when it really counts. Unfortunately, most IT professionals don’t think beyond the scope of their job responsibilities. Things like HVAC, power sources, documentation, etc. should be part of the entire HA/DR stack. I remember dealing with a cluster that blew up simply because the AC broke down and turned the temperature up in the datacenter. We had to bring in industrial fans and power down non-production, non-critical servers just to keep the temperature down a bit. This was the driving motivation behind my 24HoP presentation last year

http://www.sqlpass.org/24hours/fall2011/SessionsbySchedule/DisasterRecoveryIsNotJustAboutTechnology.aspx

Hi

Thanks for the nice post. I have been using a disk cluster for many years for an Oracle database and for server redundancy. Now we are thinking of using Windows 2012 but eliminating the disk cluster. Is it possible to have server redundancy without server? And how about the shared storage where we need to put the Oracle database?

Thanks,

Hello,

I think I may not have understood your question completely, but I’ll give it a shot.

If you’re asking if you can have redundancy for a SQL Server without shared storage, the answer is yes– check out Brent’s video on high availability and disaster recovery for SQL Server here: https://www.brentozar.com/go/fail/

If you’re asking about clustering Oracle, I can’t help on that one!

Thanks for your comment. Actually, it is for Oracle 11g Standard Edition, not SQL Server. Do you have a link on building server redundancy (forget about Oracle for now) without a disk cluster? I will start from there!

Thanks

How much does it cost to install cluster server for a company with less than 100 people?

Lyang – well, cluster installation costs don’t usually depend on the number of people, but more on the size of the application, the requirements for Recovery Point Objective (RPO) and Recovery Time Objective (RTO), scale-out needs, number of nodes, and so forth.

Thanks for this article, I enjoyed reading.

I am a bit late to this POST.

I wonder when exactly Microsoft will launch the final release of its CLUSTERING !

I would like to add that clustering is not the ultimate solution for our business continuity…better is backup.

GEO~

Geo – backups are only a part of the solution. After all, if you experience an outage, you can’t usually wait for a restore to finish before the business comes back online. 😀

Nice article. One question: I only need to restart the server that acts as the passive node. Do I need to take extra precautions, or can I just restart the server and trust that the clustering service will deal with it properly?

It’s usually not a big deal to reboot the idle node (as long as it’s really idle and you aren’t just missing an active service on it). Personally I still save that work for a reserved downtime whenever possible.

If it has to be done in an emergency situation, I let people know that for the duration of the work high availability will be briefly suspended, and that while an outage isn’t planned, things can always get wacky.

I’m paranoid like that because I’ve had problems getting servers to come up after reboots and I’ve just generally learned to expect the unexpected.

Hi,

We are planning on expanding our current cluster in the next 6-12 months by adding nodes on a different (new) subnet than the one we are currently on. We are on Windows Server 2008 R2 SP1 and have implemented AlwaysOn AGs for our databases.

After watching Brent’s recent webcast on “AlwaysOn Lessons Learned” I realized we made a mistake not going with Windows Server 2012 but it is too late to undo this.

Going forward with our cluster expansion, can we build the new nodes on Win 2012?

Is it possible to have some nodes on Win 2008 R2 and some on Win 2012?

I’m guessing this is not supported but was wondering what are people’s thoughts on this.

Thank you,

Marios Philippopoulos

Brent and Kendra,

I am very new to writing blogs. But read the SQL Server blogs regularly.

Fantastic effort you put into the SQL Server industry by way of providing the information and the free tools, which are helpful to the thousands of people who use SQL Server.

I recommended Blitz for our production environment and we implemented it on almost all of our production servers. It reduced our manual work tremendously, and we have started to focus on clustering and other high availability work now.

Thanks again for your wonderful service to the SQL World!

Hi Brent,

I have a doubt, when by any case, cluster is broken, then I guess we can revisit and find the last status of the cluster through cluster hive which is stored in C:\.

If that is the case, can you please help me with the path? I could not find it on the node.

If not, is there a way where we can get last status of the cluster.

Hi There,

First of all great articles Brent…they have helped me on many occasions 🙂

OK, I have a quick question: I am doing some cluster failover testing, and when I switch a node off, the other node comes up and everything works perfectly.

Now I wanted to push the failover testing a little further and simulate a network failure, so I unplugged the network on node one (primary), but unlike a machine switch-off, Node 2 does not take over and in fact just screams that it can’t see the other node.

I’m sure this is something very stupid, but I wondered if anyone has any ideas on how to deal with a network failure in a clustered environment?

Big thanks,

Steve

Steve – sure, your network administrator can “black hole” a particular port so that it’s still online, but it can’t see any other nodes. Examples of doing this include putting it on a different subnet.

Hi Brent,

Thanks for the quick reply.

I should mention a little about the setup…

* Two subnets

* Windows 2012

* SQL 2012 AlwaysOn

In this example Firewall is switched off on all machines

Node01 = 01srv01 – sites on subnet 1

Node02 = 02srv01 – sites on subnet 2

ClusterName – 00srv01

I am using VMs to do this (on an ESX box). I have consoled onto the Node01 machine and disabled the network (what I felt was the nearest thing to unplugging a network cable 🙂 ); on Node02 I can’t ping Node01.

I have full control over the N/W and VMs so can tweak as required…confess I am not a big N/W expert but can get my way round most things.

Oh, one more thing: I have done a PowerShell trick from Symon Perriman at MS to add RegisterAllProvidersIP.

Thanks Steve

Hi Brent,

I fixed it and the issue was due to Quorum.

I set up a quorum witness (file share) and it worked fine.

I’m going to investigate this more but now testing going well.

Thanks,

Steve

Hi,

I have a question. I have an active/active two-node SQL 2008 R2 cluster running on Server 2008 R2. Now, due to some business changes, I want to make this a multi-site cluster, but I want to keep the SQL instance names the same (saves me having to change a lot of configs). I have five SQL instances.

At the moment the two nodes are attached to shared storage, so in a multi site cluster I will need similar storage at the other site, which I will purchase.

So can this be done? Can I change the cluster to introduce another node and make the cluster a multi site cluster and keep the SQL instance names?

Thanks in advance

Jason – ooo, that’s a big complex question that’s kinda outside the scope of a blog comment. It’s an architectural question that involves a lot of work. I’ll be honest – I wouldn’t want to take an existing Win2008R2 cluster and turn it into a multi-site cluster – when things are critical enough to go geoclustering, then it’s time to get onto Windows Server 2012R2. Kendra’s blogged about some of the awesome changes here:

https://www.brentozar.com/archive/2014/03/need-high-availability-sql-server-windows-server-2012/

Thanks Brent, after reading about it a bit more and realizing I must be the only person wanting to do this (lack of google hits…), I came to the same conclusion that upgrading to Server 2012 R2 would be the way to go and more sensible!

Hi Brent,

I read there is no requirement to configure MSDTC from Windows server 2008 onwards under clustering as DTC service runs locally on the nodes.

Could you please confirm the same ?

Regards,

UPENDRA

Upendra – interesting, where did you read that?

I read at below link

http://blogs.msdn.com/b/alwaysonpro/archive/2014/01/15/msdtc-recommendations-on-sql-failover-cluster.aspx

Please confirm from your side if it is correct.

Regards,

UPENDRA

Upendra – great, now reread that post *carefully*, because it doesn’t say “there is no requirement to configure MSDTC.” I know it’s a long post, but it’s long for a reason.

Brent,

I see as below

Do I Need to Cluster MSDTC after SQL installation?

With Windows 2008 Failover cluster and later you do not need to cluster MSDTC to utilize the functionality of the MSDTC service. This is because MSDTC was re-designed in Windows 2008 and unlike Windows 2003 if Windows Failover Cluster was installed you had to cluster MSDTC. This is no longer the case when using Windows 2008, since by default MSDTC service is running locally, even with Failover Clustering installed.

Currently there is some documentation that states you need to have a MSDTC Resource in your cluster.

I want to start out by emphasizing that the statement “You must install MSDTC is not 100 percent accurate.” The statement does not take into account the OS and the changes with MSDTC in Windows 2008 and later.

Regards,

UPENDRA

Upendra – you need to read the ***whole*** post. Thanks.

Hey, how can I get rid of the following error: “cluster shared disk available check failed”?

I need to set up a testing environment, and I am not going to use any external storage.

Is there any alternate way to use local disk as storage and avoid this error?

Kailas – kinda sorta:

https://www.sqlskills.com/blogs/jonathan/failover-clustering-without-a-san-sql-server-2012-and-smb-for-shared-storage/

Kindly confirm whether the number of IPs specified below is sufficient:

Current Setup :

Windows 2012 R2 Server

SQL server 2014 Servers

2 node Single instance failover cluster (Active/passive)

• 1 IP for Windows Cluster

• 1 IP for SQL Server Virtual Network Name

• 1 IP for public network

• 1 IP for heartbeat network (Private)

• 2 IP for the Windows physical servers( 2 servers)

Pushpa – personalized cluster configuration is a little beyond what we can do in an unrelated blog post comment.

Hi Brent,

I have a problem with the WSFC configuration in my AlwaysOn setup. I have used one public network for a two-node cluster. After some network delay, nodes in the same subnet were removed from active cluster membership and rejoined the cluster group once they got network connections back. Due to this, my cluster fails over very often. Once it reaches the failover count threshold, the cluster role stops.

I have increased the cluster subnet threshold from 5 to 20. Still, I need clarification: is a heartbeat network a must for a WSFC configuration for AlwaysOn?

Selvakumar – you’ll want a very reliable network for AlwaysOn AGs (or anything else that relies on Windows Server failover clustering.) We’ve written about that here: https://www.brentozar.com/archive/2012/06/why-your-sql-servers-network-connection-matters/

I have a SQL Cluster running in Windows 2012 server. I want to remove an existing Mount Volume Point from the SQL cluster. I am planning to do the below steps. Please let me know if it is correct.

1. Remove the dependencies of MVP on SQL cluster.

2. Bring MVP offline.

3. Remove MVP from SQL Cluster.

4. Finally remove it from Cluster storage.

Arju – for questions, head over to http://DBA.StackExchange.com.

I have a SQL 2014 Windows 2012 AlwaysOn cluster. Is it still recommended to use a HeartBeat Network with AlwaysOn?

Wory – please read the post.

Mr. Brent, do you recommend to use a different subnet for heartbeat network or can I use same subnet to keep firewall simple to manage? The network is already redundant and heartbeat network is going to be over the same.

Sohail – read the post.

Thank you. I got it this time.

I have a two-node cluster with a quorum disk. I recently applied Windows patches as well as SQL 2008 R2 SP3. Afterward, we’re unable to move the cluster resource (MSSQLSERVER) to the passive node. Whenever I start to move it, it automatically comes back to the original node with two events, IDs 1069 and 1205.

Nirbhay – for support questions, your best bet is to call Microsoft or open a question at https://dba.stackexchange.com.

Hello Brent! I actually have no question, just wanted to say I’m impressed that an author actually takes the time to respond to a fair amount of comments, even years after the original article was published. Congratulations, a superb job in both content and service.

Thanks!

What will be the impact of having an 8-node cluster rather than a 2-node cluster?

Madhusudan – the pros and cons of an 8-node cluster design are kinda beyond what I can do in a blog post comment, unfortunately. An infrastructure that complex is where consulting comes in.