March 31st is World Backup Day, and while most of the press will be focusing on backing up your priceless lolcat photos, it’s still a good time to talk with management about your database backups.

Your boss will have some simple questions, and you can arm yourself with a few easy queries.

Are We Backing Up Every Database?

Even if you think your backups are running successfully, you have to double-check. I had a client recently who’d copy/pasted jobs across several different servers and databases. While editing one of the jobs, someone made a typo, and they were backing up the master database instead of the user databases. The backups worked, ran successfully every night, but weren’t actually backing up the data that people really cared about.

This query will list the last full backup for all of your databases, and like all of the queries in this post, the results should come back nearly instantaneously with no blocking. They’re completely safe to run in production.

|

1 2 3 4 5 6 |

SELECT d.name, MAX(b.backup_finish_date) AS last_backup_finish_date FROM master.sys.databases d WITH (NOLOCK) LEFT OUTER JOIN msdb.dbo.backupset b WITH (NOLOCK) ON d.name = b.database_name AND b.type = 'D' WHERE d.name <> 'tempdb' GROUP BY d.name ORDER BY 2 |

Review that query result and double-check – then ask, “Do I really need to back up all of these databases?” If you’ve got the demo databases AdventureWorks, Northwind, or pubs on your server, and you’re backing them up daily, you’re wasting resources. Get ’em off your production servers.

Are Our Backups Fast Enough?

In the msdb database, SQL Server saves backup size and duration for all backups. We can use those two numbers, we can use the power of math to get throughput:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

SELECT @@SERVERNAME AS ServerName , YEAR(backup_finish_date) AS backup_year , MONTH(backup_finish_date) AS backup_month , CAST(AVG(( backup_size / ( DATEDIFF(ss, bset.backup_start_date, bset.backup_finish_date) ) / 1048576 )) AS INT) AS throughput_MB_sec_avg , CAST(MIN(( backup_size / ( DATEDIFF(ss, bset.backup_start_date, bset.backup_finish_date) ) / 1048576 )) AS INT) AS throughput_MB_sec_min , CAST(MAX(( backup_size / ( DATEDIFF(ss, bset.backup_start_date, bset.backup_finish_date) ) / 1048576 )) AS INT) AS throughput_MB_sec_max FROM msdb.dbo.backupset bset WHERE bset.type = 'D' /* full backups only */ AND bset.backup_size > 5368709120 /* 5GB or larger */ AND DATEDIFF(ss, bset.backup_start_date, bset.backup_finish_date) > 1 /* backups lasting over a second */ GROUP BY YEAR(backup_finish_date) , MONTH(backup_finish_date) ORDER BY @@SERVERNAME , YEAR(backup_finish_date) DESC , MONTH(backup_finish_date) DESC |

The awesome part of this query is that it’s not measuring backup duration alone – sure, backups run longer as you add more data. This query’s actually measuring backup throughput, meaning how fast the backups can get data out.

When I review the results of this query, I focus on the throughput_mb_sec_avg field and look for big drops (or increases) over time. If backup speed dropped by 30% in January, I start asking questions about what network or storage changes we made at that time.

Compare that number to the bandwidth rates in Kendra Little’s “How Big Is Your Pipe?” bandwidth reference poster, and you’ll get a rough idea for comparison. If you can’t get at least the throughput of a 1Gb Ethernet connection, it’s time to start talking to your storage and network admins about teamed network cards, RAID 10 backup targets, and how to get shorter maintenance windows with backup compression.

Are We Backing Up Corrupt Data?

Just because your backups are succeeding doesn’t mean you’re backing up legit data. The backup process doesn’t do anything like the DBCC CHECKDB process, which checks that the data on disk actually makes sense.

I really, really care about this because if you get a data corruption error, you may have to restore the most recent database backup and see if the data’s corrupt there too. If it is, step back and restore the full backup from the day before that – and the day before that – and the day before that. If you’re lucky, you’ve got a copy of the data from before the IO corruption occurred. If you’re unlucky, you don’t, and then you start looking for an uncorrupted copy of your resume.

The key to success: run DBCC more often than you expire backups. If you only keep 7 days of backups, then you should run DBCC more frequently than that. If you only run DBCC once a month, you won’t find corruption until long after the clean backups are gone.

Here’s how to see the last time DBCC CHECKDB finished successfully on each database (for SQL Server 2005 and newer, and must be run in a database in compatibility level 90 or higher):

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 |

CREATE TABLE #temp ( ParentObject VARCHAR(255) , [Object] VARCHAR(255) , Field VARCHAR(255) , [Value] VARCHAR(255) ) CREATE TABLE #DBCCResults ( ServerName VARCHAR(255) , DBName VARCHAR(255) , LastCleanDBCCDate DATETIME ) EXEC master.dbo.sp_MSforeachdb @command1 = 'USE [?]; INSERT INTO #temp EXECUTE (''DBCC DBINFO WITH TABLERESULTS'')', @command2 = 'INSERT INTO #DBCCResults SELECT @@SERVERNAME, ''?'', Value FROM #temp WHERE Field = ''dbi_dbccLastKnownGood''', @command3 = 'TRUNCATE TABLE #temp'; --Delete duplicates due to a bug in SQL Server 2008 WITH DBCC_CTE AS ( SELECT ROW_NUMBER() OVER ( PARTITION BY ServerName, DBName, LastCleanDBCCDate ORDER BY LastCleanDBCCDate ) RowID FROM #DBCCResults ) DELETE FROM DBCC_CTE WHERE RowID > 1 ; SELECT ServerName , DBName , CASE LastCleanDBCCDate WHEN '1900-01-01 00:00:00.000' THEN 'Never ran DBCC CHECKDB' ELSE CAST(LastCleanDBCCDate AS VARCHAR) END AS LastCleanDBCCDate FROM #DBCCResults ORDER BY 1, 2, 3; DROP TABLE #temp, #DBCCResults ; |

If you’re not happy with the results of this query, it’s time to start running DBCC CHECKDB more often. If you’re unable to run it in production due to performance/uptime issues, consider restoring your databases at least once a week to a development or QA or disaster recovery server, and run DBCC CHECKDB there. Some backup products even help schedule this for you.

Are The Backups Making It to Tape?

If you’re backing up to disk, and the network admins are backing up your disks to tape, … well, are they? How can you be sure?

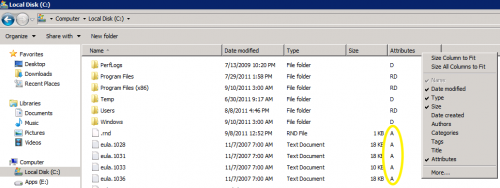

The first step is to check the archive flag. Open Windows Explorer and navigate to the folder where you’re writing backups. Right-click on the bar where Name, Date Modified, and Type are shown, and click More. Choose the Attributes column, and your Explorer window will look something like this:

If you see an “A” in the Attributes column, that means the Archive bit is set – the file is ready to be archived. When your backup software runs and sweeps these files to tape, it resets the Archive bit to off, thereby telling you that your file no longer needs to be archived. If you see A bits on database backups from yesterday, that tells you they haven’t made it to tape yet, and it’s time to start asking questions about backup schedules.

40 Comments. Leave new

Great post. This will help me better plan my upcoming backup planning project.

However, doesn’t the first query show all databases and their last backup dates, not just the ones that haven’t been backed up in the past 7 days?

I tossed a having clause in there and got the specified results, but I think I like seeing the full set anyways.

Jason – great catch! I was thinking of our Blitz script when I wrote the words, and I fixed those to reflect that it’s all databases. Thanks!

I am still new with SQL but query to check last DBCC check has some errors… I tried to parse query and got the following error

Msg 102, Level 15, State 1, Line 29

Incorrect syntax near ‘;’.

Please let me know if I am doing something wrong

Rizwan – make sure you’re running that on a database in compatibility level 90 (SQL 2005) or newer. That query won’t work in SQL Server 2000, which is out of support.

Hi Brent,

I am currently running it on SQL 2008 (10.0.2710), and running against master DB.

Thanks!

Brent:

I got the same error. I changed line 29 from:

WHERE RowID > 1 ;

to

WHERE RowID > 1 ;

and it seems to run OK now.

Ah, the joys of SQL in HTML! Thanks guys, fixed.

That is, I changed “& g t ;” to “>”.

That passed parse but now getting following error

Msg 208, Level 16, State 0, Line 1

Invalid object name ‘#temp’.

Msg 208, Level 16, State 0, Line 1

Invalid object name ‘#temp’.

Msg 4701, Level 16, State 1, Line 1

Cannot find the object “#temp” because it does not exist or you do not have permissions.

Any suggestions… I have verified that I have sa rights on the server.

Thanks!

I have resolved the issue.. thanks for the great article… the results are very valuable

Found same issue with script for backup speed…

Line 15 and Line 16 need to switch “>” to “>”

dang it… I meant change “& gt ;” to “>”

Hey Brent, great post as usual!Thanks for all the information!

Ran into an issue with the last DBCC ran script on my 2008 R2 server. (10.50.1600). It breaks during the sp_MSforeachdb command. I have a database named [SharePoint_AdminContent_9bb5f3f3-d7bd-4da1-8eda-c777296e1e9e], but the error says:

“Msg 911, Level 16, State 1, Line 1

Database ‘SharePoint_AdminContent_9bb5f3f3’ does not exist. Make sure that the name is entered correctly.”

So, for some reason it’s not picking up the entire db name for this database.

Ooo, yeah, I can see why. In the USE statement, I wasn’t enclosing the database name in []. Try now.

Yeah that works perfect

As usual very good advice.

On a related subject I find it’s helpful to direct DBCC CHECKDB results to a table as a good way to keep your own history. This way you can plonk a simple report on top of the table that searches for the ‘CHECKDB found%’ string

That way you can give who you like access to the line “CHECKDB found x allocation errors and x consistency errors in database ‘mydb’ ” for all databases for as long as you’ve been running checkdb

So even your manager can check you’re ok (or not) now.

had to laugh, the sharepoint_AdminContent database got me too. what kind of name is that microsoft!?!

Great article, well written and funny as always 🙂

Another one for my bookmarks.

Thanks Brent, very informative and entertaining at the same time.

A couple of extra things I noticed.

I run checks against a VLDB by running CheckFilegroup. This sets the last date value, but does not mean that the entire DB has been checked.

Also I run against a user created snapshot which means that the date in the original DB never gets set.

Thanks again.

Paul.

Paul – yep, if you run DBCC against your own snapshot, that won’t set the database’s date. Make sure to be really, really careful that you’re capturing errors successfully – I recently worked with a client who was doing this, and their error handling script wasn’t working successfully. It kept reporting that the job had succeeded, but DBCC was hurling all over the place. Take care!

Thanks Brent, I use the ever so useful, but ever so undocumented (why?) with tableresults option and dump the errors to a table.

Of course, the same issue with the date not being set happens with read-only databases as well. We have several, and it gave me a bit of a shock before I realized what was up.

Was comparing my speed with those on Kendra’s chart..May I suggest one change? Your script reports speeds in megabytes per second (if I’m understanding the divide by 1048576 correctly). Kendra’s poster uses both gigabits (Gb) and gigabytes (GB). The lowercase mb in your column names might lead some to believe these are megabit speeds, not megabyte speeds. I find this is always a bit source of confusion when talking network speeds, especially with the network guys. And also.. Great script!!

Shaun – sure, changed it. I usually lowercase my fields like that, and that has a funny side effect here. Thanks!

Backups of the data are a good step in the right direction, but for full DR you also need server system state data, application files and comprehensive system configuration documentation to enable any server to be rebuilt outside its normal environment.

John – yep, absolutely, great point. I’m just trying to get folks started with baby steps. 🙂

Thanks for the the backup throughput query, I get a lot of mileage out of it. What factors within_a_database might cause it to back up at 1/10th the speed of other DBs on same server (DBs on same disks, backing up to same remote location, no change in contention, etc). Could backup compression software – a la Quest/Idera – impact one database more than another?

Stephen – yep, different types of data within a database could affect it, as well as timing of the backup. For example, I had a client who backed up databases in alphabetical order. The A-M databases went fast, but by the time we got midway into the alphabet, another server’s backup jobs had started kicking in, writing data to the same target location. Backups slowed down dramatically then.

Turns out the extremely-slow-to-backup database was dominated by one table, which has a varbinary(max) column. That seems to not compress very well. Result; slower and larger backups when compared to databases not dominated by varbinary(max) data.

Great article Brent — still valid 2.5 years later!

One issue with the first query, however. It includes databases which were restored from another server.

A slight modification will filter the resultset down to only databases backed up on this server:

SELECT d.name, MAX(b.backup_finish_date) AS last_backup_finish_date

FROM master.sys.databases d WITH (NOLOCK)

LEFT OUTER JOIN msdb.dbo.backupset b WITH (NOLOCK) ON d.name = b.database_name AND b.type = ‘D’

WHERE d.name ‘tempdb’

AND server_name = @@servername

GROUP BY d.name

ORDER BY 2

As always very nice work and thanks for sharing..

Found it to be useful article. But I couldn’t figure out why I get different result in duration than SQL server logged as backup duration by running the following code after making minor adjustment.

SELECT @@SERVERNAME AS ServerName ,

YEAR(backup_finish_date) AS backup_year ,

MONTH(backup_finish_date) AS backup_month ,

DAY(backup_finish_date) AS backup_day,

SUM(DATEDIFF(s, backup_start_date, backup_finish_date)) AS [TimeinSeconds],

SUM(BACKUP_SIZE/ 1048576) AS Backup_size,

CAST(AVG(( backup_size / ( DATEDIFF(ss, bset.backup_start_date,

bset.backup_finish_date) )

/ 1048576 )) AS INT) AS throughput_MB_sec_avg ,

CAST(MIN(( backup_size / ( DATEDIFF(ss, bset.backup_start_date,

bset.backup_finish_date) )

/ 1048576 )) AS INT) AS throughput_MB_sec_min ,

CAST(MAX(( backup_size / ( DATEDIFF(ss, bset.backup_start_date,

bset.backup_finish_date) )

/ 1048576 )) AS INT) AS throughput_MB_sec_max

FROM msdb.dbo.backupset bset

WHERE bset.type = ‘D’ /* full backups only */

AND bset.backup_size > 0 /* 5GB or larger */

AND DATEDIFF(ss, bset.backup_start_date, bset.backup_finish_date) > 0 /* backups lasting over a second */

GROUP BY YEAR(backup_finish_date) ,

MONTH(backup_finish_date),–,backup_finish_date,backup_start_date

DAY(backup_finish_date)

ORDER BY @@SERVERNAME ,

YEAR(backup_finish_date) DESC ,

MONTH(backup_finish_date) DESC,

DAY(backup_finish_date) DESC

While the following query returned exactly same duration as SQL server logged.

What do you think?

For script troubleshooting, head on over to http://dba.stackexchange.com.

Appologies !! Here is the code..

select CONVERT(Varchar(10),backup_start_date,101), DATEDIFF( mi, min(backup_start_date), max(backup_finish_date))

From msdb.dbo.backupset

where media_set_id in (

select media_set_id

from msdb.dbo.backupmediafamily

where physical_device_name like ‘%_Full%’ )

and backup_start_date >= ’06/20/2016′

and DATEPART(hh,backup_start_date) > 18

group by CONVERT(Varchar(10),backup_start_date,101)

order by cast(CONVERT(Varchar(10),backup_start_date,101) as smalldatetime)

Thanks for this article.

Looking at the throughput results I get values of sth. between 20 Gigabytes/sec and 80Gigabytes / sec in average from different SQL Servers. Our Network admin controlling and managing the backups so far has told me that the max. possible backup throughput we could have is 1Gigabit/s, so 0,12 Gigabytes / sec.

Now I am confused about those figures I see… any idea where in this conversation we missed each other?

Jeff – unfortunately we can’t really troubleshoot this in a blog post comment.

Late reply here but I just love this:

SELECT d.name, MAX(b.backup_finish_date) AS last_backup_finish_date

FROM master.sys.databases d WITH (NOLOCK)

LEFT OUTER JOIN msdb.dbo.backupset b WITH (NOLOCK) ON d.name = b.database_name AND b.type = ‘D’

WHERE d.name ‘tempdb’

GROUP BY d.name

ORDER BY 2

Very elegant. Thanks!!!

Anyone know if there is an equally super way to join on msdb.dbo.backupmediafamily and return the physical_device_name for those databases that do have recent backups? Result set should still return the ones without backups. All my attempts thus far stink. Saturdays should be spent doing less nerdy stuff 🙂

Mark – for questions, your best bet is to head over to http://dba.stackexchange.com.