At least once a month, a client has The Postgres Conversation™ with me.

It happens at the end of a consulting engagement, after we’ve talked about the work that the team needs to do in order to meet their performance objectives.

A manager who’s been quiet the whole meeting suddenly pipes up and quietly says, “I know you don’t really want to hear me ask this, but … what’s the point where we start thinking about different database back ends?”

They expect me to valiantly defend Microsoft SQL Server, talk about how powerful it is, discuss how it makes your app perform fantastically. However, they’re taken completely off guard when I say,

“It shouldn’t be used for most new applications you build today. If you have an existing app built on it, you’re kinda stuck, but if you’re building a new application, you should use Postgres instead.”

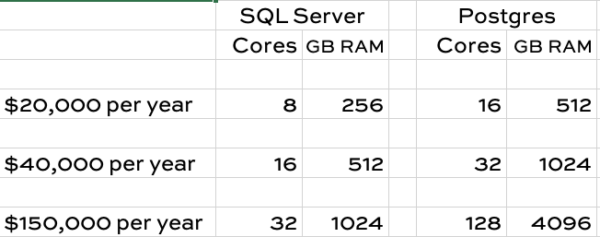

On a recent call, the client’s DBA disagreed with me, saying that Microsoft SQL Server’s query optimizer is better, so it makes queries run faster. Who cares? For the price of the licensing, you can buy a dramatically larger Postgres server:

(Details about those numbers: I’m using x2iedn reserved instances at EC2, with licensing included. See the Vantage screenshot of a more detailed pricing comparison, and you’re free to make your own at EC2instances.info or whatever Azure pricing calculator you wanna use. If you use an Azure pricing tool from Microsoft, though, make sure it includes the cost of the licensing – a lot of their tools do a magical “licensing assurance” thing that implies you’re bringing some imaginary free licensing from somewhere.)

If you go with Postgres, you get literally twice the hardware for the same money – and by the time you hit SQL Server Enterprise Edition tiers of hardware, four times as much hardware! Why on earth would you pick SQL Server given that comparison?

Or to put it another way, these two things cost the same:

- Just one 32-core SQL Server running Enterprise Edition

- A 4-replica fleet of 32-core servers running Postgres
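As a rough check on the first bullet, here is the licensing arithmetic for a single 32-core Enterprise Edition box, using the roughly $7,000-per-core Enterprise price mentioned later in this post. It's a minimal sketch that assumes a flat per-core price and ignores hardware, Windows, and Software Assurance; `license_cost` is just an illustrative helper.

```python
# Approximate Enterprise Edition list price per core, as quoted in this post (USD).
ENTERPRISE_PER_CORE = 7_000


def license_cost(cores: int, price_per_core: int) -> int:
    """Licensing cost for `cores` cores at a flat per-core price (no minimums, no discounts)."""
    return cores * price_per_core


one_enterprise_box = license_cost(32, ENTERPRISE_PER_CORE)
print(f"One 32-core Enterprise box: ${one_enterprise_box:,} in licensing alone")
# prints: One 32-core Enterprise box: $224,000 in licensing alone
```

That $224,000 buys no hardware at all, which is the money the Postgres fleet in the second bullet gets to spend on servers instead.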

Microsoft’s problem is that good hardware got too cheap.

We’re living in a world where $6,000 buys a Lenovo laptop with 24 cores and 128GB RAM – but to license that laptop with SQL Server Standard Edition would cost about $50,000. Don’t even get me started on the fact that when the next bigger laptop comes out, you would have to step up to SQL Server Enterprise Edition – just to license that laptop! During the lifespan of SQL Server 2022 and 2019, we are absolutely going to see laptops so fast that they would require Enterprise Edition.


Server pricing isn’t much higher: you can configure a low-end Dell PowerEdge R6515 with a fast 24-core processor and 128GB RAM for less than $7,000.
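To put the laptop and PowerEdge numbers side by side, the sketch below compares list-price licensing for a 24-core box against a $7,000 hardware cost, using the $2,000 (Standard) and $7,000 (Enterprise) per-core prices quoted later in the post. Because the Dell quote is "less than $7,000," the ratios it prints are, if anything, understated. It assumes flat per-core pricing and leaves out Windows licensing; the helper name is illustrative.

```python
HARDWARE_COST = 7_000  # upper bound for the 24-core PowerEdge R6515 config above (USD)
CORES = 24
PER_CORE_PRICE = {"Standard": 2_000, "Enterprise": 7_000}  # approximate list prices


def license_to_hardware_ratio(cores: int, per_core: int, hardware_cost: int) -> float:
    """How many times the hardware cost you spend on SQL Server core licenses."""
    return cores * per_core / hardware_cost


ratios = {}
for edition, per_core in PER_CORE_PRICE.items():
    ratios[edition] = license_to_hardware_ratio(CORES, per_core, HARDWARE_COST)
    print(f"{edition}: ${CORES * per_core:,} in licenses, {ratios[edition]:.1f}x the hardware")
# Standard: $48,000 in licenses, 6.9x the hardware
# Enterprise: $168,000 in licenses, 24.0x the hardware
```

The Standard figure is where the "about $50,000" for the 24-core laptop comes from.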

SQL Server’s licensing is desperately out of touch given today’s hardware. The Microsoft tax doesn’t offset the ability to use two to four times more hardware with Postgres for the same price as licensing a box with SQL Server.

Don’t give me the “total cost of ownership” spiel, either, unless you’re willing to show me how Microsoft SQL Server DBAs somehow cost less money than Postgres DBAs.

So, is SQL Server going to get cheaper?

Honestly? I doubt it, and if I were in Microsoft’s shoes, I wouldn’t discount the boxed product either. Existing applications are trapped in their SQL Servers, and their owners are used to paying $2,000 Std & $7,000 Enterprise per CPU core. They don’t realize how expensive that’s become today.

Even customers who do understand it look at the cost of migrating their application from SQL Server over to Postgres, and most of them quickly decide to kick the can down the road a while longer. “We’re going to rewrite the app from scratch soon,” they say, “and when we do, we’ll take a fresh look at our database choices then.”

That’s why Microsoft offers Azure Database for PostgreSQL, and why they bought Citus Data a few years ago. Microsoft saw this coming too, and they’re hedging their bets to make sure whatever database you wanna use – expensive or not – they’re going to offer it on Azure.

And that’s a good thing for all of us. More competition and more offerings are good.

61 Comments

Hi Brent,

Why is the discussion limited to PostgreSQL when there are others as well?

Thanks,

KD

Because I only write so much per blog post. 😀

Perhaps one reason that pgres ranks higher than mysql/maria for greenfield work is that pgres seems “independent” from the majors. This has always been a problem and a major reason why mysql is shunned for maria. pgres has always had a more permissive OSS license.

Yup, we recently bought a new server for a project: about $45k for the hardware, as we get good discounts, and $224k for SQL Server Enterprise. And somehow SQL Server is still cheaper than Oracle.

That said, even with the crap in the first few SQL 2022 CUs, I still feel SQL Server is more reliable than PostgreSQL. Hanging out in the PostgreSQL Slack, I’ve seen some very interesting bugs that cause data loss, etc.

I am not a PostgreSQL DBA, so I am curious what the true cost of running Postgres is. I doubt it’s free, that’s for sure. Who do you call when you hit a bug in PostgreSQL and you can’t open a Sev 1 with the vendor?

EDB provides 24/7 support for Postgres with an SLA. Of course, it’s not free.

Thank you Brent. Yet another insightful blog.

My pleasure! Glad you liked it.

How many times have you seen clients paying for Enterprise license on secondary FCI nodes? Worst case I’ve seen was about $200k/year of unnecessary spend.

That’s a great question. Because I’m a performance tuner, I don’t usually ask licensing compliance questions. The way I phrase it is, “Based on how these servers are set up, your current licensing exposure is X cores. I’m not the licensing police, so I’ll ask it this way: would you like help reducing that licensing exposure?”

The answers are usually one of two extremes. It’s either, “No, we’re fully licensed,” or “Yes, we want to reduce that exposure.” I don’t ask questions about either one. 🙂

I find it’s easier to justify my fee with concrete cost savings over just performance improvements, but I don’t have your reputation. Best case is when I can prove both, which is normally what happens.

Also, love it when you quote yourself. Makes it seem so much more credible.

Really enjoy all your content and am thankful you’re willing to share the way you do!

Hahaha, thanks! I like using pull quotes as a style – it helps emphasize parts of the post for readers who scan.

It’s all about Scans

One problem is that Microsoft does not have a meaningful mix of editions. Standard Edition is worthless with index operations restricted to DOP 1. And isn’t DW/BI of limited value with memory capped at 128GB? Although I believe that could be made to work with powerful storage, not the pathetic Azure storage options.

There should probably be two Standard editions, one limited to 8 P-cores and another to 24, so true Enterprise should start at 32. AGs in Standard would remain limited to 2 nodes, and the memory limit should be adjusted periodically. Consideration should be given to Intel E-cores and AMD c-cores, perhaps counting them 2:1.

The lower Standard should be fully price-competitive with Postgres.

The true intent of Enterprise Edition, however, is not how big it is, but rather problem resolution. Someone who purchased EE plus support should bypass the level 0/1 call center and go straight to someone who actually has good experience in SQL, with an option to immediately get expert-level attention (very expensive, of course).
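The core-weighting idea in the comment above can be made concrete. This is a purely hypothetical sketch: Microsoft doesn't license cores this way, and the 2:1 weighting for efficiency cores plus the 8- and 24-core Standard tiers are the commenter's proposal, not real SKUs. The helper names are illustrative, and anything above 24 weighted cores is treated as Enterprise here, since the comment doesn't say what happens between 25 and 31 cores.

```python
import math


def weighted_core_count(p_cores: int, efficiency_cores: int, ratio: int = 2) -> int:
    """Hypothetical: count every `ratio` efficiency cores as one licensed core, rounding up."""
    return p_cores + math.ceil(efficiency_cores / ratio)


def proposed_edition(licensed_cores: int) -> str:
    """Hypothetical edition tiers from the comment (not real Microsoft SKUs)."""
    if licensed_cores <= 8:
        return "Standard (up to 8 cores)"
    if licensed_cores <= 24:
        return "Standard (up to 24 cores)"
    return "Enterprise"


# Example: a hypothetical CPU with 8 performance cores and 16 efficiency cores.
cores = weighted_core_count(8, 16)
print(cores, proposed_edition(cores))  # 16 Standard (up to 24 cores)
```

Under that scheme, a hybrid 24-core chip would license as 16 cores and stay in the upper Standard tier.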

To some extent, that’s what they did with Azure SQL DB (and Hyperscale and Managed Instances.) They scale costs based on the number of CPU cores – there’s no separate Standard or Enterprise.

Now, granted, there are plenty of other performance limitations with those platforms, heh – the log rate comes to mind – and the pricing certainly isn’t easy for small business users to understand. I’m only saying they got rid of the Std vs Ent query performance discussion. It’s still a hot mess, though.

Oh, and I couldn’t possibly agree more with this statement:

That should be unrelated to Microsoft Premier Support, which is just too big of a pill for a lot of companies to swallow.

Would you see MS SQL Server in a better position if it had a good free tier (Express Edition with 4 CPUs, 64GB of RAM, and Agent, let’s say), then higher limits for Standard, leaving EE for the top end?

For a small, license-free server, I would prefer MS SQL to Postgres because of the quality of its tooling and documentation. For small AGs, I still prefer SQL because it is easier to set up and manage. For bigger stuff, my experience is that support (our internal operations team cost) is cheaper with SQL EE, but that’s statistics with a sample size of 1, so it’s not conclusive.

Web Edition could be your choice.

It’s not that expensive.

Can you prove your assertion that mssql has better quality of tooling and documentation? Others may beg to differ.

Not speaking for Brent here, but please remember the premise of his post is that “pgres is better for new dev work.” I think he’s right. Rarely on ANY new dev work would you need an enterprise-class RDBMS. Why? Because new ideas and architectures from the past, let’s say, 10 years are causing folks to rethink just how much data truly needs to be in a relational format. CQRS is one example, NoSQL is another. Both of these paradigms stress that certain kinds of data should NOT be in a relational database. Any dev team building something new would be well-served to understand these paradigms and evaluate them properly. I’m not saying rip out your SQL Server and use Mongo, but rather understand where the tech might not make sense. And things like CQRS might just solve the need for AGs, FCIs, and other HA-style solutions.

Last thing is: a large portion of Brent’s premise of “new dev work should go to pgres and not ms sql” assumes that DBAs and Ent Arch teams are actually making technology decisions at most companies. Are they? I dunno. What I see is a shift in the past, say, 20ish years, to things like the “full stack developer”, the MEAN stack, LAMP, etc where decisions are made based on “solutions” and not components. In many of these orgs, for various reasons, a business problem is presented to a team and they decide how to solve it and with what tech components. I’m not saying that is RIGHT or WRONG, it certainly sucks when a dev team decides to use Mongo and no one in the org understands how to do HADR on mongo, back it up, perf tune it, license it, etc….I’m merely saying that by the time those topics come up in the conversation it’s already pretty far down the implementation path, and the project sponsor at that point is more concerned about delivery than unimportant things like HADR (just bein honest).

Further, with the move to OpEx models instead of CapEx, it’s always cheaper/better to pay less now and worry about scale later, IF it even becomes a problem and IF the solution gains market share. Cloud PaaS offerings further mean that statements like, “but we don’t have any DBA talent that knows pgres,” are becoming irrelevant anyway.

> large portion of Brent’s premise of “new dev work should go to pgres and not ms sql” assumes that DBAs and Ent Arch teams are actually making technology decisions at most companies. Are they? I dunno.

I agree with you! They’re not, and that’s part of the point of the post. If you leave it up to developers, they’ll typically lean towards hosted, managed solutions that avoid dealing with DBA teams altogether.

DBAs…read carefully Brent’s last sentence. Then read it again in a few days.

If you wanna know why Mongo took off like it did, he just gave you the reason.

I once built a solution on Mongo and we wanted to purchase support from them. They came in and gave us a canned prezi for those on the team that were not familiar with Mongo. This was maybe 2012ish.

I’m not kidding. The 3rd slide was “why do companies use Mongo.” The bullet points were:

* no backups needed

* no HA configuration needed, it just works

* no indexing needed

* no DBA needed.

Frankly, I can distill those 4 bullets down to just the LAST BULLET.

Now, that’s complete bullsh1t; we all know it. But the psychology of putting that on a slide… I’ll bet they sold a large percentage of their services based SOLELY on that “No DBA” messaging.

DBAs gotta stop being the Department of No.

(I’m sure that comment will ruffle some feathers).

Even things like pgres and mysql are built to run _pretty good_ in a container where the data gets backed up using whatever is already backing up your k8s stuff. ie, no DBA needed.

> Even things like pgres and mysql are built to run _pretty good_ in a container where the data gets backed up using whatever is already backing up your k8s stuff. ie, no DBA needed.

Especially when most businesses just don’t take backups and high availability as seriously as the DBAs claim to.

“it certainly sucks when a dev team decides to use Mongo and no one in the org understands how to do HADR on mongo, back it up, perf tune it, license it, etc” It happened to us, and now we have to support it. The team chose the wrong technology, and we are now in the midst of getting rid of MongoDB and putting that functionality back on SQL Server.

It would be interesting to know WHY the dev team chose Mongo in the first place. Is it because they didn’t want to talk to the DBAs?

I’m convinced so many HORRENDOUS decisions that we make in the IT world are due to folks not wanting to talk to the DBAs (or the architect team, or any team that has a default answer of NO).

Do you have JSON/XML cols in your database? Why? I’ll bet it’s because some developer wasn’t exactly sure what the schema for a new table needed to be so they created those so that they wouldn’t have to kiss a DBA’s butt for schema changes later.

Or, this one is my favorite, “We use Service Broker”. Why, in any sane world would you use this abomination? The answer is almost always “we wanted to use JMS but the architecture team said NO so we used SB” or “it was easier enabling that in the db vs getting another ‘thing’ added to our visio” or the ABSOLUTE BEST ONE … “we really just needed to run a stored proc asynchronously and we didn’t know how to do it and didn’t want to bother our DBA” . hahaha.

I have found that devs who want to use Mongo usually just don’t understand what a database does or is for. Plus, there’s a syndrome I call ‘developers gone wild,’ where developers without an enterprise architecture plan for a project just start using whatever random solution they find for a particular problem, and then may never use that solution again, or may start randomly using it for other scenarios with similar requirements even if they don’t quite match.

To me, if the accuracy and integrity of your data is critical, that disqualifies any nosql option.

I have been in environments where the DBAs always said no – but most of them were as a result of bad developer decisions in the past and it doesn’t matter if the DBA is involved in the conversation, those same problems will invariably emerge on another platform, but it will be under the responsibility of someone without the knowledge to resolve it. This is an organizational problem, not a technical problem.

My experience is that many developers think that since they know how to write a SELECT statement, that they’re capable of being DBAs. Not 100% across the board, but I’ve been burned enough now that I am wary of any front end dev who claims to also be an expert in databases. They’re almost always the “know enough to be dangerous” type. Frequently wanting SA or elevated rights so they can do everything themselves without scrutiny. SQL is just “one of the many languages they are experts in”, etc. This contributes to a company culture with the attitude that databases are an afterthought.

I think some of it is developers wanting to use The Next New Thing ™ so they can put it on their resume. Take a look at the explosion of frameworks for building a web site, and some developers (especially contractors) think that their job depends on having worked with as many as possible. Why would they limit themselves to SQL Server if they think getting their next gig might be easier if they choose some other data storage technology for the current project, even if SQL Server is the best fit?

My opinion, as a dev who occasionally has to put on a DBA hat, is that MSSQL with Query Analyzer is much more user-friendly to someone not familiar with the DB than anything on Postgres. MSSQL’s ability to run procedural code ad hoc without resorting to a DO block is a huge advantage when exploring a schema or debugging. I can easily take the guts of an MSSQL stored proc, paste them into Query Analyzer, declare the params as constants, and view the intermediate results by sprinkling in some SELECT statements. In Postgres, I have to wrap everything in a DO $$ block and resort to stashing data in temp tables or the like. Not impossible, but much more friction.

Err, Query Analyzer hasn’t been a thing in 20 years? What query tool are you talking about?

It could be worse; he could have been talking about Enterprise Damager.

Tooling and documentation: SSMS vs pgAdmin; Books Online (learn.microsoft.com); the SQL First Responder Kit; Brent’s courses; SentryOne/SolarWinds Plan Explorer. These are the first examples that come to mind. If I include Availability Groups (not a tool, but a big help), MS SQL is not that bad anymore for my company’s use case.

An additional challenge is competing clouds. If license costs do not come down, at some point there will be no customers there. It’s better to collect half the money than none.

Hi Brent, does the situation improve a bit for on-prem deployments? Yes, I agree there is a one-off licensing cost you have to pay for the SQL Server license (and most likely for a Windows OS license), but after that, theoretically, you should not have extra expenses compared to Postgres (if you opt out of paying for Microsoft support or Software Assurance)?

If you opt out from paying software assurance (and companies certainly have), then you have a limited amount of time before the database is no longer under support. Most companies – especially public ones, and ones who have security teams – won’t run commercial software that is no longer supported by the manufacturer.

“Microsoft’s problem is that good hardware got too cheap.”

Well said and I’ve never seen anyone state it so succinctly. Remember that b4 SQL2008R2 (I think that was it, but it mighta be 2012….who cares) the goal of the “rack and stack” crowd was to buy as much hardware as they could afford. Meaning buy beefy hardware with as many cores as you could afford from Dell. That’s because licensing was socket-based. When SQL (and Windows) licensing moved to core-based, now suddenly that entire purchasing culture went out the window. Now the point was to buy the least amount of hardware you could reasonably get by with for the next 18-36 months because the licensing became the biggest expense.

The real problem was that the “buy as much hardware as you could afford” culture took a few years to change. Meanwhile, licensing didn’t change, and there were a ton of companies that were (probably still are) running SQL 2008 and would NOT upgrade the DBMS. Internally within MSFT (yeah, I’m a softie), it took YEARS b4 anyone realized that the REAL REASON companies weren’t upgrading from SQL 2008 to 2016, 2019, etc. was that the licensing bill would go up about 40%. Yikes.

Companies have long memories and they realize that the last thing they want to do is get even more in bed with big DBMS vendors like Oracle. So they ATTEMPT to migrate to pgres and that’s likely never gonna work. BUT, folks are learning, and they don’t want to continue to be in the “licensed software” game if they don’t have to be. Certainly you might be able to make a TCO argument for mssql….but nobody will listen, so don’t.

I commend Brent for writing this article. Too many times I see DBAs and data folks in general that don’t understand the economic reasons for why the industry is moving in ways they may not understand.

There are a ton of “economic” undertones to this article: why pgres and not mysql…I answered that one above, hardware is cheaper than licensing, capex/opex, OSS licenses are not all created equal, and OSS license type likely doesn’t even matter when dealing with PaaS (mongo and redis are learning this)…are all examples. Even decisions like “do we even need our EDW anymore” are being driven by the economics of the cloud far more than for any tech reason. This last point is eye-opening when finally understood by data practitioners.

Thanks Dave!

I agree totally: Microsoft did the right thing by foreseeing the hardware changes, and then switching from socket-based licensing to core-based licensing.

Now, we’re way past the time for the next shift.

I was starting to think on similar lines – until I started to appreciate just how primitive PostgreSQL is. Recent new killer SQL Server features have primarily been either boondoggles or extremely niche that virtually no one will ever use, but subscribers still have to pay for. Of the new features that are actually broadly useful, most of them are so unstable that you are taking your life in your own hands. Then there are events like CU15 in SQL 2019 that broke restores and by extension, log shipping. On top of all of that, the documentation quality keeps declining, though it seems they may have started doing better in that recently?

Don’t get me wrong, I feel that Microsoft is failing hard on delivering value to justify the cost of SQL server. The standard edition RAM limits are completely ridiculous. If nothing else, the Enterprise edition price increases were indefensible considering how little has been added to EE.

But in Postgres, logical fragmentation is a problem that requires regular maintenance, and vacuum is disruptive. The best HA it has to offer is not quite on par with database mirroring, which few use in SQL Server anymore because availability groups are just better and require less support, and SQL Server has simpler HA options too.

Yes, a lot of hardware can be thrown at an application for the cost of the Enterprise Edition licenses, but hardware is distant second fiddle in cost compared to the salaries of your DBAs which you will need more of, than you would to manage SQL server. Postgres also lacks several commonly critical encryption features that require more staff overhead to implement. I am also pretty skeptical of how much hardware can really be thrown at an OLTP system and realize gains in performance to make up for inefficient query execution. More cores may not improve locking and could make it worse.

OLAP is of course a different scenario and to me it likely only makes sense to use Postgres at this stage – if you have the staff to manage it that would be managing a DW anyways.

I agree. Adding hardware is often ineffective as you pointed out, and expensive when it does. In the cloud, adding compute to make up for PG’s lower performance can easily offset SQL Server licensing costs, due to the very high cost of cloud compute. Running SQL on Linux in combination with selective use of InMemory (a significant capability that PG lacks) can make SQL Server cheaper to run than PG, on purely compute+license costs. I do agree with Brent’s comments generally though – MS are on the wrong track with SQL licensing, which is heavily geared toward Enterprise & doesn’t support mid market very well, mainly due to Std Edn’s severe constraints which haven’t changed significantly for many years.

>Don’t get me wrong, I feel that Microsoft is failing hard on delivering value to justify the cost of SQL server. The standard edition RAM limits are completely ridiculous.

Or, this is part of the grand scheme to get everything into the cloud into a recurring revenue model. EVERY software vendor is moving to the subscription-based model. Why? Economics!

>but hardware is distant second fiddle in cost compared to the salaries of your DBAs which you will need more of, than you would to manage SQL server.

Are you sure? The push in the industry is clearly toward PaaS where the cloud vendor is managing more of what a traditional DBA would do. Many of us, myself included, call BUNK to that…but that’s the perception. Indisputably, the role of the DBA is changing (but will probably always be needed).

>OLAP is of course a different scenario and to me it likely only makes sense to use Postgres at this stage – if you have the staff to manage it that would be managing a DW anyways.

I disagree. Again, look at the push in the industry lately. The move is AWAY from DWs and more toward data lakes/lakehouses. Even Bill Inmon has a free ebook courtesy of databricks where he recants a lot of what he proposed for DECADES for the EDW/CIF.

So why are we pushing AWAY from DWs? For the same reason cubing technologies are dying … the hardware/tech is so good that we can overcome performance problems through other means. A core premise of the cloud is separation of storage and compute. Why? Economics. It’s cheaper to have a ton of data (possibly even copies of the same data) lying around in storage that I can express a compute engine over, at query time, to handle the nature of the request. Data scientists don’t like DWs; they’d rather use something else. Even the new MS Fabric is getting rid of the notion of “Dedicated SQL Pools,” which was the tech meant for star-schema-style solutions.

>But in Postgres, logical fragmentation is a problem that requires regular maintenance, and vacuum is disruptive. The best HA it has to offer is not quite on par with database mirroring, which few use in SQL Server anymore because availability groups are just better and require less support, and SQL Server has simpler HA options too.

But you assume that any of this matters. Again, if you are designing a GREENFIELD app properly with 12Factor patterns, CQRS, etc, then possibly fragmentation and mirroring wouldn’t be needed.

Cloud or on-prem, the software costs are still there. MS offers other purchasing models in the cloud, but the license costs are still essentially the same problem no matter where it is hosted.

Cloud hardware and on-prem hardware are fairly comparable in pricing scale. In a direct comparison, on-prem hardware will probably be less expensive, but not by the orders of magnitude that separate developer and DBA costs from hardware costs.

The move away from traditional OLAP data warehouses, is not a supporting consideration for implementation of Postgres or SQL Server. Where OLAP data warehouses are still used, Postgres is still the most cost competitive where its negative OLTP attributes are not a problem.

It doesn’t matter the application design – fragmentation and managing vacuum is a problem in Postgres SOMEWHERE. You may not suffer the same degree of blocking, or degraded performance, but it will need to be managed. I am uncertain of what the reference to mirroring has to do with application design, at least as an availability function.

Hi Brent, thank you for that post! To be honest, I am a little bit confused after it, but maybe I am missing something.

My understanding is that you are predicting that SQL Server will be slowly losing its market share in favor of Postgres (and your explanations seem reasonable). At the same time, I believe that in many office hours, you advised (career-wise) to “stand next to the most expensive thing in the room so your salary looks cheap”.

So, what are you saying? Does betting a career on SQL Server make less and less sense (because it will be pushed out by Postgres)? And betting a career on Postgres will not make sense because it is cheap?

Radek – imagine there is a parking lot with 3 cars in it: a Ferrari, a Toyota, and a Lada. They all need mechanics.

The Ferrari catches fire and burns to the ground.

Now, what’s the most expensive car in the parking lot? And will it need mechanics?

If you can solve that mystery, you can answer your question. 😉

Thanks

Yeah, when you put it this way… it was not a very smart question…

It’s a shame there is no cap on the vCPU licensing. There are now some amazing metal instance types available, e.g. AWS r7i.metal instances with 4th Gen Intel Xeon (Sapphire Rapids), that would be great for SQL Server (QAT accelerators!!), but the vCPU counts (64|98|192) are prohibitive for licensing. I find it sad when I see instances running with constrained CPUs, with CPUs actually turned off in order to save on licensing.

Alan – yeah, a core count cost cap would be cool, but I’d be surprised if Microsoft ever did it because it would also need to subtly affect their Azure SQL DB pricing too. Right now, that pricing goes up in lockstep with core count, all the way up to the max. If SQL Server’s costs capped at a certain number of cores, then SQL Server would be more cost-effective to run, and I don’t think Microsoft wants that.

The biggest cost for developers depends on the tooling available to solve problems. For example, SQL Profiler is pretty good at finding where performance problems are. I don’t know the tooling for Postgres, but I suspect it isn’t on par. So SQL Server is chosen for the documentation, the tooling, and ease of use. It goes one of two ways. Either you decrease your product development and maintenance costs and extend your product lifecycles, and your customer pays more for the server and less for your product. Or you choose the cheapest DB tech, which the customer won’t have to pay for, but you increase dev and maintenance costs and pass them on to the customer through higher pricing for your product. Moreover, the database platform may weigh more heavily in your product’s dev and maintenance costs than one suspects. As for server size, we need to remember that queries that optimize poorly (which may have something to do with how sophisticated the DB engine is) can’t necessarily be solved by hardware. So bigger servers aren’t necessarily the solution, and the cheapest tech that lets you afford them won’t necessarily help.

I’m going to be brutally honest and to the point here: if you’re relying on Profiler to solve performance problems in the year 2023, your skills are not up to par. You need to attend my fundamentals classes as quickly as possible for the sake of your career.

Spot on Brent!

if you’re building a new application, you should use Postgres. !!!! YHEH

What’s interesting about the viewer comments is they’re really about small shops and a few hundred thousand dollars of licensing.

But back to Brent’s original observation about who’s really paying for this stuff: for Enterprise customers, things work very differently!

Once you’re at hundreds/thousands of cores you start to get sweetheart SQL deals for a few million dollars, maybe you won’t even know how much because it’s budgeted into a huge Microsoft OS and Office contract.

There, the terms change back to, “Why would we use something free when we paid millions for the real thing?” Funny isn’t it.

Thanks for the post Brent!

Is it then fair to say that you’ve seen Postgres to be as (or more) performant than SQL Server thanks to double or quadruple the hardware?

Apologies if this was answered in the MANY comments this has received so far… and yeah I know it’s a loaded question 🙂

Kevin, is that a serious question?

Are you really asking if different queries on different database platforms with different schema options and different hardware, unequivocally perform better on one platform than another?

Because that reeks of amateur trolling.

I’m asking anecdotally if you’ve found performance to be similar, in your experience. You’re comparing the two platforms and making the point about how much more hardware you can afford with the latter. So the end question is just a simple ask about your experiences with it. No trolling intended.

I totally get that if you have a large database, moving off SQL Server is likely not going to happen, but what if we had several small databases? Also, what if you throw in WiltonDB (https://wiltondb.com), which claims to convert your SQL Server queries to Postgres?

Thanks for insightful post Brent !

At some point, the article reminded me of the tale of migrating from Oracle to SQL Server a few years back: Oracle is too expensive, SQL Server is cheaper than Oracle, etc. Back when MS released SQL 2016, Scott Guthrie presented the cost difference and how Microsoft would support migrations and offer discounted prices.

The irony for me here is that Azure Postgres is Microsoft’s recommendation nowadays for migrating from Oracle to Azure databases (https://techcommunity.microsoft.com/t5/azure-database-for-postgresql/new-oracle-to-postgres-migration-guide-for-azure/ba-p/2055303) (https://learn.microsoft.com/en-us/azure/postgresql/migrate/how-to-migrate-from-oracle). Better SQL language overlap.

Another interesting aspect of this is that CPUs with high core density have slower clock speeds than ones with fewer cores (because of heat). And SQL Server licensing is based on cores, not speed.

More cores mean you can run more parallel tasks, which should increase overall performance. But we all know how tricky parallel processing is in SQL.

In a way, SQL licensing is getting more expensive because it runs instructions on slower hardware than it did a few years ago.

Great post, and I agree. Licensing is a bit out of whack with hardware, especially for Standard. I see a lot of customers using postgreSQL or mySQL for some things, with cost being a factor.

I like SQL Server better, and I think the tooling overall is better, and many features beyond CRUD stuff are better, but are they better enough? That’s the main question. I think for most apps I see, basic tables, procs, views, inserts, updates, deletes, indexes, PKs/FKs, roles, are enough to power them. There are subtle differences between T-SQL v pgsql v others, but really if you write good code in T-SQL, you’ll learn another dialect.

I do think admin efforts/knowledge has to be gained, which is labor cost. I also think unfamiliarity with dev work against other platforms is a bigger burden than I expect, but it’s also worth an investment in the long term. If you can plan for the long term. Something lots of orgs struggle with.


It’s $3,945 per core for a *Standard* license, which makes a single 1-socket, 24-core server cost you about $100k in licensing alone, excluding the licenses you would have to pay for Windows Server.

We are living in an age where we have 128-core CPUs and many companies are able to afford the hardware, yet nobody can afford Microsoft’s tax.

I believe you’re referring to the price of a 2-core pack. Standard is $2k per core, Enterprise is $7k, but they’re sold in 2-core packs.
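The difference between the two comments comes down to pack math. Here is a minimal sketch, assuming the $3,945 figure is the price of a Standard Edition 2-core pack and that Microsoft's core-based licensing requires at least four core licenses per processor (worth checking against the current licensing guide); `packs_needed` is an illustrative helper, not a Microsoft tool.

```python
import math

STANDARD_2_CORE_PACK = 3_945  # USD per 2-core pack, as quoted in the comments
CORES_PER_PACK = 2
MIN_CORES_PER_PROCESSOR = 4  # assumed per-processor minimum for core-based licensing


def packs_needed(cores: int) -> int:
    """2-core packs needed to license one processor with `cores` physical cores."""
    licensed_cores = max(cores, MIN_CORES_PER_PROCESSOR)
    return math.ceil(licensed_cores / CORES_PER_PACK)


cores = 24
packs = packs_needed(cores)
correct_total = packs * STANDARD_2_CORE_PACK  # 12 packs
misread_total = cores * STANDARD_2_CORE_PACK  # treating the pack price as a per-core price
print(f"{packs} packs: ${correct_total:,} (not ${misread_total:,})")
# 12 packs: $47,340 (not $94,680)
```

That $47,340 lines up with the roughly $2,000 per core, and the "about $50,000" for a 24-core laptop, quoted in the post itself.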